A social impact bond is a type of pay-for-success initiative that shifts the financial risks associated with pursuing public purposes to private investors. Governments throughout the world hope that these instruments can serve as a politically feasible policy tool for tackling difficult social problems. Despite the excitement surrounding them, there is very little empirical scholarship on social impact bonds. This article takes stock of this new phenomenon, noting the many reasons for their widespread appeal while also raising some concerns that researchers should examine and that practitioners would do well to consider before adopting them. We do so by appraising them along three dimensions: accountability, measurement, and cost-effectiveness. Throughout, we draw comparisons to conventional government contracting.

Efforts to increase competition, efficiency, and accountability in government are now the norm (Box, 1999; Brown, Potoski, & Van Slyke, 2006; Dunleavy, Margetts, Bastow, & Tinkler, 2006; Kettl, 1997; United States Government Accountability Office, 2015), and private actors such as for-profit corporations have grown increasingly interested in addressing what have long been considered public concerns (Carroll, 2008; Cooney & Shanks, 2010; Vogel, 2005). Out of this mix of evolving sector roles and public openness to innovative approaches to social problems has emerged a distinct type of cross-sector collaboration—the social impact bond (SIB). While SIBs have some appeal, it is useful at this early stage to evaluate their merits critically. To that end, this article documents recent developments in SIBs and appraises them according to three dimensions—accountability, measurement, and cost-effectiveness. We conclude that while there is much to like about SIBs, it is important to be clear-eyed about where they fail to provide obvious advantages over more traditional strategies for dealing with social problems.

Social impact bonds (also known as “Pay for Success Bonds”) are a unique cross-sector collaboration where private investors provide early funding for initiatives prioritized by governments, often implemented by nonprofit organizations (McKinsey & Company, 2012). SIBs are not bonds in the traditional sense but, rather, work as a type of “pay-for-success” agreement between a government agency, investors, and service providers (Shah & Costa, 2013).1 Although every SIB is unique, service delivery organizations often provide an intervention that the government pays for—with interest—only if the intervention is determined to be successful. The obvious advantage for governments is that the private investors—instead of taxpayers—assume the risks associated with funding potentially ineffective interventions (United States Government Accountability Office, 2015).

Because SIBs shift the financial risks associated with failed policy interventions to the private sector (Baliga, 2013), they are seen as promising solutions across the political spectrum. They can generate popular support for dealing with widespread problems, while politicians have cover if the initiatives fail and constituencies are not left paying for interventions that do not work. Legislators who are hesitant to fund untried initiatives outright might instead be amenable to a SIB as a proof of concept. Then, if the SIB is successful, a legislature would, arguably, be more inclined to allocate taxpayer money to fund (via reimbursement to its private sector partner) the now-tested initiative.

SIBs are noteworthy because they do not depend on the public-spiritedness of private actors. Unlike other cross-sector collaborations that might be contingent on corporate philanthropy or on a corporation's commitment to social responsibility, SIBs work even if they appeal solely to investors’ pecuniary interests. While the prosocial aspect may be an added benefit—and indeed may be a motivating factor for some—the success of the SIB is not contingent on it. In this way, a SIB may work as one of many investment products for clients, similar to socially responsible mutual funds.

SIBs are also seen as promising to the extent that they improve on traditional contracts, which governments rely on regularly (Brudney, Cho, & Wright, 2009; DeHoog & Salamon, 2002; Kelman, 2002), notwithstanding their drawbacks (Savas, 2000; Sclar, 2001). The appeal of contracting is that it allows vendors to compete for the opportunity to provide goods and services on behalf of the government (DeHoog & Salamon, 2002; Van Slyke, 2009), with the expectation that this will ultimately lower the costs of the services provided (Brown et al., 2006). Relying on vendors can also reduce the apparent size of government as agencies contract with other organizations, such as nonprofits or private firms, that have the additional advantage of being closer to the communities being served.

One of the main concerns about contracting, however, is that it complicates government accountability (DeHoog & Salamon, 2002; Savas, 2000). Contracting is especially risky when outcomes are hard to monitor, as in contracting for social services (Brown & Potoski, 2005). Contracted vendors have an opportunity to shirk in their execution of the contract, and government agencies may lack the motivation, resources, or expertise to monitor them closely (Brown et al., 2006; Kelman, 2002). Some observers regard SIBs as able to improve upon the shortcomings of contracts while retaining their benefits, an issue we consider throughout the article.

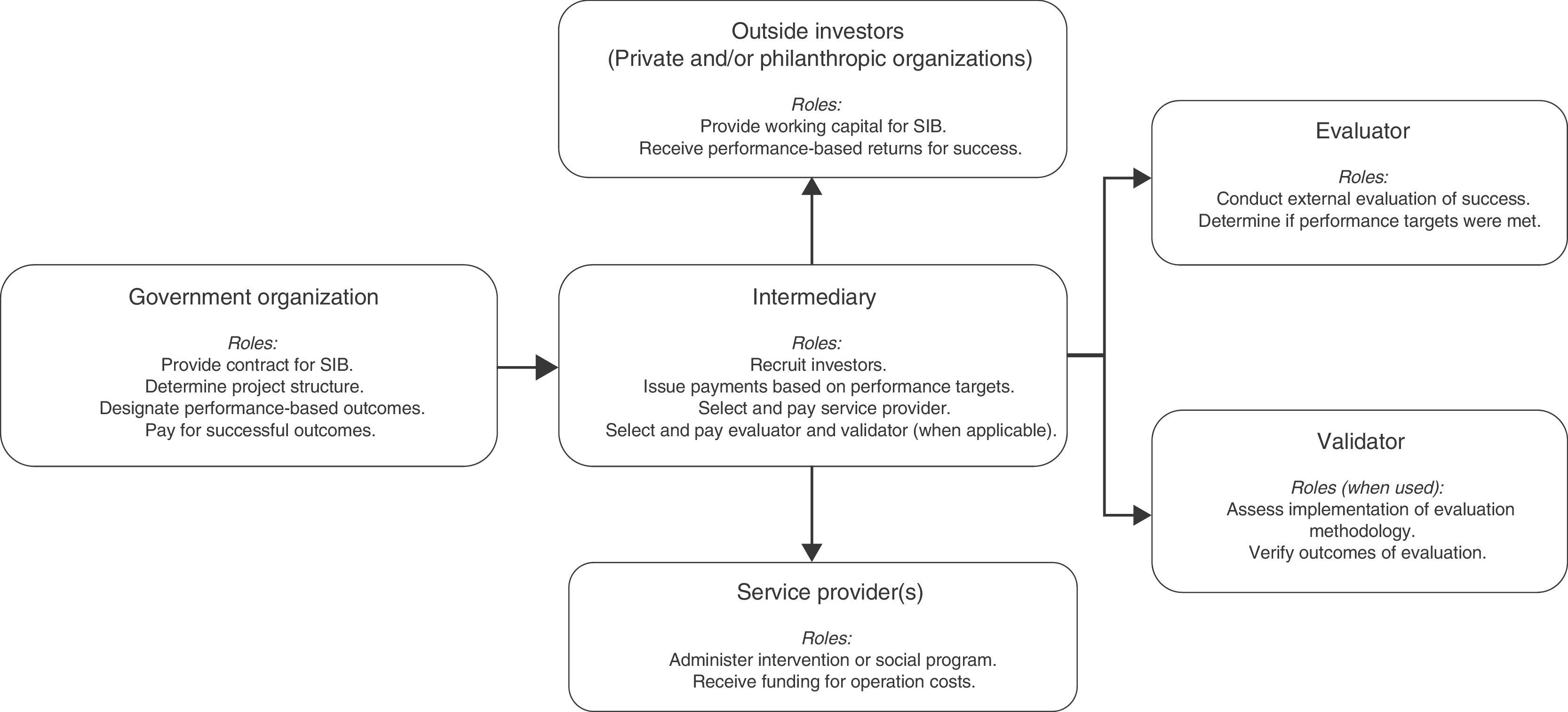

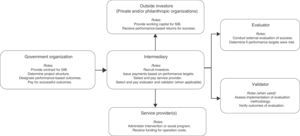

SIBs explained

In the SIB pay-for-success model, a government identifies a target population and a meaningful outcome and then, with the necessary legislative authority, enters into a contract with a private intermediary organization to oversee an effort to produce the outcome (Liebman, 2011; Shah & Costa, 2013; Social Impact Investment Taskforce, 2014). The intermediary organization—the node of the SIB network—may contract with other organizations, such as nonprofit service providers, to implement the intervention. Critical to this SIB arrangement, the intermediary also enters into agreements with private investors whose investments provide the financial support for implementing the intervention and for service delivery. Thus, investors, not the government, pay for the intervention. If the intervention is determined to be successful, the government then makes funds available to the intermediary organization, which then reimburses the investors—with interest. If the intervention is unsuccessful, the government does not repay the intermediary, and investors recover neither their principal nor any interest. Whatever the outcome, the service delivery organizations receive support for their efforts (an improvement on traditional pay-for-success initiatives, which penalized the service provider if the outcomes were not met). See Fig. 1.
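The flow of money just described can be summarized in a few lines of code. The sketch below is purely illustrative: the `SIBTerms` class and `settle_sib` function are hypothetical names of our own, and we assume, for simplicity, that the investors' entire principal goes to service delivery, ignoring intermediary and evaluation fees and any multiyear payment schedules a real SIB may use.

```python
from dataclasses import dataclass


@dataclass
class SIBTerms:
    """Hypothetical terms of one SIB; field names are illustrative, not standard."""
    principal: float    # capital advanced by investors (assumed to fund services in full)
    return_rate: float  # agreed return on principal if the outcome is met, e.g. 0.05


def settle_sib(terms: SIBTerms, outcome_met: bool) -> dict:
    """Net position of each party once the evaluator has ruled on the outcome.

    Positive values are money received; negative values are money paid out.
    """
    # Service providers are funded up front from investor capital, whatever the result.
    providers = terms.principal
    if outcome_met:
        # Government pays the intermediary, which repays investors with interest.
        repayment = terms.principal * (1 + terms.return_rate)
        government = -repayment
        investors = repayment - terms.principal
    else:
        # Government pays nothing; investors absorb the loss of their principal.
        government = 0.0
        investors = -terms.principal
    return {"government": government, "investors": investors, "providers": providers}


terms = SIBTerms(principal=1_000_000.0, return_rate=0.05)
print(settle_sib(terms, outcome_met=True))
print(settle_sib(terms, outcome_met=False))
```

Whatever the value of `outcome_met`, the providers' entry is unchanged, which captures the point that service organizations are funded regardless of results; only the division of risk between the government and the investors depends on the evaluation.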

Organizational Structure of SIBs. Note: Information presented in figures is based on Warner (2013) and the GAO report on Pay For Success (2015).

As is apparent from this short description, SIBs are fundamentally collaborative efforts whose startup costs may be substantial (Warner, 2013). Unlike simpler forms of contracting, where a government agency decides internally what services need to be provided and issues a request for proposals accordingly, SIB creation requires coordinating among a number of different entities. Adding to the startup costs, these entities—the government agency, the intermediary, the evaluator, and so on—must come to consensus on what outcomes should be measured, how they will be measured, how those results will be validated and communicated, what cost savings might be expected if the intervention is successful, and how this relates to the return that investors might expect. In most cases, these arrangements need to be established prior to introducing government legislation or related actions that would formally create the SIB.

To illustrate how SIBs can work in practice, consider two of the first SIB initiatives to have been approved by legislatures, to have proceeded through the initial stages of intervention, and to have reported preliminary data. The first is the Peterborough SIB in the UK, which is generally regarded as the first social impact bond (Baliga, 2013; Social Impact Investment Taskforce, 2014). In 2010, organizations such as charities made funds available to an intermediary organization, Social Finance, to facilitate interventions for prisoners serving short-term sentences at Peterborough Prison (Disley, Rubin, Scraggs, Burrowes, & Culley, 2011). The goal of the intervention, assessed by independent researchers, was to reduce the recidivism rate for offenders (Disley & Rubin, 2014). If the recidivism rate for prisoners who received the intervention was reduced by 10% in comparison to a matched group that did not receive the intervention, then the government would provide a return (between 7.5% and 13%) on the initial investment to the organizations that provided the funding (Disley et al., 2011).

To date, early results based on the Peterborough SIB are mixed. Recidivism rates among the first intervention cohort were 8.4% below the control group, which failed to meet the 10% benchmark and thus to trigger repayments to investors (Jolliffe & Hedderman, 2014). Initially, if the recidivism rates combined across the intervention cohorts were 7.5% below the control group by 2016, then the outcomes would have been considered met (Social Finance, 2014). However, the Peterborough intervention has been folded into a national rehabilitation initiative that does not include private investors, so the true success of this particular SIB may never be known (United States Government Accountability Office, 2015).2
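The Peterborough trigger amounts to a threshold comparison, sketched below. The function names are hypothetical, and we assume the reported figures are relative reductions (the percentage by which the treated group's rate falls below the control group's), which is our reading rather than a detail stated in the sources discussed here.

```python
def relative_reduction(treated_rate: float, control_rate: float) -> float:
    """Percent by which the treated group's rate falls below the control group's.

    Assumes a relative (not percentage-point) reduction; this is our assumption.
    """
    return 100.0 * (control_rate - treated_rate) / control_rate


def triggers_payment(reduction_pct: float, threshold_pct: float) -> bool:
    """True if the observed reduction meets or exceeds the contractual threshold."""
    return reduction_pct >= threshold_pct


COHORT_THRESHOLD = 10.0    # per-cohort trigger described in the text (percent)
COMBINED_THRESHOLD = 7.5   # trigger for all cohorts combined by 2016 (percent)

# The first cohort's reported reduction fell short of the per-cohort trigger.
print(triggers_payment(8.4, COHORT_THRESHOLD))  # False
```

Note that the 7.5% threshold applied to all cohorts combined, so it cannot be checked against the first cohort's 8.4% figure on its own. Because the comparison is made on computed floating-point values, an implementation would likely also want an explicit rounding rule for results that fall very close to a threshold.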

The second example comes from the State of Utah. The bill, officially known as the Utah School Readiness Initiative (“Utah school readiness initiative,” 2014), creates the School Readiness Board, a part of the Governor's Office of Management and Budget, and the School Readiness Account, which provides the necessary funding for the bill's enactment (cf. United States Government Accountability Office, 2015). The bill gives the Board authority to enter into agreements with eligible providers of early education (e.g., school districts, charter schools, private preschools) and private investors in the form of results-based contracts. The intervention targets low-income, preschool-age students who score “at or below two standard deviations below the mean” on an assessment of “age-appropriate cognitive or language skills.” The outcome for a child is considered met if the intervention is determined (by an independent evaluator, funded by the Account) to help the at-risk child avoid later placement in special education.

As with most SIBs, private investors pay for the implementation of the intervention. If a child who was at-risk for later special education placement avoids special education assignment, the state pays the investors the equivalent of 95% of the appropriation that would have been made to deliver special education services to that child. To the extent that the preschool program costs are less than the special education payout, the state effectively returns the investors’ investments with a profit. If it does not produce the intended outcome, then the investors assume the loss.
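The payout rule described above can be written as a short calculation. This is a simplified sketch that assumes only the 95% rule stated here; the function names are our own, the dollar figures in the usage example are hypothetical placeholders rather than the contract's actual amounts, and the real agreement may contain terms (such as payment schedules or caps) that we do not model.

```python
def utah_style_payout(children_avoiding_sped: int,
                      sped_appropriation_per_child: float,
                      share: float = 0.95) -> float:
    """State payment: a share of the special-education appropriation avoided per child."""
    return children_avoiding_sped * share * sped_appropriation_per_child


def investor_net(payout: float, program_cost: float) -> float:
    """Investors profit only to the extent the payout exceeds what they spent."""
    return payout - program_cost


# Hypothetical figures, for illustration only.
payout = utah_style_payout(children_avoiding_sped=100, sped_appropriation_per_child=2_000.0)
print(payout, investor_net(payout, program_cost=150_000.0))
```

If fewer children avoid special education, the same program cost can exceed the payout, in which case `investor_net` turns negative and the investors bear the loss.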

Preliminary results indicate that only one of 110 at-risk children in the first preschool intervention cohort required special education services in kindergarten (Edmondson, Crim, & Grossman, 2015; Goldman Sachs, 2015). The outcome is interpreted to mean that the state has saved money that would have been spent on the 109 at-risk children had they required special education allotments. Accordingly, the results triggered an initial payout to the investors, Goldman Sachs and J.B. Pritzker. Payments are expected to continue if these children avoid later assignment to special education services.

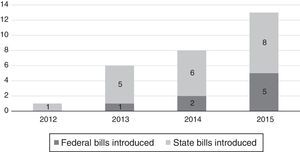

Recent developments

The use of SIBs is relatively recent, growing in popularity over the past decade (McKinsey & Company, 2012). Under the UK's presidency, the Group of Eight Industrialized Nations hosted the Social Impact Investment Forum in 2013 and initiated the Social Impact Investment Taskforce to “catalyze the development of the social impact investment market” (Gov.uk, 2014). Progress on this front is already being made, with numerous SIB initiatives being developed around the world (Social Impact Investment Taskforce, 2014). In the US, 15 states as of 2013 had demonstrated interest in SIBs (although most had yet to actually pass SIB legislation) (Shah & Costa, 2013). And at the federal level, the Obama administration regarded pay-for-success programs generally as one of the “key strategies” of its social innovation agenda (Greenblatt, 2014). Indeed, the federal government has already channeled millions of dollars and other support toward such initiatives.

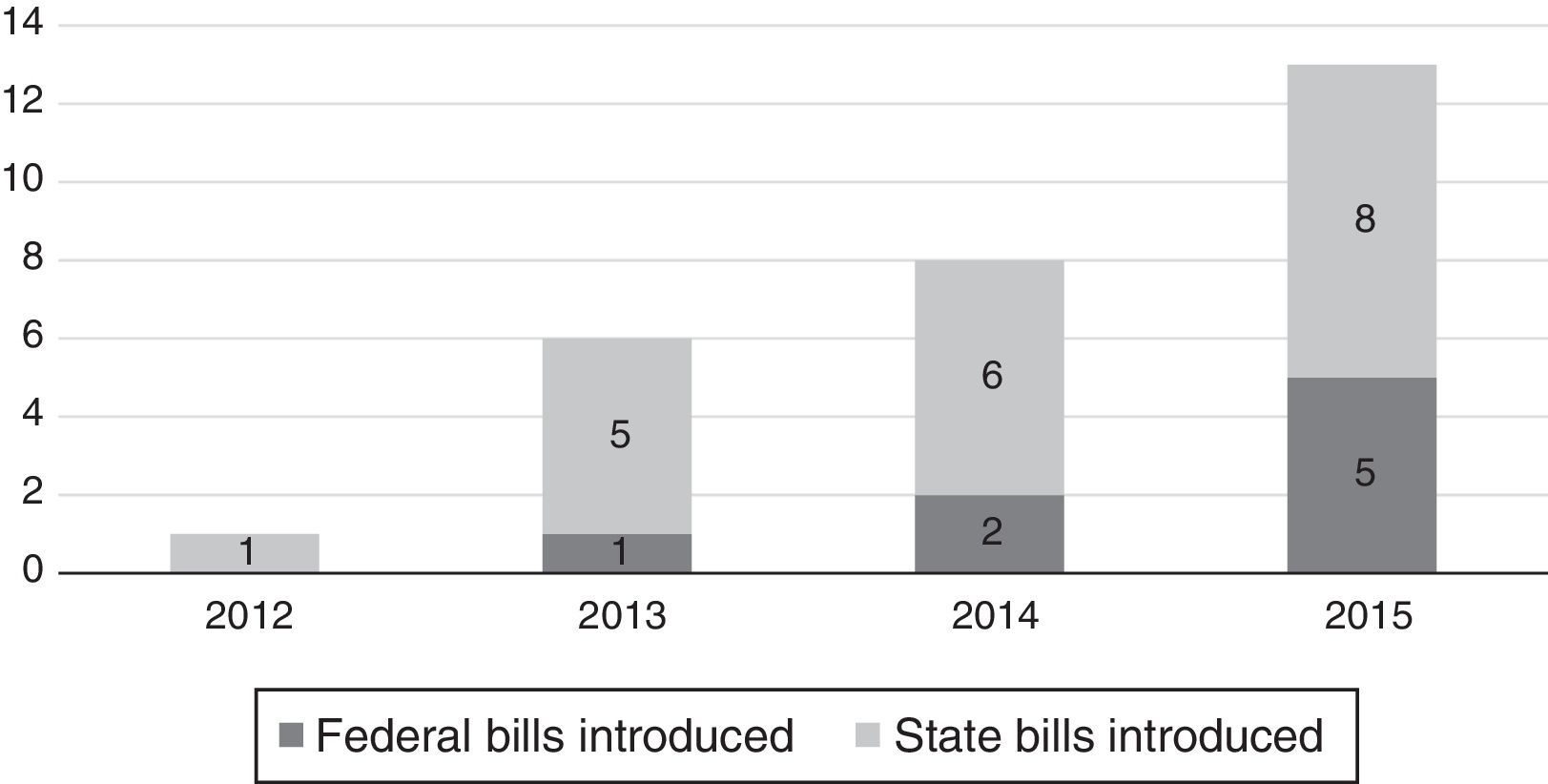

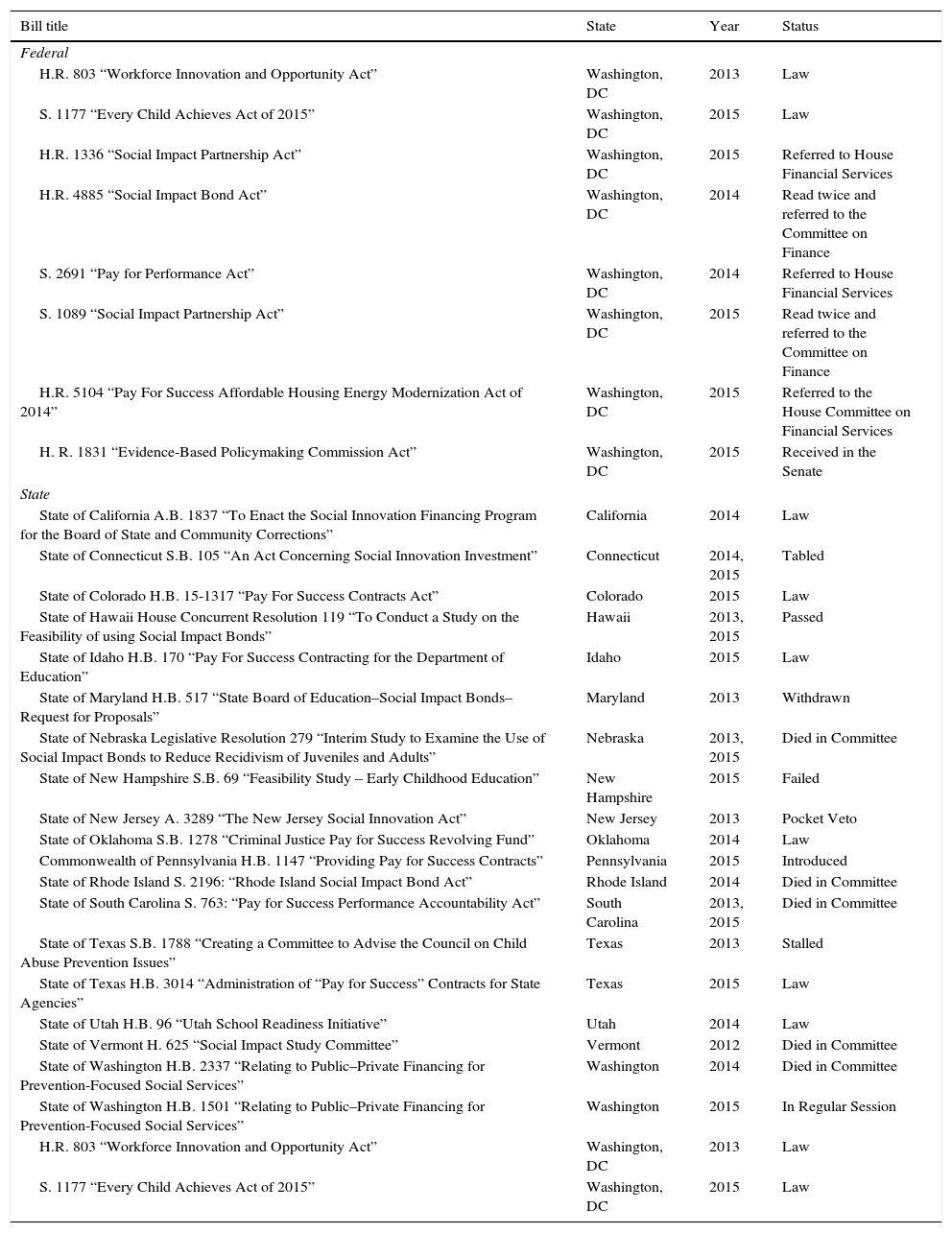

Table 1 and Fig. 2 document the legislative activity associated with SIBs from 2012 to 2015. More than two dozen bills were proposed during these years, with eight passing successfully. Although states are driving legislative activity, Fig. 2 shows an upward trend at both the state and federal levels in the number of bills introduced. Roughly sixty percent of sponsors are members of the Republican Party, and the remaining forty percent are Democrats. This suggests a degree of bipartisan appeal.

Overview of bills proposed and passed pertaining to social impact bonds (2012–2015).

| Bill title | State | Year | Status |
|---|---|---|---|
| Federal | | | |
| H.R. 803 “Workforce Innovation and Opportunity Act” | Washington, DC | 2013 | Law |
| S. 1177 “Every Child Achieves Act of 2015” | Washington, DC | 2015 | Law |
| H.R. 1336 “Social Impact Partnership Act” | Washington, DC | 2015 | Referred to House Financial Services |
| H.R. 4885 “Social Impact Bond Act” | Washington, DC | 2014 | Read twice and referred to the Committee on Finance |
| S. 2691 “Pay for Performance Act” | Washington, DC | 2014 | Referred to House Financial Services |
| S. 1089 “Social Impact Partnership Act” | Washington, DC | 2015 | Read twice and referred to the Committee on Finance |
| H.R. 5104 “Pay For Success Affordable Housing Energy Modernization Act of 2014” | Washington, DC | 2015 | Referred to the House Committee on Financial Services |
| H. R. 1831 “Evidence-Based Policymaking Commission Act” | Washington, DC | 2015 | Received in the Senate |
| State | | | |
| State of California A.B. 1837 “To Enact the Social Innovation Financing Program for the Board of State and Community Corrections” | California | 2014 | Law |
| State of Connecticut S.B. 105 “An Act Concerning Social Innovation Investment” | Connecticut | 2014, 2015 | Tabled |
| State of Colorado H.B. 15-1317 “Pay For Success Contracts Act” | Colorado | 2015 | Law |
| State of Hawaii House Concurrent Resolution 119 “To Conduct a Study on the Feasibility of using Social Impact Bonds” | Hawaii | 2013, 2015 | Passed |
| State of Idaho H.B. 170 “Pay For Success Contracting for the Department of Education” | Idaho | 2015 | Law |
| State of Maryland H.B. 517 “State Board of Education–Social Impact Bonds–Request for Proposals” | Maryland | 2013 | Withdrawn |
| State of Nebraska Legislative Resolution 279 “Interim Study to Examine the Use of Social Impact Bonds to Reduce Recidivism of Juveniles and Adults” | Nebraska | 2013, 2015 | Died in Committee |
| State of New Hampshire S.B. 69 “Feasibility Study – Early Childhood Education” | New Hampshire | 2015 | Failed |
| State of New Jersey A. 3289 “The New Jersey Social Innovation Act” | New Jersey | 2013 | Pocket Veto |
| State of Oklahoma S.B. 1278 “Criminal Justice Pay for Success Revolving Fund” | Oklahoma | 2014 | Law |
| Commonwealth of Pennsylvania H.B. 1147 “Providing Pay for Success Contracts” | Pennsylvania | 2015 | Introduced |
| State of Rhode Island S. 2196: “Rhode Island Social Impact Bond Act” | Rhode Island | 2014 | Died in Committee |
| State of South Carolina S. 763: “Pay for Success Performance Accountability Act” | South Carolina | 2013, 2015 | Died in Committee |
| State of Texas S.B. 1788 “Creating a Committee to Advise the Council on Child Abuse Prevention Issues” | Texas | 2013 | Stalled |
| State of Texas H.B. 3014 “Administration of ‘Pay for Success’ Contracts for State Agencies” | Texas | 2015 | Law |
| State of Utah H.B. 96 “Utah School Readiness Initiative” | Utah | 2014 | Law |
| State of Vermont H. 625 “Social Impact Study Committee” | Vermont | 2012 | Died in Committee |
| State of Washington H.B. 2337 “Relating to Public–Private Financing for Prevention-Focused Social Services” | Washington | 2014 | Died in Committee |
| State of Washington H.B. 1501 “Relating to Public–Private Financing for Prevention-Focused Social Services” | Washington | 2015 | In Regular Session |


Sources: www.congress.gov, www.policyinnovationlab.org, www.payforsuccess.org, www.LegiScan.com, and state legislature websites. Accessed January 13th–14th, 2016.

Note: Some titles abbreviated.
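The counts reported in the text (more than two dozen bills, eight of them passing) can be checked directly against Table 1. In the sketch below, the short bill labels such as "CA A.B. 1837" are our own shorthand, each bill appears once even though some rows span two sessions, and we count only bills whose status is "Law" as passing; Hawaii's concurrent resolution, listed as "Passed," is therefore excluded, which is our reading of the text's count.

```python
from collections import Counter

# (level, bill, status) for each unique bill in Table 1; statuses copied from the table.
BILLS = [
    ("federal", "H.R. 803", "Law"),
    ("federal", "S. 1177", "Law"),
    ("federal", "H.R. 1336", "Referred to House Financial Services"),
    ("federal", "H.R. 4885", "Read twice and referred to the Committee on Finance"),
    ("federal", "S. 2691", "Referred to House Financial Services"),
    ("federal", "S. 1089", "Read twice and referred to the Committee on Finance"),
    ("federal", "H.R. 5104", "Referred to the House Committee on Financial Services"),
    ("federal", "H.R. 1831", "Received in the Senate"),
    ("state", "CA A.B. 1837", "Law"),
    ("state", "CT S.B. 105", "Tabled"),
    ("state", "CO H.B. 15-1317", "Law"),
    ("state", "HI H.C.R. 119", "Passed"),
    ("state", "ID H.B. 170", "Law"),
    ("state", "MD H.B. 517", "Withdrawn"),
    ("state", "NE L.R. 279", "Died in Committee"),
    ("state", "NH S.B. 69", "Failed"),
    ("state", "NJ A. 3289", "Pocket Veto"),
    ("state", "OK S.B. 1278", "Law"),
    ("state", "PA H.B. 1147", "Introduced"),
    ("state", "RI S. 2196", "Died in Committee"),
    ("state", "SC S. 763", "Died in Committee"),
    ("state", "TX S.B. 1788", "Stalled"),
    ("state", "TX H.B. 3014", "Law"),
    ("state", "UT H.B. 96", "Law"),
    ("state", "VT H. 625", "Died in Committee"),
    ("state", "WA H.B. 2337", "Died in Committee"),
    ("state", "WA H.B. 1501", "In Regular Session"),
]


def summarize(bills):
    """Return total bills, a count by level, and the number whose status is 'Law'."""
    by_level = Counter(level for level, _, _ in bills)
    laws = sum(1 for _, _, status in bills if status == "Law")
    return len(bills), by_level, laws


total, by_level, laws = summarize(BILLS)
print(total, dict(by_level), laws)  # 27 {'federal': 8, 'state': 19} 8
```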

SIBs have thus generated considerable attention in recent years and are gaining traction as a promising public policy tool. Given the newness of SIBs and the resulting lack of empirical evidence regarding their success, we highlight three dimensions that help illuminate the potential benefits and drawbacks of SIBs.

Accountability

There are longstanding concerns about involving private actors in the public policy and service delivery mix (Kettl, 1997; Milward & Provan, 2000), and SIBs do not necessarily resolve these issues. In some respects, they exacerbate them. At the same time, SIBs offer a way to harness some of the resources that are abundant in the private sector, and they do so in a manner that conventional contracts do not. On this dimension—that of accountability—the differences between contracting and SIBs hinge on the role of the private actors in question.

We call attention to three related concerns associated with accountability. First, the introduction of outside investors complicates the accountability issue by locating the intermediary, not the government, in the center of the network, as seen in Fig. 1. Because funding for the SIB relies fundamentally on outside investors, the intermediary is called on to serve two masters. One, the government, is defined explicitly as the entity to which the intermediary is accountable. But the intermediary must also be responsive to the other, the outside investor. Lines of accountability are therefore muddied, and intermediaries must balance the government's interest with the pragmatic reality of needing to remain attractive to investors.3

This is especially problematic in the case of SIBs because the outside investors wield significant (if tacit) veto power in that their refusal to participate could jeopardize the SIB's existence. This highlights our second concern related to accountability: The structure of the SIB gives the contracted entities—the private investors in particular—more power than vendors retain in more conventional contract relationships, because they now occupy positions from which they can shape the scope of the goods and services provided, decisions that are usually made by the government. In the simpler contract scenario, the purpose of proposing a contract is to take a good or service that the government is already committed to providing (say, waste removal) and to see if a vendor can produce it more efficiently. The presumption is that the government could and would provide the good or service, whether by producing it itself or by contracting with a third-party provider. In a SIB arrangement, however, the purpose of proposing the SIB is to see if an outside investor is willing to pay for the service precisely because the government is not interested in doing so (at least not initially). If the intermediary cannot find an investor, then the government will not provide the service. The inability to attract private investors to SIBs thus jeopardizes the provision of the service in a way that the inability to attract vendors for traditional contracts does not, thereby giving investors a much stronger bargaining position. This problem must, of course, be weighed against the government inaction that would presumably have followed had the SIB partnership not been available.

The accountability concern for SIBs, then, is similar to but also different from the more general concern about involving private actors in the carrying out of government business. The general concern with any form of contracting is that private actors do not exist to serve the public interest in the same way that proper public entities do (Brown et al., 2006), even when their financial incentives are seemingly aligned with the public good. As Friedman (1970) famously observed, “in his [or her] capacity as a corporate executive, the manager is the agent of the individuals who own the corporations... and his [or her] primary responsibility is to them” (p. SM17). In the case of SIBs, this issue is magnified because the private actors in question are not simply those hired to do the bidding of government. Rather, they are asked, essentially, to fund the initiative on behalf of the government, which elevates their role and any concerns that go along with it.

Third, in a SIB arrangement private entities, such as corporations, wealthy investors, and foundations, are asked to assume an active role in promoting the intervention by virtue of their role as investors. This is different from the more passive role that service providers assume in a conventional contracting relationship. Concretely, this means that the involvement of private actors as investors could limit the range of issues that SIBs are able to address, because SIBs would be confined to those problems in which private actors are interested—financially or otherwise. Thorny problems may become a low priority if their outcomes are hard to measure, if they take decades to resolve (if they can be resolved at all), or if they are unpopular issues with which investors do not want to be associated—even if they are the issues that, absent the need to attract private investors, governments might be inclined to take on. Potential areas of focus are thus prioritized according to market values, not necessarily public ones. “[M]arkets are not mere mechanisms,” Sandel (2012) writes. “[T]hey embody certain values. And sometimes, market values crowd out nonmarket norms worth caring about” (p. 113). Private investors thus risk relocating an intervention from operating squarely in the public sphere—where concerns about voice and justice are meant to prevail—to the market sphere, which is dominated by concerns about efficiency, price, and profit (Box, 1999).

All this said, SIBs can retain the benefits of collaborating with private actors by keeping the government agency at the head of the agenda-setting table. With the appropriate checks in place, governments can establish desired outcomes and only then solicit the involvement of intermediaries, who would be obligated to recruit investors under the terms the government set. While “negotiated” contracts are becoming more common (DeHoog & Salamon, 2002), imposing a clear separation between the government and private actors (nonprofit or for-profit) would allow the intervention to draw on the vast resources available in the private sector, not to mention private investors’ amenability to risk-taking, and to channel them to public purposes that are within the boundaries of government priorities. Ideally, then, governments remain in control of, and are ultimately accountable for, the intervention.

It is possible, however, and perhaps likely, that private influence can be quite indirect and, therefore, harder to guard against. Thus, for example, even if future corporate partners are kept at arm's length during the creation of a SIB, this does not mean that their influence on intermediaries will be completely impeded. Governments and intermediaries recognize that they ultimately will need buy-in from investors, so the specter of corporate disapproval may shape the SIB creation process as if the corporations were actually involved. The potential consequence is that governments could end up having the appearance of accountability when it is actually private market actors whose interests are being served. At least aspirationally, governments are expected to heed all constituencies—those who do not have capital as well as those who do. In turning to private actors to fund government initiatives, governments forfeit even the appearance of treating all constituents as having an equal say in the agenda-setting and oversight process.

Measurement

The second dimension, measurement, addresses the role of selection and data in establishing and maintaining SIBs. Policy-makers and their critics are increasingly interested in evidence-based policies and practices (Marsh, Pane, & Hamilton, 2006; Rousseau, 2006). They feel that policies need to do more than follow tradition or reflect an ideological stance. The best policies are those that work, and we can know whether they work only if we take a data-oriented perspective on them (United States Government Accountability Office, 2015).

In terms of data orientation, SIBs are commendable for being measurement-focused. As seen in Fig. 1, evaluators are a key component of SIBs, as are validators when they are used. This is one area where SIBs might represent a significant improvement on traditional contracting, where government agencies sometimes lack the expertise or resources to monitor contracts effectively (Brown et al., 2006; Van Slyke, 2009). Measurement is not just one aspect of SIBs—something done as an afterthought or to meet a reporting requirement. In fact, measurement is at the core of SIBs; the SIB could not work if measurements were not being taken and progress analyzed (Warner, 2013). This makes SIBs appealing to those who rightly regard them as more data-driven than most public policy initiatives.

On the other hand, the central role of measurement in SIBs means that any problems with measurement may have an especially pronounced effect on the initiative. The fact that potentially large rewards to private investors are linked to specific measures raises the stakes. We thus identify two issues related to measurement. The first is a pragmatic one, addressing the degree to which it is possible to measure accurately the outcomes that determine whether a SIB is deemed effective. The second draws on social science research about the potential consequences of measurement, irrespective of whether measurement can be done well.

Measurement accuracy. First, the structure of SIBs depends on an impressive level of measurement. Measuring is always a process of simplifying the complexity of social life into something lesser but more tangible, and making outcomes operational is notoriously challenging (Kettl, 1997; McHugh, Sinclair, Roy, Huckfield, & Donaldson, 2013) but frequently required (Lynch-Cerullo & Cooney, 2011). Indeed, many SIB-sponsored interventions have seemingly straightforward outcomes that on close inspection can be difficult to discern (Fox & Albertson, 2011).

Related to outcomes, concerns about measurement are compounded by the difficulty of identifying the target population who should qualify for the intervention. In Utah's SIB, for instance, which targets children who might later need special education services, it is difficult to identify the children who are “at-risk” and thereby to determine who qualifies for the intervention. The identification of at-risk preschool children relies heavily on cognitive testing at age three or four, and how well such tests predict which children are bound for special education is, at best, a matter of debate.

Measuring the cognitive ability of young children is difficult and not well facilitated by standardized tests, primarily because young children are developmentally unreliable test takers (Meisels, 2007). Early childhood development is characterized by extensive variability and change. During their first eight years of life, children undergo significant brain growth and physiological development and learn to regulate their emotions—all of which are influenced by the vast differences in genetics, experience, and opportunity that children face. Therefore, test scores of young children that are used to make predictions—even relatively short-term predictions—about a child's future educational abilities or needs can be unstable (La Paro & Pianta, 2000). Furthermore, substantial racial and ethnic variations (even after accounting for demographic and socio-economic factors) are often reported on these tests, indicating potential biases (Restrepo et al., 2006; Rock & Stenner, 2005). This all amounts to the possibility of “false positives,” with private investors receiving a financial return from the state for children who would have avoided special education placement even without the intervention, simply because the test does not perfectly predict later educational outcomes.
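The false-positive concern can be made concrete with simple arithmetic. In the sketch below, `ppv` (the share of flagged children who would in fact need special education without any intervention, i.e. the screening test's positive predictive value) and `effect` (the share of those children whom the program keeps out of special education) are hypothetical parameters, not estimates for Utah or any other SIB. The key assumption, taken from the payment rule described earlier, is that every flagged child who avoids special education counts as a paid success, whether or not the program caused it.

```python
def decompose_successes(n_flagged: int, ppv: float, effect: float) -> tuple[float, float]:
    """Split counted 'successes' into program-caused ones and false positives.

    ppv: hypothetical share of flagged children truly headed for special education.
    effect: hypothetical share of those truly at-risk children the program diverts.
    Returns (caused_by_program, would_avoid_anyway).
    """
    would_avoid_anyway = n_flagged * (1 - ppv)    # false positives: paid for regardless
    caused_by_program = n_flagged * ppv * effect  # genuine program effect
    return caused_by_program, would_avoid_anyway


# Hypothetical inputs: 100 flagged children, half truly at risk, program helps 30% of them.
caused, anyway = decompose_successes(100, ppv=0.5, effect=0.3)
share_false = anyway / (caused + anyway)
print(caused, anyway, round(share_false, 2))
```

Under these assumed inputs, 50 of the 65 paid-for successes would have occurred without the program, which illustrates why the predictive accuracy of the screening test matters so much to what the state ends up paying for.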

The challenges associated with finding the appropriate at-risk group were not anticipated by those who sponsored the Utah bill. For example, according to State Senator Urquhart, the senate sponsor, children who scored two standard deviations below the mean on a test like the PPVT (Peabody Picture Vocabulary Test) would have “a 95% likelihood of requiring special education.” On the house side, State Representative Hughes noted that “the assessments are identifying those who would otherwise, without intervention, absolutely request special education dollars.” Statements like these, which communicate more certainty than the evidence warrants, suggest that advocates do not always address the difficulties associated with measurement.

Moreover, initial data from the Utah SIB suggest that the at-risk group may have been misidentified. Preliminary results indicate that all but one child in the treatment cohort of Utah's SIB avoided special education, an outcome the SIB attributes to the intervention. The SIB assumes that all 110 students would have required special education allotments but that the preschool intervention remedied the need for nearly every student involved. Such impressive—almost universally positive—results suggest that it is worthwhile to consider other explanations. In fact, the New York Times reports that 30 to 50 percent of the children in the treatment cohort came from homes where English is not the only language spoken, yet the tests were given in English only (Popper, 2015). If low scores actually reflected English language ability, then it is not surprising that almost none of the students ended up being assigned to special education.
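A back-of-the-envelope check shows why these results invite scrutiny. Taking at face value the sponsor's claim of a 95% likelihood of requiring special education, the sketch computes the share of expected placements that the preschool program would have had to prevent to produce the observed outcome of one placement among 110 children. The function name is our own, and the calculation is illustrative, not an evaluation.

```python
def implied_effect(n_children: int, claimed_risk: float, observed_placements: int) -> float:
    """Share of expected special-education placements the program must have prevented.

    Takes the claimed risk at face value: absent intervention,
    n_children * claimed_risk placements would be expected.
    """
    expected = n_children * claimed_risk
    return (expected - observed_placements) / expected


# 110 children, claimed 95% risk, 1 observed placement (figures from the text).
print(round(implied_effect(110, 0.95, 1), 3))  # 0.99
```

An implied prevention rate of roughly 99% (about 103.5 of an expected 104.5 placements) is the kind of near-universal success that makes it worthwhile to consider other explanations, such as misidentification of the at-risk group.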

Relatedly, the ability of the program evaluation to detect the effectiveness of the SIB hinges on how well the evaluation is designed. Randomized controlled trials (RCTs) are the gold standard for evaluating program effectiveness (Institute of Education Sciences, 2003), because the randomly assigned control group provides a baseline against which to measure results. Despite this strength, most program evaluations do not use RCTs. They are expensive to conduct and require well-trained researchers who operate independently of the state, the service delivery organization, and the private investors. Whether such evaluations would be funded by the state or by private investors as part of the SIB—as well as how evaluators would be selected and hired—is unclear but could make the SIB more costly to implement (e.g., in the Utah SIB, the state pays for the evaluation). Additionally, random assignment of participants to intervention and control groups requires that researchers be involved in the intervention process from the outset, but their involvement creates an additional point of negotiation that slows the start-up of the intervention. Random assignment also means that some of those who qualify for the intervention will not be allowed to participate in it, which may be off-putting to constituencies and officials. The Peterborough SIB thus relied on a quasi-experimental method, which is methodologically sophisticated but still limited in what it can tell researchers (Jolliffe & Hedderman, 2014).
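Random assignment itself is simple to express; the harder problems are the institutional ones just described. The sketch below, with hypothetical function names, splits a list of eligible participants into treatment and control groups using Python's standard `random` module and compares the share of successes in each group. It ignores stratification, attrition, and statistical inference, all of which a real evaluation would need to address.

```python
import random


def randomize(participants, seed=None):
    """Randomly split eligible participants into treatment and control groups.

    With an odd number of participants, the control group gets the extra person.
    """
    rng = random.Random(seed)  # seeded generator so the assignment can be audited
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def success_rate(outcomes):
    """Share of 1s (successes) in a list of 0/1 outcomes."""
    return sum(outcomes) / len(outcomes)


def difference_in_rates(treated_outcomes, control_outcomes):
    """Estimated effect: treatment success rate minus control success rate."""
    return success_rate(treated_outcomes) - success_rate(control_outcomes)


eligible = [f"child-{i}" for i in range(10)]
treatment, control = randomize(eligible, seed=42)
print(len(treatment), len(control))
```

Half of the eligible participants end up in the control group and do not receive the intervention, which is precisely the feature that may be off-putting to constituencies and officials.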

The social consequences of measurement. A second concern about measurement has less to do with the challenges of measuring accurately and more to do with the social consequences of measurement. Social scientists have long observed that measuring changes things, sometimes for the better but often in unanticipated and undesirable ways. Social measures capture only a slice of what they are intended to gauge. This is understandable, and it becomes problematic only to the extent that relying on the metric bestows added importance on that particular slice. Complex social phenomena get simplified so that some bits of information come to be understood as more relevant while others are deemed less so (cf. Kettl, 1997).

One result is that social measures are “reactive” (Espeland & Sauder, 2007). They do more than passively quantify or assess. They change organizations and, indeed, entire fields of activity, as stakeholders (funders, overseeing bodies, publics, etc.) develop new ways of judging quality and merit, and as those who are measured engage in new behaviors to produce favorable outcomes. This might be beneficial if, in the process, those being measured did not attend less to other, perhaps equally important, behaviors that fall outside the purview of the specific metric. Thus, with the introduction of new and consequential measurements, organizations and the fields in which they are embedded might reorient themselves in critical, and not always beneficial, ways.

Examples are not hard to find. One comes from the field of law, where the emergence and growing importance of law school rankings has fundamentally altered how law schools operate (Espeland & Sauder, 2007; Sauder & Espeland, 2009). Similar critiques have been made about the increased use of impact factors in evaluations of scholarly productivity (Lawrence, 2003; Weingart, 2005) and about the increasingly metrics-based focus of medicine (Wachter, 2016). Closer to the substance of this article, the public education system in the United States was dramatically changed by the introduction of accountability as promoted through high-stakes testing (Hursh, 2005). The effects have been numerous. They include shifts in educational decision-making from communities and local school districts to state and federal governments (Hursh, 2005); a preoccupation with testing and test-based outcomes (Madaus & Russell, 2010), even though most teachers and administrators do not necessarily know how to use these outcomes to improve their instruction (Marsh et al., 2006); a narrowing of curriculum to facilitate a “teaching to the test” approach (Abrams, Pedulla, & Madaus, 2003; Berliner, 2011), including an increased focus on high-stakes subject areas at the expense of creative subjects such as art and music and of activities that emphasize movement, such as recess (Berliner, 2011; Galton & MacBeath, 2002; Woodworth, Gallagher, & Guha, 2007); and an increased incentive to cheat and “game the system” to avoid unpleasant sanctions that school personnel feel are unfair and outside of their control (Amrein-Beardsley, Berliner, & Rideau, 2010; Madaus & Russell, 2010).

The point, of course, is not to argue that measurement is necessarily a negative feature of SIBs. In fact, it is an essential component of any policy initiative. Rather, we raise the issue to draw attention to the difficulties associated with measurement and to its unexpected consequences, both of which might be especially likely in the context of SIBs. Because virtually everything about the successful implementation of a SIB depends on one or a few critical measurements, the potential for these measurements to shape the actors involved seems quite high. Measurement in the context of SIBs is thus not just one of many aspects of a complex intervention effort. It is the issue on which all of the others hang, so there are good reasons to be thoughtful about the potential impacts measurement may have in fields such as education, prison reform, and the like.

Cost-effectiveness

Third, we consider the extent to which SIBs are cost-effective. Cost-effectiveness is one of the principal draws of SIBs, and it rests on the fact that governments are under no obligation to pay for interventions that fail to meet the desired outcomes. This is the benefit of having intermediaries as the central node of the SIB network (see Fig. 1). SIBs are also seen as cost-effective because they provide a way for governments to harness private capital for public purposes without entering into traditional loan or bond agreements. More generally, advocates see in SIBs a way to bring market discipline, based on competition and pay tied to performance, to the public sector.

While SIBs certainly offer an innovative way to shift some of the financial risk away from taxpayers (a benefit over traditional contracting, where taxpayers assume the risk that the contracted intervention will not work), it is important to remember that the cost savings of SIBs are a function of paying only for successful outcomes. If the intervention is highly successful, the government may well end up paying more than it would have cost to fund the intervention directly, because by providing the intervention itself the government would have avoided the interest ultimately paid to private investors.

While the option of paying only for successful interventions is politically and financially appealing, unsuccessful interventions are not free. In fact, many of the legislative SIB bills officially carry fiscal notes of $0, yet SIBs require a considerable amount of time, energy, and money on the part of governments to set up and facilitate, a burden most likely shouldered by taxpayers (United States Government Accountability Office, 2015). In this respect, then, SIBs do not represent an improvement on traditional contracting, which can also be subject to high transaction costs (Brown et al., 2006).
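The two cost points above can be made concrete with a deliberately simplified expected-cost sketch. The symbols are our own illustrative assumptions, not terms from any actual SIB contract: C is the cost of delivering the intervention, r the return promised to investors if the outcome target is met, p the probability that it is met, and T the government's own costs of structuring, negotiating, and overseeing the SIB, which are incurred whether or not the intervention succeeds. The sketch further assumes an all-or-nothing payment, ignores discounting, and ignores any transaction costs of direct provision.

```latex
% Direct funding (assumed): the government pays C regardless of outcome
E[\text{Direct}] = C

% SIB (assumed): the government repays principal plus return only on success,
% but bears set-up and oversight costs T in every case
E[\text{SIB}] = p\,(1+r)\,C + T

% Under these assumptions the SIB is cheaper in expectation only when
p\,(1+r)\,C + T < C
\quad\Longleftrightarrow\quad
p < \frac{1 - T/C}{1+r}

% If success is certain (p = 1), then E[SIB] = (1+r)C + T > C
```

Even with T = 0 and a hypothetical 10 percent return (r = 0.1), the SIB is cheaper in expectation only if the probability of success is below 1/1.1, or roughly 0.91, and any positive T lowers that threshold further. Under these simplifying assumptions, the more confident a government is that an intervention works, the weaker the purely financial case for routing it through private investors.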

To illustrate, the Peterborough SIB project took over two years to structure. Almost one full year was spent negotiating the measures by which success would be determined and investors paid. Critics claimed that the time would have been better spent on improving public policy or creating a more widely available program, “rather than designing the arcane structure of a SIB for the benefit of private investors tied to one project” (Cohen, 2014). And even representatives from Social Finance and the Ministry of Justice, two proponents of the project, “described the development of [the Peterborough] SIB – in particular, determining the outcome measurements and payment model – as complex and time-consuming, requiring significant resources in the development stages” (Disley et al., 2011, p. 13; cf. Fox & Albertson, 2011). At present, it is unclear which of these costs, if any, will be repaid by private investors.

Thus, considerable effort must go into designing SIBs, drafting legislation, monitoring processes, and so forth, regardless of whether the initiative succeeds. Indeed, all effort that public officials expend to make SIBs happen is time and energy not devoted to other efforts, so some costs are always inherent to SIBs. To our knowledge, such costs have not been adequately scrutinized.

Discussion & conclusion

To summarize, we have appraised SIBs as a new form of public–private partnership. To promote more scholarly dialog on SIBs, our contribution has been to synthesize the arguments for and against them, in particular by comparing SIBs with more traditional forms of contracting, and to do so in light of the preliminary results of two SIBs. One of the central reasons to approach the use of SIBs critically is the unknown risks associated with any new social innovation. There is much to like about SIBs, and in many respects they offer an improvement on conventional government contracts. However, in an effort to create a risk-free approach to pursuing public priorities, or at least to shift risk to private actors, SIBs may in fact trade one type of risk for others. They may reduce financial risks, at least in the short term, by paying only for interventions that produce desired outcomes. But they do so by introducing market risks, which may offset the benefits gained.

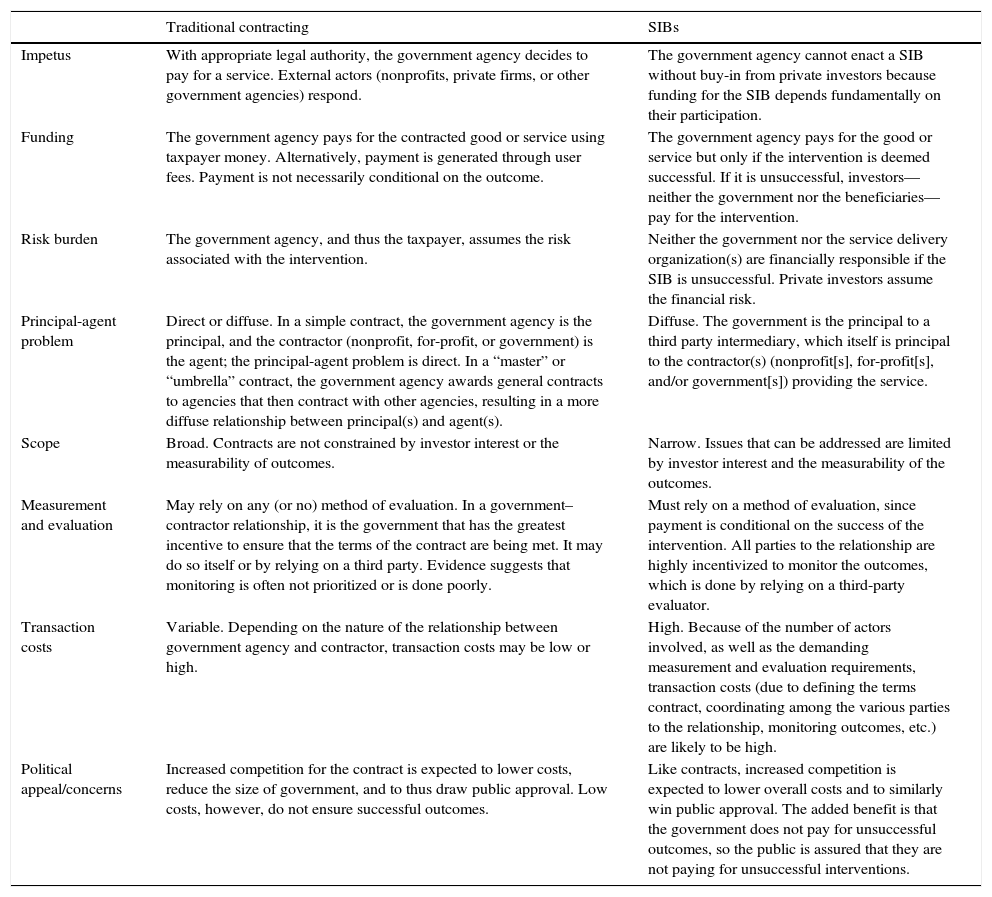

In addressing accountability, measurement, and cost-effectiveness, we drew comparisons to contracting in order to determine whether SIBs are in fact an improvement on traditional contracts. On this question, the results are mixed: in some respects SIBs do improve on traditional contracts, but not universally. Appendix A summarizes the comparison.

Most importantly, the comparison between conventional contracts and SIBs suggests that while SIBs have some very desirable qualities that are indeed an improvement on traditional contracting, they also introduce new concerns that are absent from, or less serious in, simpler contracts. Arguably, the most significant benefit over contracting is that SIBs shift risk away from government agencies and taxpayers and onto private actors. Moreover, SIBs necessarily incentivize high levels of evaluation and measurement in a way that government contracts do not. True, government contracts may specify the need for evaluation, but SIBs are unique in two respects. First, conventional government contracts do not cease to function if poor evaluation procedures are implemented, whereas SIBs do. Second, the major parties to the SIB (the government agency, the intermediary, and the investors) all have incentives to take an interest in the evaluation being produced (Baliga, 2013; Liebman, 2011). More eyes on the process could motivate greater transparency throughout implementation and evaluation. This differs from conventional contracts, where it is the government agency that has the greatest incentive to monitor the evaluation process.

Despite these advantages over contracts, SIBs present some concerns that contracts do not. For instance, SIB creation is conditional on buy-in from investors, whereas in a contract the government agency is able to set the terms first and only then engage contractors. Thus, the role of private investors in the SIB structure intensifies, rather than mitigates, concerns about the role of private actors in the public policy and service delivery mix (Kettl, 1997; Milward & Provan, 2000). This, along with the emphasis on measurement, means that the scope of social problems that SIBs can usefully address may be much narrower than the scope of problems that contracts can address. Consequently, even if SIBs prove to be excellent public policy tools, the range of issues to which they can be applied could be comparatively small. Finally, SIBs aggravate concerns about the undesirable social consequences of measurement beyond those that conventional contracting raises, and the number of actors involved in a SIB relationship may make SIBs pragmatically more difficult to coordinate than conventional contracts (Warner, 2013).

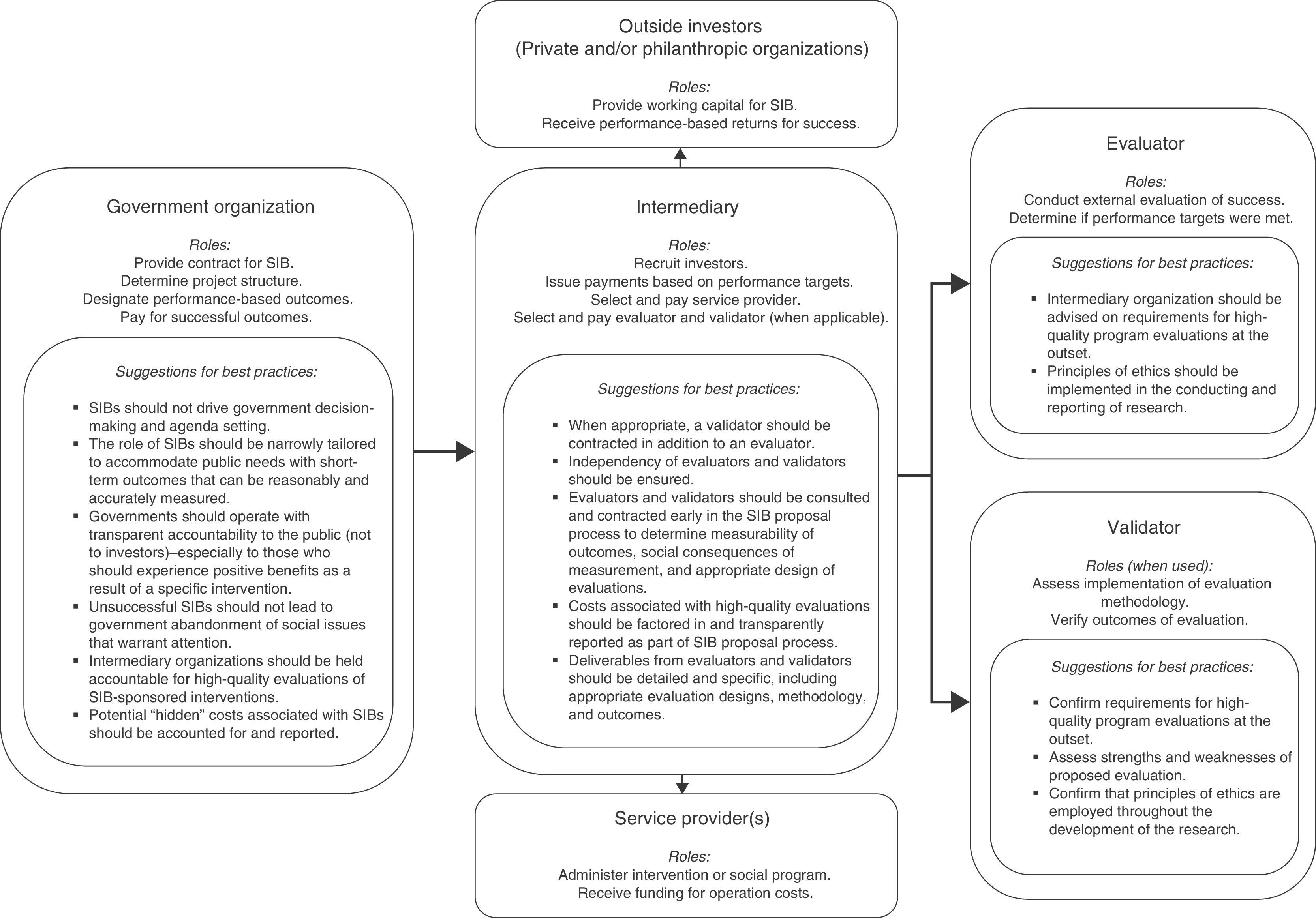

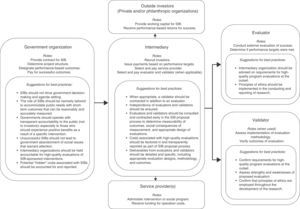

Regardless of their benefits or shortcomings, given the current political climate and the broad neoliberal discourse that prevails in Western nations, it is likely that creative efforts to use the tools of capitalism (cf. Kinsley, 2008) to address social problems are here to stay—at least for the foreseeable future. With that in mind, we end with a few suggestions that might help SIBs avoid some of the pitfalls described here. The discussion follows Fig. 3, which provides a more comprehensive summary of suggestions.

Governments have long been understood as important for addressing market failures and other problems, and with good reason. They can choose to take on problems that private markets have no interest in pursuing, and they can be held accountable to the public while doing so (Kettl, 1997). Thus, if a government feels that an issue should be addressed and sees compelling evidence that an intervention would produce desirable outcomes, then it should perhaps fund the effort directly to avoid the concerns raised above. In jurisdictions where such efforts are difficult to implement, however, SIBs might be seen as the best option available.

When SIBs are used, policymakers and SIB advocates would do well to remember that private actors, such as for-profit corporations, are under no obligation to act in the public interest and for that reason alone should be kept on the sidelines of important decision-making as it pertains to SIBs. Governments are ultimately accountable to the public, so their (and their constituencies’) interests must frame the SIB-creation process. Some SIBs have relied on private philanthropic foundations as investors (United States Government Accountability Office, 2015; Warner, 2013), which we see as a potentially promising compromise. In the US, private foundations are not public entities in the same way that governments are, yet they are prohibited by law from operating for the benefit of private interests and must operate for exempt—charitable, religious, educational, etc.—purposes.

For their part, intermediaries should do their best to be aware of and take steps to avoid the concerns with SIBs identified throughout this article. For instance, their negotiations with outside investors should be framed according to and driven by public priorities. To avoid being subject to the interests of one or two investors, intermediaries might consider defining the terms of the SIB without input from the investors and only then advertise the SIB to a wide range of potential investors. Moreover, intermediaries should involve evaluators (and validators) from the very early stages of the SIB-creation process so that the SIB can be structured in a way that maximizes the potential for high-quality results.

Finally, evaluators (and validators) should be understood as a critical part of the SIB. The extent to which SIBs are able to become trusted public policy tools will depend significantly on how well the results of their interventions can be trusted. Their trustworthiness will increase if evaluators are involved at every stage of the SIB, if they are completely independent of the individuals and organizations who have a vested interest in the success of the intervention, and if their processes and results are seen as transparently produced.

There are, of course, benefits, drawbacks, and questions about SIBs beyond those addressed here (see, e.g., Butler, Bloom, & Rudd, 2013; Fox & Albertson, 2011; Humphries, 2013; Trupin, Weiss, & Kerns, 2014). Our purpose in writing is not to create an exhaustive list of pros and cons; rather, we have aimed to focus attention on the few issues that we see as most critical, which are no doubt informed by our backgrounds in the social sciences. Legal and public management scholars, economists, financial analysts, and specialists (such as healthcare or criminal justice professionals), for example, will certainly raise questions beyond those we have addressed here.

Advocates of SIBs have likely considered many of these issues, and some SIBs have already taken steps to address them. Whether or not they will be successful remains to be seen, and empirical research will be valuable in helping us evaluate the extent to which these concerns should discourage or promote future investments in SIBs.

We thank Janis Dubno for answering questions and providing feedback on an earlier draft. Any errors are our own.

| | Traditional contracting | SIBs |
|---|---|---|
| Impetus | With appropriate legal authority, the government agency decides to pay for a service. External actors (nonprofits, private firms, or other government agencies) respond. | The government agency cannot enact a SIB without buy-in from private investors because funding for the SIB depends fundamentally on their participation. |
| Funding | The government agency pays for the contracted good or service using taxpayer money; alternatively, payment is generated through user fees. Payment is not necessarily conditional on the outcome. | The government agency pays for the good or service, but only if the intervention is deemed successful. If it is unsuccessful, the investors, rather than the government or the beneficiaries, pay for the intervention. |
| Risk burden | The government agency, and thus the taxpayer, assumes the risk associated with the intervention. | Neither the government nor the service delivery organization(s) are financially responsible if the SIB is unsuccessful. Private investors assume the financial risk. |
| Principal-agent problem | Direct or diffuse. In a simple contract, the government agency is the principal and the contractor (nonprofit, for-profit, or government) is the agent; the principal-agent problem is direct. In a “master” or “umbrella” contract, the government agency awards general contracts to agencies that then contract with other agencies, resulting in a more diffuse relationship between principal(s) and agent(s). | Diffuse. The government is the principal to a third-party intermediary, which is itself the principal to the contractor(s) (nonprofit[s], for-profit[s], and/or government[s]) providing the service. |
| Scope | Broad. Contracts are not constrained by investor interest or the measurability of outcomes. | Narrow. The issues that can be addressed are limited by investor interest and the measurability of the outcomes. |
| Measurement and evaluation | May rely on any (or no) method of evaluation. In a government–contractor relationship, it is the government that has the greatest incentive to ensure that the terms of the contract are being met. It may do so itself or by relying on a third party. Evidence suggests that monitoring is often not prioritized or is done poorly. | Must rely on a method of evaluation, since payment is conditional on the success of the intervention. All parties to the relationship are highly incentivized to monitor the outcomes, which is done by relying on a third-party evaluator. |
| Transaction costs | Variable. Depending on the nature of the relationship between the government agency and the contractor, transaction costs may be low or high. | High. Because of the number of actors involved and the demanding measurement and evaluation requirements, transaction costs (for defining the terms of the contract, coordinating among the various parties to the relationship, monitoring outcomes, etc.) are likely to be high. |
| Political appeal/concerns | Increased competition for the contract is expected to lower costs, reduce the size of government, and thus draw public approval. Low costs, however, do not ensure successful outcomes. | As with contracts, increased competition is expected to lower overall costs and similarly win public approval. The added benefit is that the government does not pay for unsuccessful outcomes, so the public is assured that it is not paying for unsuccessful interventions. |

Note: All authors contributed equally to the manuscript and are listed in alphabetical order.

Only in the broadest terms—as financial assets defined by a contract whose redemption date is a year or more past its issue date (Black et al., 2012)—can SIBs be thought of as bonds. For SIBs, the payout is conditional on certain performance metrics being met. This is not true for conventional bonds, where the returns are typically fixed (McHugh et al., 2013).

Officially, the UK government framed the new rehabilitation initiative as neither an indictment nor an endorsement of the Peterborough SIB. To critics, the fact that the SIB failed to meet one important benchmark and was ended early is evidence that it did not live up to its promise. Advocates, however, felt that the SIB was on its way to meeting the next benchmark and observed that the national rehabilitation initiative would continue with some pay-for-success elements (but without funding from private investors); at least one commentator framed the termination of the Peterborough SIB simply as a casualty of larger policy changes (Cahalane, 2014; Cook, 2014; Ganguly, 2014; United States Government Accountability Office, 2015).

Of course, even in conventional contracting, it is possible that there will be a loss of accountability, as Baliga (2013) argues has happened in the case of the privatization of US prisons.