The objective structured clinical examination (OSCE) is an examination format that enables students to be evaluated in a uniform, standardized, reliable, and objective way. It is carried out in different clinical stations that simulate real clinical situations and scenarios. Numerous universities in Spain and other countries use this approach for the final examination of medical students. This update describes the organization, design, and fundamentals of the OSCE, proposing that radiology should form part of multidisciplinary OSCEs to the extent that it forms part of clinical practice. Moreover, it is worthwhile and timely to introduce the OSCE in undergraduate and postgraduate training in radiology. Online platforms enable two-dimensional OSCEs that are cost-effective in terms of staff, resources, and physical space, although this approach has certain limitations. Virtual world technologies make it possible to reproduce OSCE stations in three-dimensional scenarios; recent experiences in radiology have shown that this approach interests and motivates students and is widely accepted by them.

The Objective Structured Clinical Examination (OSCE) is a test format which comprises different clinical stations (physical spaces that replicate real-life clinical scenarios and situations where the test takes place and students are evaluated)1. It enables the evaluation of students' clinical skills using checklists in a reliable, objective, consistent and standardised way. It has also been noted that the OSCE increases student autonomy, learning and positive perception, which equates to better quality health care provision in the future2.

The first OSCE was conducted by Harden in 1972 at the University of Dundee as an alternative to traditional clinical assessment methods. He tested the students using a few patients who were available during the examination period3. Traditional assessments had limited reproducibility: because scoring was not standardised, a student's score could be affected by the patient's performance or by examiner bias. The OSCE model was created to standardise clinical examination and reduce variables and biases that could influence the assessment. The aim of an OSCE is to use a simulated clinical environment to test how well students deal with situations and respond to questions that arise. It also assesses theoretical and practical skills, leadership, situational awareness, resource management and teamwork. In recent decades, use of the OSCE model has grown exponentially around the world, at both undergraduate and postgraduate levels4. As shown in Table 1, an OSCE is typically composed of 10 to 20 stations that assess a wide range of clinical or practical competencies5. Since its original implementation, the OSCE has become one of the main methods for assessing clinical competence in undergraduate medical training6. Given the importance of radiology in clinical medicine today, it would be interesting to determine how frequently radiological imaging is used in OSCEs and the variability that exists between universities7.

Table 1. Clinical skills often assessed in an OSCE.

| Clinical skill |
|---|
| Communication skills and professionalism |
| Clinical history-taking skills |
| Physical examination skills |
| Practical/technical skills |
| Clinical reasoning skills |
| Interpretation of findings (including imaging tests) and clinical research |
| Clinical decision-making, including differential diagnosis |
| Clinical management, including treatment and referral |
| Skills for solving clinical problems |
| Acting safely and appropriately in an emergency clinical situation |
| Critical thinking in therapeutic management |
| Patient education |
| Health promotion |
| Teamwork |
| Interdisciplinary management in health care |

This article aims to define the OSCE model and explain the importance of including radiology stations in this test.

OSCEs in Spain

In the 2011/2012 academic year, the National Conference of Medical School Deans (CNDM hereafter, as per the Spanish acronym) agreed that all medical schools in Spain should carry out a final practical assessment of clinical and communication skills by means of an OSCE8. First, an OSCE training course was organised at the University of Alcalá de Henares. Subsequently, the CNDM established that all students from all 40 Spanish medical schools finishing their studies in the 2015/2016 academic year would be required to pass an OSCE that assessed eight clinical competencies: history taking; physical examination; communication; technical skills; clinical judgement, diagnosis and treatment; disease prevention and health promotion; interprofessional relations; and ethical and legal considerations9. Radiological imaging usually features in tests relating to diagnosis; in some universities it features in stations that are not specific to radiology, and in others in radiology-specific stations. Thus, the OSCE has been integrated at national level at the end of undergraduate studies as a multidisciplinary exam that evaluates theoretical and practical knowledge and assesses how this knowledge is handled and managed.

Organisation, design and core principles of OSCE

The two core principles of the OSCE model are its objectivity and its structure4. Its objectivity is achieved through a standardised examination model, the standardised performance of the actors/patients (standardised patients) and prior examiner training to ensure that they ask the same questions and assess the students in a similar way. The structure focuses on designing the experience in such a way that each OSCE station assesses a specific clinical task in a structured and standardised way, in line with the course plan. These two fundamental principles ensure that a well-designed OSCE is a highly reliable tool with low bias and high internal validity4. Agarwal et al.10 conducted an interesting analysis of the OSCE system's strengths, weaknesses, opportunities and threats (SWOT), which is summarised in Table 2.

Table 2. Analysis of strengths, weaknesses, opportunities and threats (SWOT) for OSCE.

| Strengths | Weaknesses |
|---|---|
| Objective | Costly (time/effort) |
| Authentic | Time-consuming in preparation |
| Reliable and valid | Requires large space to set up the different OSCE stations |
| Provides candidates feedback | |
| Ability to evaluate in real time | |
| Overcomes bias of traditional evaluation | |
| Ensures wide coverage of curriculum (ethics, communication, patient care, etc.) | |

| Opportunities | Threats |
|---|---|
| To replace the traditional evaluation system | Opposition to the idea of revamping the traditional evaluation system |
| To implement OSCE evaluation in all medical specialities | Delay of active participation and brainstorming, which is mandatory for OSCE |
| To usher in objectivity at the expense of subjectivity in assessment | Negative mindset of faculty members due to a feeling of threat |

Key elements of an OSCE include11,12:

a) The steering committee. This is made up of a group of 6 to 12 clinical professionals with experience, knowledge and skills in running OSCEs. They are responsible for fundamental aspects such as guaranteeing the confidentiality of the contents, defining the level of difficulty to be assessed, evaluating the results, compiling the information to be received by the students, organising the teaching staff responsible for the different stations, and issuing the corresponding certificates. The committee is also responsible for establishing criteria for professional examiners, defining the weighting criteria for the tests, and designing the clinical situations, cases and elements that will comprise the clinical stations.

b) Specification table. This is a fundamental document that includes the overall design of the test. It consists of rows and columns with the location of the stations, student rotations (circuits or rounds), the subjects to be tested, the assessors, the testing instruments and each station’s design (see Supplementary material, Annex 1).

- c

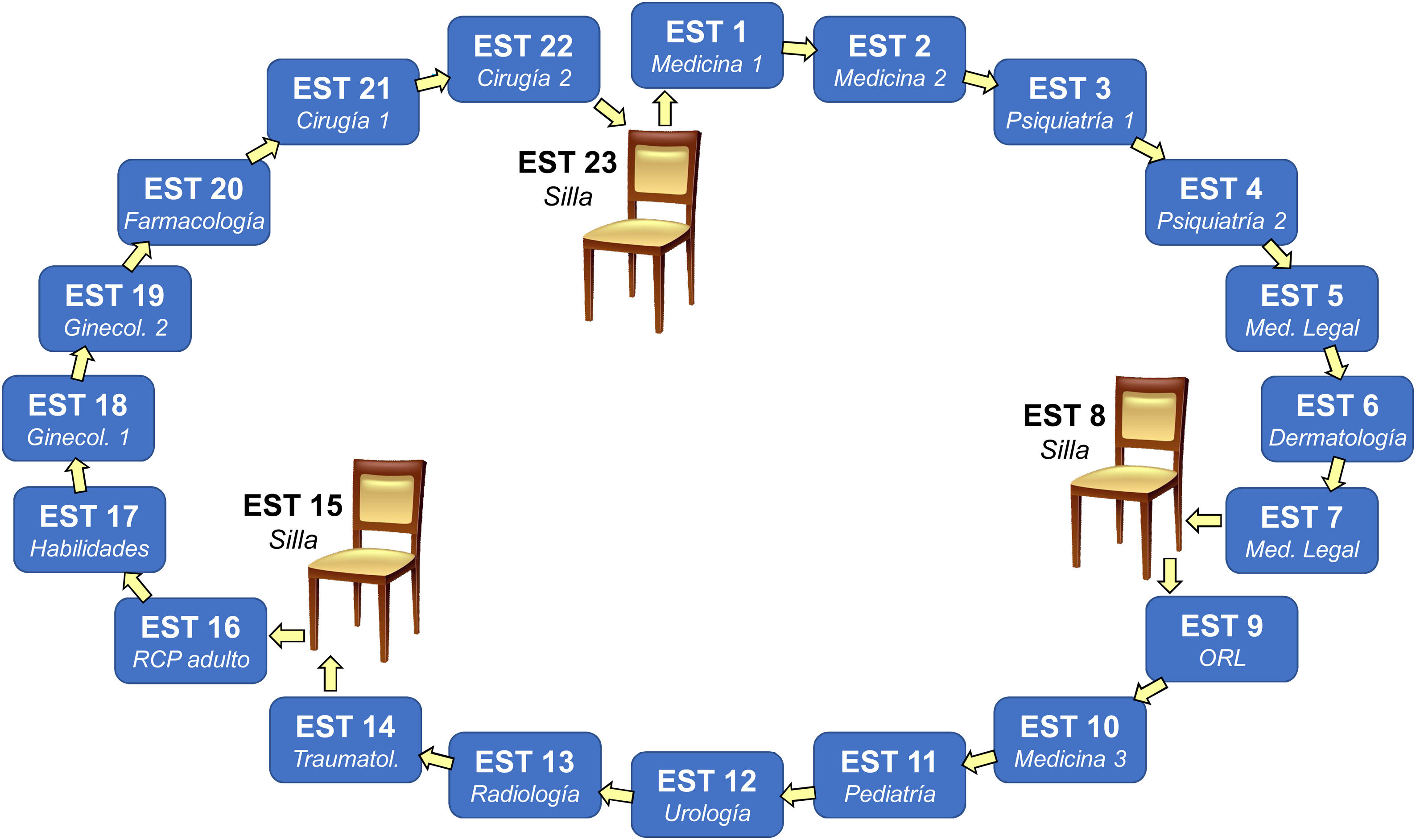

Clinical stations. These are the physical spaces where the simulated cases take place and the assessors score the students. Each clinical station has specific elements and materials that must be specified and checked prior to the test10. Each station is identified with a number which should be easy to locate. Access to the next station should be quick and easy to avoid loss of time or concentration12. Therefore, a circuit of correlating stations optimises resources and time (Fig. 1). It is important to establish and maintain a bank of quality-assured, peer-reviewed OSCE stations that can be used in several examination sessions13.

Figure 1.Example of an Objective Structured Clinical Examination (OSCE) circuit consisting of 20 clinical stations (STN) and 3 rest stations. Each student is given a number from 1 to 23 that corresponds to their first station and at the end of the allotted time (usually 10 min), they must move on to the next station. When a student gets to a station with a chair, they take a rest during that turn. This OSCE circuit was used at a Spanish university in 2019. Note that each station is dedicated to a different medical speciality and number 13 corresponded to radiology.

(0.41MB). - d

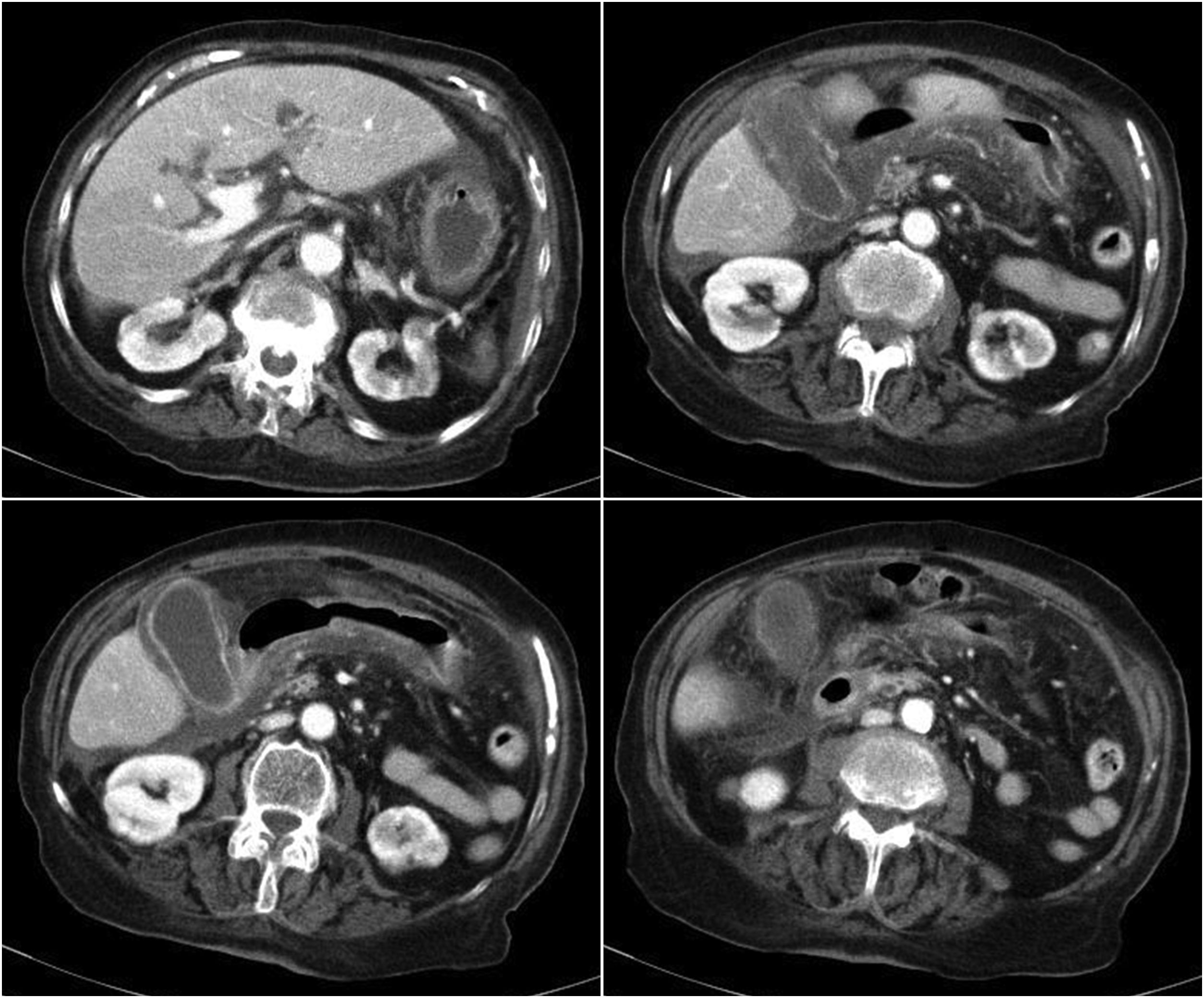

The cases. These are the primary contents in OSCEs and the basis for the different clinical stations. Each case usually consists of a description that includes patient data, medical history and physical examination findings, clinical or imaging tests and examinations. It also features the different questions to be addressed (Fig. 2), and a checklist to assess the required competencies in an objective, uniform and standardised manner (Table 3). As far as possible, the cases should comply with the following characteristics: 1) prevalence: they should address common diseases; 2) comprehensiveness: several skills should be tested in each case; 3) feasibility: the station design has to be realistic given the available resources; and 4) evaluation facilitation, by means of a checklist. It would also be interesting to include the addition of simulation manikins in OSCE patient cases to practise imaging-related skills (for example, pleural markings, image-guided biopsies or ultrasound).

Figure 2. Example of images used in a clinical case in an OSCE station, with the following brief: "55-year-old woman, admitted to the emergency department for dull pain in the right hypochondrium, nausea, vomiting and fever of 38.5 °C. Laboratory tests: hyperbilirubinaemia due to direct bilirubin, elevated transaminases, CRP 89.2, leukocytosis with left shift. An imaging test is performed. You have 10 min to: establish a presumptive clinical judgement, describe the technique and radiological findings and prescribe treatment".

Table 3. Example of checklist for the clinical case in Fig. 2.

| Question | Answer | Score |
|---|---|---|
| Clinical judgement | | |
| 1. Acute cholecystitis | Y | 3 |
| Radiological findings | | |
| 2. Axial CT image of the abdomen | Y | 1 |
| 3. IV contrast venous phase | Y | 2 |
| 4. Enlarged gallbladder | Y | 1 |
| 5. Increased enhancement and thickening of the gallbladder wall | Y | 2 |
| 6. Pericholecystic fat stranding | Y | 1 |
| 7. Intra-abdominal free fluid | Y | 1 |
| 8. Intrahepatic biliary dilatation | Y | 1 |
| Treatment | | |
| 9. Emergency surgery | Y | 1 |
| 10. In-hospital antibiotics | Y | 1 |

e) Selection and training of standardised patients and assessors. Standardised patients are usually actors trained to simulate a patient according to the case scenario: their medical history, physical examination, attitudes and emotional characteristics. They always provide the same information, acting in the same way in front of all students. The assessors must be qualified and experienced personnel, both in the clinical situation to be evaluated and in the way the OSCE functions and develops. Prior to the examination, they must familiarise themselves with the case to be examined, the different points to be completed by the student, the evaluation criteria and the general rules of the test.
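Two of the mechanics described above lend themselves to a short sketch: the rotation of students around the circuit in Fig. 1 and the weighted checklist in Table 3. The Python below is illustrative only and is not taken from any OSCE software. The names `station_at`, `ChecklistItem`, `CHECKLIST` and `score` are hypothetical, and the rotation rule (each student advances one position per turn and wraps around after position 23) is an assumption based on the figure legend.

```python
from dataclasses import dataclass


def station_at(student: int, turn: int, n_positions: int = 23) -> int:
    """Position (1-based) occupied by a student during a given turn (0-based).

    Assumption: student k starts at position k and moves to the next
    position each turn, wrapping from the last position back to 1.
    """
    return (student - 1 + turn) % n_positions + 1


@dataclass(frozen=True)
class ChecklistItem:
    section: str
    text: str
    points: int


# Items and weights copied from Table 3; list order matches item numbers 1-10.
CHECKLIST = [
    ChecklistItem("Clinical judgement", "Acute cholecystitis", 3),
    ChecklistItem("Radiological findings", "Axial CT image of the abdomen", 1),
    ChecklistItem("Radiological findings", "IV contrast venous phase", 2),
    ChecklistItem("Radiological findings", "Enlarged gallbladder", 1),
    ChecklistItem("Radiological findings", "Increased enhancement and thickening of the gallbladder wall", 2),
    ChecklistItem("Radiological findings", "Pericholecystic fat stranding", 1),
    ChecklistItem("Radiological findings", "Intra-abdominal free fluid", 1),
    ChecklistItem("Radiological findings", "Intrahepatic biliary dilatation", 1),
    ChecklistItem("Treatment", "Emergency surgery", 1),
    ChecklistItem("Treatment", "In-hospital antibiotics", 1),
]


def score(achieved: set[int]) -> tuple[int, int]:
    """Return (points obtained, maximum points) for the ticked item numbers."""
    maximum = sum(item.points for item in CHECKLIST)
    obtained = sum(
        item.points
        for number, item in enumerate(CHECKLIST, start=1)
        if number in achieved
    )
    return obtained, maximum


if __name__ == "__main__":
    # Student 13 starts at the radiology station and is at position 14 next turn.
    print(station_at(13, 0), station_at(13, 1))
    # A student credited with items 1, 2 and 4 of the checklist.
    print(score({1, 2, 4}))
```

Under these assumptions, a full rotation of 23 positions with 10-minute turns takes 230 minutes, and a student credited with items 1, 2 and 4 obtains 5 of the 14 available points. Because every assessor ticks the same weighted items, two students giving the same answers receive the same score, which is the sense in which the checklist makes the station objective.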

Summative assessment is the traditional assessment method that aims to measure learning outcomes to decide whether someone passes a subject or is awarded a qualification or accreditation. Formative assessment evaluates students' progress and knowledge, and is used to diagnose students' difficulties, providing information to improve teaching and learning14. OSCEs are used worldwide for formative and summative assessment in health profession training4,15,16, although the format was originally developed as a summative assessment method. It has been suggested that summative OSCEs can have a negative impact on learning because of stress about the final mark, and can encourage the use of tricks and cheating to obtain better test scores, to the detriment of learning. Typically, students who participate in a formative OSCE value the opportunity as a learning experience, although they sometimes fail to identify its formative nature17.

Formative OSCEs often include immediate feedback from standardised patients or provide students with access to performance data, scores, checklists and video recordings to supplement feedback18. This feedback shows the student their strengths and weaknesses in the different competences assessed, thereby encouraging continuous improvement even after the test has been completed. Therefore, formative OSCEs can also be very useful in postgraduate training17,19.

Radiology in OSCEs

Radiological imaging is often present in OSCEs, sometimes as part of a clinical station for another speciality, and sometimes as a radiology station in its own right. The number of radiology stations included in OSCEs has been found to vary greatly from one university to the next. While some universities do not include any, a study based on universities in Scotland found that on average, one station is included in each OSCE7. Radiology stations included in multidisciplinary OSCEs allow teaching staff to assess clinical competencies following teaching activities on conventional radiography20, mammography21, computed tomography, magnetic resonance imaging or ultrasound22,23.

The inclusion of radiological imaging in OSCEs demonstrates its importance in medical student training. Radiologists should be involved in OSCE decision-making committees to ensure that radiology stations are included and that test objectives, contents and processes are properly defined.

OSCE radiology stations often explore interpretative skills. This generally includes a clinical case where a given imaging technique is presented and the student has to answer questions related to their understanding of the essential findings, their interpretation and clinical judgement24.

A radiology station in an OSCE should test the following criteria: a) a good understanding of the clinical history and in what contexts imaging tests are indicated; b) a good description of the radiological findings and technique used; c) a differential diagnosis; and d) diagnosis and treatment and next steps to be taken given the disease in question. Research has shown that medical students who are trained in these types of OSCE stations have a better understanding of various diseases, a better understanding of anatomy and physiology when applied to the real-life patient context, and an improved understanding of therapeutic management, surgical planning and the radiology-pathology correlation of various diseases25. Another advantage of including radiology stations in OSCEs is that they evaluate how effective radiology teaching is when applied to real clinical cases, through clinical contexts that emulate reality. This situation teaches students how to discern when it is appropriate to request radiological tests and which technique is the most appropriate given the presumptive diagnosis, thus avoiding unnecessary exposure to ionising radiation.

There is another type of radiology station that assesses a specific technical skill. It might evaluate how well an ultrasound scan is performed22,23,26, or it might assess ethical, interpersonal or communication skills with patients (for example, managing differences of opinion between colleagues or informing the patient or family member of the diagnosis obtained by an imaging test)21,27. An example of an OSCE radiology station that assesses technical skills is taken from a university final exam and assesses to what extent students have assimilated the learning objectives in their thoracoabdominal ultrasound course22. Students had to demonstrate their ability to perform an ultrasound examination, correctly capturing the requested anatomical planes. Similarly, in another study,23 students were asked to perform a musculoskeletal ultrasound. The task asked the participants to reproduce three standard sectional planes of the shoulder. Also measured was the time from when the probe touched the shoulder until a still image of the final sectional plane was captured. Stations similar to these would enable the assessment of skills in ultrasound-guided interventions, especially in speciality training.

Radiology stations in OSCEs can assess communication skills, usually focused on explaining to the patient or family members the radiological findings and the recommended radiological follow-ups and therapeutic management25. Standardised patients are essential for this type of OSCE station as they enable the assessment of interpersonal skills in real clinical situations that include: a) reporting a misdiagnosis; b) giving bad news; c) disagreeing with a colleague; d) reporting a missed diagnosis; e) explaining a complication in a procedure; or f) taking patients through the informed consent process and requesting consent27. Lown et al.21 went a step further by designing an interesting experience using two formative OSCE stations to improve the communication skills of radiology specialist trainees by requiring them to inform patients of breast imaging findings. To do so, they involved real patients in the test design and in providing feedback based on their interpreted role and their real-life experiences.

Radiology-specific OSCEs

The OSCE method is useful for testing the assimilation of clinical and radiological knowledge24. The OSCE model is being widely used, both at undergraduate and postgraduate level, to assess competencies in different medical specialities28–30. Radiology-specific OSCEs have been used with medical students1,24, diagnostic radiology trainees and radiography students31,32.

Morag et al.24 carried out an OSCE during a compulsory radiology rotation, in which 122 students participated. It comprised five clinical cases. They concluded that OSCEs can detect deficiencies in individuals and groups better than traditional assessments and can also provide guidance for remediation. Staziaki et al.1 set up an OSCE which was held at the end of an elective radiology rotation, in which 184 medical students participated. It included nine conventional radiology stations. They concluded that radiology OSCEs are objective, structured, reproducible and inexpensive. They also highlighted the need for assessor training prior to the test so their scores would correlate better. Hofer et al.26 set up and validated an abdominal ultrasound-specific OSCE for medical students with between 11 and 14 stations. The validation of the included items meant that the same design could also be used with diagnostic radiology trainees with only a few simple modifications such as the cut-off values and the choice of cases33.

Having advanced students assess lower-year students has recently been explored, in a radiology OSCE involving minimal participation from teaching staff, based on peer assessment concepts32. We found the OSCE method to be a valuable tool that helps students develop evaluative judgement and, at the same time, provides an authentic and immersive learning experience. At postgraduate level, at UC Chile, formative OSCEs have been used to evaluate the competencies of diagnostic radiology trainees prior to them working independently during radiology shifts, noting that the OSCE model reveals curricular deficits, fosters feedback and affects future designs of speciality training programmes34,35. Now may be the time to introduce OSCEs as practical radiology tests in both undergraduate courses and specialist training. This will require several collaborative measures10, such as: a) the involvement of the radiology academic community and practitioners from leading educational institutions; b) the organisation of workshops and meetings to transmit OSCE principles; and c) the guarantee that it will be approved, certified and signed off prior to its use.

Virtual OSCEs

The COVID-19 pandemic has revolutionised online learning in higher education. Restrictions on physical contact and attendance have led to technological developments and accustomed lecturers and students to using online resources36. During lockdown in 2020, OSCEs were implemented virtually and accessed online as solutions to these restrictions37–39.

In Spain, the CNDM coordinated a virtual OSCE, which covered the following competencies: history taking, examination, clinical judgement, ethical aspects, interprofessional relations, and disease prevention and health promotion. No technical or communication skills were assessed39. This virtual OSCE consisted of 10 stations in gynaecology, paediatrics, psychiatry, surgery and traumatology, medicine and general practice. Twenty-one Spanish medical schools were involved, with the participation of 3479 sixth-year students, through each university's teaching platform (Moodle, Sakai, Blackboard or the Practicum-Script® Foundation's platform). The students completed a satisfaction survey after the test, in which 52% indicated that they had experienced a considerable level of stress before the test. Nevertheless, the majority rated as satisfactory the information received ahead of the test (70%), the organisation on the day of the test (83%), the prior knowledge acquired (88%) and the type of medical problems presented (76%). Seventy-five percent considered the test to be a good learning experience. This innovative experience brought together different medical schools to prepare a joint OSCE that assessed a large number of required competencies and demonstrated that the virtual version of this assessment model can be useful. Moreover, this virtual OSCE resembles Step 3 of the United States of America (US) medical licensing exam (Computer-based Case Simulation), and this—together with the face-to-face OSCE—could help Spain and the US to recognise each other's degrees in the future39. In June 2021, a virtual OSCE was repeated at national level, with similar characteristics to that of 2020. In 2022, a hybrid experience was carried out: participants from across the whole of Spain simultaneously accessed an online exam with 10 identical virtual stations while the 10 on-site stations were developed at each university in the classic format.

OSCEs in three-dimensional virtual environments

Virtual OSCEs have been found to be enjoyable, interactive and learner-friendly. They are also cost-effective in terms of staffing and resource requirements, and eliminate the need for large venues10. The drawbacks are that students cannot demonstrate their hands-on or communication skills with standardised patients in a live setting. Twelve tips for implementing a successful online OSCE have been proposed40 and are listed in Table 4.

Table 4. Tips for implementing a successful virtual OSCE.

| No. | Tip |
|---|---|
| 1 | Think about the practical aspects of the test: what skills are to be assessed? What are the objectives? What stations should be included and excluded? What expectations do the students and teachers have? What resources are available? |
| 2 | Choose a platform. There are many virtual platforms, but the best option is the one that teachers and students are most familiar with. |
| 3 | Choose the hosts (administrators) carefully. The OSCE largely depends on them to guide the students and examiners, and provide good digital and IT support throughout the process. |
| 4 | Modify selected OSCE stations to adapt them to the online setting. The virtual stations should simulate the in-person experience as closely as possible, especially when assessing practical skills. |
| 5 | Creativity plays an important role in virtual OSCEs. For example, in order to assess stitching, the student could video themselves performing the technique with material they have been sent. |
| 6 | Do not disclose the contents of the OSCE prior to the examination. Confidentiality of the examination contents is essential. |
| 7 | The virtual OSCE requires extra preparation. It is essential that students and teachers are familiar with the online platform where the OSCE will take place. |
| 8 | Remain student-focused. When teachers have multiple tasks to complete at the same time, they may not fully focus on how the exam is going, so record everything to allow accurate evaluation afterwards. |
| 9 | The student's screen should display the information as clearly as possible. The student should be able to easily read the instructions for each station, the additional documents, the images provided, and the questions to be answered. |
| 10 | Carry out a full mock virtual OSCE the day before the exam. It is essential to test the whole circuit and check that the connectivity of the hosts and teachers is working properly. |
| 11 | Use an external private communication channel between the OSCE organisers and teachers to solve any problems during the exam. |
| 12 | Encourage detailed feedback from all staff involved in the examination so any errors or mistakes can be addressed and improvements made for the future. |

Virtual worlds, also called immersive environments or the metaverse, are three-dimensional computer-generated virtual spaces where people interact with each other remotely. Today's medical students use technology and virtual games for fun and social interaction, so using them as a teaching method could be an effective way to engage students in their learning41. The drawbacks include occasional technical limitations (processor, graphics card or data transmission over the internet), the costs of maintaining the virtual space, and the amount of time teachers need to dedicate to preparing content.

Virtual clinical simulation environments have been created to develop various competencies, such as medical history taking for virtual patients42, solving clinical situations on a respiratory ward43, practising cardiopulmonary resuscitation44 or improving communication skills with patients45. These virtual OSCEs enable students to interact with a scenario through their avatar in a given clinical context, for example, domestic injuries among elderly patients46, or communicate with another avatar representing a standardised patient47, thus providing a practical and appropriate alternative to traditional OSCEs.

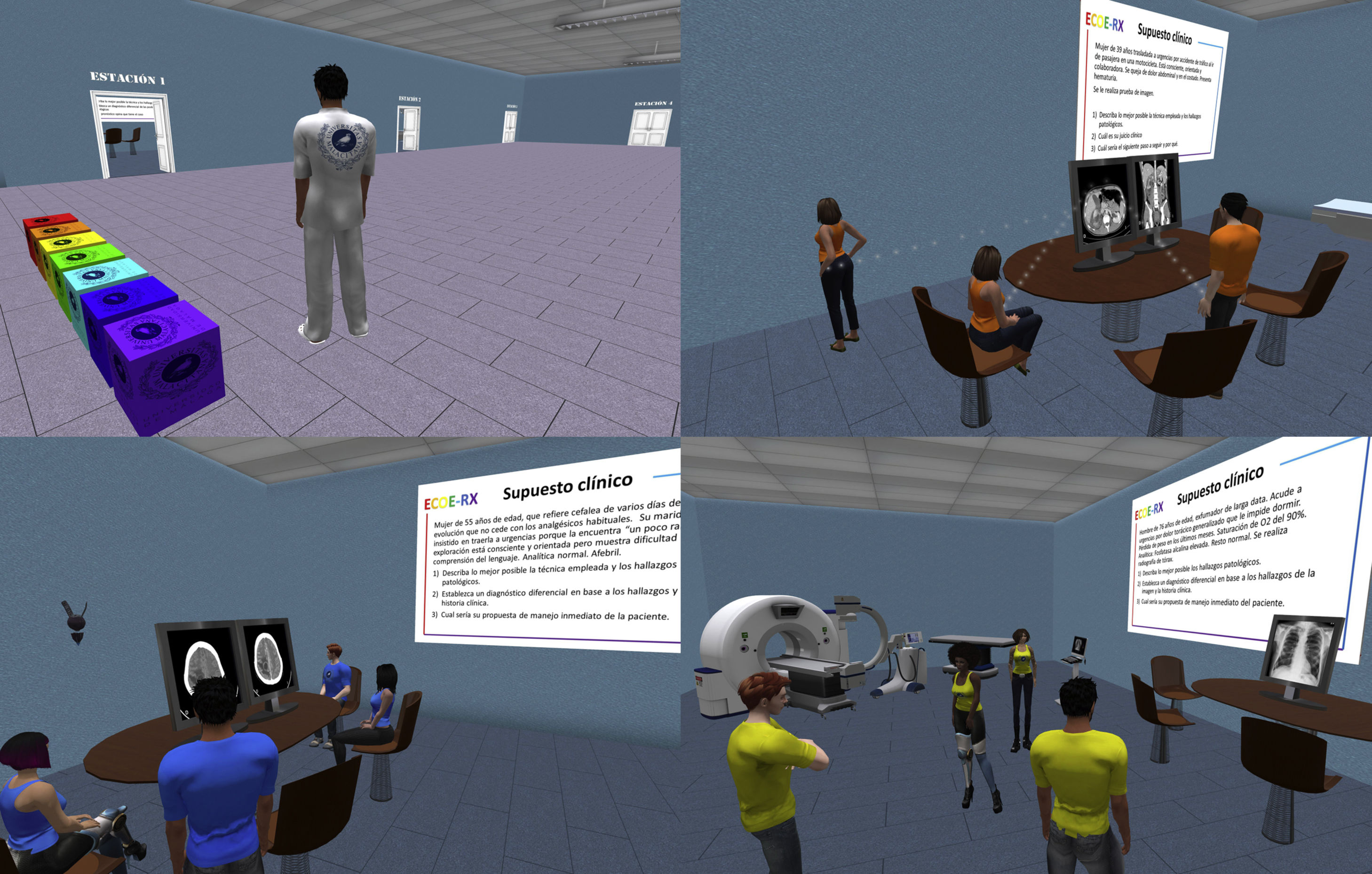

One of the most widely used virtual worlds for teaching healthcare professionals is Second Life®48. It has proven to be a useful teaching and learning tool in both live and self-paced online activities for teaching radiology49. The main advantages are the remote access capability, the strong impression of being in person, the ease of access, and the fact that it is free of charge. It has been described by students in various projects as a playful, fun, attractive and interesting learning tool50–52. These same advantages of Second Life® make it a useful digital platform for both summative and formative OSCEs, as it enables the design and creation of different scenarios to practise decision-making in clinical cases53 and to interact with virtual standardised patients47. A recent virtual teaching experience on emergency radiology required sixth-year medical students to solve emergency radiology cases in groups of three or four in seven virtual OSCE stations (Fig. 3). The students expressed strong acceptance and interest in this system, highly valuing the setting, the OSCE cases and the formative value of the experience54. It would be interesting to design and run radiology OSCEs in virtual environments, both at university level and for speciality training, as this would optimise resources and enable multicentre studies to be carried out.

Figure 3. Scenes from the ECOE-RX undergraduate project. You can see the entrance hall with doors leading to the different rooms hosting the OSCE stations and several groups of students working on the emergency radiology cases at the corresponding stations. Each station depicts a scenario that is typical of a radiology unit. The clinical situations are described on posters attached to each room’s wall and there is a meeting table with one or two monitors displaying images of the case.

Virtual reality, using immersive glasses and hand-held haptic devices (which provide force feedback to the subject manipulating them), can be used to assess manual skills. In vascular interventional radiology training, virtual reality has been used to evaluate how well students perform the Seldinger technique, the vascular access procedure, angiography and angioplasty. It has demonstrated improvements in the skills of radiology speciality trainees, albeit with certain ergonomic limitations55.

Conclusions

The OSCE is a structured, uniform, systematic, objective and standardised assessment model. It is being increasingly used in undergraduate medical training at global level due to its proven effectiveness in assessing different skills and competencies among students. It is important to include radiology OSCE stations in university assessments so that radiology is accorded the same importance in the examination as it has in the clinical setting. The OSCE is an assessment technique that can, and should, be incorporated into undergraduate radiology training, radiology speciality training and continuous professional development. Virtual OSCEs enable online assessment using two-dimensional platforms, albeit with certain limitations. Virtual reality technology makes it possible to simulate a variety of three-dimensional scenarios for OSCE stations. It is worth exploring these resources in the field of radiology, as recent experiences have been very well received, with trainees expressing high levels of interest and motivation.

Authors’ contribution

1. Research coordinators: AVPB and FSP.
2. Development of study concept: AVPB and FSP.
3. Study design: AVPB and FSP.
4. Literature search: AVPB and FSP.
5. Article authors: AVPB and FSP.
6. Critical review of the manuscript with relevant contributions: AVPB and FSP.
7. Approval of the final version: AVPB and FSP.

This research has not received funding support from public sector agencies, the business sector or any non-profit organisations.

Conflicts of interest

The authors declare that they have no conflicts of interest.