Evaluation is a means of significantly and rigorously improving the educational process. Competence evaluation should therefore make it possible to assess the complex activity of medical care and to improve the training process. This applies to the evaluation of clinical–surgical competences.

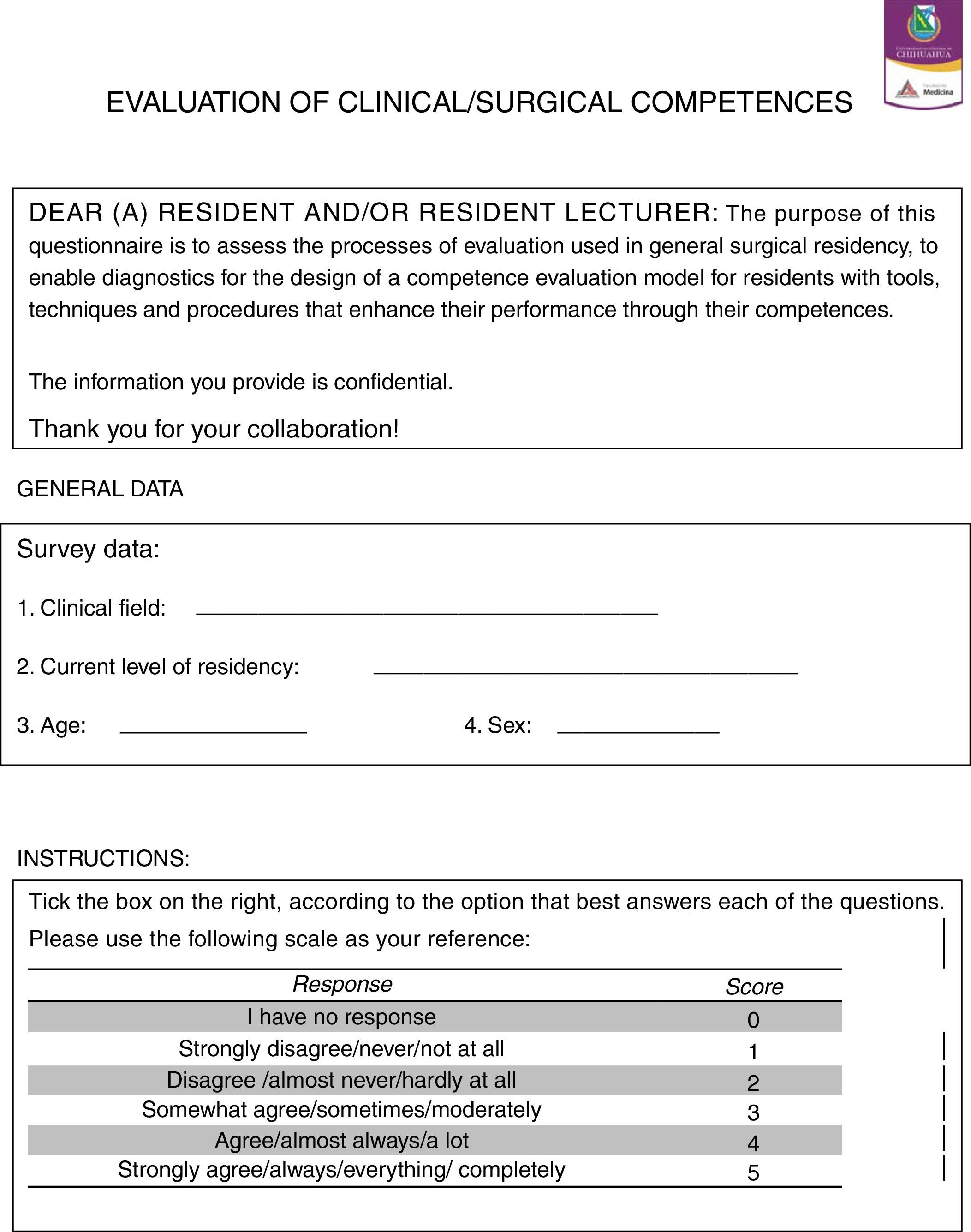

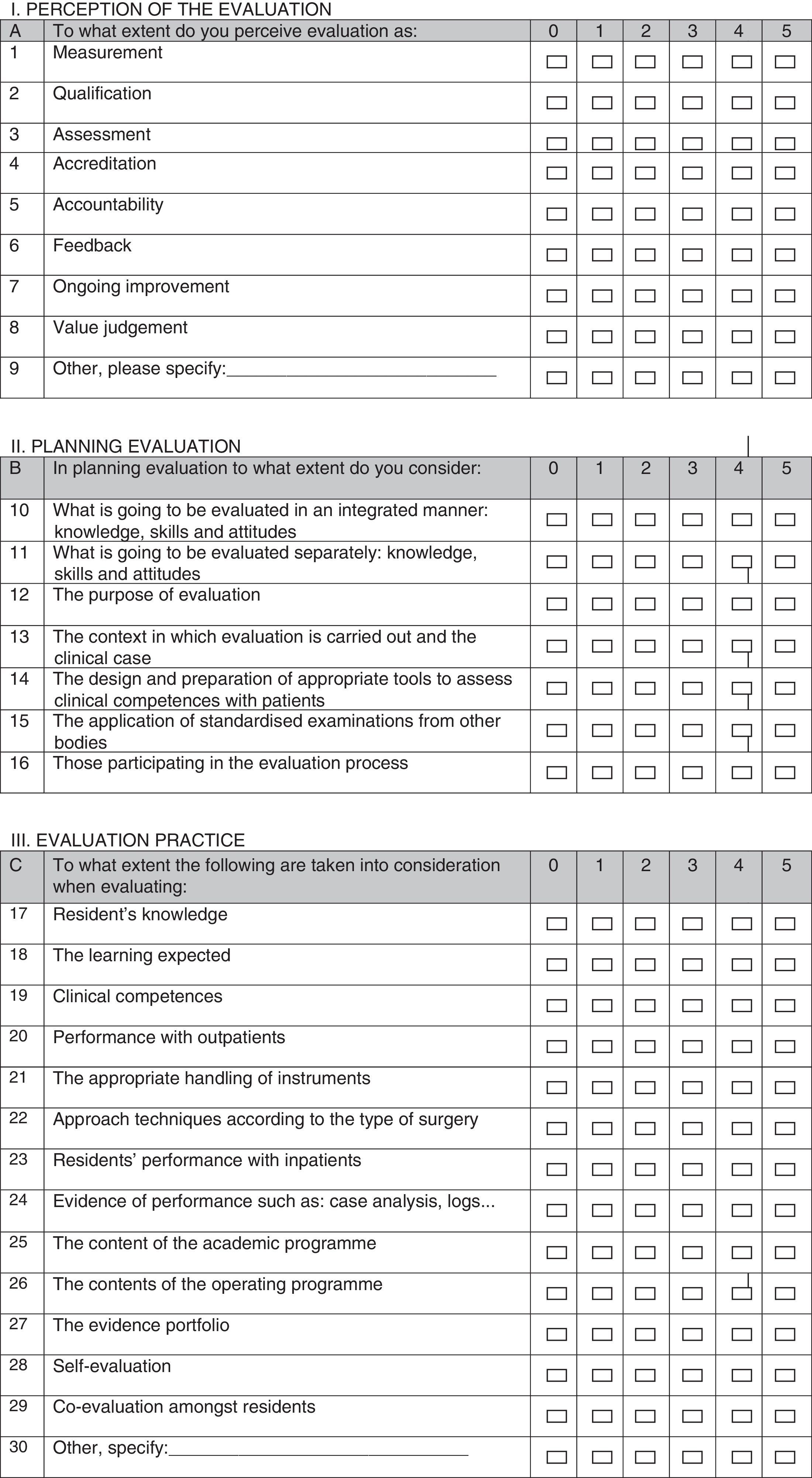

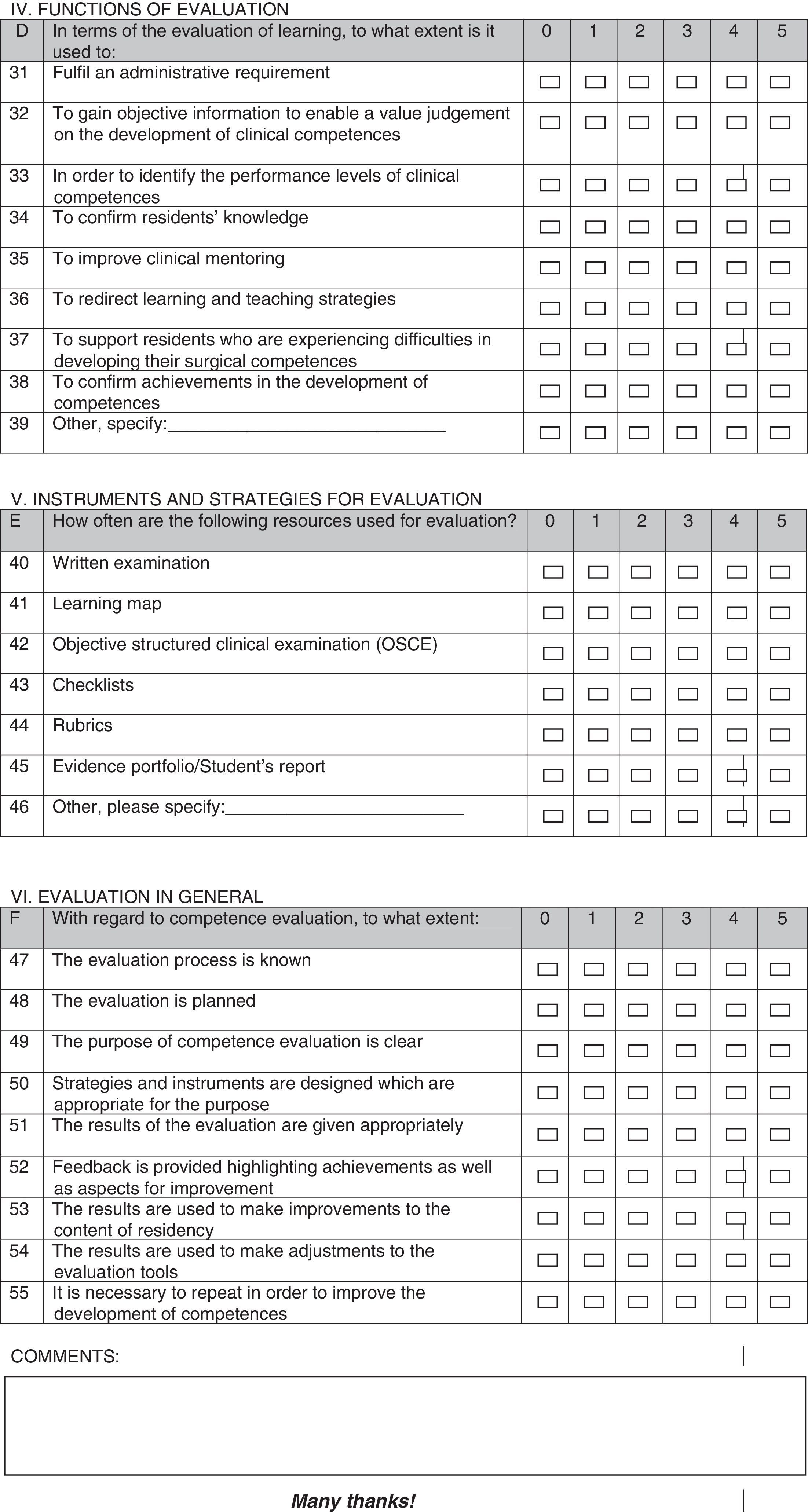

Materials and methods

A cross-sectional study was designed to measure knowledge about the evaluation of clinical–surgical competences in the General Surgery residency programme at the Faculty of Medicine, Universidad Autónoma de Chihuahua (UACH). A 55-item questionnaire divided into six sections (perception, planning, practice, function, instruments and strategies, and overall evaluation) with a six-level Likert scale was used. Descriptive, correlation and comparative analyses were performed, with a significance level of 0.001.

Results

In both groups, evaluation was perceived mainly as a qualification. Among the tools, the best known was the written examination. As regards function, evaluation was considered mainly an administrative requirement. In the correlation analysis, the perception of evaluation as a qualification was significantly associated with measurement, assessment and accreditation. The comparison between residents and staff surgeons showed a significant difference in the perception of evaluation as a measurement of knowledge (Student's t test: p=0.04).

Conclusion

The results provide information on how the evaluation of clinical–surgical competences is conceived: as a measure of learning achievement for a socially required certification. There is confusion regarding the perception of evaluation, its functions, goals and scope as a benefit for those evaluated.

Competence evaluation is a systematic and rigorous process that assesses the complex activity of education. It is essential to diagnose the existing knowledge about the process of evaluating competences in medical practice, as a contribution to improving the training process.

Material and methods

A descriptive, cross-sectional study measuring knowledge about the evaluation of clinical–surgical competences in the Surgery programme of the Faculty of Medicine, Universidad Autónoma de Chihuahua (UACH), by means of a 55-item questionnaire in 6 sections (perception, planning, practice, function, instruments and strategies, and evaluation in general) with a Likert scale. The data were processed through descriptive, correlation and comparative analyses, with a significance level of 0.001.

Results

Residents and staff surgeons perceive evaluation mainly as a qualification. Among the instruments, the best known was the written examination. The function of evaluation was considered mainly an administrative requirement. In the correlation analysis, evaluation as a qualification was significantly related to measurement, assessment and accreditation. Comparing residents and staff surgeons, we found significant differences in the perception of evaluation as a measurement of knowledge (Student's t test, p=0.04).

Conclusion

The results provide information on how the evaluation of clinical–surgical competences is conceived: as a measurement of learning achievement for a socially required certification. There is confusion regarding the perception of evaluation, its functions, goals and scope as a benefit for the person evaluated.

Through competence-based education, today's universities promote comprehensive training that combines scientific and human education and fosters intellectual, procedural and attitudinal development for solving scientific, technological and social problems. This enables students to enter the workforce and adapt to social changes. In this model it is not sufficient to consider the elements of training separately; they must be articulated with one another, and students are evaluated on their performance rather than on theory.1

Evaluation as a learning strategy is a means of improving the educational process. This implies no longer seeing evaluation as a tool that assesses what students know about specific content in order to decide whether they pass or repeat a course, and instead viewing it as part of training: a process that enhances learning and helps to improve academic and professional performance.2

Clinical training lies at the heart of medical education. Clinical learning is the core of professional development and has several strengths: (1) it focuses on real problems within the context of professional practice; (2) students are motivated by its relevance and by their active participation; (3) professional thinking, behaviour and attitudes are evaluated by the teacher; and (4) it is the only environment in which skills and abilities (taking clinical histories, physical examination, procedures, clinical reasoning, decision-making, empathy and professionalism) can be demonstrated and learned as a whole, making it possible to assess the different competences involved in the complex activity of medical care.3

The same applies to surgical teaching. Residents learn surgery by putting known and accepted operative techniques into practice, through skills and abilities that they acquire and perfect during their training period and that are also evaluated by the teacher. This mediation helps residents to apply strategies for seeking, processing and applying knowledge to solve clinical surgical problems, using various means, including information and communication technologies.4

Therefore, the evaluation of competences should be designed to assess knowledge, skills and abilities, and judgement in clinical decision-making in a specific domain.5

A variety of evaluation tools is required to examine surgical skills, and these tools are applied in settings where no ideal model exists that truly shows whether a surgeon knows how to operate.6

The Consejo Mexicano de Cirugía General (Mexican Council of General Surgery) itself acknowledges that for many years it has based its certification on written and oral examinations. This calls into question whether accredited surgeons do indeed possess true surgical skills, and whether the country's training systems are appropriate.7 A method for evaluating the competences specific to the speciality of general surgery therefore needs to be developed.

An essential step prior to creating this method is to diagnose the existing knowledge of the process for evaluating clinical/surgical competences in a general surgery residency programme, that is, to establish what staff surgeons and residents know about how these skills are evaluated at postgraduate level. This approach was built around six core ideas: (1) what evaluation is; (2) how to plan it; (3) its practice; (4) the functions it covers; (5) instruments and strategies; and (6) evaluation of these competences in general.

Material and methods

A descriptive, cross-sectional study was performed to measure the knowledge of staff surgeons and residents about the evaluation of clinical/surgical skills in the Surgery Speciality programme of the Medical Faculty of the Universidad Autónoma de Chihuahua (UACH). A 55-item questionnaire (Annexe 1) was used, divided into 6 sections (perception, planning, practice, function, instruments and strategies, and evaluation in general) and scored on a 6-level Likert scale (0=no response; 1=strongly disagree; 2=disagree; 3=somewhat agree; 4=agree; 5=strongly agree). The questionnaire had previously been validated in a pilot group (n=12). Participation was voluntary and anonymous, and the study was authorised by the Research Ethics Committee of the Hospital General de Chihuahua Salvador Zubirán Anchondo.
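On this scale, code 0 means "no response" rather than a level of agreement, so how it is handled changes every item mean reported in the Results. The article does not say how 0 was treated. The Python sketch below is an illustration only: it computes an item mean under the assumption that 0 is treated as missing, and shows how the result differs if 0 is counted as a score. The names `LIKERT_CODES` and `item_mean` are hypothetical.

```python
from statistics import mean

# Hypothetical coding of the 6-level scale described in the text.
LIKERT_CODES = {
    0: "no response",
    1: "strongly disagree",
    2: "disagree",
    3: "somewhat agree",
    4: "agree",
    5: "strongly agree",
}


def item_mean(responses, exclude_no_response=True):
    """Mean score of one questionnaire item.

    ASSUMPTION: by default, code 0 ("no response") is treated as missing
    rather than as the lowest agreement level; the article does not state
    how 0 was handled when the means were computed.
    """
    for code in responses:
        if code not in LIKERT_CODES:
            raise ValueError(f"invalid Likert code: {code}")
    scores = [c for c in responses if c != 0] if exclude_no_response else list(responses)
    if not scores:
        return None  # nobody gave a scored answer to this item
    return mean(scores)


# Illustrative responses only, not data from the study.
answers = [4, 3, 0, 5, 2]
print(item_mean(answers))         # 3.5 (0 excluded)
print(item_mean(answers, False))  # 2.8 (0 counted as a score)
```

The two printed values show that a single "no response" can shift an item mean by more than half a scale point when samples are small, as they are in this study.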

A pilot version of the tool was prepared in July 2013 and administered to 12 general surgery residents who were not enrolled in the Universidad Autónoma de Chihuahua (UACH) programme, in order to assess the tool. Five questions were found to be inconsistent: 3 needed adjustment, and 2 that proved inappropriate for the purpose of the study were removed.

The survey was administered from 20 August to 13 September 2013, using the corrected and validated tool, to 1st- to 4th-year general surgery residents and to staff general surgeons in the general surgery programme endorsed by the Universidad Autónoma de Chihuahua, at the programme's main hospitals (Hospital Central del Estado and Hospital General de Chihuahua Salvador Zubirán Anchondo). Respondents comprised 9 first-year residents, second-year residents, 3 third-year residents, 4 fourth-year residents and 13 staff surgeons.

The information from the final study group was processed and analysed with descriptive and inferential statistics at 3 levels (descriptive, correlational and comparative), with significance levels of 0.001–0.05.

Descriptive analysis

At this first level, frequencies, measures of central tendency and measures of variability were used to describe the current situation of the residents and how they manifested their clinical/surgical skills according to their level of training. Normality limits were set at one standard deviation from the mean in order to highlight, from the analysis of the means, the individual variables that behaved as upper atypical values (above normal) or lower atypical values (below normal).
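The normality-limit rule in the descriptive analysis can be sketched as follows. This is a minimal Python illustration, assuming that the limits are the mean of all item means plus or minus one sample standard deviation of those means; the article does not specify whether a sample or population SD was used. The item codes and values are hypothetical, not results from the study.

```python
from statistics import mean, stdev


def flag_atypical(item_means):
    """Label each item mean relative to the mean +/- 1 SD of all item means."""
    values = list(item_means.values())
    centre = mean(values)
    sd = stdev(values)  # ASSUMPTION: sample standard deviation
    lower, upper = centre - sd, centre + sd
    flags = {}
    for item, value in item_means.items():
        if value > upper:
            flags[item] = "upper atypical"
        elif value < lower:
            flags[item] = "lower atypical"
        else:
            flags[item] = "within normal limits"
    return flags


# Hypothetical item means on the 0-5 scale (illustration only).
example_means = {"Q1": 3.0, "Q2": 3.1, "Q3": 2.9, "Q4": 4.5, "Q5": 1.5}
flags = flag_atypical(example_means)
for item, label in flags.items():
    print(item, label)
```

With these values the overall mean is 3.0 and the SD is about 1.06, so only Q4 falls above the upper limit and only Q5 below the lower one.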

Comparative analysis

Differences between the responses of the residents and those of the staff surgeons were tested for significance using Student's t-test.
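As an illustration of the comparative step, the sketch below computes the independent two-sample Student's t statistic with pooled variance, which is the classic form of the test named in the text. It is a minimal Python sketch, not the authors' procedure. The function name `student_t` and the example scores are hypothetical, and the code stops at the t statistic and its degrees of freedom. Obtaining a p-value requires the t distribution with n1 + n2 − 2 degrees of freedom.

```python
from math import sqrt
from statistics import mean, variance


def student_t(group_a, group_b):
    """Independent two-sample Student's t statistic (pooled variance)."""
    n1, n2 = len(group_a), len(group_b)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least 2 observations")
    # Pooled variance: each group's sample variance weighted by its degrees of freedom.
    pooled = ((n1 - 1) * variance(group_a) + (n2 - 1) * variance(group_b)) / (n1 + n2 - 2)
    se = sqrt(pooled * (1 / n1 + 1 / n2))  # standard error of the mean difference
    if se == 0:
        raise ValueError("t is undefined when both groups have zero variance")
    t = (mean(group_a) - mean(group_b)) / se
    return t, n1 + n2 - 2


# Hypothetical item scores for two groups (illustration only).
t, df = student_t([3, 4, 5], [1, 2, 3])
print(round(t, 3), df)
```

If SciPy is available, `scipy.stats.ttest_ind(a, b)` performs the same pooled-variance test by default (`equal_var=True`) and also returns the two-sided p-value.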

Correlational analysis

Relationships between pairs of variables were established using Pearson's correlation coefficient (r).
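Pearson's r is defined for two variables at a time, so relating several questionnaire items means computing r for each pair. The Python sketch below illustrates this: `pearson_r` applies the standard product-moment formula, and `pairwise_r` applies it to every pair of items. Both function names and the item codes P1 to P3 are hypothetical. The scores are made up and are not data from the study.

```python
from itertools import combinations
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson product-moment correlation between two paired sets of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 pairs")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))  # sum of co-deviations
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined when one variable is constant")
    return sxy / sqrt(sxx * syy)


def pairwise_r(items):
    """r for every pair of items; `items` maps an item code to respondents' scores."""
    return {(a, b): pearson_r(items[a], items[b]) for a, b in combinations(items, 2)}


# Hypothetical scores of five respondents on three items (illustration only).
items = {"P1": [1, 2, 3, 4, 5], "P2": [2, 4, 5, 4, 5], "P3": [5, 4, 3, 2, 1]}
for pair, r in pairwise_r(items).items():
    print(pair, round(r, 2))
```

On Python 3.10 or later, `statistics.correlation(x, y)` returns the same coefficient for a single pair.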

Results

The results were organised into 6 sections, according to the areas considered: (1) perception; (2) planning; (3) practice; (4) function; (5) tools and strategies; and (6) evaluation in general.

Regarding perception, both residents and staff surgeons considered evaluation mainly a qualification (A2=3.33) and, to a lesser extent, a value judgement (A8=2.97).

Regarding planning, evaluating knowledge, skills and attitudes in an integrated manner was rated higher (B10=3.33) than the purpose of the evaluation (B12=2.9).

Regarding the practice of evaluation, the residents’ knowledge was considered more (C17=3.53) and the portfolio of evidence less (C27=2.33).

Regarding function, evaluation was considered more an administrative requirement (D31=3.33) and less a source of objective information enabling a value judgement on the development of clinical competences (D33=3).

Regarding tools, the best known was the written examination (E40=3.33), whereas the rubric was less well known (E44=1.7).

Finally, regarding evaluation in general, respondents agreed more that the evaluation process was planned (F48=2.87) and less that repetition is necessary to improve the development of competences (F55=1.93).

The most notable finding of the correlational analysis was that perceiving evaluation as a qualification (A2) was associated with measurement (A1; r=0.64), assessment (A3; r=0.77) and accreditation (A4; r=0.63).

The perception of evaluation as a qualification also correlated significantly with the importance attached to clinical competences in the practice of evaluation (C19; r=0.64) and with identifying the performance levels of clinical competences as a function of evaluation (D33; r=0.68).

Evaluating knowledge, skills and attitudes in an integrated manner (B10) was most strongly associated with: clinical competences (C19; r=0.74), performance with patients in the outpatient clinic (C20; r=0.77), appropriate handling of tools (C21; r=0.71), the resident's performance with hospitalised patients (C23; r=0.75), evidence of performance such as case analyses and logs (C24; r=0.82), self-evaluation (C28; r=0.77), identifying the performance levels of clinical competences (D33; r=0.73) and confirming achievements in the development of competences (D38; r=0.83).

The residents’ knowledge (C17) correlated strongly with expected learning (C18; r=0.84), appropriate handling of tools (C21; r=0.72) and the contents of the operational programme (C26; r=0.75).

Surgical approach techniques according to the type of surgery (C22) correlated with expected learning (C18; r=0.73), performance with patients in the outpatient clinic (C20; r=0.73) and appropriate handling of tools (C21; r=0.72). Meeting an administrative requirement (D31) correlated with measurement (A1; r=0.58) and accountability (A5; r=0.57).

Confirming the residents’ knowledge (D34) correlated with evaluating knowledge, skills and attitudes in an integrated manner (B10; r=0.72), with supporting residents who show difficulties in developing their surgical competences (D37; r=0.70) and with confirming achievements in the development of competences (D38; r=0.72).

The written examination (E40) was correlated with expected learning (C18; r=0.53).

Planning of the evaluation process (F48) correlated with the evaluation process being known (F47; r=0.76) and with feedback being provided that highlights achievements as well as aspects to be improved (F52; r=0.60).

When the opinions of residents and staff surgeons were compared using Student's t-test, a significant difference was found in the perception of evaluation as a measurement (p=0.04); no differences were found in the remaining variables.

Discussion

Educational evaluation, in its broad sense, is a systematic process that assesses the extent to which means, resources and procedures enable institutions to achieve their objectives and goals. It is considered an essential activity to be undertaken before any action aimed at improving quality. This study showed that there is confusion about the perception of evaluation, its functions, goals and scope as a benefit to those being evaluated.

The results obtained provide information on how both residents and staff surgeons perceive the evaluation of clinical/surgical competences. Evaluation was viewed in terms of its social function, as a measurement undertaken only to confirm that learning has been achieved in order to obtain a socially required certification.8 At no point was an ongoing educational function considered, in the sense of identifying shortcomings and suggesting how to overcome them. This was confirmed in the correlational analysis, in which the perception of evaluation as a qualification was associated with measurement, assessment and accreditation. However, the comparative analysis showed a difference between residents and staff surgeons in the perception of evaluation as a measurement, with the staff surgeons giving it greater weight. In other words, the residents did not consider this type of evaluation important for their training, even though both groups agreed in regarding evaluation as a value judgement only to a lesser extent.

Regarding the planning of evaluation, both groups considered only what was to be evaluated in an integrated manner (knowledge, skills and attitudes) to be important, and attached less importance to the purpose or context of evaluation. This points to a considerable lack of knowledge about the importance of planning evaluation.

Regarding practice, evaluation was largely considered a way of identifying knowledge in general, while the portfolio of evidence and co-evaluation were considered less. This again confirms the lack of knowledge about the usefulness of evaluation in planning practice so as to improve the competences of doctors in training.

Regarding the function of evaluation, it was considered important as an administrative requirement and as a way of confirming the residents’ knowledge; however, its function of providing objective information or identifying performance levels was not recognised, and its contribution to the learning process even less so.

Evaluation has 2 functions: (a) a social function (accreditation), i.e., to check the level of achievement of educational goals and to determine and/or confirm specific knowledge gained at the end of a training period, course or cycle, either for promotion (or not) to the next level or for the certification required by society; and (b) a pedagogical function, used to establish how teaching strategies have worked and how students’ learning is progressing, while improving and guiding the teaching and learning process in line with the goals of the educational programme, offering students help when learning problems are detected and redirecting the organisation of content and/or teaching strategies.8

We found that the written examination was the only evaluation tool that residents and staff surgeons knew well. This confirms the lack of knowledge about the integrated evaluation of competences.

Finally, regarding evaluation in general, both groups considered that it enables the process to be planned, but did not consider that repetition could improve competences by identifying weaknesses to be addressed in order to improve learning.

Conclusions

Based on the results obtained, and contrasting them with the literature, we find that overall both the residents and the staff surgeons considered evaluation a qualification system that is achieved by written examination alone and that, in practice, makes it possible to identify their knowledge and to assess their know-how, skills and attitudes in an integrated manner. However, neither group considered evaluation to have a pedagogical function that would make it possible to improve and guide the teaching and learning process towards the goals of the educational programme. We also observed that there was no clear definition of how to evaluate in an integrated manner, covering not only knowledge but also the different skills and abilities that demonstrate the level of competence gained during the training process. This confirms the need to structure a method for the formative and integrated evaluation of general surgical competences.

Conflict of interests

The authors have no conflict of interests to declare.

Please cite this article as: Cervantes-Sánchez CR, Chávez-Vizcarra P, Barragán-Ávila MC, Parra-Acosta H, Herrera-Mendoza RE. Qué y cómo se evalúa la competencia clínico-quirúrgica: perspectiva del adscrito y del residente de cirugía. Cirugía y Cirujanos. 2016;84:301–308.