Systematic reviews are secondary studies that summarise the best scientific evidence available by means of explicit and rigorous methods to identify, select, appraise, analyse and summarise the empirical studies that make it possible to answer specific questions. The aim of this theoretical study is to set out a series of standards and recommendations for the planning, development and reporting of a systematic review in the field of the health sciences. The article first places systematic reviews in the context of evidence-based practice, covering their rise, justification, applicability and differences compared to traditional literature reviews. Secondly, the methodology for their development is set out and guidelines are established for their preparation and writing; the stages of the process and the preparation of a protocol are described, with emphasis on the steps to follow to prepare and report a systematic review. Finally, some additional considerations are set out for their writing and publication in a scientific journal. This guide is aimed at both authors and reviewers of systematic reviews.

Currently, every field of the health sciences promotes the search for scientific evidence on which to base clinical, health and research decisions. In this context, systematic reviews (SR) of scientific evidence are a methodological resource and research tool that allow us to stay informed and up to date without investing large amounts of time and resources. They do so by efficiently integrating the available information (clinical, epidemiological, financial, etc.), which saves time for health professionals and facilitates evidence-based practice.

A SR is a synthesis of the best available evidence that aims to answer specific questions through the explicit and rigorous use of methods to identify, appraise and summarise the most relevant studies. A SR applies scientific research strategies that minimise the bias present in more traditional literature reviews, which do not follow a systematic and explicit method for the search, selection and analysis of information (Fernández-Ríos & Buela-Casal, 2009; Higgins & Green, 2011). Nonetheless, the structure, length and methodological quality of SR are highly variable, which is why standards and guidelines are needed to improve quality during their development and publication.

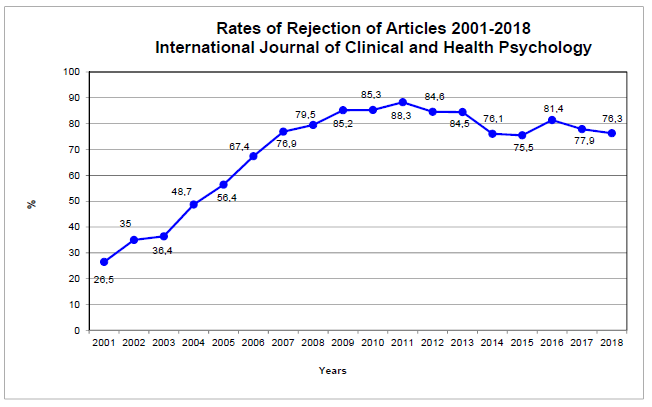

The aim of this theoretical work (Montero & León, 2007) is to briefly present a series of standards and recommendations to develop and write a SR. The purpose is to offer reviewers a structured guide to draw up and publish a SR in a scientific health sciences journal such as the International Journal of Clinical and Health Psychology. Such a guide should reduce the effort required to develop the review to a bare minimum and serve as a reference for readers who consult a SR and want to appraise its validity, applicability and implications for practice and research.

Methodology to develop a systematic review

The preparation of a SR is a complex and iterative process that entails a series of considerations and decisions. With the purpose of minimising the risks of bias of a SR, the methods have to be defined a priori, making the systematic process to be followed for the development of a SR explicit and reproducible.

Guidelines for preparation and writing

As with any empirical study, whose development should be preceded by a clearly defined question, an appropriately formulated problem and some empirical background that justifies it, before embarking on the difficult task of preparing and publishing a SR it is recommended to define the problem to be addressed and the specific aims that will guide the process. Thus, it is important to explore whether the scientific methodology of a SR is the most suitable strategy to respond to the problem at issue, whether there are prior SR on the topic and whether there is still enough uncertainty in this area of knowledge to justify the study.

Currently it is possible to find a SR in virtually any psychology, medicine or nursing journal on some specific topic that may be of interest for evidence-based practice. Organisations and research groups such as the Cochrane Collaboration, the Campbell Collaboration, and the PRISMA Group have developed handbooks and guidelines, broadly accepted and adopted by the scientific community, which guide the preparation and publication of a SR (Botella & Gambara, 2006; Cook & West, 2012; Higgins & Green, 2011; Moher, Liberati, Tetzlaff, Altman, & the PRISMA Group, 2009; Sánchez-Meca, Boruch, Petrosino, & Rosa-Alcázar, 2002; Sánchez-Meca & Botella, 2010; Urrútia & Bonfill, 2010).

Carrying out the protocol and stages of the process to perform a SR

This section reports some methodological considerations and the steps to follow to prepare a SR, considering the format and guidelines used for the preparation and publication of the systematic reviews of the Cochrane Collaboration¹, the Campbell Collaboration², and the PRISMA Statement³.

1. Cochrane Collaboration (www.cochrane.org; www.cochrane.es).

2. Campbell Collaboration (www.campbellcollaboration.org).

3. PRISMA Statement (www.prisma-statement.org).

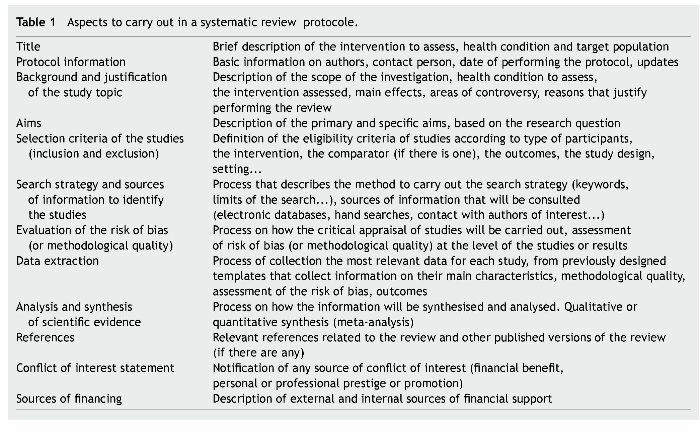

The development of a SR requires prior preparation of a protocol that guides the entire process of the review in an explicit and reproducible way. The review protocol is similar to the protocol of any research study: it reports and justifies the problem under study, the aims, and the methods to follow for data collection and analysis, and it includes the conflict of interest and financial support statement (Table 1). Its development requires the participation and final approval of all reviewers who will take part in the review process (see some examples of protocols for reviews at www.cochrane.com).

It is recommended to comply as much as possible with the initial protocol. Nonetheless, just as the research protocol of an experimental, quasi-experimental or ex post facto study sometimes has to be modified (because of problems in recruiting participants, obtaining data, unexpected events, etc.), it is at times also necessary to modify a review protocol. Given the nature of reviews, it is very important that any modifications (in relation to studies to include or exclude, the type of data analysis to perform, etc.) be made in the original protocol and be explicitly documented in a record of incidents for the review. Prior publication of the protocol is also recommended, with the purpose of reducing the impact of author bias, promoting transparency about the methods and process to follow, and reducing redundant reviews on the topic.

Steps for planning and preparing a systematic review

Step 1: Formulation of the problem

The step prior to a SR is the clear and specific formulation of the research question in accordance with explicit criteria that set out the problem under evaluation (Higgins & Green, 2011; Liberati et al., 2009). The research question determines the structure and scope of the review and, as in any research, must be clearly defined. It includes a series of key components, which should be specified in the protocol and in the method section of the scientific article under "selection criteria for studies".

The components of a clearly framed question follow the PICOS format⁴: description of the participants, interventions, comparisons and outcome measures of the systematic review, in addition to the type of study (design).

⁴ PICOS: P = participants; I = interventions; C = comparisons; O = outcomes; S = study design.

As an example, a well-framed question could be: "In adults with a single or recurrent major depressive episode (participants) what is the efficacy (type of study), in terms of treatment response, recovery, relapses and recurrence (outcome measures), of behavioural activation therapy (intervention) compared to cognitive behavioural therapy (comparator)?"

The description of participants implies the definition of the disorder or condition to assess (in the example, patients with a single or recurrent major depressive episode), adding when relevant other characteristics of the population and data of interest such as age, sex, ethnicity, setting, etc. (in the example, adults). It is important for any limitation or restriction on the type of participants to be justified by scientific evidence. For example, scientific evidence may justify that therapy in adults with depression differs from intervention in children and adolescents. Nonetheless, when there are doubts about the effect of including a population subgroup (e.g., outpatients vs. inpatients), it is probably better to include a broader sample and subsequently analyse, if considered appropriate, to what extent these differences affect the results of the intervention.

The description of the intervention is the second key component of the question and specifies the intervention of interest (pharmacological or psychological treatment, surgery, prevention programme, indication of a diagnostic test, etc.). The third key component is the comparator or comparators, that is, the alternative against which the intervention is compared: a control group that receives placebo, a group that receives usual care, a group on the waiting list, or another group that receives another treatment or active intervention. In our example, behavioural activation therapy is the intervention and cognitive behavioural therapy is the comparator.

The description of the outcomes for the review is the fourth key component of a well-formulated question and refers to the measures (outcomes and endpoints) that the intervention aims to attain. The outcomes should be clearly defined in explicit terms with the purpose of evaluating, for example, whether the intervention relieves or removes symptoms, whether it reduces the number of adverse effects, whether it reduces the number of cases of relapse, etc. Thus, in a review that aims to assess the response to treatment when comparing two psychological interventions to address major depressive disorder we could consider the following specifications: "a reduction of more than 40% on the Beck Depression Questionnaire score and a reduction of 50% on the Beck Hopelessness Scale, being at least in the category "quite improved or highly improved" according to the Clinical Global Impression Change Scale -CGI". In general, the SR should include all published outcomes that may be significant for people who need to make a decision based on the problem formulated in the review.

A final component that is highly relevant when defining the eligibility criteria to respond to the research question is the type of study design that will be selected for the review. It is broadly accepted in the health sciences community that, depending on the type of design, not all studies are equally valid and reliable. In this sense, primary studies based on controlled trials (experimental studies) that involve random assignment of participants to experimental conditions provide the most reliable scientific evidence on the efficacy of an intervention (Nezu & Nezu, 2008). Nonetheless, randomised controlled trials are not always the most suitable type of study to respond to a research question, and not all research topics can be addressed from this methodological viewpoint. If the course of a disease or the effects of an intervention are very obvious, it does not appear necessary or ethical to resort to randomised controlled trials. At times it may be appropriate and necessary to perform a SR of non-experimental studies. For example, to respond to a question on prognosis or aetiology, we probably have to resort to some kind of quasi-experimental or ex post facto study, such as cohort or case-control studies, rather than an experimental study.

It is important to bear in mind that the more restrictive the criteria that define the research question, the more specific and focused the review. However, if many restrictions are added a priori, we could encounter difficulties finding information that answers such a restricted research question. Similarly, if the review is aimed at answering a very restricted research question, it is possible that the results and conclusions obtained cannot be generalised to other fields, populations or alternative forms of the same intervention. The key is attaining a balance between completeness and precision (sensitivity and specificity).

Step 2: Search for information

Once the research question has been suitably defined, the next step is to identify the available scientific evidence that enables answering this question. Locating the studies requires a systematic process that defines a priori the electronic databases to consult, the necessary information sources to identify the studies, verification of lists of references, review of communications and reports of interest, and the hand search for scientific evidence. Together these constitute the search strategy of the SR.

Currently, there are several information sources and electronic resources that may be consulted and that facilitate searching for and retrieving bibliographical references, because they hold a series of publications organised and indexed with keywords. These are generally journals, although they also include books, theses, communications to congresses, health technology evaluation reports, etc.

The computerised bibliographical databases of studies published in indexed journals may be classified as primary or secondary. Primary databases (such as Medline, The Cochrane Controlled Trials Register [Central], PsycInfo, Embase, Web of Knowledge [Web of Science, Science Citation Index, Social Sciences Citation Index, Current Content], Lilacs, Cinahl, Cuiden, Biblioteca Virtual en Salud, Índice Médico Español, among others) mainly provide access to individual studies (although some systematic reviews published in scientific journals may currently also be accessed through them). Secondary databases (The Cochrane Database of Systematic Reviews, Centre for Reviews and Dissemination (2010) [The Database of Abstracts of Reviews of Effects - DARE, The NHS Economic Evaluation Database - NHS EED, The Health Technology Assessment Database - HTA], National Guideline Clearinghouse, SIGN, GuíaSalud, Fisterra, AUnETS, among others) provide access to studies that result from the critical appraisal, synthesis and analysis of individual studies, such as SR, clinical practice guidelines or health technology assessment reports.

Other electronic resources available to compile information are meta search engines (Trip Database, ExcelenciaClínica, etc.), which run the same search in various electronic databases at the same time. Their disadvantage is that very specific searches are not possible; their advantage is that they enable a quick and general search of various electronic databases at once.

In the chapter on efficient search for scientific evidence by Duque (2010) and in the basic handbook for evidence-based mental health care (Moreno, Bordallo, Blanco, & Romero, 2012) we can find a detailed description of most of these electronic resources, some available free of charge on the Internet and others that require access by subscription.

It is recommended that reviewers always consult at least two or three databases, with special consideration of those specific databases that incorporate relevant studies in the field of study (e.g., in the field of mental health, the PsycInfo database is an essential resource for review, as are Cinahl and Cuiden in the field of nursing, etc.).

Nonetheless, these are only some of the electronic resources available for the reviewers; there are other specialised registers (such as the registers of interest groups within the Cochrane Collaboration), sources of specific information by professional category (psychology, nursing, occupational therapy, etc.) (Moreno et al., 2012) and bibliographical resources that may be consulted online (Centre for Reviews and Dissemination - CRD, 2010; Chan, Dennett, Collins, & Topfer, 2006).

The reference lists of relevant articles in the area, together with direct contact with experts and researchers within the scope of the review, are other important sources of information to identify unpublished studies. Hand searching and the grey literature (health technology assessment reports, communications to congresses, doctoral theses, among others) may also be very useful to complete the exhaustive search for scientific evidence. There are also specific databases dedicated to indexing research that has not been published, such as the Conference Papers Index, Dissertation Abstracts and, especially, the System for Information on Grey Literature in Europe (SIGLE).

The preparation of the search strategy (combination of terms) to be performed in the different electronic databases relevant for the review should be explicit and well documented. This basically consists of transferring the basic components of the research question (PICOS) to the language of the databases in which searches will be made, combining descriptors (keywords), operators (that serve to relate the terms - and, or, not, next, near, adj2), truncation symbols (that enable searching for terms with the same root and different termination - *, $) and wild cards (symbols that serve to represent any letter - #, ?). The main electronic databases also enable us to make searches in specific fields (title, abstract, author, journal), in addition to applying limits based on the date of publication, type of study, type of publication, language, age of the population, etc. (Duque, 2010).
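The translation of PICOS components into a Boolean search string can be sketched as follows. This is a minimal illustration, not a validated search strategy: the terms are invented for the depression example, the function names `or_block` and `build_query` are hypothetical, and the `*` truncation symbol and quoting style are assumptions that must be adapted to the syntax of each database.

```python
def or_block(terms):
    """Join the synonyms of one concept with OR, quoting multi-word phrases."""
    quoted = [f'"{term}"' if " " in term else term for term in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(concepts):
    """Combine the concept blocks with AND, so every concept must be present."""
    return " AND ".join(or_block(terms) for terms in concepts.values())


# Hypothetical terms for the depression example; '*' stands for truncation.
concepts = {
    "participants": ["depress*", "major depressive disorder"],
    "intervention": ["behavioural activation", "behavioral activation"],
    "comparator": ["cognitive behavioural therapy", "CBT"],
    "design": ["random*", "controlled trial"],
}

print(build_query(concepts))
```

Grouping synonyms with OR inside each concept favours sensitivity, while joining the concepts with AND favours specificity, which mirrors the balance described for the research question.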

The development of a search strategy is an iterative process in which the terms used will be modified according to the results obtained until a definitive strategy is attained that requires adapting to the structure and indexing inherent to each one of the databases to use. It is possible that after using a search strategy we find an excessive number of references (usually with references irrelevant to the study at issue), for which we recommend using search filters, which are strategies that enable further specification of the characteristics of the references to be recovered, according to the type of studies, type of participants, etc. (Duque, 2010). Some electronic databases already incorporate these filters (Medline, Embase, Cochrane, CRD) and other relevant references collate several filters with different sensitivity and specificity characteristics (Lokker et al., 2010; Wilczynski, McKibbon, & Haynes, 2011).

Although the process followed to obtain scientific evidence is detailed and rigorous, one limitation always present in a SR is the impossibility of retrieving absolutely all the information on a subject. Nonetheless, the search for studies should strive to be complete, sensitive, efficient and unbiased. In this context, we should avoid the usual publication bias, in which numerous factors play a role: works that obtain positive results and statistically significant differences are more likely to be published, are published more quickly once accepted, are usually accepted in higher impact journals and are usually cited more often. To counter this bias, additional effort should be made to locate unpublished and ongoing studies by consulting several sources of information. We should also avoid publication language bias, which consists of limiting searches to just one language (Spanish or English) and thereby excluding potentially relevant studies in other languages, and database coverage bias (e.g., more European references in Embase and more North American references in Medline). Both can be reduced by conducting searches in different databases and extending the search to the different languages that may be relevant for the subject at issue.

Once the references to review have been obtained, we recommend using reference management software such as Reference Manager, EndNote, EndNote Web, ProCite, Zotero, RefWorks, etc., as useful tools to compile, store and format the information identified in the different databases of bibliographical references. Each of these programs has specific characteristics (type of licence, working language, availability of Web or local version, usability, compatible resources, etc.), and reviewers will select one program over another after weighing these pros and cons (Cordón-García, Martín-Rodero, & Alonso-Arévalo, 2009; Duarte-García, 2007).

Step 3: Preselection of references and selection of studies included

The next step in the SR is to preselect potentially relevant references and, subsequently, select the studies that will be included in the review. For this process it may be useful to use a checklist that includes all those characteristics and criteria a study to be included in the SR should comply with. This checklist basically includes the selection criteria reported in the protocol and based on the PICOS format of the research question. The process of preselection and selection adheres to the following systematic phases during this stage of the process:

• Phase 1. Once searches have been conducted in the different information sources, at least two reviewers will independently screen the references by title and abstract to preselect potentially relevant references according to the inclusion criteria specified in the protocol (which normally correspond to the PICOS format of the research question). In case of doubt, it is recommended to be conservative and preselect the study.

• Phase 2. Once the initial screening has finished, the reviewers will compare and reconcile their preselections. Disagreements between them will be resolved by discussion; if there is no consensus, the full text of the reference will be retrieved for subsequent assessment.

• Phase 3. Once the preselection phase has finished, the above methodology will be repeated with the full articles to select and finally include the articles to be appraised, analysed and synthesised in the review. Disagreements between the reviewers will be resolved by discussion; if there is no consensus between them, another independent reviewer will be consulted. If the study complies with all the inclusion criteria it will be an "included study"; otherwise it will be an "excluded study", and it is recommended to record the reason for exclusion at this phase.

It is important for all discussions and agreements between reviewers to be documented in a record of incidents for the review.
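The comparison of two independent screenings in Phases 1 and 2 can be sketched as follows. This is only an illustration under stated assumptions: the reference identifiers and decisions are invented, and the function name `reconcile_screening` is hypothetical rather than part of any screening tool.

```python
def reconcile_screening(reviewer_a, reviewer_b):
    """Compare two independent title/abstract screenings.

    Each argument maps a reference id to True (preselect) or False (reject).
    Returns three lists: agreed preselections, agreed rejections, and
    references on which the reviewers disagree and must discuss.
    """
    if reviewer_a.keys() != reviewer_b.keys():
        raise ValueError("Both reviewers must screen the same references")
    agreed_in, agreed_out, to_discuss = [], [], []
    for ref_id in sorted(reviewer_a):
        a, b = reviewer_a[ref_id], reviewer_b[ref_id]
        if a and b:
            agreed_in.append(ref_id)
        elif not a and not b:
            agreed_out.append(ref_id)
        else:
            to_discuss.append(ref_id)
    return agreed_in, agreed_out, to_discuss


# Hypothetical decisions of two reviewers on four invented references.
first = {"ref01": True, "ref02": False, "ref03": True, "ref04": False}
second = {"ref01": True, "ref02": False, "ref03": False, "ref04": True}

agreed_in, agreed_out, to_discuss = reconcile_screening(first, second)
print("Preselected by both:", agreed_in)
print("Rejected by both:", agreed_out)
print("To discuss (full text if no consensus):", to_discuss)
```

Keeping the disagreements as an explicit list makes it straightforward to copy them into the record of the review together with the outcome of each discussion.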

Step 4: Critical appraisal and assessment of the risk of bias in the studies included

The applicability of the results of a SR, the validity of individual studies and certain design characteristics of a review may affect its interpretation and conclusions.

The process of critical appraisal of a scientific article implies comprehensive reading and detailed analysis of all the information reported in the article. This requires considering at least three relevant aspects: a) the methodological validity of the study, b) assessment of the precision and scope of the results analysis, and c) applicability of the results and study conclusions to our context.

In this context, the quality and validity of the conclusions of a SR depend both on the methodological characteristics of the studies included in the review and on the results obtained, reported and analysed in them. All the efforts made by the authors to prevent the introduction of bias that may affect the interpretation and conclusions of the review are also important. These factors may or may not be within the control of the reviewers, and include aspects such as the possibility of identifying all studies of interest, obtaining all relevant data, and using appropriate methods for the search, selection of studies, data collation and extraction, analysis, etc.

The assessment of risk of bias (or methodological quality) of the studies included in the review implies evaluation both at study level (methodological and design aspects, such as sequence generation, allocation sequence concealment, blinding of participants, blinding of outcome assessment, incomplete outcome data, selective outcome reporting, etc.) and, at times, also at results level (reliability and validity of the data for each specific result given the methods used for their measurement in each individual study) (Higgins et al., 2011; Liberati et al., 2009).

There are different strategies to evaluate the risk of bias, such as the evaluation of individual components or the use of checklists or quality assessment scales, but none of them is free of limitations (García & Yanes, 2010). In this sense, the new guidelines of the Cochrane Collaboration and the PRISMA Statement recommend that a SR consider a series of components for the evaluation of risk of bias: sequence generation (selection bias), allocation sequence concealment (selection bias), blinding of participants and personnel (performance bias), blinding of outcome assessment (detection bias), incomplete outcome data (attrition bias), selective outcome reporting (reporting bias) and other potential sources of bias. They categorically advise against the use of scales for the methodological quality assessment of the studies. Although these criteria were explicitly defined for randomised controlled trials, the authors mention that they could also be useful for other types of study (Higgins et al., 2011; Liberati et al., 2009).

Nonetheless, it is important to note there is a large number of scales and checklists available to evaluate the validity and quality of experimental, quasi-experimental, ex post facto, qualitative studies, etc.; in addition to other secondary studies such as the SR, clinical practice guides, economic evaluations, etc.; that vary in terms of scope, validity, reliability, etc. (García & Yanes, 2010; Jarde, Losilla, & Vives, 2012). The choice of one instrument over another will mainly depend on the type of study (design), but also on the number of studies included and the available resources (in terms of time, number of reviewers...).

Recently, the Canadian Agency for Drugs and Technologies in Health (CADTH) published a report (Bai, Shukla, Bak, & Wells, 2012) that concluded that the following instruments are the most suitable, valid and useful to evaluate the methodological quality of studies: the AMSTAR (Assessment of Multiple Systematic Reviews) scale (2005) for SR, the SIGN 50 (Scottish Intercollegiate Guidelines Network) scale (2004) for randomised controlled trials and observational studies (cohort and case-control studies), and GRADE (Grading of Recommendations Assessment, Development and Evaluation) (2004) to set out levels of scientific evidence.

In any case, it is recommended to combine several methods and explicit criteria for the critical appraisal of the quality and risk of bias in the studies, and that each article in the SR be evaluated by at least two independent evaluators, who will resolve their disagreements among themselves based on the protocol and when necessary, will resort to an independent third reviewer.
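A domain-based assessment can be tabulated without collapsing it into a single quality score, as in the sketch below. The domain names follow the components listed above; the judgement labels "low", "high" and "unclear", the two studies and the function name `summarise_by_domain` are assumptions made for illustration only.

```python
from collections import Counter

DOMAINS = (
    "sequence generation",
    "allocation concealment",
    "blinding of participants and personnel",
    "blinding of outcome assessment",
    "incomplete outcome data",
    "selective outcome reporting",
    "other sources of bias",
)
JUDGEMENTS = ("low", "high", "unclear")  # assumed labels for risk of bias


def summarise_by_domain(assessments):
    """Count judgements per domain across studies, without computing a score."""
    summary = {}
    for domain in DOMAINS:
        counts = Counter(study[domain] for study in assessments.values())
        summary[domain] = {label: counts.get(label, 0) for label in JUDGEMENTS}
    return summary


# Hypothetical consensus judgements for two invented studies.
assessments = {
    "Study A": dict.fromkeys(DOMAINS, "low"),
    "Study B": {
        **dict.fromkeys(DOMAINS, "low"),
        "allocation concealment": "unclear",
        "incomplete outcome data": "high",
    },
}

summary = summarise_by_domain(assessments)
for domain, counts in summary.items():
    print(f"{domain}: {counts}")
```

Reporting the counts per domain keeps each source of bias visible, which is in line with the recommendation against summary quality scales.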

Step 5: Data extraction

The next stage of a SR process entails collection of the most relevant information from each article included. For this phase we recommend using a template previously defined by reviewers that homogeneously collects all the detailed and relevant information for the analysis, synthesis and interpretation of the data.

Although data extraction templates are not the same for all reviews, they usually cover at least the following topics for the studies included: reviewer, author and year of publication, citation, contact details, type and characteristics of the study design (sequence generation, allocation sequence concealment, blinding, etc.), total duration of the study, number and characteristics of the participants, scope of the study, description of the intervention, comparison alternatives, total number of groups, outcomes, follow-up, dropouts during follow-up, main outcomes, conclusions, funding, conflicts of interest, observations, references to other relevant studies, etc. (some examples can be found in the Cochrane Handbook for SR of Interventions Version 5.1.0). For studies excluded during this phase, the reason for exclusion will be recorded (e.g., inadequate randomisation, blinding, or handling of dropouts).

It is recommended that data extraction for each article be performed by at least two independent reviewers, with disagreements resolved through the participation of a third reviewer.
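A predefined template and the comparison of two independent extractions can be sketched as follows. The field list is a reduced, hypothetical subset of the topics mentioned above, and the class `ExtractionRecord`, the function `discrepancies` and all values are invented for illustration.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class ExtractionRecord:
    """Reduced data extraction template for one study and one reviewer."""
    study_id: str
    reviewer: str
    design: str
    n_participants: int
    intervention: str
    comparator: str
    main_outcome: str
    dropouts: Optional[int] = None
    funding: Optional[str] = None


def discrepancies(first, second):
    """Return the names of the fields on which two reviewers' records differ."""
    if first.study_id != second.study_id:
        raise ValueError("Records refer to different studies")
    return [
        f.name
        for f in fields(first)
        if f.name != "reviewer"
        and getattr(first, f.name) != getattr(second, f.name)
    ]


# Hypothetical extractions of the same invented study by two reviewers.
rec_a = ExtractionRecord(
    "study-001", "reviewer 1", "RCT", 120, "behavioural activation",
    "cognitive behavioural therapy", "treatment response", dropouts=14,
)
rec_b = ExtractionRecord(
    "study-001", "reviewer 2", "RCT", 120, "behavioural activation",
    "cognitive behavioural therapy", "treatment response", dropouts=12,
)

print(discrepancies(rec_a, rec_b))  # fields to refer to the third reviewer
```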

Step 6: Analysis and synthesis of the scientific evidence

Once the data have been extracted from all the studies we will proceed with analysis and synthesis. This process entails combining, integrating and summarising the main outcomes of studies included in the review. The aim is to estimate and determine, in the relevant cases, the overall effect of an intervention (compared to another alternative, if applicable); whether the effect is similar or not among the studies; and in the case of differences between them, determining the possible factors that account for the heterogeneity of the outcomes.

When the data from two or more studies are sufficiently homogeneous between themselves and are combined quantitatively making use of a statistical estimator, the synthesis is denominated meta-analysis and when the data cannot be combined by means of a quantitative synthesis, the results are synthesised qualitatively by means of a narrative synthesis.

If the studies are very heterogeneous among themselves, because the study participants are very different from one study to another, the characteristics of the interventions (scope of application, duration, etc.) differ significantly, the outcomes are not similar among studies, or there are differences in the methodological quality of these, the qualitative synthesis is probably more suitable in these cases to explore how the differences between studies influence the efficacy of an intervention. Nonetheless, we should consider that the narrative synthesis uses subjective methods (instead of statistical methods) and it is possible for bias to be introduced if there is inappropriate emphasis in some of the results of a study in detriment of another. In any case, we have to justify the suitability of using one synthesis strategy over another to summarise the results of studies included in the SR (Higgins et al., 2011).
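As an illustration of what a quantitative synthesis involves, the sketch below pools study effects with inverse-variance weights under a fixed-effect model and reports Cochran's Q and the I² statistic as heterogeneity measures. These are standard techniques chosen here for illustration; the text does not prescribe a particular estimator, and the effect sizes, variances and function name `fixed_effect_pool` are invented.

```python
import math


def fixed_effect_pool(effects, variances):
    """Inverse-variance fixed-effect pooling with Cochran's Q and I^2 (%)."""
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("Need matching effects and variances for >= 2 studies")
    weights = [1.0 / v for v in variances]
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, effects)) / total_weight
    se = math.sqrt(1.0 / total_weight)
    # Cochran's Q: weighted squared deviations from the pooled effect.
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    # I^2: share of total variation attributed to heterogeneity, floored at 0.
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return {
        "pooled": pooled,
        "se": se,
        "ci95": (pooled - 1.96 * se, pooled + 1.96 * se),
        "Q": q,
        "I2": i2,
    }


# Invented standardised mean differences and their variances for three studies.
result = fixed_effect_pool([0.30, 0.45, 0.10], [0.04, 0.09, 0.02])
print(f"Pooled effect: {result['pooled']:.3f}")
print(f"95% CI: {result['ci95'][0]:.3f} to {result['ci95'][1]:.3f}")
print(f"Q = {result['Q']:.2f}, I2 = {result['I2']:.1f}%")
```

When heterogeneity is substantial, a random-effects model or a narrative synthesis may be more appropriate than this fixed-effect sketch, and the choice should be justified in the review.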

If the characteristics of the studies allow a meta-analysis, which increases statistical power and precision when estimating the effects of an intervention and the risks of exposure to it, the theoretical study by Botella and Gambara (2006), published in this same journal, offers a detailed description of the prior considerations for its implementation, the stages of its development and the aspects to consider when reporting its results in a journal such as the International Journal of Clinical and Health Psychology.

Step 7: Interpretation of the results

The aim of a SR is to facilitate the decision-making process based on the best available evidence. For a review to actually facilitate this process, it must set out the findings clearly, discuss the scientific evidence reflectively and present the conclusions appropriately.

In this section of the SR we set out all the results important to the review, in line with the previously defined aims. For each outcome, this includes information on the quality, validity and reliability of the scientific evidence, taking into account the limitations of the studies and of the methods followed in the review, so that possible risks of bias are made explicit. We will also consider the applicability of the results, the balance between expected risks and benefits, and the possible costs and general impact of the intervention evaluated.

Final considerations for writing a systematic review

The presentation and publication of a SR in a scientific journal usually adheres to a standard format closely related to the methods applied in its preparation, similar to the format of the protocol that has guided the entire review process. The level of detail in each subsection will be limited by editorial guidelines, but in all cases a minimum amount of information is required to describe the methodology of the process so that the review can be reproduced and updated.

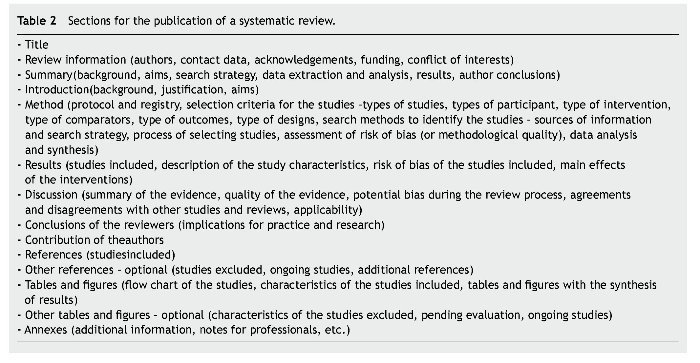

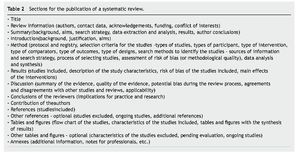

Based on the latest conceptual and methodological progress related to systematic reviews (Higgins et al., 2011; Urrútia & Bonfill, 2010), Table 2 shows the main sections to incorporate into the publication of a SR (with or without meta-analysis), based on the PRISMA Statement (Moher et al., 2009) and the Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 (Higgins et al., 2011). Nonetheless, we should bear in mind that these guidelines are intended as a basis for improving the clarity and transparency of published systematic reviews, and under no circumstances should they become a "shield for the reviewer". This information may be complemented with the earlier article by Botella and Gambara (2006), which gives a detailed description of how to prepare and report a meta-analysis (quantitative synthesis of results), and the article by Hartley (2012), which reports recent techniques for making scientific papers easier to read.

In summary, a SR opens with the title, basic information about the review (authors, contact person, acknowledgements, funding, conflicts of interest), the abstract and a set of keywords. The introduction should then ground the review in the scientific knowledge that justifies it. The background should be clear, concise and structured, including relevant information on the health condition to be explored and a brief description of the intervention, programme or action evaluated, together with its possible effects and impact on the target population. It has to clearly establish the reasons that justify the review and why the research question is relevant, and should close with the aims that will guide the whole review process.

The method section describes what was done to obtain the results and conclusions. We recommend stating in this section whether the review was preceded by a published protocol and noting any variations between the protocol and the final review. The method section should explicitly set out the selection criteria for the studies to be included in the review, in terms of participants, interventions, comparators (if applicable), outcomes and study designs, following the same PICOS format as the research question. Subsequently, we suggest documenting the search process, including the main sources of information consulted, the search date and the full strategy for at least one of the databases used (or, failing this, the combined keywords, if editorial guidelines do not leave room for a fuller description). Any limits applied (search period, language, type of publication, etc.) should also be mentioned. Next, we describe the process followed to select studies from the search results (number of reviewers, participation of other experts, method for resolving discrepancies between them); the strategies used to assess the risk of bias (or methodological quality); the data extraction process and method (whether a data collection form was used, whether the data were extracted independently by more than one author, how disagreements between them were resolved); and the method of analysis and synthesis (qualitative or quantitative).

In the results section it is recommended to begin with a summary of the studies found in the search, using a flow chart as proposed in the PRISMA statement (Liberati et al., 2009) to illustrate the results of the search and the process of selecting studies for inclusion in the review. This is followed by a brief summary of the included studies and the summary tables that contain this information (design, sample size, scope, participants, interventions, outcomes, etc.). In addition, the main reasons for excluding studies from the review should be indicated.
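The numbers reported in such a flow chart must be internally consistent, and a small script can help to check them before drawing the diagram. The sketch below is a simplified illustration: the `FlowCounts` class, the stage names, the exclusion reasons and all the figures are hypothetical, and the actual PRISMA diagram may require further detail (for example, records from additional sources).

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class FlowCounts:
    identified: int                       # records retrieved from all sources
    duplicates_removed: int               # duplicate records discarded
    excluded_at_screening: int            # excluded on title and abstract
    full_text_exclusions: Dict[str, int]  # reason for exclusion -> number of studies

    @property
    def screened(self) -> int:
        return self.identified - self.duplicates_removed

    @property
    def full_text_assessed(self) -> int:
        return self.screened - self.excluded_at_screening

    @property
    def included(self) -> int:
        return self.full_text_assessed - sum(self.full_text_exclusions.values())


# Hypothetical figures for illustration only.
flow = FlowCounts(
    identified=480,
    duplicates_removed=80,
    excluded_at_screening=340,
    full_text_exclusions={
        "ineligible design": 25,
        "ineligible population": 12,
        "no relevant outcome": 8,
    },
)

print(f"screened: {flow.screened}")
print(f"full texts assessed: {flow.full_text_assessed}")
print(f"included: {flow.included}")
for reason, n in flow.full_text_exclusions.items():
    print(f"  excluded at full text ({reason}): {n}")
```

Recording the exclusion reasons alongside the counts keeps the flow chart and the list of main reasons for exclusion in agreement with each other.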

The presentation of results should also mention whether the risk of bias of the included studies has been evaluated. This aspect is especially relevant because the degree to which a review can provide valid and reliable conclusions on the effects of an intervention depends on the validity of the studies finally included. A review and meta-analysis based on studies with little internal validity will yield results of limited validity and reliability, so these issues should be considered in the synthesis, analysis and interpretation of the results. Finally, the main effects of the interventions are reported, as a summary of the main findings in relation to the aims initially set out in the review.

The discussion section begins with a summary of the most relevant results of the review, without repeating the results section. It is recommended to assess the validity of the results in light of the quality of the scientific evidence, indicating the strengths and weaknesses of the review as a whole, the agreements and disagreements with other studies and prior reviews, and the relevance and applicability of the findings. Finally, the authors' conclusions are reported in terms of implications for research and practice.

Acknowledgements

The author would like to thank Jason Willis-Lee for his translation and copyediting support.

*Corresponding author at: Servicio de Evaluación del Servicio Canario de la Salud, C/ Pérez de Rozas, 5, 4 Planta, 38004 Santa Cruz de Tenerife, Spain.

E-mail address: lperperr@gobiernodecanarias.org.

Received September 29, 2012; accepted October 19, 2012

References

Bai, A., Shukla, V. K., Bak, G., & Wells, G. (2012). Quality assessment tools project report. Ottawa: Canadian Agency for Drugs and Technologies in Health.

Botella, J., & Gambara, H. (2006). Doing and reporting a meta-analysis. International Journal of Clinical and Health Psychology, 6, 425-440.

Centre for Reviews and Dissemination, CRD. (2010). Finding studies for systematic reviews: A resource list for researchers. York: University of York. Available from: http://www.york.ac.uk/inst/crd/revs.htm [accessed 13 Sep 2012].

Chan, L., Dennett, L., Collins, S., & Topfer, L. A. (2006). Health technology assessment on the net: A guide to Internet sources of information (8th ed.). Edmonton: Alberta Heritage Foundation for Medical Research - AHFMR.

Cook, D. A., & West, C. P. (2012). Conducting systematic reviews in medical education: A stepwise approach. Medical Education, 46, 943-952.

Cordón-García, J. A., Martín-Rodero, H., & Alonso-Arévalo, J. (2009). Gestores de referencias de última generación: análisis comparativo de RefWorks, EndNote Web y Zotero. El Profesional de la Información, 18, 445-454.

Duarte-García, E. (2007). Gestores personales de bases de datos de referencias bibliográficas: características y estudio comparativo. El Profesional de la Información, 16, 647-656.

Duque, B. (2010). Búsqueda eficiente de la evidencia científica. In B. Duque-González, L. García-Pérez, F. Hernández-Díaz, J. López-Bastida, V. Mahtani-Chugani, L. Perestelo-Pérez, Y. Ramallo-Fariña, M. Trujillo-Martín, & V. Yanes-López (Eds.), Introducción a la metodología de investigación en evaluación de tecnologías sanitarias (pp. 33-57). Tenerife: Servicio Canario de la Salud.

Fernández-Ríos, L., & Buela-Casal, G. (2009). Standards for the preparation and writing of psychology review articles. International Journal of Clinical and Health Psychology, 9, 329-344.

García, L., & Yanes, V. (2010). Lectura crítica de artículos científicos. In B. Duque-González, L. García-Pérez, F. Hernández-Díaz, J. López-Bastida, V. Mahtani-Chugani, L. Perestelo-Pérez, Y. Ramallo-Fariña, M. Trujillo-Martín, & V. Yanes-López (Eds.), Introducción a la metodología de investigación en evaluación de tecnologías sanitarias (pp. 59-75). Tenerife: Servicio Canario de la Salud.

Hartley, J. (2012). New ways of making academic articles easier to read. International Journal of Clinical and Health Psychology, 12, 143-160.

Higgins, J. P. T., & Green, S. (Eds.) (2011). Cochrane handbook for systematic reviews of interventions version 5.1.0. Available from: www.cochrane-handbook.or [accessed 13 Sep 2012].

Jarde, A., Losilla, J. M., & Vives, J. (2012). Suitability of three different tools for the assessment of methodological quality in ex post facto studies. International Journal of Clinical and Health Psychology, 12, 97-108.

Liberati, A., Altman, D. G., Tetzlaff, J., Mulrow, C., Gøtzsche, P. C., Ioannidis, J. P., Clarke, M., Devereaux, P. J., Kleijnen, J., & Moher, D. (2009). The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health-care interventions: explanation and elaboration. British Medical Journal, 339, b2700.

Lokker, C., McKibbon, K. A., Wilczynski, N. L., Haynes, R. B., Ciliska, D., Dobbins, M., Davis, D. A., & Straus, S. E. (2010). Finding knowledge translation articles in CINAHL. Studies in Health Technology and Informatics, 160, 1179-1183.

Moher, D., Liberati, A., Tetzlaff, J., Altman, D. G., & the PRISMA Group (2009). Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. British Medical Journal, 339, b2535.

Montero, I., & León, O. G. (2007). A guide for naming research studies in Psychology. International Journal of Clinical and Health Psychology, 7, 847-862.

Moreno, C., Bordallo, A., Blanco, M., & Romero, J. (2012). Manual básico para una atención en salud mental basada en la evidencia. Granada: Escuela Andaluza de Salud Pública.

Nezu, A. M., & Nezu, C. M. (2008). Evidence-based outcome research: A practical guide to conducting randomized controlled trials. London: Oxford University Press.

Sánchez-Meca, J., Boruch, R. F., Petrosino, A., & Rosa-Alcázar, A. I. (2002). La Colaboración Campbell y la práctica basada en la evidencia. Papeles del Psicólogo, 83, 44-48.

Sánchez-Meca, J., & Botella, J. (2010). Revisiones sistemáticas y meta-análisis: Herramientas para la práctica profesional. Papeles del Psicólogo, 31, 7-17.

Urrútia, G., & Bonfill, X. (2010). PRISMA declaration: A proposal to improve the publication of systematic reviews and meta-analyses. Medicina Clínica (Barcelona), 135, 507-511.

Wilczynski, N. L., McKibbon, K. A., & Haynes, R. B. (2011). Sensitive Clinical Queries retrieved relevant systematic reviews as well as primary studies: An analytic survey. Journal of Clinical Epidemiology, 64, 1341-1349.