The design and validation of measurement tools is a fundamental pillar of nursing research, and it is essential to incorporate up-to-date methodologies and analyses to guarantee their validity and reliability in clinical application.

The aim of this paper is to describe the characteristics of Rasch analysis and the methodology for applying it, and to discuss its relevance and applicability in nursing research, highlighting its potential contribution to improving the quality and accuracy of the discipline's measurement instruments.

Through a narrative synthesis, the theoretical foundations of Rasch analysis are described, and the characteristics and assumptions that must be fulfilled to carry out this type of analysis are presented. The implementation methodology is then presented in 11 steps: definition of objectives and preparation, instrument design, data collection, initial and unidimensionality analysis, goodness-of-fit assessment (infit-outfit, reliability and separation), assessment of item local independence (Yen's Q3 coefficient), item calibration and ability estimation, measurement invariance analysis (DIF analysis), review and modification of the instrument, final analysis and validation, and interpretation of results. Examples of use are presented, as well as the advantages and limitations of the method. In conclusion, Rasch analysis provides a valuable methodology for evaluating clinical competencies and skills and for developing and validating measurement instruments of great utility for care research, although training and standardisation in its use should be promoted.

As a healthcare-focused discipline, nursing requires precise tools to ensure the quality and effectiveness of the care it provides. It therefore plays a vital role in the development of measurement instruments, especially in areas related to health, well-being, quality of life and care assessment. Nurses, together with professionals from other health and social science disciplines, actively contribute to progress in the creation and validation of these instruments.

Within the field of nursing research, Rasch analysis stands out as a powerful and refined statistical tool for the evaluation and improvement of measurement instruments, especially with regard to surveys and competency assessments.

Rasch analysis belongs to the family of item response theory (IRT) models1 and allows researchers to overcome limitations inherent to traditional scaling methods, providing a more solid and detailed framework for interpreting data in health studies. It offers a robust methodology for constructing and validating measurement instruments, allowing researchers and clinicians to develop scales and tests that accurately reflect clinical competencies and skills.2

In the context of nursing research, Rasch analysis is therefore used to validate and refine measurement instruments, ensuring that they are both reliable and valid for measuring specific phenomena within the discipline.3 This is of utmost importance, as high-quality instruments are essential for collecting accurate and useful data, which in turn inform evidence-based practice and public health policy.4

Against this backdrop, the objectives of this article are to describe Rasch analysis and discuss its relevance and applicability in nursing research, highlighting how this methodology can significantly contribute to improving the quality and accuracy of measurement instruments. In doing so, it seeks to illuminate the importance of Rasch analysis, not only as a statistical tool, but also as an approach that can facilitate meaningful and relevant research in nursing, which is critical to advancing evidence-based practice and policymaking.

There are several advantages to understanding and applying Rasch analysis. It not only improves the ability to develop and validate robust measurement instruments, but also provides a solid basis for the interpretation of research results, ensuring that decisions based on these data are as informed and accurate as possible. Furthermore, Rasch analysis’ emphasis on data quality reinforces the importance of methodological rigour in nursing research, thus contributing to the overall body of knowledge in the discipline.

Theoretical foundations of Rasch analysis

Rasch analysis, named after the Danish mathematician Georg Rasch,5 is an approach within IRT that provides a solid basis for objective measurement in the social and health sciences. Unlike other statistical models based on less rigorous assumptions, the Rasch model transforms qualitative responses (e.g. correct/incorrect, agree/disagree) into quantitative measurements along a continuum of ability or attitude. Low values on this continuum of the latent variable represent characteristics that occur frequently or are easily observable, whereas high values indicate characteristics that are rarely observed or more difficult to attain.6

Description of the Rasch model

The Rasch model is essentially a mathematical model with a logistic probability function. It assumes that the probability of a correct response to an item (or of choosing a particular response on a scale) depends solely on the difference between the individual's ability level and the difficulty of the item.7 This feature allows the Rasch model to provide measurements that are independent of the particular individuals and items involved, a property known as “measurement invariance”:8 the measurements do not depend on the specific set of items presented or on the population of respondents in the sample.
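As a minimal sketch, and assuming a notation the text itself does not fix: writing θ for a person's ability and b for an item's difficulty, both in logits, the dichotomous Rasch model gives P(correct) = exp(θ − b) / (1 + exp(θ − b)). The function below (its name is illustrative) simply evaluates this expression, showing that only the difference θ − b matters.

```python
import math


def rasch_probability(theta: float, b: float) -> float:
    """Probability of a correct (or endorsed) response under the
    dichotomous Rasch model: exp(theta - b) / (1 + exp(theta - b)).

    theta: person ability in logits; b: item difficulty in logits.
    """
    # 1 / (1 + exp(-(theta - b))) is algebraically identical and avoids
    # overflow when theta - b is large and positive.
    return 1.0 / (1.0 + math.exp(-(theta - b)))


# Only the difference theta - b matters.
print(rasch_probability(0.0, 0.0))  # ability equal to difficulty
print(rasch_probability(1.0, 0.0))  # one logit above the item
print(rasch_probability(2.0, 1.0))  # same difference, same probability
```

When ability equals difficulty the probability is exactly .5, and any pair with the same θ − b yields the same probability.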

Another essential component of this model is “item calibration”,9 the process by which the difficulty of each item within a data set is estimated. Items and individuals are arranged on the same continuum, with the easiest items (those most likely to be answered correctly or endorsed) at the bottom.10 The hierarchy of items along the continuum determines the order of item locations relative to the distribution of individuals.9 This calibration is critical to ensure that items are adequately comparable and to facilitate the construction of fair and equitable tests. Finally, the “ability estimation” of individuals is equally important, ensuring an accurate assessment of each person's skill or competence level in relation to the construct being measured.

It should also be noted that the Rasch model is based on two key assumptions that must be met.6,11 If these assumptions cannot be met, other instrument validation methods should be applied. These assumptions are:

- Unidimensionality assumption: This is the basic assumption of Rasch analysis.12 It implies that all questions or items in the test are designed to measure a single underlying dimension or construct. In other words, a set of items is considered unidimensional if all the variation in individuals' responses can be attributed to differences in the level of that single dimension, and not to other irrelevant dimensions or factors.

  For example, if a test assesses reading comprehension, we want all items to measure this ability and not be influenced by other abilities such as memory or general knowledge. If the test is unidimensional, we can be confident that differences in individuals' responses truly reflect differences in their reading comprehension and are not distorted by other factors.

- Local independence of items:6,11 This property implies that individuals' responses to the items are independent of each other once their ability in the dimension being assessed has been taken into account. In other words, the probability of an individual correctly answering a given item is not influenced by their responses to other items, once their ability level has been considered.

  This property is essential in the Rasch model because it allows each item to contribute independently to the measurement of the individual's ability in the specific dimension. If local independence is not met, the interpretation of test scores may be biased and the validity and reliability of the measure could be affected.

Unlike other IRT models, Rasch analysis does not require data to fit a specific distribution, which makes it particularly robust and versatile for different types of evaluations.1 This feature highlights the importance of the Rasch model in the creation of reliable and valid measurement instruments, facilitating their application in a broad spectrum of contexts.

Thus, unlike other IRT models or Classical Test Theory (CTT) methods, the Rasch model offers several unique advantages:

- Joint measurement:13 The parameters of individuals and items are expressed in the same units and located on the same continuum. Consequently, the interpretation of scores is not necessarily based on group norms, but on identifying the items that the individual has a high or low probability of solving correctly. This feature gives the Rasch model great diagnostic richness.

- Measurement invariance: As mentioned above, one of the most significant contributions of Rasch analysis is its ability to support valid comparisons between items and people, regardless of the sample of items or the population of people to whom the test is applied.4

- Specific objectivity:14 A measurement can only be considered valid and generalisable if it does not depend on the specific conditions under which it was obtained. That is, the difference between two people in an attribute should not depend on the specific items with which it is estimated, and the difference between two items should not depend on the specific people used to quantify it. Consequently, if the data fit the model, comparisons between people are independent of the items administered, and item parameter estimates are not influenced by the distribution of the sample used for calibration. Specific objectivity is the basis for important psychometric applications such as comparing scores obtained with different tests, building item banks and computerised adaptive testing.

- Item validation: Rasch analysis provides a detailed review of each item in a test, identifying those that do not fit the model and which, therefore, could be ambiguous or misinterpreted by different subgroups.15

- Interval scale: When the data fit the model, the Rasch model converts ordinal responses into measures on an interval (logit) scale, which facilitates the interpretation of differences between scores and improves the precision of the measurements.16
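Specific objectivity can be checked numerically with a short sketch, assuming the dichotomous Rasch model and purely illustrative person and item values (not estimates from any real instrument). Under the model the log-odds of success equals θ − b, so when two persons are compared on the same item the item difficulty cancels, and their log-odds difference equals θ_A − θ_B whichever item is used. The function name is hypothetical.

```python
import math


def log_odds(theta: float, b: float) -> float:
    """Log-odds of success, ln(P / (1 - P)), under the dichotomous Rasch model."""
    p = 1.0 / (1.0 + math.exp(-(theta - b)))
    return math.log(p / (1.0 - p))


# Illustrative values in logits (not estimates from any real instrument).
person_a, person_b = 1.5, -0.5
item_difficulties = [-2.0, 0.0, 1.8]

for b in item_difficulties:
    # The item difficulty cancels out: (theta_a - b) - (theta_b - b).
    difference = log_odds(person_a, b) - log_odds(person_b, b)
    print(f"item b = {b:+.1f}: log-odds difference A - B = {difference:.3f}")
```

Each of the three items reports the same difference of 2.000 logits; the symmetric argument (fixing two items and varying the person) shows that item comparisons do not depend on the people used.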

In the context of the development of measurement instruments and scales, items can have different options or types of response. Thus, dichotomous data refer to items that have only two possible response categories: correct/incorrect, true/false, etc. In contrast, polytomous data refer to items that can have three or more response categories, something common in many measurement instruments developed to measure different constructs, such as quality of life, attitude or satisfaction instruments. These instruments often use ordinal response categories that can range, for example, from “totally agree” to “totally disagree”.

In the case of dichotomous data, we refer to the original Rasch model, also known as the one-parameter logistic Rasch model.1,7 In addition to the features already described, it is characterised by its simplicity: each item contributes one point to the total score, which makes analysis and interpretation straightforward. It is also a uniparametric model, in which only one parameter, item difficulty, is estimated per item, keeping modelling and interpretation simple. This model is ideal for tests in which a response is simply correct or incorrect, such as tests of nursing knowledge or skills.

For polytomous data, such as Likert-type scales, extensions of the Rasch model are used, such as Masters' partial credit model (PCM)17 or Andrich's rating scale model (RSM).18 In contrast to the original model, these extensions can handle items with multiple response categories, allowing a more nuanced evaluation of attitudes or skills. In addition to item difficulty, they estimate further parameters such as the thresholds between response categories.

The rating scale model (RSM)

Andrich’s RSM is used to analyse polytomous response data where all questions or items share the same set of response categories. For example, in a survey where responses range from 1 to 5, where 1 is "Strongly Disagree" and 5 is "Strongly Agree", all questions use this same 5-point scale, so that:

- The response categories are common to all items.
- The thresholds between response categories (the transition from one category to the next) are assumed to have the same spacing across all items, each item being shifted only by its own location.
- The model estimates a single set of threshold parameters that applies to all items.

The PCM, developed by Geoff Masters, is more flexible than the RSM and is used when questions or items have different numbers of response categories or when thresholds between categories are not expected to be the same across all items. For example, some questions may have a response scale of 1–4, while others may have responses of 1–5. This model therefore:

- Allows a different set of response categories to be handled for each item.
- Estimates the thresholds between response categories separately for each item, rather than assuming they are equal across all items.
- Offers greater flexibility for handling items with a varied response structure.

The choice between RSM and PCM depends on the structure of the measurement instrument and the objectives of the analysis. RSM may be preferable for its simplicity and ease of interpretation when all questions use the same response scale. PCM offers greater flexibility and is suitable for instruments with a variety of response scales, allowing for a more detailed and item-specific analysis. For dichotomous data, where responses to items are binary in nature (e.g., correct/incorrect, yes/no), the original Rasch model, as we have seen, is the most suitable and directly applicable.
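To show how the two polytomous extensions relate, the sketch below (function names and threshold values are hypothetical) computes category probabilities under the partial credit model, P(X = k | θ) ∝ exp(Σ_{j≤k}(θ − δ_j)) with an empty sum for category 0, and obtains the rating scale model as the special case in which every item uses the same thresholds τ_j shifted by its own location, δ_ij = δ_i + τ_j. With a single threshold the expression reduces to the dichotomous Rasch model.

```python
import math
from typing import List, Sequence


def pcm_probabilities(theta: float, thresholds: Sequence[float]) -> List[float]:
    """Category probabilities for one item under the partial credit model.

    thresholds: item-specific step difficulties delta_1..delta_m (logits);
    the item has m + 1 ordered categories scored 0..m.
    """
    # Cumulative sums of (theta - delta_j); category 0 has an empty sum of 0.
    numerators = [0.0]
    for delta in thresholds:
        numerators.append(numerators[-1] + (theta - delta))
    # Subtract the maximum before exponentiating, for numerical stability.
    top = max(numerators)
    exps = [math.exp(v - top) for v in numerators]
    total = sum(exps)
    return [e / total for e in exps]


def rsm_probabilities(theta: float, item_location: float,
                      shared_taus: Sequence[float]) -> List[float]:
    """Rating scale model: all items share the thresholds tau_j, shifted by
    each item's own location (delta_ij = item_location + tau_j)."""
    return pcm_probabilities(theta, [item_location + tau for tau in shared_taus])


# Hypothetical 5-point Likert item (scored 0-4) with shared RSM thresholds.
taus = [-1.5, -0.5, 0.5, 1.5]
print([round(p, 3) for p in rsm_probabilities(0.0, 0.0, taus)])

# A PCM item can have its own thresholds, even a different number (4 categories).
print([round(p, 3) for p in pcm_probabilities(0.0, [-1.0, 0.2, 1.4])])
```

The design choice mirrors the text: the RSM is just the PCM with a constrained threshold structure, which is why it is simpler to interpret but less flexible.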

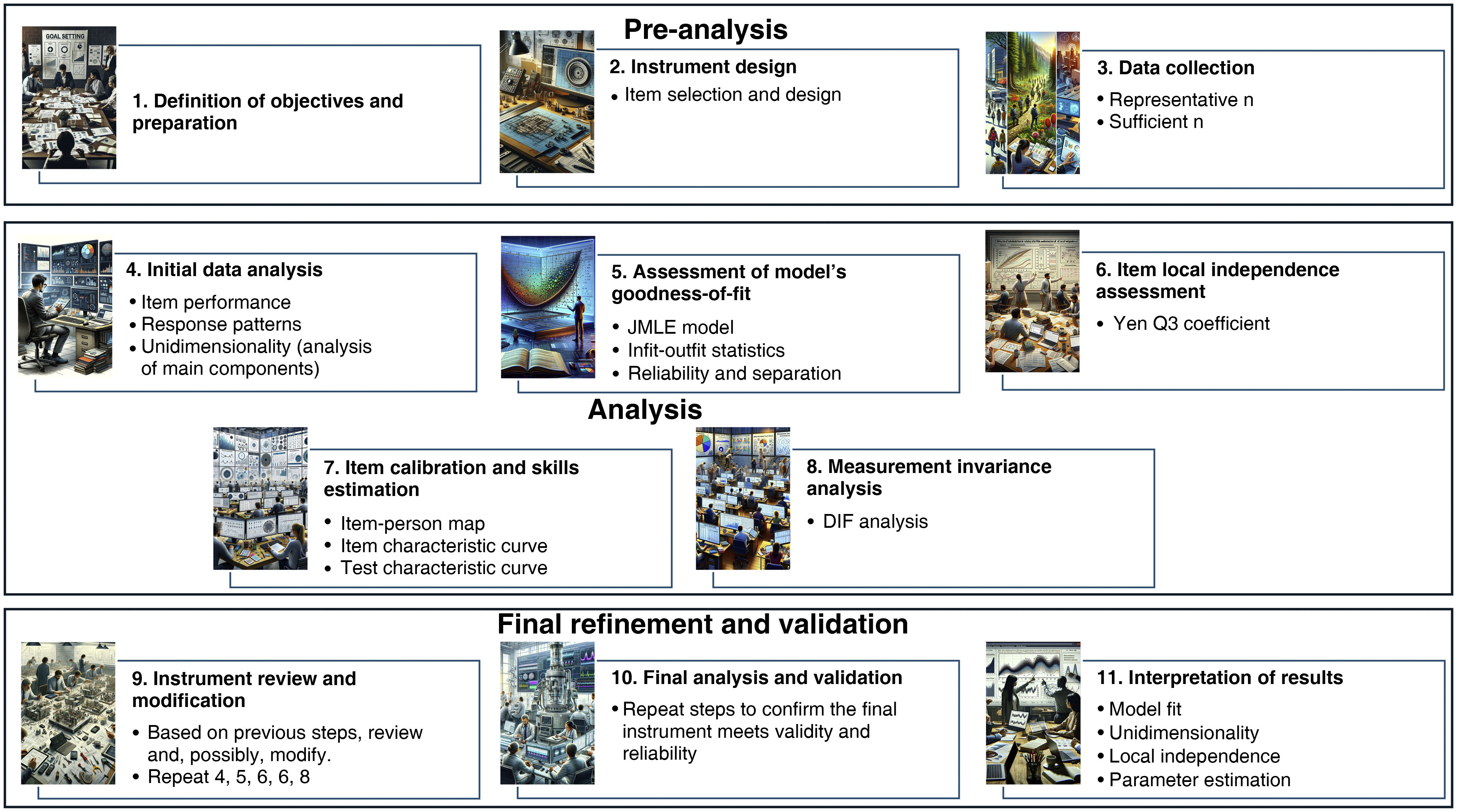

Rasch analysis methodology application

The effective implementation of Rasch analysis in research involves several critical steps, from initial study design to interpretation of findings. The 11 essential steps in this process are detailed below (Fig. 1):

Step 1 – Defining objectives and preparation: the first step is to clearly establish the objectives of the research and determine how Rasch analysis can contribute to achieving them, whether by evaluating the validity of an existing instrument, developing a new one, refining or shortening existing instruments, or equating different tests.

Step 2 – Designing the measurement instrument: Selecting or designing items that are representative of the construct to be measured. This includes ensuring that the items are clear, unidimensional, and appropriate for the target group.

Step 3 – Collecting data: Data are collected from a representative sample large enough for statistical analysis. However, there is no agreement in the literature on the sample size required for this type of analysis; Stolt et al.3 report studies with samples ranging from 43 to 13,113 participants. Because of the properties described above, Rasch analysis can perform relatively well across a wide range of sample sizes, which gives it an advantage over other types of analysis such as factor analysis. Many studies cite Polit and Beck's19 recommendation of 5–20 participants per item of the instrument being analysed.

Step 4 – Initial data analysis: Rasch analysis software is used to perform an initial analysis, identifying items that do not work well, unexpected response patterns, and the general alignment of the data with the Rasch model. This initial analysis is often combined with simple CTT analyses of difficulty, lack of knowledge and discrimination, which help identify items that contribute little to the construct and can be eliminated before performing the Rasch analysis.

At this stage, the literature commonly reports a principal components analysis of the model residuals to study unidimensionality.20 The usual criteria are that the Rasch dimension explains at least 50% of the variance and that the first residual component (or contrast) explains less than 5% of it, or has an eigenvalue below 2.0.21 Another option, within the framework of factor analysis, is to calculate the unidimensionality indices proposed by Ferrando and Lorenzo-Seva.22 The UniCo (Unidimensional Congruence), ECV (Explained Common Variance) and MIREAL (Mean of Item REsidual Absolute Loadings) values can help to assess whether the data can be treated as essentially unidimensional.22
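A minimal sketch of the residual principal components check, assuming dichotomous data and, for simplicity, person and item parameters that are already known (here the simulated true values; in practice they are Rasch estimates). It computes standardised residuals z = (x − P)/√(P(1 − P)), their inter-item correlation matrix and its eigenvalues; the largest eigenvalue plays the role of the first contrast that is compared with the 2.0 criterion. Rasch software may compute these quantities with additional refinements, and the function name is hypothetical.

```python
import numpy as np


def residual_pca_eigenvalues(responses, theta, b):
    """Eigenvalues (largest first) of the inter-item correlation matrix of
    standardized Rasch residuals z = (x - P) / sqrt(P * (1 - P)).

    responses: persons x items array of 0/1 scores; theta, b: logit values
    assumed to be available from a previous calibration.
    """
    p = 1.0 / (1.0 + np.exp(-(theta[:, None] - b[None, :])))
    z = (responses - p) / np.sqrt(p * (1.0 - p))
    corr = np.corrcoef(z, rowvar=False)  # items x items correlation matrix
    return np.sort(np.linalg.eigvalsh(corr))[::-1]


# Hypothetical simulation: 500 persons, 12 items generated from one dimension.
rng = np.random.default_rng(seed=1)
theta = rng.normal(0.0, 1.0, size=500)
b = np.linspace(-2.0, 2.0, 12)
prob = 1.0 / (1.0 + np.exp(-(theta[:, None] - b[None, :])))
responses = (rng.random(prob.shape) < prob).astype(float)

eigenvalues = residual_pca_eigenvalues(responses, theta, b)
print(f"first contrast eigenvalue: {eigenvalues[0]:.2f} (criterion: < 2.0)")
```

Because these data were generated from a single dimension, the residuals are essentially noise and the first eigenvalue stays well below 2.0; a secondary dimension shared by several items would push it up.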

Step 5 – Goodness-of-fit assessment: This step provides evidence on the internal validity of the scale and the validity of individuals' responses. Fit statistics such as Infit and Outfit are typically used.4,23 Items or responses that do not fit may indicate problems with the item or with the unidimensionality of the construct, so it is crucial to identify items that do not fit the Rasch model. Including poorly fitting items degrades the quality of measurement and decreases the precision of the instrument; such items should be removed, or revised or rewritten and retested. Goodness-of-fit statistics indicate the extent to which each item fits the underlying construct of the test.4

Joint maximum likelihood estimation (JMLE) is a statistical technique for estimating parameters within IRT models, including Rasch analysis. It estimates item parameters and subject ability parameters simultaneously, by maximising the joint likelihood of all these parameters given the observed data.6

In the context of IRT and Rasch analysis, item parameters typically include item difficulty (and potentially other parameters, such as discrimination, depending on the specific IRT model used). Subject parameters, on the other hand, refer to the abilities or competencies of the individuals responding to the items. Joint estimation means that the estimation process seeks the set of item and subject parameter values that, taken together, are most likely to have generated the observed data.

This method has advantages such as “efficiency”, since estimating item and subject parameters simultaneously can be more computationally efficient than approaches that estimate them separately, and “applicability”, since it is especially useful when there is no prior information on item parameters or subject abilities, allowing direct estimation from the collected data. However, it also has limitations, such as “inaccuracy at the extremes” (estimates may be less precise for subjects with extremely high or low abilities, because the observed data contain limited information about them; subjects with perfect or zero scores have no finite estimate at all) and “stability” (it may be susceptible to stability and convergence problems, especially in small data sets or with uncommon response patterns).

Although the JMLE is a powerful technique within Rasch analysis and other IRT models, it is important for researchers to be aware of its limitations and to consider using alternative estimation methods, such as the Marginal Maximum Likelihood Estimation (MMLE) or the Conditional Maximum Likelihood Estimation (CMLE), depending on the specific characteristics of their data and the objectives of their study. The choice of estimation method should be based on careful consideration of these factors to ensure the accuracy and validity of the results of the analysis.
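To make the idea of joint estimation concrete, the following is a minimal JMLE sketch for dichotomous data that alternates Newton-Raphson updates for persons and items, with steps clipped to one logit for stability. It is an illustration under simplifying assumptions, not the algorithm of any particular program: production software typically adds refinements such as a correction for the known bias of JMLE item estimates in short tests and more careful convergence rules. Persons and items with extreme (all-correct or all-incorrect) scores are removed first because JMLE cannot produce finite estimates for them. The function name and simulated data are hypothetical.

```python
import numpy as np


def jmle_rasch(x, max_iter=500, tol=1e-6):
    """Minimal joint maximum likelihood estimation (JMLE) for the
    dichotomous Rasch model.

    x: persons x items array of 0/1 responses with no extreme scores.
    Returns (theta, b) in logits; item difficulties are centred at 0
    to fix the origin of the scale.
    """
    x = np.asarray(x, dtype=float)
    n_persons, n_items = x.shape
    r = x.sum(axis=1)  # person raw scores
    s = x.sum(axis=0)  # item raw scores
    if np.any((r == 0) | (r == n_items)) or np.any((s == 0) | (s == n_persons)):
        raise ValueError("remove persons/items with extreme scores before JMLE")

    # Starting values: log-odds of the raw scores.
    theta = np.log(r / (n_items - r))
    b = np.log((n_persons - s) / s)
    b -= b.mean()

    for _ in range(max_iter):
        # Person step: (observed - expected score) / information.
        p = 1.0 / (1.0 + np.exp(-(theta[:, None] - b[None, :])))
        step = (r - p.sum(axis=1)) / (p * (1.0 - p)).sum(axis=1)
        theta_new = theta + np.clip(step, -1.0, 1.0)

        # Item step with the updated persons: an item whose observed score
        # is below its expected score becomes more difficult.
        p = 1.0 / (1.0 + np.exp(-(theta_new[:, None] - b[None, :])))
        step = (s - p.sum(axis=0)) / (p * (1.0 - p)).sum(axis=0)
        b_new = b - np.clip(step, -1.0, 1.0)
        b_new -= b_new.mean()

        change = max(np.abs(theta_new - theta).max(), np.abs(b_new - b).max())
        theta, b = theta_new, b_new
        if change < tol:
            break
    return theta, b


# Hypothetical simulated data: 1000 persons, 20 items.
rng = np.random.default_rng(seed=7)
true_b = np.linspace(-2.0, 2.0, 20)
true_theta = rng.normal(0.0, 1.0, size=1000)
prob = 1.0 / (1.0 + np.exp(-(true_theta[:, None] - true_b[None, :])))
data = (rng.random(prob.shape) < prob).astype(float)
raw = data.sum(axis=1)
data = data[(raw > 0) & (raw < data.shape[1])]  # drop extreme persons

theta_hat, b_hat = jmle_rasch(data)
print(np.round(b_hat, 2))
```

The recovered difficulties track the simulated ones closely; MMLE or CMLE would replace the person step with integration over, or conditioning on, the person distribution.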

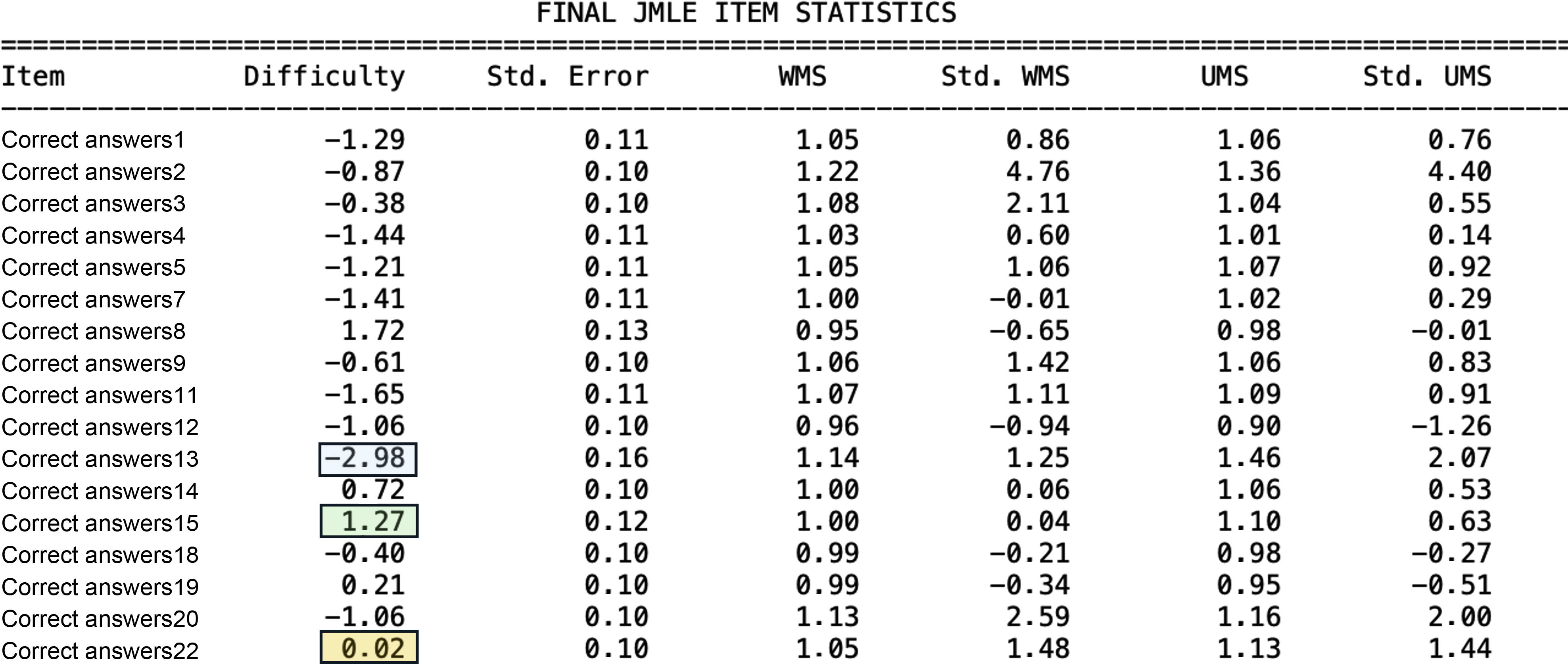

Based on the JMLE estimates, the Infit (information-weighted fit), or weighted mean square fit statistic (WMS), is especially sensitive to unexpected responses to items whose difficulty is close to the individual's ability level. The Outfit (outlier-sensitive fit), or unweighted mean square fit statistic (UMS), is more sensitive to unusual or atypical responses (“outliers”) to items far from the individual's ability level. Both are expressed as mean squares, and a value of 1 indicates perfect fit to the model. Fit values between .8 and 1.2 represent a good fit15 and values between .5 and 1.5 an acceptable fit,11 although it is also recommended to adjust these ranges according to sample size.24

Fig. 2 represents a partial result of a hypothetical analysis, showing the difficulty and the Infit (WMS) and Outfit (UMS) values of each item with their respective standard errors. For example, item 13 would be the easiest, item 22 would be close to 0 (medium difficulty) and item 15 would be the most difficult of those presented in the table.

For difficulty, values close to zero indicate medium difficulty; positive values indicate greater difficulty the further they are from zero, and negative values indicate lower difficulty. In the case of Infit and Outfit, all values are within the range that indicates good fit to the model.
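Both mean squares can be computed directly from residuals. In this sketch (dichotomous data, simulated responses, person and item parameters treated as known, hypothetical function name), outfit is the unweighted mean of squared standardised residuals, and infit is the sum of squared residuals divided by the sum of model variances, which weights each residual by the information of the response. One item is deliberately replaced by random answers to show how misfit appears.

```python
import numpy as np


def item_fit(x, theta, b):
    """Infit and outfit mean squares per item (dichotomous Rasch data).

    outfit: unweighted mean of squared standardized residuals.
    infit:  sum of squared residuals / sum of model variances
            (residuals weighted by the information of each response).
    """
    x = np.asarray(x, dtype=float)
    p = 1.0 / (1.0 + np.exp(-(theta[:, None] - b[None, :])))
    variance = p * (1.0 - p)
    squared_residuals = (x - p) ** 2
    outfit = (squared_residuals / variance).mean(axis=0)
    infit = squared_residuals.sum(axis=0) / variance.sum(axis=0)
    return infit, outfit


# Hypothetical simulation: 1000 persons, 11 items; parameters treated as known.
rng = np.random.default_rng(seed=3)
b = np.linspace(-2.0, 2.0, 11)
theta = rng.normal(0.0, 1.0, size=1000)
p = 1.0 / (1.0 + np.exp(-(theta[:, None] - b[None, :])))
x = (rng.random(p.shape) < p).astype(float)
x[:, 5] = rng.integers(0, 2, size=1000)  # item 6 answered at random (misfit)

infit, outfit = item_fit(x, theta, b)
for i, (w, u) in enumerate(zip(infit, outfit), start=1):
    note = "" if 0.8 <= w <= 1.2 and 0.8 <= u <= 1.2 else "  <- review"
    print(f"item {i:2d}: infit {w:.2f}  outfit {u:.2f}{note}")
```

The randomly answered item shows mean squares well above 1 (underfit), and its outfit is inflated more than its infit because surprising answers from persons far from the item weigh heavily in the unweighted statistic.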

As we have seen, the analysis of these statistics is of vital importance because it allows “item diagnosis”: identifying items that do not behave as expected, either because they are too predictable (mean squares below 1, overfit) or because they show unexpected variability in the responses (mean squares above 1, underfit). It also supports “instrument improvement”: by analysing Infit and Outfit, test developers can make informed decisions about revising or eliminating items to improve the validity and reliability of the instrument. Finally, this analysis is directly related to “model fit”: evaluating the fit of the items to the Rasch model (or any other IRT model applied) is crucial to ensure that the measurements accurately reflect the construct of interest.

Other measures provided by goodness-of-fit analysis include scale quality statistics,6 reliability and separation, which can be calculated for both items and persons. Person reliability is similar to the CTT reliability coefficient, with values greater than .8 being desirable. Person separation is related to reliability in that it represents the extent to which a measure can consistently reproduce and rank scores.

Separation values greater than 2 are desirable, indicating that the instrument can separate persons into at least two strata, for example, low and high ability.25 Item reliability refers to the degree to which item difficulties can be rank-ordered, and item separation provides similar information about how well the items are located along the latent trait.
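The relationship between separation and reliability can be sketched using the usual Rasch definitions: the error variance is the mean squared standard error, the “true” variance is the observed variance of the measures minus the error variance, separation G is the ratio of the true standard deviation to the root mean square error, and reliability equals G²/(1 + G²). The person measures and standard errors below are invented for illustration, and using the sample variance is an assumption (software may use the population variance).

```python
import numpy as np


def separation_reliability(measures, standard_errors):
    """Rasch separation index G and reliability R for persons (or items).

    observed variance: sample variance of the estimated measures (ddof=1,
                       an assumption; software may use the population variance)
    error variance:    mean of the squared standard errors (MSE)
    true variance:     observed - error, floored at 0
    G = sqrt(true / error);  R = true / observed = G^2 / (1 + G^2)
    """
    measures = np.asarray(measures, dtype=float)
    se = np.asarray(standard_errors, dtype=float)
    observed_var = measures.var(ddof=1)
    error_var = np.mean(se ** 2)
    true_var = max(observed_var - error_var, 0.0)
    g = np.sqrt(true_var / error_var)
    r = true_var / observed_var
    return g, r


# Invented person measures (logits) and standard errors, for illustration only.
measures = [-1.8, -0.9, -0.4, 0.0, 0.3, 0.8, 1.1, 1.7, 2.2, 2.6]
ses = [0.45, 0.40, 0.38, 0.38, 0.38, 0.39, 0.40, 0.43, 0.48, 0.52]
g, r = separation_reliability(measures, ses)
print(f"separation = {g:.2f}, reliability = {r:.2f}")
```

With these values separation is above 2 and reliability above .8, which illustrates why the two criteria quoted in the text tend to go together.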

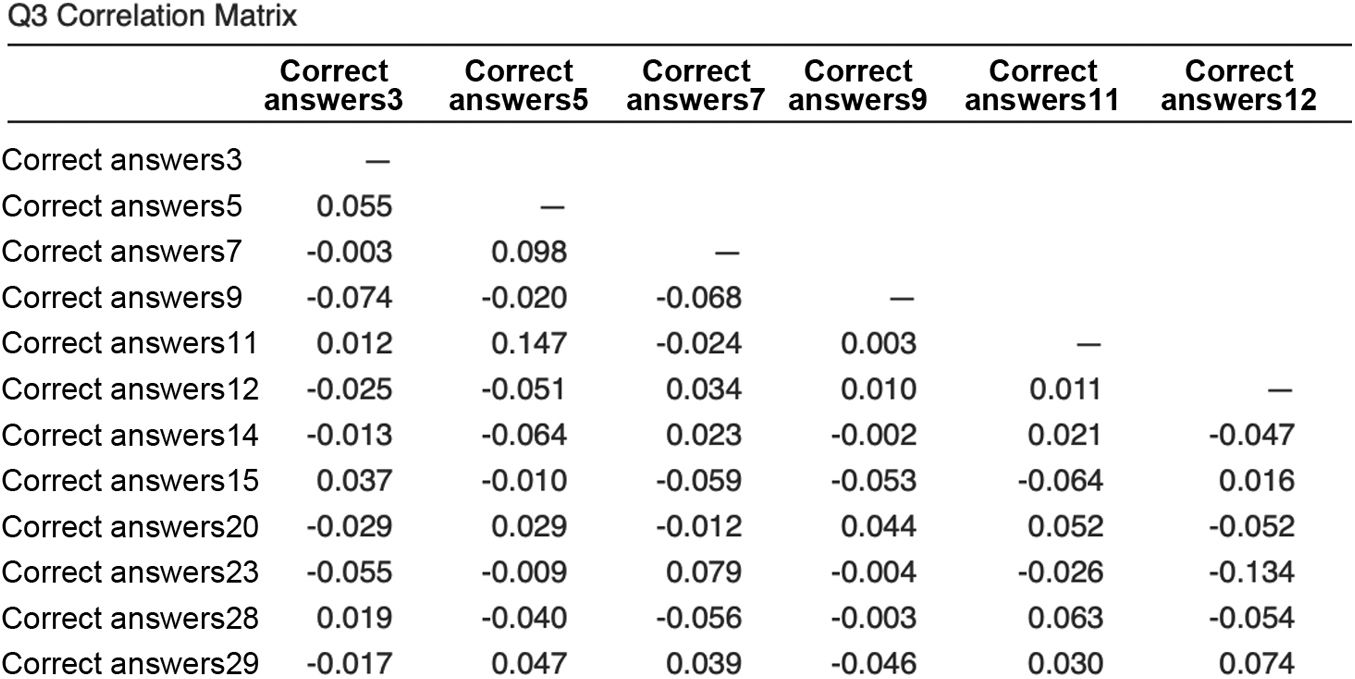

Step 6 – Local independence assessment: Typically, a correlation analysis of the standardised residuals is performed using Yen's Q3 coefficient, a statistic developed by Yen26 in 1984 to assess the local independence of items in IRT models, including Rasch analysis. Q3 is a measure of residual correlation between pairs of items: it assesses the correlation between responses to two items after controlling for the respondent's general ability. If two items are truly independent of one another given an individual's ability, the Q3 coefficient is expected to be close to zero.

A Q3 value significantly different from zero would suggest that there is a dependency between the items, which could indicate a violation of the assumption of local independence, a property necessary for the Rasch model as initially stated. Yen's Q3 coefficient is applied in the analysis of the validity of a measurement instrument for several reasons:

- Identification of dependent items: It identifies pairs of items that may be influencing each other, which is particularly useful when revising and improving measurement instruments. For example, two items may be redundant or may be measuring the same sub-trait or specific ability, rather than distinct aspects of the general construct.
- Improvement of instrument design: By identifying items with dependencies, researchers and test developers can make informed decisions about which items to modify or eliminate to improve the local independence, validity and reliability of the instrument.
- Evaluation of construct structure: In some cases, the dependencies identified between items can provide valuable information about the underlying structure of the construct being measured, leading to a better understanding of its dimensions and to theoretical refinement of the construct.

Implementing this analysis requires specialised statistical software that can handle IRT models and calculate residual correlations. High Q3 values may indicate problems with item independence, but they can also reflect multidimensional aspects of the construct that do not necessarily invalidate the use of the instrument. Findings must therefore be evaluated in the context of the underlying theory of the construct and the purpose of the measurement instrument.

The threshold at which a Q3 value is considered to indicate an unacceptable dependency between items may vary depending on the context of the study and the standards established by the researcher or the discipline, although some general guidelines exist. In her original work, Yen did not specify a strict threshold, suggesting that interpretation depends largely on the specific context of the analysis and the instrument. In practice, some researchers and methodologists have adopted pragmatic approaches, considering values above certain cut-off points as indicative of possible dependence between items. A common approach is to treat Q3 values above .2 or .3 as indicating significant residual correlation between a pair of items, suggesting that these items might not be independent of each other after controlling for the general ability of the respondents. This recommendation should be used with caution: some contexts may require a more conservative or more liberal threshold, depending on the nature of the construct measured and the purpose of the assessment instrument. In reality, there is no uniform criterion, as this value depends on the sample size, the number of items and the number of response categories.27 As an example, Fig. 3 presents a section of a Yen's Q3 correlation matrix in which all values are less than .2 in absolute value, which would indicate local independence of the items.
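A minimal sketch of Yen's Q3, assuming dichotomous data and known person and item parameters (simulated). Following the usual definition it correlates raw residuals (observed minus expected score); the optional `standardize` flag, a hypothetical parameter, correlates standardised residuals instead, as some programs do. A local dependency is simulated by making one item copy another for part of the sample, and pairs exceeding the pragmatic .2 cut-off are listed.

```python
import numpy as np


def yen_q3(x, theta, b, standardize=False):
    """Yen's Q3: item-by-item correlations of residuals d = x - P.

    With standardize=True the residuals are divided by sqrt(P * (1 - P)).
    The diagonal is set to NaN because self-correlations are not of interest.
    """
    x = np.asarray(x, dtype=float)
    p = 1.0 / (1.0 + np.exp(-(theta[:, None] - b[None, :])))
    d = x - p
    if standardize:
        d = d / np.sqrt(p * (1.0 - p))
    q3 = np.corrcoef(d, rowvar=False)
    np.fill_diagonal(q3, np.nan)
    return q3


# Hypothetical simulation: 800 persons, 8 items, parameters treated as known.
rng = np.random.default_rng(seed=11)
b = np.linspace(-1.5, 1.5, 8)
theta = rng.normal(0.0, 1.0, size=800)
p = 1.0 / (1.0 + np.exp(-(theta[:, None] - b[None, :])))
x = (rng.random(p.shape) < p).astype(float)
copied = rng.random(800) < 0.6
x[copied, 7] = x[copied, 6]  # item 8 repeats item 7 for 60% of persons

q3 = yen_q3(x, theta, b)
flagged = [(i + 1, j + 1, round(float(q3[i, j]), 2))
           for i in range(q3.shape[0]) for j in range(i + 1, q3.shape[1])
           if abs(q3[i, j]) > 0.2]
print(flagged)  # item pairs above the pragmatic .2 cut-off
```

Only the artificially dependent pair (items 7 and 8) is flagged. With estimated rather than known parameters, Q3 values tend to be slightly negative on average, which is one reason fixed cut-offs are debated.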

It is also important to note that a high Q3 value does not necessarily indicate that items should be eliminated or that the instrument is invalid. Instead, it suggests the need for a more detailed review of the items involved to understand the reason for the dependency. This could lead to considering aspects such as the wording of the items, the structure of the questionnaire, or even the possibility of unrecognised underlying dimensions within the construct being measured.

Step 7 – Item calibration and ability estimation:1 Through Rasch analysis, the difficulty of each item is estimated and the ability, or level of the construct, is calculated for each participant. Once this has been done, the data can be represented in graphs that aid interpretation. Item-person maps, also called Wright maps, are commonly used; they consist of two large histograms drawn vertically. The left side shows the distribution of people's abilities and the right side shows the distribution of item difficulties. The two histograms share the same vertical axis.

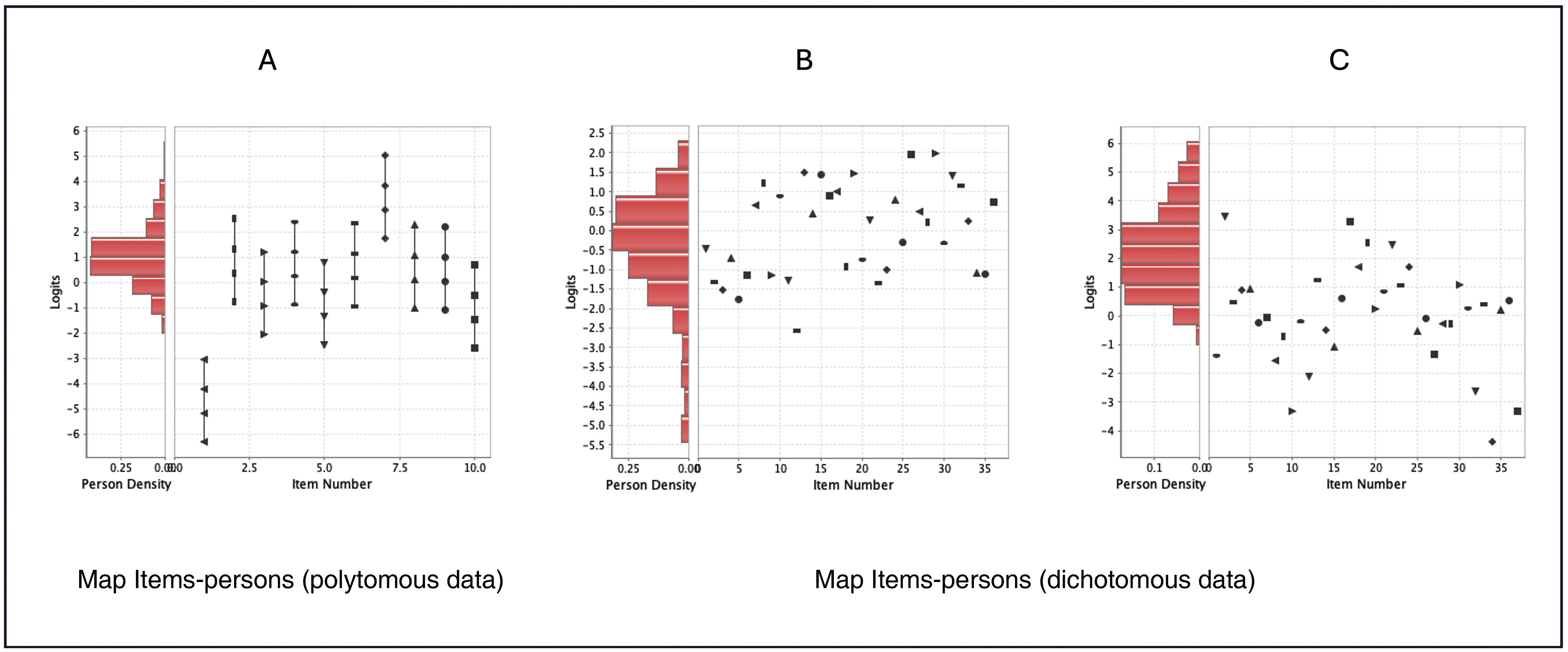

Fig. 4 shows three different Wright maps. The first corresponds to a model with polytomous data, so the right side of the map has vertical lines with four marks representing each item and the difficulty of each category (10 items with 4 response categories). The left side shows the histogram of people. Both are on the same logit scale, which allows the two elements to be compared directly. The person distribution is fairly normal but shifted upwards in ability. The items are distributed homogeneously along the logit scale, with easier items at the bottom and more difficult ones at the top; apart from the first item, the distributions of people and items practically coincide.

The other two maps correspond to models with dichotomous data (items are represented with different symbols). In the central map, a group of people tends to obtain low scores on the instrument and the items are located higher up, which may mean that this instrument (if it were a knowledge test) contains relatively difficult questions for part of the sample. The map on the right shows the opposite: people perform very well and the items appear easy for them.

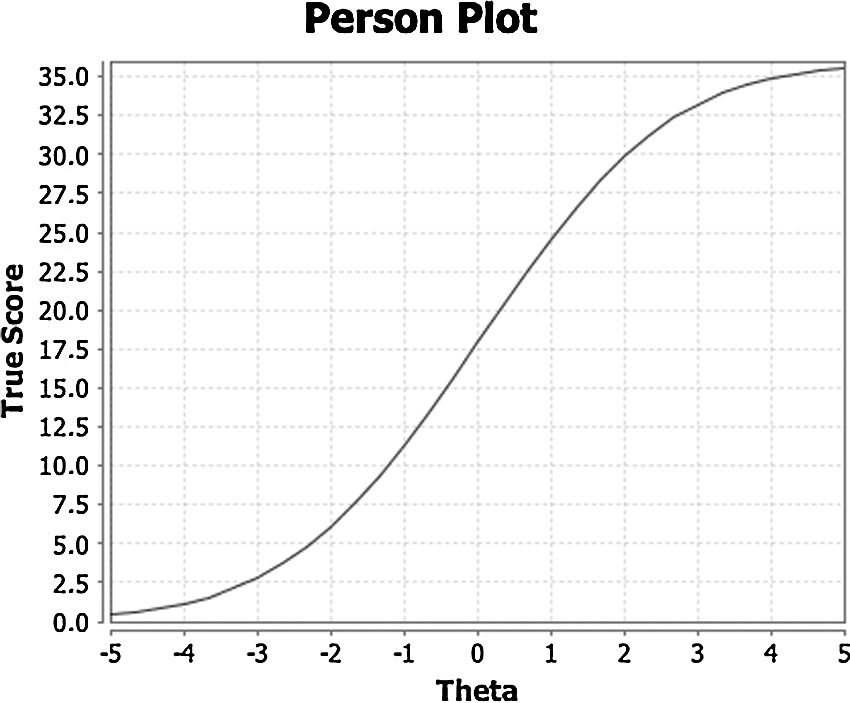

Item characteristic curves (ICCs) provide a graphical representation of each item's characteristics, showing in probabilistic terms the relationship between ability level and the response to the item. Similarly, the test characteristic curve (TCC) (Fig. 5) shows the relationship between the total score on a test (rather than on a single item, as with the ICC) and a person's ability level. In Fig. 5, the characteristic curve of a test that fits the Rasch model is a sigmoid curve plotting the score on the latent variable (theta) against people's true scores.
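Because the TCC of a dichotomous Rasch test is the sum of its item characteristic curves, it can be sketched in a few lines (item difficulties are hypothetical and the function name is illustrative): at each ability level θ, the expected total score is Σ_i exp(θ − b_i)/(1 + exp(θ − b_i)), which produces the sigmoid shape the text describes.

```python
import numpy as np


def tcc_expected_scores(theta_grid, b):
    """Expected total score on a dichotomous Rasch test at each ability
    level: the TCC is the sum of the item characteristic curves (ICCs)."""
    theta_grid = np.asarray(theta_grid, dtype=float)
    b = np.asarray(b, dtype=float)
    icc = 1.0 / (1.0 + np.exp(-(theta_grid[:, None] - b[None, :])))  # one ICC per column
    return icc.sum(axis=1)


b = np.linspace(-2.0, 2.0, 10)   # hypothetical item difficulties (logits)
grid = np.linspace(-4.0, 4.0, 9)
scores = tcc_expected_scores(grid, b)
for t, score in zip(grid, scores):
    print(f"theta {t:+.1f}: expected score {score:.2f} / {b.size}")
```

The curve rises monotonically from near 0 to near the maximum score, and with difficulties symmetric around 0 the expected score at θ = 0 is exactly half of the maximum.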

Step 8 – Analysis of measurement invariance: ensuring that the measurements are consistent across different subgroups of the sample, such as by gender, age or other characteristics. This is crucial to confirm that the instrument measures fairly for all participants. Typically, this is done using Differential Item Functioning (DIF) analysis.4,6

Differential Item Functioning (DIF) analysis is a statistical technique used to identify whether different groups of individuals respond differently to a specific item, despite having the same level of ability or latent trait.

Although DIF analysis and Rasch analysis are different concepts, they can be related in the practice of psychometric assessment. Rasch analysis provides a framework for measuring a latent trait from responses to test items, and one of its main attractions is its ability to generate measurements that are independent of the particular sample of items and of the population of respondents. Incorporating DIF analysis in this context helps ensure that the items of the instrument are fair to all groups of individuals, which is essential for the validity of the measurements obtained with the Rasch model. The main purpose of DIF analysis is therefore to ensure fairness and impartiality in assessment by identifying items that may be biased or that function differently for specific subgroups within the population, such as subgroups defined by gender, ethnicity, age or any other relevant demographic or grouping variable. An item that displays DIF suggests that something other than the ability being measured affects how the groups respond to that item.

DIF analysis can be performed using several methods, including graphical approaches, statistical tests and model-based techniques such as logistic regression or Rasch analysis itself. Methods based on Rasch models, in particular, allow DIF to be assessed while maintaining the structure of the model, which makes it easier to interpret how item bias affects the measurement of the latent trait.

In the context of Rasch analysis, DIF is assessed by comparing item difficulty (the item location parameter) between groups while controlling for overall ability. This is done within the structure of the Rasch model: item difficulties and respondent abilities are estimated using the maximum likelihood principle, assuming that items are locally independent and that the probability of a correct response depends only on the difference between the respondent's ability and the item's difficulty.
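The maximum likelihood principle can be illustrated for a single person. Assuming the item difficulties are already known (for instance, from a previous calibration), the likelihood equation for ability θ reduces to making the expected score, the sum of P_i(θ), equal to the observed raw score r. The sketch below solves this with Newton-Raphson. It is an illustrative sketch only, not the estimation routine of any particular Rasch program (which usually estimate items and persons jointly or conditionally and treat extreme scores specially); the difficulties, response patterns and function names are hypothetical.

```python
import math

def rasch_p(theta: float, b: float) -> float:
    """Probability of a correct response under the dichotomous Rasch model."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))

def estimate_ability(responses, difficulties, tol=1e-8, max_iter=100):
    """Maximum likelihood ability estimate (logits) and its standard error,
    treating the item difficulties as known."""
    r = sum(responses)
    if r == 0 or r == len(responses):
        # All-wrong or all-right patterns have no finite maximum likelihood estimate.
        raise ValueError("extreme score: the MLE is infinite")
    theta = 0.0
    for _ in range(max_iter):
        probs = [rasch_p(theta, b) for b in difficulties]
        gradient = r - sum(probs)                       # d logL / d theta
        information = sum(p * (1 - p) for p in probs)   # -d2 logL / d theta2
        step = max(-1.0, min(1.0, gradient / information))  # cap each step at 1 logit
        theta += step
        if abs(step) < tol:
            break
    information = sum(rasch_p(theta, b) * (1 - rasch_p(theta, b)) for b in difficulties)
    return theta, 1.0 / math.sqrt(information)

# Hypothetical item difficulties (logits) and one response pattern.
difficulties = [-1.5, -0.5, 0.0, 0.5, 1.5]
theta_hat, se = estimate_ability([1, 1, 1, 0, 0], difficulties)
print(f"ability = {theta_hat:.3f} logits (SE = {se:.3f})")
```

Because the raw score is a sufficient statistic in the Rasch model, any pattern with the same raw score on these items yields the same estimate. Ability estimates of this kind provide the common reference against which item difficulties are compared between groups in the DIF methods described next.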

Some methods that can serve this purpose are:

- Group Contrast Analysis: a common technique is to split the sample according to the group of interest (e.g., gender, ethnicity) and perform a separate Rasch analysis for each group. The estimated difficulty of each item is then compared between groups, and a significant difference in an item's difficulty indicates the presence of DIF.
- Multifaceted Rasch Models: some versions of the Rasch model (many-facet models) allow group membership to be incorporated directly into the analysis as a "facet". This allows the effect of group on item difficulty to be estimated simultaneously, providing a direct way to assess DIF.
- Specific Statistical Tests: tests designed to detect differences in item parameters between groups, such as the Mantel-Haenszel test or tests based on logistic regression, can be adapted to the context of the Rasch model. These tests compare item difficulty between groups after adjusting for respondent ability.
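The group contrast approach can be sketched as follows. Because each separate calibration has an arbitrary origin, the sketch first centres each group's item difficulties at zero (which assumes that most items are free of DIF), then computes, for each item, the DIF contrast (difference in difficulty) and an approximate t statistic from the two standard errors. The estimates, standard errors and function names are hypothetical, and the flagging rule (|contrast| of at least 0.5 logits and |t| of at least 2) is a commonly used rule of thumb assumed here, not a requirement of the method.

```python
import math

def centre(values):
    """Shift estimates so that their mean is zero (common origin for both calibrations)."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]

def dif_contrast(b_ref, se_ref, b_foc, se_foc, min_contrast=0.5, min_t=2.0):
    """Compare item difficulties from separate Rasch calibrations of two groups.
    Returns (contrast, t, flagged) per item; contrast > 0 means the item is
    harder for the reference group."""
    results = []
    for br, sr, bf, sf in zip(centre(b_ref), se_ref, centre(b_foc), se_foc):
        contrast = br - bf
        t = contrast / math.sqrt(sr ** 2 + sf ** 2)
        flagged = abs(contrast) >= min_contrast and abs(t) >= min_t
        results.append((contrast, t, flagged))
    return results

# Hypothetical difficulty estimates (logits) and standard errors for 4 items.
b_ref = [-1.0, -0.2, 0.4, 0.8]
b_foc = [-1.1, -0.3, 1.2, 0.2]
se_ref = [0.15] * 4
se_foc = [0.15] * 4

for i, (c, t, flag) in enumerate(dif_contrast(b_ref, se_ref, b_foc, se_foc), start=1):
    print(f"item {i}: contrast={c:+.2f} logits, t={t:+.2f}, DIF flagged={flag}")
```

In this example, item 3 is estimated to be 0.8 logits harder for the focal group and item 4 is 0.6 logits harder for the reference group, so both would be flagged for review.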

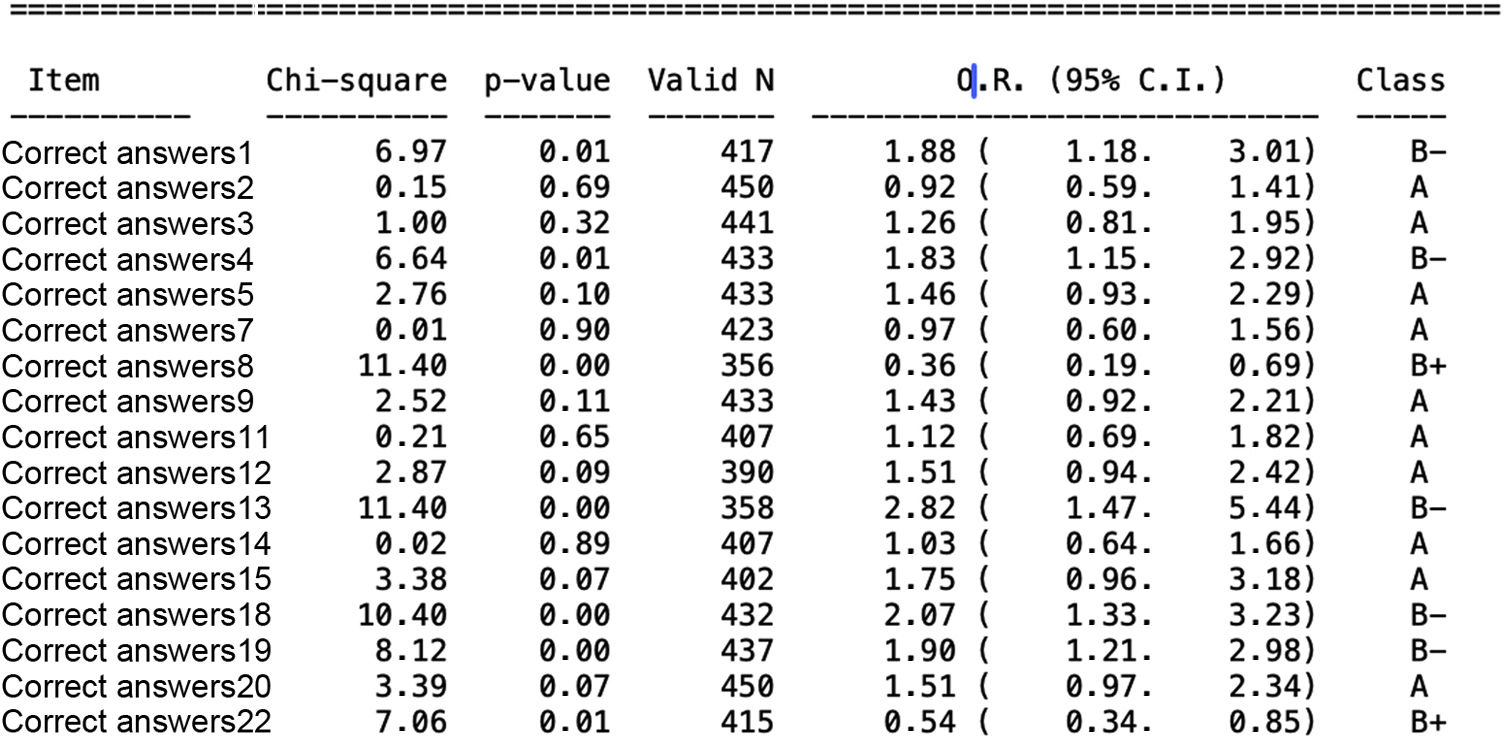

When DIF is assessed within Rasch analysis, odds ratios (ORs) can be used to measure its magnitude between groups. ORs provide a quantitative way to compare the odds that individuals from different groups (e.g., men vs. women, or different age groups) will answer an item correctly, given a similar level of ability or latent trait. ORs are therefore calculated for each item, comparing the odds of a correct answer between the two groups of interest. An OR of 1 indicates that, after adjusting for ability, both groups are equally likely to answer the item correctly. Values significantly greater or less than 1 suggest the presence of DIF, that is, that the item favours one group over the other.

Process for assessing DIF with OR in Rasch analysis

The sequence would be as follows:

- Estimating Ability: first, Rasch analysis is used to estimate individuals' abilities from their patterns of responses to the test items.
- Calculating Odds Ratios: then, for each item, the odds of answering correctly are calculated for individuals in the different groups, controlling for ability, and the odds of success in one group are compared with those in the other.
- Statistical Analysis: statistical analysis (hypothesis tests or confidence intervals) is performed to determine whether the ORs differ significantly from 1.

Interpretation based on the OR:

- OR > 1: the reference group has greater odds of answering the item correctly than the comparison group, suggesting a possible bias in favour of the reference group.
- OR < 1: the comparison group has greater odds of answering correctly, indicating a bias in favour of this group.
- OR ≈ 1: there is no significant evidence of DIF for that item between the groups compared.

When using ORs to assess DIF in Rasch analysis, it is crucial to consider both effect size and statistical significance: not every item with an OR significantly different from 1 has a meaningful practical impact on the measurement. ORs should therefore be interpreted in terms of practical relevance and in combination with other DIF measures and qualitative item analyses. Fig. 6 presents partial results of a DIF analysis using the Mantel-Haenszel test to estimate the OR of a reference group versus a focal group (1st–2nd-year students versus 3rd–4th-year students on a knowledge test). Several items present moderate DIF, labelled B+ or B− depending on which group the item favours (in this classification, A denotes negligible, B moderate and C large DIF). This helps identify items that function differently and may therefore be measuring another construct, or that show differences due to characteristics of the group in question. Such items would violate the assumptions of the Rasch model. Therefore, items that show DIF (in this case moderate, labelled B) should be eliminated.
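The Mantel-Haenszel procedure used for Fig. 6 can be sketched as follows. Respondents are stratified by ability (here by raw score, a common choice), a 2 × 2 table (group × correct/incorrect) is built in each stratum k, and the common odds ratio is α_MH = Σ(A_k D_k / N_k) / Σ(B_k C_k / N_k). Here A_k and B_k are the correct and incorrect responses in the reference group, C_k and D_k those in the focal group, and N_k is the stratum total. The ETS delta scale, Δ_MH = -2.35 ln(α_MH), is then used for the A/B/C labels. The counts and function names are hypothetical. The classification below is a simplification that uses only the magnitude cut-offs (|Δ| < 1 for A, 1 to 1.5 for B, 1.5 or more for C) and omits the significance tests required by the full ETS rules; software such as jMetrik may apply these rules and sign conventions differently.

```python
import math

def mantel_haenszel(strata):
    """Mantel-Haenszel common odds ratio across ability strata.
    Each stratum is (A, B, C, D): reference correct, reference incorrect,
    focal correct, focal incorrect. alpha > 1 favours the reference group."""
    num = den = 0.0
    for a, b, c, d in strata:
        n = a + b + c + d
        if n == 0:
            continue  # empty stratum contributes nothing
        num += a * d / n
        den += b * c / n
    return num / den

def ets_delta(alpha):
    """Transform the MH odds ratio to the ETS delta scale."""
    return -2.35 * math.log(alpha)

def ets_category(delta):
    """Simplified, magnitude-only ETS label (significance tests omitted).
    Sign convention of this sketch: '-' favours the reference group, '+' the focal group."""
    size = abs(delta)
    if size < 1.0:
        return "A"
    label = "B" if size < 1.5 else "C"
    return label + ("-" if delta < 0 else "+")

# Hypothetical counts for one item in three raw-score strata (A, B, C, D).
strata = [(30, 20, 25, 25), (40, 10, 35, 15), (45, 5, 42, 8)]

alpha = mantel_haenszel(strata)
delta = ets_delta(alpha)
print(f"alpha_MH={alpha:.3f}, delta_MH={delta:.3f}, ETS category={ets_category(delta)}")
```

With these counts, the reference group has higher odds of success at every score level, giving α_MH of about 1.61 and Δ_MH of about -1.12, which the simplified rule labels as moderate DIF favouring the reference group.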

Step 9 – Review and modification of the instrument: Based on the results of the initial analysis (steps 4, 5, 6 and 7), review and possibly modify the instrument. This may involve eliminating items that do not fit well, adjusting the response format or clarifying the wording of the items. This step may require more than one adjustment.
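Item fit statistics are one of the main inputs for deciding which items to revise or remove in this step. The sketch below assumes that person abilities and item difficulties have already been estimated for a dichotomous data set and computes, for each item, the outfit mean square (the unweighted mean of squared standardised residuals) and the infit mean square (squared residuals weighted by the model variance P(1 - P)). The data, the function names and the acceptance range of 0.7 to 1.3 are hypothetical; recommended ranges differ across the literature and by type of instrument.

```python
import math

def rasch_p(theta: float, b: float) -> float:
    """Probability of a correct response under the dichotomous Rasch model."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))

def item_fit(data, thetas, difficulties):
    """Infit and outfit mean squares for each item.
    data[n][i] is the 0/1 response of person n to item i."""
    fit = []
    for i, b in enumerate(difficulties):
        sq_resid = 0.0   # sum of squared residuals (x - P)^2
        variance = 0.0   # sum of model variances P(1 - P)
        z_sq = 0.0       # sum of squared standardised residuals
        for row, theta in zip(data, thetas):
            p = rasch_p(theta, b)
            w = p * (1 - p)
            r2 = (row[i] - p) ** 2
            sq_resid += r2
            variance += w
            z_sq += r2 / w
        fit.append((sq_resid / variance, z_sq / len(data)))  # (infit, outfit)
    return fit

def flag_misfit(fit, low=0.7, high=1.3):
    """Indices of items whose infit or outfit lies outside [low, high]."""
    return [i for i, (infit, outfit) in enumerate(fit)
            if not (low <= infit <= high and low <= outfit <= high)]

# Hypothetical abilities, difficulties and responses (6 persons x 3 items).
thetas = [-1.5, -0.5, 0.0, 0.5, 1.0, 2.0]
difficulties = [-0.5, 0.0, 0.5]
data = [[0, 0, 1],
        [1, 0, 1],
        [1, 1, 0],
        [1, 1, 0],
        [1, 1, 0],
        [1, 1, 0]]

fit = item_fit(data, thetas, difficulties)
for i, (infit, outfit) in enumerate(fit, start=1):
    print(f"item {i}: infit={infit:.2f} outfit={outfit:.2f}")
print("items to review (0-based):", flag_misfit(fit))
```

In this deliberately tiny example, items 1 and 2 are flagged for overfit (outfit below the range: their responses are more predictable than the model expects), and item 3 for underfit, since it is answered correctly by the least able persons and incorrectly by the most able. With real data, these statistics should be weighed together with sample size and the other evidence gathered in the preceding steps.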

Step 10 – Final analysis and validation of the instrument: Perform a final Rasch analysis with the revised instrument to validate its structure and determine its reliability and validity (repeating the previous steps).

Step 11 – Interpretation of findings: Interpret the results in the context of the study objectives, focusing on the quality of the measurements, the validity of the instrument, and the implications for clinical practice and future research. This involves examining several key aspects of the data and the measurement instrument. It also includes interpreting the scales, item-person maps, and information curves. Some elements to consider:

a) Model Fit: assessing how well the data fit the Rasch model, including the fit of individual items and the overall fit of the instrument. This is done using fit statistics such as standardised residuals and infit and outfit measures.

b) Unidimensionality: confirming that the set of items measures a single construct or dimension, which is critical for the validity of the instrument.

c) Local Independence: verifying that responses to the items are independent of each other after controlling for person ability, which is essential for the reliability of the instrument.

d) Parameter Estimation: interpreting the parameters estimated by the model, including item difficulty and person ability. This provides valuable information about the properties of the instrument and the characteristics of the population studied.

e) Practical Implications: finally, it is crucial to consider the practical implications of the results, including how they may inform clinical practice, nursing education or future research.
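Local independence is often checked with Yen's Q3 statistic: the correlation, across persons, between the residuals (observed minus expected response) of each pair of items. The sketch below computes Q3 for all item pairs of a dichotomous data set, with abilities and difficulties assumed to have been estimated beforehand, and flags pairs whose Q3 exceeds the average Q3 by more than 0.2. That relative cut-off is one suggestion from the literature and is used here only as an assumption, not as a universal criterion; the data and function names are hypothetical.

```python
import math
from itertools import combinations

def rasch_p(theta: float, b: float) -> float:
    """Probability of a correct response under the dichotomous Rasch model."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))

def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((u - mx) * (v - my) for u, v in zip(x, y))
    sxx = sum((u - mx) ** 2 for u in x)
    syy = sum((v - my) ** 2 for v in y)
    return sxy / math.sqrt(sxx * syy)

def yen_q3(data, thetas, difficulties):
    """Q3 for every item pair: correlation of residuals (x_ni - P_ni) across persons."""
    resid = [[row[i] - rasch_p(t, b) for row, t in zip(data, thetas)]
             for i, b in enumerate(difficulties)]
    return {(i, j): pearson(resid[i], resid[j])
            for i, j in combinations(range(len(difficulties)), 2)}

def flag_dependent_pairs(q3, margin=0.2):
    """Item pairs whose Q3 exceeds the mean Q3 by more than `margin`."""
    mean_q3 = sum(q3.values()) / len(q3)
    return [pair for pair, value in q3.items() if value - mean_q3 > margin]

# Hypothetical data: items 0 and 1 were answered identically by every person.
thetas = [-1.0, -0.5, 0.0, 0.5, 1.0, 1.5]
difficulties = [0.0, 0.0, -0.5, 0.5]
data = [[0, 0, 1, 0],
        [1, 1, 0, 0],
        [0, 0, 1, 1],
        [1, 1, 1, 0],
        [0, 0, 1, 1],
        [1, 1, 1, 1]]

q3 = yen_q3(data, thetas, difficulties)
for pair, value in q3.items():
    print(f"items {pair}: Q3 = {value:+.2f}")
print("possible local dependence:", flag_dependent_pairs(q3))
```

Because items 0 and 1 share the same difficulty and identical responses in this toy data set, their residuals are perfectly correlated (Q3 = 1) and the pair is flagged as possibly locally dependent.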

Several specialised software tools are available for performing Rasch analysis. Some of the most popular options include:

- RUMM2030® [https://www.rummlab.com.au]: a powerful tool for Rasch analysis, especially useful for analysing polytomous data and assessing the quality of item fit.
- Winsteps® [http://www.winsteps.com]:11 widely used in the Rasch analysis community, Winsteps offers a wide range of functionality for analysing dichotomous and polytomous data.
- FACETS:28 specifically designed for the analysis of multifaceted data, FACETS is ideal for studies involving multiple raters or other sources of complexity beyond persons and items.
- jMetrik® [https://itemanalysis.com]:6 a free tool that offers Rasch analysis capabilities along with other common statistical techniques.

Choosing the right software will depend on the specific needs of the study, including the type of data to be analysed, the complexity of the model, and the researcher’s preferences.

Application of Rasch analysis in nursing

In this section, we explore how Rasch analysis is applied in the discipline, highlighting relevant case studies that illustrate its practical impact.

Assessment of clinical competencies and skills

Accurate assessment of clinical competencies and skills is critical in nursing education and ongoing professional practice. Rasch analysis offers a robust framework for this assessment, enabling educators and administrators to identify areas of strength and development needs among students and nurses.

- Competency modelling: by using Rasch analysis, the complexity of clinical competencies can be modelled, differentiating between skill levels and ensuring that assessments are appropriate for the expected level of competency.
- Curricular Development: the results of Rasch analysis can guide curricular development and educational interventions, ensuring that nursing education programmes are aligned with identified competency requirements.

Development and validation of measurement instruments in nursing

The development of reliable and valid measurement instruments is another crucial area where Rasch analysis has a significant impact. These instruments are essential for nursing research, the evaluation of educational programmes and the measurement of outcomes in clinical practice.

- Instrument construction: Rasch analysis facilitates the construction of measurement instruments by providing a method to assess the unidimensionality of scales and the adequacy of items, allowing for more accurate and reliable assessment tools.
- Scale validation: through Rasch analysis, it is possible to validate existing measurement scales, refining items to improve the accuracy and clinical relevance of measurements. This includes the ability to identify items that are culturally biased or do not perform uniformly across population subgroups.

Relevant case studies

The following case studies illustrate the potential practical application and benefits of Rasch analysis in the field of nursing:

- Use and quality of reporting on Rasch analysis in nursing: Stolt et al.3 carried out an exploratory review highlighting that this methodology is not used very systematically in nursing, although its use is recommended.
- Development of instruments to measure knowledge in professionals and students in different areas: several authors have used Rasch analysis to develop and validate instruments in different areas, for example, Alzheimer's care,29 quality of life in adolescents30 or knowledge about the prevention of pressure injuries.31,32
- Instruments to measure clinical conditions: for example, the instrument developed to measure computer vision syndrome in the workplace.33 This study is also an example of combining methods from the perspectives of CCT and IRT.
- Refinement or revalidation of instruments: for example, Rasch analysis was used to reduce the number of items in the pressure ulcer scale for spinal cord injury, improving its validity and reliability.34
- Linking or matching different instruments: linking the scales of two measurement points is a prerequisite for examining change in competence over time. In large-scale educational assessments, non-identical test forms that share a number of items are often scaled and linked using item response models, as in the article published by Fischer et al.35
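The linking idea in the last case study can be illustrated with a minimal sketch. Under the Rasch model, two separate calibrations of the same items differ (apart from estimation error) only in their origin, so linking through common (anchor) items can be as simple as a mean shift. The sketch computes the linking constant as the mean difference in difficulty of the common items and uses it to place Form B estimates on the Form A scale. The item labels, values and function names are hypothetical, this is not presented as the procedure used in the cited study, and in practice anchor items showing drift or DIF would be checked and removed first.

```python
def mean_shift_link(anchor_a: dict[str, float], anchor_b: dict[str, float]) -> float:
    """Linking constant: mean difficulty of the common items on Form A minus on Form B."""
    common = sorted(set(anchor_a) & set(anchor_b))
    if not common:
        raise ValueError("no common items to link on")
    return sum(anchor_a[k] - anchor_b[k] for k in common) / len(common)

def to_form_a_scale(estimates_b: dict[str, float], shift: float) -> dict[str, float]:
    """Express Form B item (or person) measures on the Form A logit scale."""
    return {k: v + shift for k, v in estimates_b.items()}

# Hypothetical difficulties (logits) from two separate calibrations;
# item03 and item04 are the common (anchor) items.
form_a = {"item01": -1.2, "item02": 0.1, "item03": 0.9, "item04": 1.4}
form_b = {"item03": 0.4, "item04": 0.8, "item05": -0.3, "item06": 1.1}

shift = mean_shift_link(form_a, form_b)
linked_b = to_form_a_scale(form_b, shift)
print(f"linking constant = {shift:.2f} logits")
for item, value in sorted(linked_b.items()):
    print(f"{item}: {value:+.2f}")
```

After the shift, the common items sit on average at the same location on both forms, so measures from the two occasions can be compared, for example to examine change in competence over time.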

These examples show how Rasch analysis is applied to address specific challenges in nursing education and practice, from the assessment of competencies to the development and validation of measurement tools. The Rasch methodology offers a solid basis for continuous improvement in these areas, helping to raise the standards of nursing care and education.

Advantages, limitations and challenges of applying this method

To summarise, the benefits of using this methodology are as follows:

- Accurate and Objective Measurement: Rasch analysis converts ordinal responses into measurements on an interval (logit) scale, providing a solid basis for evidence-based decision making.
- Instrument Validation: it facilitates the validation and refinement of measurement instruments, helping to ensure that they are reliable and valid for assessing clinical competencies and skills in nursing.
- Comparability of Measurements: one of its most significant advantages is that it allows measurements to be compared across population groups or points in time, which is essential for longitudinal studies and the evaluation of interventions.
- Identification of Problematic Items: the detailed analysis offered by Rasch analysis identifies items that function inadequately, whether due to their difficulty, ambiguity or cultural bias, thus helping to improve the quality of measurement instruments.
- Professional and Educational Development: the results of Rasch analysis can inform curricular development and teaching strategies in nursing programmes, aligning education with the clinical competencies required in practice.

For all these reasons, Rasch analysis can be considered a valuable methodological tool, but it is not free of challenges and limitations, such as:

- Methodological Complexity: despite its benefits, Rasch analysis can be methodologically complex, and its accurate application requires the researcher to have a solid understanding of its principles and assumptions.
- Data Requirements: effective application of the Rasch model requires complete and well-structured data sets, which can be challenging in studies with high non-response rates or missing data.
- Interpretation of Results: interpreting the results of Rasch analysis, especially fit statistics and unidimensionality, can be complex and requires careful consideration.
- Limitations in Model Flexibility: although powerful, Rasch analysis can be less flexible than other IRT models when modelling data that do not fit its assumptions well. It assumes that all items in a test discriminate equally (their characteristic curves have the same slope) and that participants' responses can be modelled using a single latent dimension. These assumptions may not hold for all data sets, especially in areas where constructs are multidimensional.4
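The equal-discrimination assumption can be made concrete by comparing the Rasch ICC with that of the two-parameter logistic (2PL) model, a more flexible IRT model in which P = 1 / (1 + exp(-a(θ - b))) and the discrimination a is free for each item. The Rasch model fixes a at the same value (1 here) for all items, so its ICCs are parallel and never cross. The sketch below, with hypothetical parameters and function names, shows that 2PL curves with different discriminations do cross, so which item is "easier" then depends on the ability level.

```python
import math

def p_2pl(theta: float, a: float, b: float) -> float:
    """Two-parameter logistic ICC; a = discrimination, b = difficulty."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))

def p_rasch(theta: float, b: float) -> float:
    """Rasch ICC: the 2PL with the discrimination fixed at 1 for every item."""
    return p_2pl(theta, 1.0, b)

# Hypothetical 2PL items with the same difficulty but different discrimination (a, b).
steep, flat = (2.0, 0.0), (0.5, 0.0)

for theta in (-2.0, 0.0, 2.0):
    print(f"theta={theta:+.1f}  steep item={p_2pl(theta, *steep):.3f}  "
          f"flat item={p_2pl(theta, *flat):.3f}")
```

Below θ = 0 the flat item is more likely to be answered correctly, and above it the steep item is, whereas under the Rasch model an item with a lower difficulty is easier at every ability level. When such differences in discrimination are substantial, a more flexible IRT model may describe the data better.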

Some possible solutions to these limitations, and recommendations for improving the knowledge, dissemination and application of Rasch analysis in nursing research, are:

a) Training and Skills: promote training in Rasch analysis among nursing researchers by offering workshops, courses and online resources to improve the understanding and application of this methodology.

b) Use of Specialised Software: take advantage of software designed specifically for Rasch analysis, much of which includes guides and support to facilitate its use.

c) Data Collection Strategies: implement robust data collection strategies, such as intensive follow-up of participants and careful questionnaire design, to minimise missing or incomplete data and ensure data quality.

d) Multidisciplinary Collaboration: work with statisticians or methodologists specialised in Rasch analysis, which can help overcome some of the methodological and interpretative challenges and ensure that the model is applied correctly.

e) Consideration of Alternative Models: where Rasch analysis may not be the most appropriate choice because of the characteristics of the data, consider other IRT models that offer the necessary flexibility, or even models based on CCT. Combining IRT and CCT methodologies may also yield more robust results.

Rasch analysis provides a valuable methodology for the assessment of clinical competencies and skills, as well as for the development and validation of measurement instruments in nursing. Through its practical application, accurate and objective measurements can be obtained, facilitating evidence-based decision making in nursing education and practice. Featured case studies illustrate the potential of Rasch analysis to improve the quality of patient care and the effectiveness of nursing education programmes, underscoring its importance as a tool in nursing research and practice.

The application of Rasch analysis in nursing is a field rich in opportunities for future research. Some promising pathways include:

- Innovation in Measurement Instruments: development of new measurement instruments for emerging areas of nursing practice and care, using Rasch analysis to ensure their validity and reliability.
- Longitudinal Assessment of Competencies: application of Rasch analysis in longitudinal studies to assess the development of competencies over time, identifying factors that contribute to continued professional growth.
- Integration of Digital Technologies: exploring how digital technologies and big data can be integrated with Rasch analysis to improve data collection and analysis in nursing research and practice.
- International Comparative Studies: using Rasch analysis to facilitate international comparative studies on nursing education and practice, contributing to the global harmonisation of standards and practices.

During the preparation of this study, the authors used "ChatGPT Plus/Academic Assistant Pro®" to improve quality and relevance and to adjust the word count. After using this tool/service, the authors reviewed and edited the content as necessary and assume full responsibility for the content of the publication.

Conflict of interest

The authors declare that they have no conflict of interest in relation to this manuscript.