In today's rapidly evolving digital landscape, the substantial advance and rapid growth of data presents companies and their operations with a set of opportunities from different sources that can profoundly impact their competitiveness and success. The literature suggests that data can be considered a hidden weapon that fosters decision-making while determining a company's success in a rapidly changing market. Data are also used to support most organizational activities and decisions. As a result, information, effective data governance, and technology utilization will play a significant role in controlling and maximizing the value of enterprises. This article conducts an extensive methodological and systematic review of the data governance field, covering its key concepts, frameworks, and maturity assessment models. Our goal is to establish the current baseline of knowledge in this field while providing differentiated and unique insights, namely by exploring the relationship between data governance, data assurance, and digital forensics. By analyzing the existing literature, we seek to identify critical practices, challenges, and opportunities for improvement within the data governance discipline while providing organizations, practitioners, and scientists with the necessary knowledge and tools to guide them in the practical definition and application of data governance initiatives.

The ever-expanding volume of data is a key asset for companies (Bennett, 2015). Data can be regarded as a “secret weapon” that determines an organization's capability to make informed decisions and maintain competitiveness in ever-changing and volatile markets, including the financial and industrial sectors (Ragan & Strasser, 2020, p. 1). To effectively manage their data, organizations must acknowledge and recognize the impact that robust and efficient data governance can have on achieving success and making well-founded decisions (Hoppszallern, 2015).

Throughout the years and as data and technology have advanced, the need to find solutions that ensure adequate data governance has grown significantly (Dutta, 2016). As part of the data governance procedure, strategy and actions, it is essential for data to be governed in a way that guarantees accuracy, integrity, validity, and completeness. However, many organizations are currently approaching data governance solely through the narrow lens of non-transactional data (data at rest). They are overlooking the risks associated with transactional data that may hinder their success and ability to be competitive in the market (Dutta, 2016). Hence, this narrow approach exposes organizations to significant risks, namely information errors. These errors can lead to increases in costs, reputational and financial losses, compliance risks, and others that may arise from the organizations’ inability to define an adequate data governance and quality framework (Dutta, 2016).

This field is crucial for an organization's success and any activity performed by data governance and quality practitioners. Recent trends demonstrate that the world and its market are being characterized by an increase in compliance and regulatory requirements, advances and changes in the technology and data landscape, and a heightened focus on excellence, financial governance, and reputation (Dutta, 2016). These trends are compelling organizations to reassess their data governance initiatives and to define and establish frameworks that address their specific data governance needs, providing management with a set of processes that allow for the governance and quality assurance of transactional and non-transaction data (Dutta, 2016). Nonetheless, since data governance corresponds to a complex and evolving field that can be considered one of an organization's most imperative and intricate aspects, there is still a gap to be filled by companies. This gap relates to the necessity for companies and their management to integrate and elevate data governance in their business operations to the highest level (Alhassan et al., 2019a; Bernardo et al., 2022; Johnston, 2016; Sifter, 2017).

According to Zorrilla and Yebenes (2022), data governance is not just a part but a cornerstone of digital transformation, particularly within the context of the Industrial Fourth Revolution (Industry 4.0, also known as I4.0). This revolution represents a significant shift in how organizations manage and control the value derived from the entire life cycle of a given product, or service. These authors emphasize that the digitalization of the industrial environment, achieved through the integration of operational and information technologies (OT and IT), is heavily influenced by effective data governance. This includes the use of cyber-physical systems, the Industrial Internet of Things (IIOT), and the application of real-time data generation for decision-making and insight-gathering.

Given the interconnected and interdependent nature of data, people, processes, services, and cyber-physical systems, the depth and complexity of digital transformation becomes clear. In line with this, for data to become an organization's competitive edge, it must be managed and governed like any other strategic asset, if not more so. Therefore, implementing a data governance framework is essential. This framework should define who has the authority and control to make decisions about data assets, facilitate shared and communicated decision-making, and establish the necessary capabilities within an organization to support these functions (Zorrilla & Yebenes, 2022).

Similarly, organizations must recognize that developing, designing, implementing, and continuously monitoring a data governance program and its initiatives requires a significant investment of resources, including personnel, time, and funds. Besides, building a robust data governance program—including its model, culture, and structure—takes time to fully address the complete needs of an organization, including business operations, risk management, compliance, and choices (Bernardo et al., 2022; Lancaster et al., 2019; Sifter, 2017). Bennett (2015) also emphasizes the vital need for companies to comprehend data governance, its components, standards, and the criteria that the framework must meet. Without this comprehension, enterprises may risk mismanaging data privacy and the information duties they hold and manage, which could lead to financial, operational, and reputational harm (Bernardo et al., 2022).

In a similar vein, Lee (2019) points out that while organizations and their boards are focused on improving cybersecurity, they often neglect to invest sufficient effort and resources in defining and developing a more robust and extensive data governance framework. Such a framework should address the accuracy, protection, availability, and usage of data. Although data governance intersects with cybersecurity, it is a broader field that encompasses additional components. These include concerns that should be considered as high-priority objectives for organizations, such as data management and governance, quality principles, the definition of data roles, responsibilities, processes, and compliance with data regulations and privacy laws (Bernardo et al., 2022; Lee, 2019). In reality, organizations have historically neglected and overlooked data governance, providing this field with minimal attention due to the high level of investment and complexity that it would require (Bernardo et al., 2022; Janssen et al., 2020). Similarly, corporations are refocusing and shifting their efforts to address data governance concerns, recognizing it as one of the top three factors that differentiate successful businesses from those that fail to extract value from their data. As a result, companies are developing their stakeholder positions and roles so that they can emphasize the value of technology, people, and processes within their data governance programs (Bernardo et al., 2022; Janssen et al., 2020).

Moreover, the literature confirms that many businesses across various industries and markets lack a sound data governance and management framework, structure, and plan that could shield them from potential harm, namely data disasters, losses, and system failures (Bernardo et al., 2022; Johnston, 2016; Zhang et al., 2016). Also, some authors emphasize how vital it is for enterprises to define robust auditing and assurance procedures to enhance their data governance and ensure that they are implemented in a productive, approach-oriented, continuous, and compliant manner (Bernardo et al., 2022; Johnston, 2016; Perrin, 2020). Furthermore, Cerrillo-Martínez and Casadesús-de-Mingo (2021) suggest that while this field has great potential, literature sources and guidelines on the subject are still “scarce and generally excessively theoretical.”

The literature alerts the community to the fact that businesses are more focused on experimenting with and utilizing artificial intelligence than on ensuring the quality of the data life cycle. This neglect includes the processes of acquiring, collecting, managing, using, reporting, and safeguarding, or destroying data—steps that are crucial for establishing a solid foundation for artificial intelligence to be used (Bernardo et al., 2022; Janssen et al., 2020). Even though these stages of data quality require a tremendous amount of time, people, and effort, companies tend to give them minimal attention. In reality, companies should prioritize developing and delivering initiatives that would allow them to identify the critical data sets, understand their nature and sources, track their flow through people, processes, and systems, and enhance their knowledge on data governance and quality (Bernardo et al., 2022; Janssen et al., 2020).

In addition, data-dependent activities such as data assurance and digital forensics analysis are strongly affected by an organization's maturity in governing data. Thus, organizations should primarily build and define a solid data governance framework before conducting rigorous digital forensics and data assurance analysis. In fact, only after setting up this data governance framework should organizations engage in forensics and data assurance tasks, because doing so can potentially improve their daily activities and operations, increase and improve data quality, and strengthen data-dependent activities such as reporting and decision-making. Otherwise, without proper data governance, organizations risk making poor decisions based on inadequate or inaccurate data (Bernardo et al., 2022; Ragan & Strasser, 2020).

The challenges associated with the data governance field are growing daily, particularly due to the relentless increase in data and the ongoing race to improve efficiency and competitiveness in the market (Paredes, 2016). Companies are concentrating on identifying roles and responsibilities, such as the Chief Data/Digital Officer (CDO), to lead and govern their data governance frameworks (Bennett, 2015; Bernardo et al., 2022). Ragan and Strasser (2020) emphasize the need for organizations to nominate and design a Data Czar, or other role to oversee their data governance initiatives. However, many firms and stakeholders resist this change due to the complexity and high investment required for a data governance framework (Bernardo et al., 2022; Ragan & Strasser, 2020). Additionally, without a comprehensive understanding of the business and data flow, organizations will face challenges in defining leadership positions, roles, and responsibilities necessary to govern their data and ensure their strategy is effectively implemented. Nonetheless, this overall process is inherently complex because there is no single method, framework or approach to data governance that fits all organizations in a standardized manner (Bernardo et al., 2022; Paredes, 2016; Ragan & Strasser, 2020).

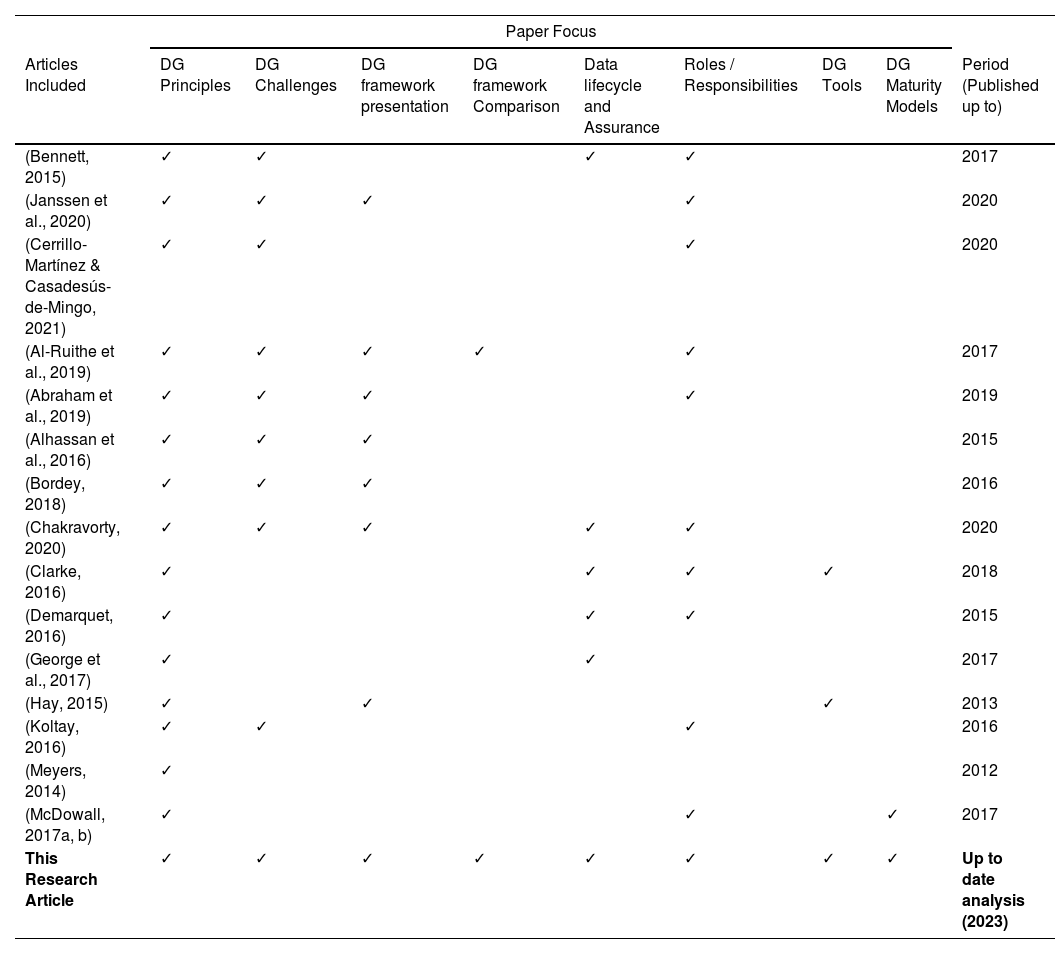

Related studiesThis section aims to summarize previous publications related to literature reviews, including systematic ones, on data governance topics. Sixteen papers were analyzed, revealing that they all focused on defining data governance principles and foundations. The analysis showed that the majority address general aspects of this field, namely key principles (16/16 articles), challenges (10/16 articles), and roles and responsibilities (11/16 articles). However, there was limited exploration of more in-depth essential topics, including: i) the exploration of data governance frameworks and their comparisons (1/16 articles); ii) the data lifecycle and assurance (6/16 articles); iii) analysis on existing data governance tools (3/16 articles); and iv) exploration of data governance maturity models and their impact (2/16 articles).

Moreover, we found that only half of the articles presented a data governance framework, and only one paper, Al-Ruithe et al. (2019), included a comparison of different frameworks. This paper is the closest one related to our work, particularly in its presentation and comparison of data governance frameworks. However, it did not include the relationship between these frameworks with the field's key components that our work intends to explore, such as structures, responsibilities, existing tools, lifecycle, and maturity models.

Much of the research literature was provided and published before 2020. We verified that of the 16 papers reviewed, approximately 69 % were dated and included papers published by 2017, 12 % included papers released by 2019, and the remaining 19 % by 2020. Our research, which was conducted using a thorough and robust process, consists of the latest publications on the subject available at the time of writing. We employed an extensive research process, utilizing relevant databases and rigorous methodologies such as PRISMA and a bibliometric analysis of the data obtained, minimizing the likelihood of not including relevant publications.

Table 1 shows that while most literature reviews focus mainly on the theoretical aspects of data governance, some do address specific domains, such as the data lifecycle, roles and responsibilities, and framework presentation. However, we did not find any article that comprehensively covers all these domains and establishes relationships between them, such as the analysis of multiple frameworks alongside data maturity models. Therefore, Table 1 illustrates our proposal for a novel and comprehensive approach to understanding the critical domains of data governance. This inclusive and accessible approach is intended not only for data governance practitioners and organizations but also for anyone seeking a deeper understanding of the field. It covers a wide range of topics, including data governance concepts and principles, challenges, a framework presentation, a comparison and analysis, the data lifecycle and assurance, existing functions and structures within a data governance framework, data governance tools, and maturity models.

Analysis of papers focusing on the review of the various domains of Data Governance (DG).

| Paper Focus | |||||||||

|---|---|---|---|---|---|---|---|---|---|

| Articles Included | DG Principles | DG Challenges | DG framework presentation | DG framework Comparison | Data lifecycle and Assurance | Roles / Responsibilities | DG Tools | DG Maturity Models | Period (Published up to) |

| (Bennett, 2015) | ✓ | ✓ | ✓ | ✓ | 2017 | ||||

| (Janssen et al., 2020) | ✓ | ✓ | ✓ | ✓ | 2020 | ||||

| (Cerrillo-Martínez & Casadesús-de-Mingo, 2021) | ✓ | ✓ | ✓ | 2020 | |||||

| (Al-Ruithe et al., 2019) | ✓ | ✓ | ✓ | ✓ | ✓ | 2017 | |||

| (Abraham et al., 2019) | ✓ | ✓ | ✓ | ✓ | 2019 | ||||

| (Alhassan et al., 2016) | ✓ | ✓ | ✓ | 2015 | |||||

| (Bordey, 2018) | ✓ | ✓ | ✓ | 2016 | |||||

| (Chakravorty, 2020) | ✓ | ✓ | ✓ | ✓ | ✓ | 2020 | |||

| (Clarke, 2016) | ✓ | ✓ | ✓ | ✓ | 2018 | ||||

| (Demarquet, 2016) | ✓ | ✓ | ✓ | 2015 | |||||

| (George et al., 2017) | ✓ | ✓ | 2017 | ||||||

| (Hay, 2015) | ✓ | ✓ | ✓ | 2013 | |||||

| (Koltay, 2016) | ✓ | ✓ | ✓ | 2016 | |||||

| (Meyers, 2014) | ✓ | 2012 | |||||||

| (McDowall, 2017a, b) | ✓ | ✓ | ✓ | 2017 | |||||

| This Research Article | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | Up to date analysis (2023) |

In this article, we address and justify the existing gap in characterizing the foundations of data governance, its evolution, and its maturity within enterprises and among its practitioners, which has been highlighted by several authors in the literature. One of the major challenges is the insufficient consideration and awareness of the importance of data governance and its key concepts, coupled with a lack of sufficient literature sources to guide and support organizations. To address this gap, we provide a detailed analysis of the data governance field, covering all previously described components and examining the relationship between data governance and other data-dependent fields, such as data assurance and digital forensics.

Throughout this article, we explore the relationship between data governance and two different but connected data-dependent fields: data assurance and digital forensics. Data assurance focuses on ensuring the quality and reliability of data throughout the lifecycle, including collection, processing, storage, and reporting stages. Digital forensics is a relatively new discipline within forensic science that offers rigorous methodologies and procedures for data examination and evaluation. This field can be applied in various areas beyond judicial proceedings, providing valuable support for organizations. Our primary reason for this focus is based on the fact that these two areas are vital and represent some of the most novel and emerging areas for the success of data governance. Moreover, both fields, alongside data governance, share a common focus on maintaining data integrity, completeness, and security throughout the data management lifecycle. In addition, digital forensics relies heavily on robust data governance policies to ensure data integrity, transparency, and authenticity. Meanwhile, data assurance focuses on complementing the data governance field with a set of mechanisms to continuously assess, validate and safeguard data quality. This ensures organizations remain in compliance with internal policies, current laws and regulations, and international standards.

As Grobler (2010a) emphasizes, the field of digital forensics can support an organization's operations. It specifically helps management and data users achieve the organization's data strategy, increases their ability to use digital resources, and complements the role of technology and information within the business context. Grobler further states that the interoperability of information systems and the increasing frequency of incidents heighten the need for organizations to leverage digital forensics in establishing their data governance framework (Grobler, 2010a, b).

Similarly, the literature highlights that data governance effectiveness is not solely dependent on the quality of the data, but rather on the absence of well-established policies and procedures, the organization's inability to comply with these standards and the lack of monitoring mechanisms such as the Key Performance Indicators (KPIs) and Key Risk Indicators (KRIs). As a result, organizations must establish methods to ensure that their data assets and the data used within their specific context have their quality attributes assured (Cheong & Chang, 2007; Hikmawati et al., 2021). Likewise, data assurance is closely related to the data governance field because it provides features for analyzing, validating, and verifying data integrity, quality, and compliance. This process helps prevent errors and ensures adherence to policies, standards, and relevant legislation. Therefore, we believe that by exploring digital forensics and data assurance, organizations can achieve a more robust and effective data governance. This will enable companies to: i) implement precise data collection methods, including the identification of relevant data and sources while preserving data quality attributes such as accuracy, consistency, completeness, availability and relevance; ii) enhance their data extraction capabilities using tools, especially automated ones, to preserve data assets and ensure authenticity of the gathered data; and iii) conduct thorough analyses supported by documented data lineage and generate reports based on high-quality data (Martini & Choo, 2012).

It is important to note that data governance interacts with other relevant disciplines and complementary areas, such as data security, business analytics, and artificial intelligence. However, while these areas are related to data governance, our comprehensive analysis indicates that the literature covers data governance more extensively than the fields of digital forensics and assurance, which are considered more novel and emerging.

This study includes a systematic literature review and an in-depth bibliometric examination of the aforementioned topics. It identifies key issues, opportunities, and challenges within these fields and clearly defines the primary research question as follows:

“How to conduct an extensive methodological systematic review to define, design, and enhance a data governance program - breaking through the fundamentals of Data Governance, Data Assurance & Digital Forensics Sciences.”

While seeking the application of the four different stages over the systematic literature review, the purpose of this study is to clearly answer the research questions (RQ) posed, namely:

- •

RQ1: What are the key challenges and opportunities in data governance, and what benefits and issues can be learnt from successful implementations?

In addressing Research Question 1, we focus on analyzing the number of benefits and challenges associated with the definition and implementation of a data governance framework, particularly through real case studies of organizations that have undergone this process. We believe that the benefits and opportunities in this field, outweigh the number of challenges currently faced. Nonetheless, it is crucial to understand what these challenges mean for an organization and what lessons can be learned from existing case studies.

- •

RQ2: What is the current level of maturity and background surrounding the topic of data governance?

For Research Question 2, we aim to analyze the current state of the data governance field and the literature around it to determine its maturity level. Consequently, we examined what the literature identifies as key aspects of the data governance framework that led to greater maturity levels, including existing frameworks, data stewardship roles, and consistent data management practices.

- •

RQ3: What are the current methodologies to support a data governance program and assess its maturity level?

For Research Question 3, we aim to identify the current and different methodologies that support the data governance field, including different frameworks and maturity assessment techniques. We believe that these practices will help organizations evaluate their current practices, identify gaps, and set objectives for improvement, ultimately leading to a more robust data governance environment.

- •

RQ4: What significant baselines from other fields can enhance data governance activity, structure, and archaeology?

Research Question 4 analyzes and explores the foundational principles of other data-related fields whose synergies can enhance an organization's data governance program, with a particular focus on data assurance and digital forensics. We believe that these connections, which have not yet been fully explored by the academic community, could lead to improved data quality management and more precise analytics techniques, offering deeper insights into strategic decision-making.

- •

RQ5: What are the main obstacles and constraints that the data governance discipline is currently facing and may face in the future?

Research Question 5 seeks to showcase the advantages of data governance through real-life case studies of successful data governance frameworks. It also aims to identify the obstacles and challenges organizations face in this field, such as resistance to change, the complexity of integrating diverse data systems, and a lack of specialized skills and management support. Additionally, it considers future challenges that may involve managing growing data volumes and ensuring data privacy and security in an increasingly digital and interconnected environment.

By answering these questions, this article aims to inform and demonstrate to the community how to break through the essential concepts of the data governance field, ultimately fostering its quality. At the same time, we seek to raise awareness and offer a different approach and perspective to understanding the main challenges faced by organizations. We believe that defining these research questions will bring added value to these fields, benefiting both specialists and organizations. This will lead to the definition of four main objectives, which correspond to the following statements:

- •

OB1: Identify, characterize, and evaluate the current environment, namely the latest and most appropriate data governance tools and methodologies, to lay the foundation for OB2, OB3, and OB4;

- •

OB2: Identify the importance of data governance frameworks that provide a sustainable and robust foundation for data governance programs, enabling organizations to obtain a fully committed and up-to-date environment;

- •

OB3: Comprehend the available tools, maturity assessment procedures, and methods, and explore how organizations can leverage them;

- •

OB4: Acknowledge, classify, and compare the differences and similarities among existing tools and methodologies regarding data governance and data quality to find potential barriers and synergies for future exploitation.

These objectives add value to the data governance field by identifying and addressing existing gaps, raising awareness on this field to any researcher and practitioner, and supporting broader goals. Additionally, this work contributes to goal 9 of the UN Global Goals (Industry and Infrastructure) which aims to expand and increase scientific research, enhance the technological capabilities of industrial sectors worldwide, and promote innovation by 2030 (Denoncourt, 2020; United Nations, Washington, DC, 2020).

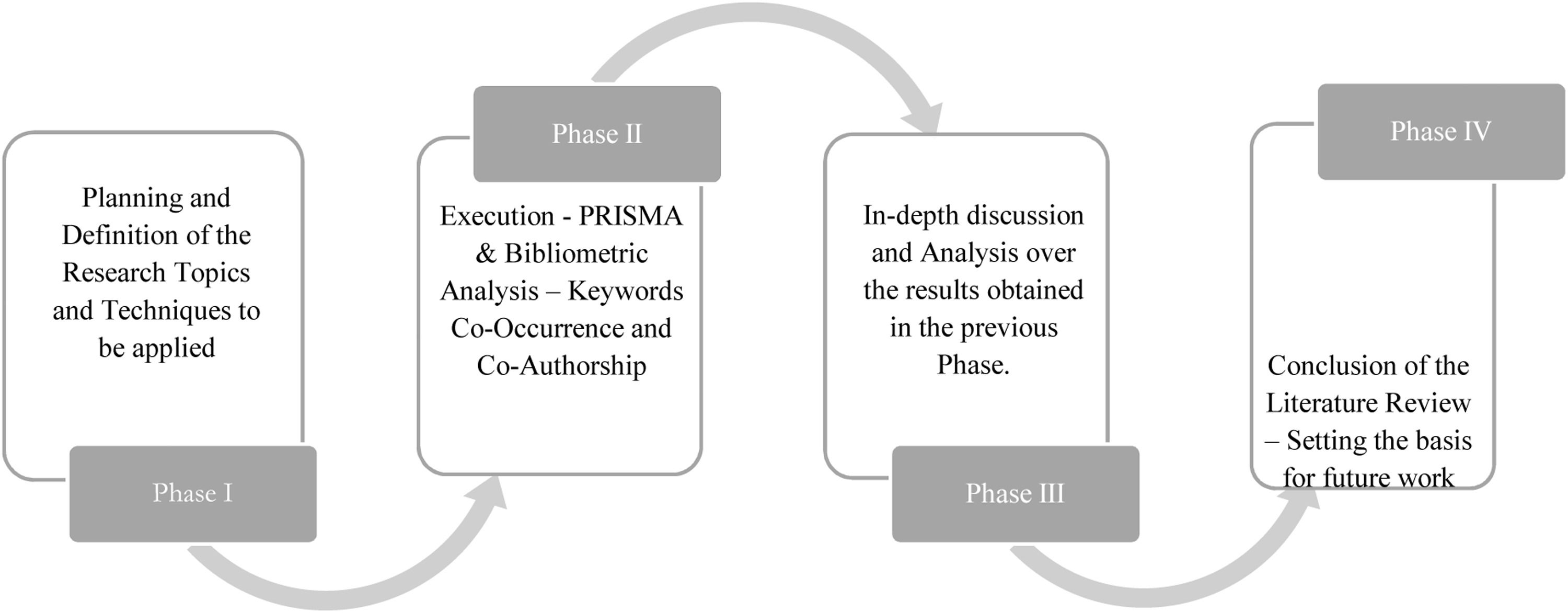

Phase I – Data, methods and planningGiven the complexity of the data governance field, a systematic review of the literature is essential for an in-depth analysis. To achieve this, we employed several methodologies to collect information and data that met pre-defined qualifying criteria, aiming to address the research questions and objectives effectively. As illustrated in Figs. 1 and 2, we structured the article and research into four stages: i) planning and definition, ii) execution of the systematic literature review and its techniques, iii) analysis and discussion of the findings, and iv) conclusions and recommendations for future work.

Likewise, the details for Phase II are presented in Fig. 2, which includes the application of the PRISMA methodology, full-text analysis, scrutiny methods, quality assessment, and bibliographic mapping of the data analyzed.

The theoretical background was established using the well-established systematic literature review method known as PRISMA (Preferred Reporting Items for Systematics and Meta-Analyses). This approach was employed to identify and examine relevant literature in any form that could support this research. It also provided access to the most up-to-date scientific publications encompassing knowledge that is already available and validated by the community (Okoli & Schabram, 2010). The literature review process aims not only to summarize previous concepts and findings but also to present new evidence. This evidence is derived from consolidating all the activities in this article, including the PRISMA method, annotated bibliography, quality assessment, and bibliometric network analysis (Hong & Pluye, 2018).

Similarly, PRISMA is designed to provide scientific documentation on research (Moher et al., 2015). It involves a 27-item checklist containing four main steps that comprise its statements, with the primary goal of aiding researchers improve systematic literature reviews (Moher et al., 2009). In this context, the main goal is to compile and provide an overall analysis on data governance, data assurance and digital fields by scrutinizing the available literature (Palmatier et al., 2018; Snyder, 2019). By integrating different perspectives, we address the research questions and identify areas needing further investigation (Snyder, 2019). As a result, the theoretical background was developed to facilitate more comprehensive research in these fields. Moreover, this structure remains adaptable, allowing for the inclusion of additional literature that may be appropriate for this research. Finally, the PRISMA application can guide the search process using different keyword queries across publication databases (Moher et al., 2015).

It is crucial to understand that the broad range of information available across various databases and levels of significance can sometimes mislead researchers. The literature indicates that with the rapid evolution of Information and Systems Technology, there are now an almost limitless number of open and closed-access publications, journals, search engines, and databases. This abundance necessitates a structured methodological literature review process to be able to define levels and eligibility criteria for articles to be included in the theoretical background review (Bannister & Janssen, 2019; Smallbone & Quinton, 2011).

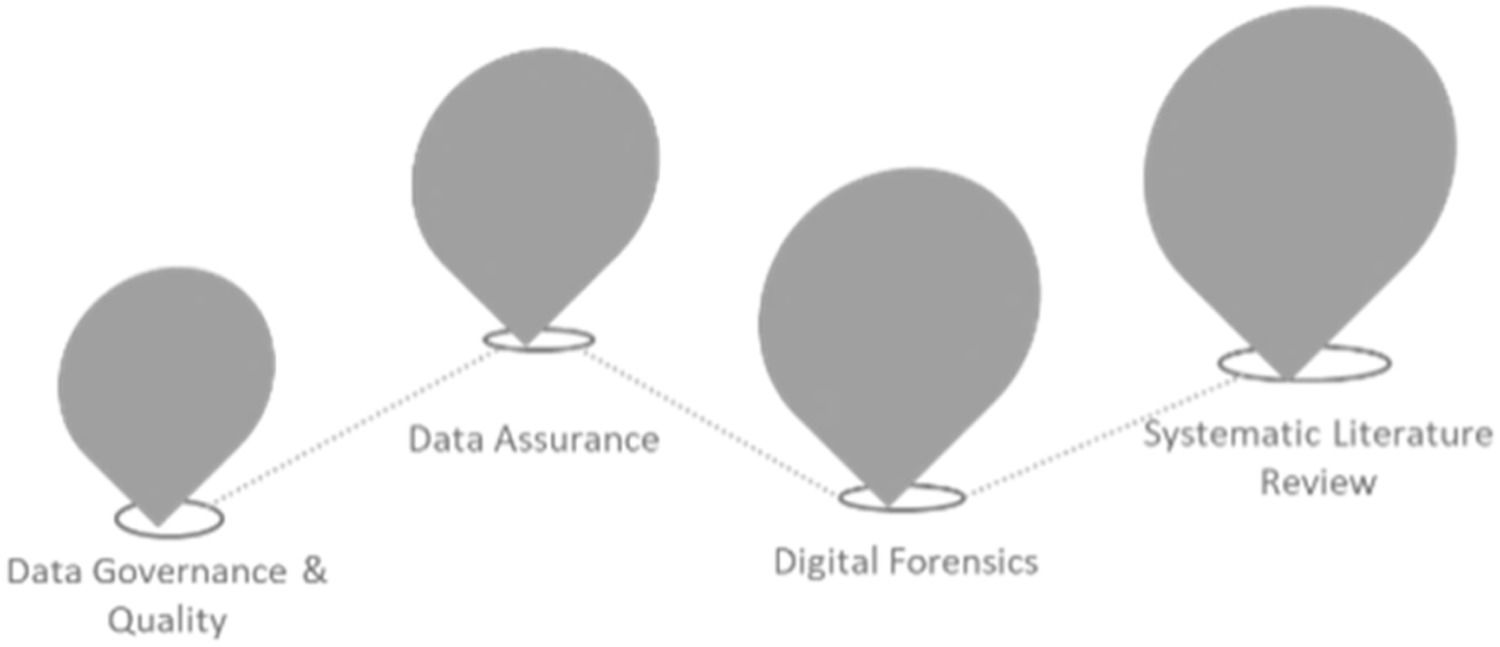

Additionally, considering the initial state-of-art and background review, we recognize that rapid advances and adoption in the fast-paced IT industry have impacted these fields. This has introduced several numerous prospects and challenges that, although prominent in this field, are not exclusive to it. Therefore, an essential step in the literature review is to include existing studies and research and outline the roadmap for analysis (Snyder, 2019). The review roadmap focused on the topics presented in Fig. 3, namely:

Phase II – ExecutionSystematic literature review - PRISMA applicationUsing PRISMA, we identified and collected publications that meet specific predefined criteria, represented by variables such as the search query, database reference, time frame, publication years, language, and other relevant factors. In this first step, scholarly studies and literature considered during the exploration and search process are retrieved from the general database “EBSCOhost Online” (the primary research base). Following PRISMA's guidelines, the SLR, illustrated in Fig. 4, encompassed four main steps: 1) Identification, 2) Screening, 3) Suitability, and 4) Inclusion.

Consequently, a flowchart was produced and adapted from the R package developed and presented by Haddaway et al. (2022) for producing PRISMA flowcharts. The search query and its expression were designed by considering publication characteristics, namely their abstract, title, and keywords. The expression was applied by including Boolean logical operators, i.e., “AND”/“OR,” to establish logical relationships. For Stage 1, Identification, the subsequent search query was formalized and applied to target publications containing specific terms, or expressions in their abstract, title, and keywords, including the following:

(“data governance” OR “Data Assurance and Governance” OR “Data Forensics and Governance” OR “Data Govern” OR “data governance & Quality” OR “data governance and Assurance” OR “data governance and Forensics” OR “data governance and Quality” OR “Data Quality & Governance” OR “Data Quality and Governance” OR “Govern of Data” OR “Digital Forensics” OR “Digital Forensic”).

The search expression outlined above focused on the main topics and any derivations that can be obtained from these terms. Also, the search process was conducted in November 2022 using EBSCOhost Online and its advanced search engine as the primary database. Google Scholar was also used as a secondary database to identify additional relevant publications beyond what was found on EBSCOhost. The analysis of these sources was carried out in 2023.

The PRISMA application assisted in creating a flowchart that represents and includes each stage of the methodology: Stage 1 (Identification), Stage 2-8 (Screening and exclusion criteria), Stage 9 (Eligibility), and Stage 10 (Inclusion). In Stage 1, the search query identified a total of 38,928 records through database searches in EBSCOhost Online.

Given the total number of records, it was essential to define the screening and exclusion stages (Stages 2-8) to filter out studies that adhered to the pre-established exclusion criteria. These criteria included, in the early stage (Stage 2), the requirement that publications fall within the date range from 1 January 2014 to 30 November 2022, resulting in the exclusion of 25,332 publications that fell outside of this range. In Stage 3, a total of 13,596 articles were screened to retrieve only English-language publications, excluding 669 articles. Stage 4 focused on removing publications that were not accessible for analysis and in full text, addressing the 12,927 articles from the previous run and eliminating 3,831 publications. During this screening process, we excluded references that, despite appearing in the search query, lacked available documentation, or full-text resources for our full-text review. Despite these necessary exclusions, our literature review included what we believe are the most relevant articles on the topics. We considered it essential to filter these types of references to maintain the integrity and reliability of the literature review, ensuring that it only contains accessible and verifiable references for further consultation by researchers, though this approach may limit the scope of the review in some cases. Moreover, Stage 5 focused on analyzing the 9,096 articles retrieved so far. In this Stage, the primary task was to remove duplicate studies, i.e., instances where the exact same publication appeared more than once. This process led to the exclusion of 1,631 articles, leaving 7,465 articles to be analyzed in Stage 6. Here, the main goal was to filter and eliminate studies that did not have an abstract section, or were categorized as editorial columns, observations, preludes, book volumes, reviews, and workshops, resulting in the exclusion of 4,015 studies.

This process resulted in 3,450 articles obtained for the run in Stage 7, which focused on excluding publications with abstracts that were clearly outside the research topic, or not directly related to the analysis. In this Stage, 2,988 articles were removed, leaving 462 articles to be included in Stage 8. As a result, in Stage 8, the main objective was to exclude articles whose abstracts were not closely connected to, or explicit about the research subject, resulting in the exclusion of 220 records and leaving 242 for Stage 9, the eligibility phase. Consequently, these 242 articles were retrieved for a full-text, in-depth assessment of their content, relevance, and importance to the studied topics. After this full-text analysis in Stage 9, 155 articles were excluded, leaving 87 articles to be incorporated in the literature review. Additional records may be included if any article is later identified as a vital source of information for this paper.

The final 87 articles obtained through the PRISMA process were distributed relatively evenly across different publication dates. This ensures that the literature review encompasses both older and more recent articles, providing the researcher with a broader perspective on the topics under analysis.

Finally, after applying the PRISMA methodology at each step, presented in Fig. 4, 87 articles were deemed eligible and incorporated into the literature review.

To assist in managing the bibliography and references, the BibTeX information of these articles was imported to Zotero, an open-source reference management tool. Consequently, this tool played a crucial role in the quantitative and qualitative analysis of the literature and in managing the references used throughout this paper (Idri, 2015).

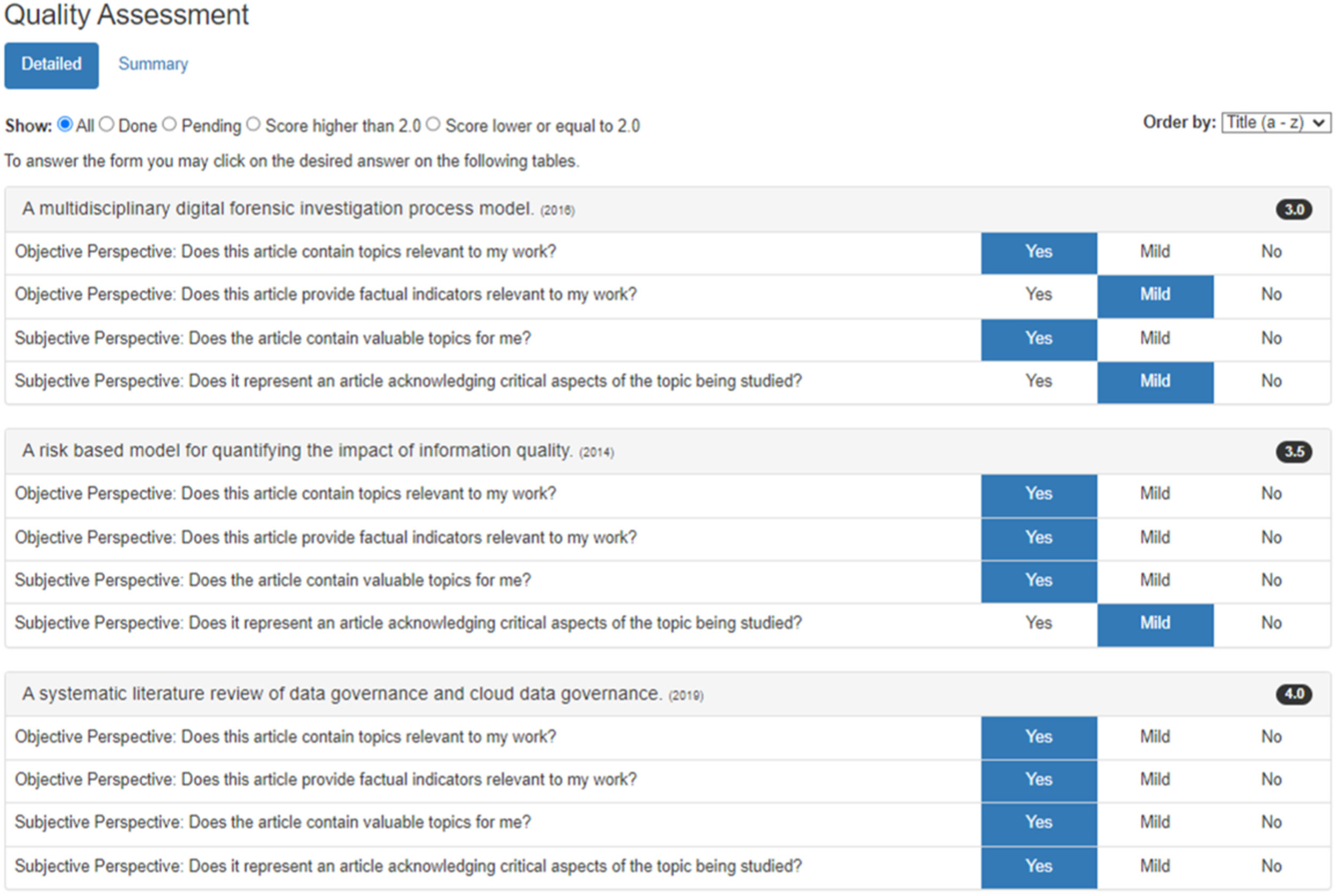

Bibliography quality assessmentIn addition to applying PRISMA, we conducted further analysis by developing a quantitative quality assessment metric to support the literature review. The metric scored articles on a scale from 0.0 (Poor) to 0.5 (Mild) and 1.0 (Good), with a maximum score of 4.0 per article. Articles scoring above the cut-off score of 2.0 were considered more relevant for the literature review, while those scoring below 2.0 were excluded.

This assessment was fully supported by a detailed full-text analysis, with scores assigned based on the criteria presented in the framework shown in Fig. 5:

- •

Objective: Does this article contain topics relevant to my work?

- •

Objective: Does this article provide factual indicators relevant to my work?

- •

Subjective: Does the article contain valuable topics for me?

- •

Subjective: Does it represent an article acknowledging critical aspects of the topic being studied?

Onwuegbuzie and Frels (2015) state that the quality assessment and its metric will be used to address gaps identified in the literature review process and to generate a structure that reflects the researcher's value system. Winning and Beverley (2003) argue that a key concern for researchers when performing reviews should be the trustworthiness and authenticity of the methodologies applied in the theoretical background review process. Therefore, these assessment techniques were designed to enhance the thoroughness and transparency of this process. As Bowen (2009) suggests, the researcher, being the subjective interpreter of information and data in publications, should strive for an analysis process that is as accurate and transparent as possible.

Accordingly, the literature identifies several gaps that affect the literature review process, including: 1) insufficient description of the document analysis and the processes used to perform the literature review; 2) inadequate understanding of what is already known regarding a particular field, leading researchers to investigate research questions on fields and topics that have already been thoroughly analyzed by others; 3) lack of consideration of researcher bias, which can lead to the selection of studies that align with the researcher's perspective; and 4) poor understanding of existing methodological frameworks and techniques regarding how they were applied and how conclusions were derived (Bowen, 2009; Caldwell & Bennett, 2020; Deady, 2011; Levac et al., 2010).

Various authors emphasize the need for researchers to include a quantitative quality evaluation approach in their review process. This approach adds a robust and rigorous technique that extends beyond traditional qualitative assessments, particularly by uncovering and analyzing the publications’ metadata and scientific information (Campos et al., 2018; Mackenzie & Knipe, 2006; Major, 2010; Niazi, 2015).

Consequently, we conducted a full-text analysis of the 87 articles, evaluating both objective and subjective perspectives. As a result, Table 2 presents the quality assessment of all 87 articles that reached this phase. As shown in Table 2, 12 articles were assessed and scored below the cut-off score of 2.0 and were therefore excluded because they were deemed irrelevant, or of no value for the topics under analysis. The quality assessment resulted in 75 articles being selected for the literature review and for analysis in the bibliometric network analysis, which is detailed in Table 2.

Bibliographic references – quality assessment.

As shown in Fig. 6, the 75 publications are distributed according to the defined period. This distribution provides a balance of mature and recent insights and knowledge, proposing a more innovative approach to the fields under research.

Bibliometric networks analysisAlongside PRISMA and the in-depth quality assessment, we conducted a bibliometric analysis of the literature. This analysis examined the co-occurrence of keywords within publications, the correlation between authors, and the most cited articles. The VOSviewer application was used to produce and visualize bibliometric networks, allowing us to explore data through visualization tools and techniques, such as clustering co-authorship and keyword co-occurrence. Bibliometric activity is described in the literature as a statistical method for validating and evaluating scientific publications—including articles, books, chapters, conference papers, or any other scientific works—using statistics and metrics to effectively measure their impact on the scientific community, as well as the relationships between the studies and their bibliographic metadata (de Moya-Anegón et al., 2007; Donthu et al., 2021; Ellegaard & Wallin, 2015; Lo & Chai, 2012; Merigó & Yang, 2017). Ellegaard and Wallin (2015) note that bibliometric analysis and its methods are firmly established as a scientifically valid approach and a fundamental component of the methodology applied in research assessment. This approach introduces a quantitative dimension to the literature review, using accurate and precise metrics to assist the researcher in the literature review process. It enhances the subjective assessment of articles that may be considered eligible for review (Ellegaard & Wallin, 2015; Zupic & Čater, 2015).

As demonstrated by Hong and Pluye (2018), the development and use of literature reviews have been growing over the last 40 years, making them a major component of consolidated scientific work. With this rise, bibliometric analysis has also expanded in recent years, impacting researchers’ literature review and introducing new challenges to the review process. This includes assessing both the objective and subjective quality of the literature and its impact (Zupic & Čater, 2015).

The continuous growth of data, including published works, requires now more than ever a robust methodology for researchers to assess and evaluate the information and data they need from the vast amount available nowadays. As a result, bibliometric analysis and its methods are expected to help filter essential works for researchers (Ellegaard & Wallin, 2015; Zupic & Čater, 2015). In fact, bibliometric scrutiny is vital when analyzing substantial volumes of bibliographic data (Gaviria-Marin et al., 2018).

Consequently, we conducted the following analyses: i) Journal Analysis, ii) Authors Co-Authorship Examination, and iii) Keywords Co-Occurrences Investigation. While performing these analyses, we discussed the results and connected the bibliometric findings to the discussion of the review. The first analysis, i) Journal Analysis, involved summarizing and analyzing the metadata of the journals in which the publications appeared, focusing on characteristics such as Quartile ranking (if applicable), country, and research fields. We used data from the SCImago Journal and Country Rank (SJR) for this analysis. The second analysis, ii) Authors Co-Authorship Examination, explored interactions among authors within a research field to understand how scholars communicate and collaborate, and whether this collaboration was diverse, or isolated. Authors who collaborate amongst themselves form a network that Donthu et al. (2021) describe as “invisible colleges,” where authors focus on developing knowledge within a specific field of interest. This analysis helps the researcher understand the dynamics of author collaboration over different periods of time (Donthu et al., 2021).

Additionally, the Co-Authorship evaluation helps perceive the level of collaboration among authors on a given topic. It provides insight into the field's social structure and allows the researcher to understand whether authors collaborate outside their immediate group (i.e., the group of authors who appear in each other's publications). Often, authors engage with their group and publish together frequently, but they may not engage with authors outside of their group, which limits the inclusion of different perspectives and knowledge in their research (Donthu et al., 2021; Zupic & Čater, 2015). This analysis was conducted using VOSViewer to perform the necessary text mining (Van Eck & Waltman, 2011, 2014).

For the third analysis, iii) Keywords Co-Occurrences Analysis, we also used VOSViewer for clustering visualization. This analysis identifies which keywords meet the predefined criteria (Van Eck & Waltman, 2011, 2014). It compares keywords from articles published within a specified time frame to identify those that appear in at least two, or more articles, although these criteria may vary depending on the researcher's choice (Donthu et al., 2021; Gaviria-Marin et al., 2018).

Thus, this analysis enables the examination of the most frequently used keywords within the eligible articles and serves as a longitudinal method to comprehend and track the progression of a given field over time (Walstrom & Leonard, 2000). It also involves connecting keywords using the bibliographic metadata of the publications. However, a central challenge for the researcher is that keywords can appear in various forms and may have different meanings depending on the context (Zupic & Čater, 2015).

i) Journal Analysis

The aim of this analysis is to summarize and examine the journals associated with each eligible article, focusing on characteristics such as Quartile ranking (if applicable), country, and research fields. To obtain this information, we used the SCImago Journal and Country Rank (SJR) to retrieve the main characteristics of each journal. For articles not listed in the SJR, we used the Google Scholar search engine. As stated by Mañana-Rodríguez (2015), it is important to have a quantitative assessment of different approaches, including theoretical and practical ones. Roldan-Valadez et al. (2019) argue that researchers must understand the impact of a publication using different types of bibliometric indices. One widely used metric is the SJR index, which is based on data from Scopus and incorporates centrality concepts from social networks. This metric is openly available and contains journal- and country-specific scientific measures and indicators, and is available on its website: www.scimagojr.com (Ali & Bano, 2021; Roldan-Valadez et al., 2019).

Considering this, our literature review comprised 75 articles from 56 different journals. The three most represented countries were the United Kingdom, the United States of America, and the Netherlands, illustrated in Figs. 7 and 8.

The 75 articles reviewed come from a total of 56 journals. Of these, 42 articles (56 %) were retrieved from quartile-ranked journals, while the remaining articles have not yet been classified by quartile ranking. Note that journal characteristics can change over time. This information is presented in Table 3.

Journal details description.

Similarly, we categorized each eligible publication into four major areas, outlined in Table 4: data governance and quality, data assurance, artificial intelligence, and digital forensics.

Primary research areas of the literature review.

ii) Authors Co – authorship analysis

In this analysis, we utilized VOSviewer, which provided solid and robust text mining techniques for the researcher. One such technique involves a complete counting analysis of co-authorship, where the unit of analysis is the authors of each of the 75 eligible publications. To avoid restricting this analysis, we did not limit the maximum number of authors per publication, and a minor threshold was set at 2 articles per author. Consequently, the co-authorship analysis was applied to 126 authors of which five met the defined threshold, shown in Fig. 9.

Similarly, we conducted an additional iteration of this analysis, setting the minimum threshold to 1 while allowing an unlimited number of authors per publication and maintaining a minor threshold of 2. This iteration included a total of 126 authors, resulting in the aggregation of 71 distinct clusters, with the largest cluster containing five authors. The top five clusters, ranked by the number of authors per document, are presented in Fig. 10 and Table 5.

Co-authorship analysis - top 5 ranked by clusters of author.

| # Cluster | Reference | # Of Authors per Cluster | Link Strength | Links between authors in different clusters |

|---|---|---|---|---|

| 1 | (Janssen et al., 2020) | 5 | 4 | 0 |

| 2 | (Elyas et al., 2014) | 4 | 3 | 0 |

| 3 | (Borek et al., 2014) | 4 | 3 | 0 |

| 4 | (Mikalef et al., 2020) | 4 | 3 | 0 |

| 5 | (Petzold et al., 2020) | 4 | 3 | 0 |

By analyzing the data, we observed that, although there are a total of 71 distinct clusters, each containing one or more authors, none of these researchers are connected to others from different articles or clusters. This lack of connection may suggest a deficiency in collaboration between clusters, indicating that authors did not engage in collaborative work outside their respective clusters. Additionally, clusters containing more than one author may suggest that the authors within each cluster collaborate on the same publication. According to Kraljić et al. (2014), researchers’ key contributions should focus on both existing and future scientific knowledge and the sharing of this knowledge. Researchers should therefore aim to foster collaborative work and networks to integrate diverse perspectives and approaches.

iii) Keywords co-occurrences analysis

For this analysis, we used the VOSviewer tool to assess 349 keywords, setting the minimum occurrence threshold at 3. Consequently, 30 out of the 349 keywords met this threshold and were selected for analysis. VOSviewer grouped these 30 keywords into four distinct clusters, which are presented in Table 6 and Fig. 11: Cluster 1 (red) – data management; Cluster 2 (green) – computer crimes/forensic sciences; Cluster 3 (blue) – data quality; and Cluster 4 (yellow) – data governance.

Keywords co-occurrences ranked by the occurrences - descending.

Additionally, the top five keywords identified were data governance (17 occurrences, 30 total link strength), data management (15 occurrences, 32 total link strength), big data (9 occurrences, 23 total link strength), computer crimes (9 occurrences, 23 total link strength) and criminal investigation (9 occurrences, 23 total link strength). The analysis, which encompassed four clusters, included a total of 30 items, 117 links, and an overall link strength of 183, shown in Fig. 11.

The overlay visualization, which represents the Co-Occurrence analysis, includes an additional variable represented by the average year of the keywords’ publications. As shown in Fig. 12, the most interconnected terms in this network correspond to articles published between 2016 and 2019. Thus, within Cluster 1 (red) – data management – the average publication year of the keywords is between 2017 and 2018. In Cluster 2 (green) – computer crimes/forensic Sciences – the average year is between 2016 and 2017. For Cluster 3 (blue) – data quality – the time frame falls between 2017 and 2018. Finally, Cluster 4 (yellow) – data governance – stands out from the remaining groups with a more recent average publication year between 2018 and 2019.

Phase III - Results and discussionThis section discusses each Research Question (RQ) and details the results obtained to answer them. Each subsection corresponds to a specific Research Question, labeled RQ1 through RQ5.

Data governance – theoretical backgroundTheoretical backgroundIn response to Research Question 1 (RQ1), it is important to describe the theoretical context, foundations, and key concepts of the data governance field. According to the Data Management Association (DAMA), a significant reference in this area, data governance involves a combination of responsibilities, control environments, governance, and decision-making related to an organization's data assets (Al-Ruithe et al., 2019). This concept is distinct from data management, which DAMA characterizes as the process of defining data elements, acquiring, managing, and storing them within an organization, and overseeing how data flows through IT systems. Data management also includes the policies, procedures, and plans that support this workflow (Al-Ruithe et al., 2019).

Given this, we define data governance as the planning, management, and governance of data and related activities. According to Sifter (2017), data governance can be viewed as a combination of highly managed and structured collections of resources and assets, which are critical to an organization's business and decision-making processes (Sifter, 2017, p. 24). The main objective of data governance is to enhance a company's capability, effectiveness, and sustainability by supporting its decision-making processes with robust, high-quality data, leading to more precise and informed choices (Sifter, 2017).

Ragan and Strasser (2020) similarly view this field as one that enables organizations to leverage data to communicate their needs, while also aligning business strategy with available data. This alignment leads to better supported, more organized processes and more informed decisions.

Moreover, the success of a data governance program is closely tied to an organization's culture, as well as the dedication and engagement of its administration and human resources (Ragan & Strasser, 2020). Hoppszallern (2015) highlights the importance of clear governance in information technology, noting the possible negative effects of unclear governance on an organization and the value that can be created through effective implementation. Similarly, Sifter (2017) argues that strong data governance may address obstacles related to an organization's processes, people, and technology, enabling it to tackle data management problems and uphold a long-term commitment to integrity in data and asset governance. Meyers (2014) shares this perspective, recognizing data governance as the application and oversight of data management rules, processes, and formal procedures throughout an organization's operations.

Furthermore, the literature identifies data governance as one of the most crucial areas for firms to incorporate into their operations. Indeed, it can be seen as a critical asset for the success of their business strategy because data are seen as the future and a competitive edge that can differentiate successful businesses from those that fall short (Ragan & Strasser, 2020, p. 10). Rivett (2015) argues that data governance represents a significant gap that businesses need to address, especially as it becomes increasingly complex over time. However, organizations should remember that data governance involves more than just managing data. It also encompasses how data are collected, produced, utilized, controlled, and reported, as well as who is responsible for ensuring quality throughout the lifecycle (Janssen et al., 2020).

Moreover, Riggins and Klamm (2017) describe this field as an exercise in process and leadership focused on data-based decisions and related matters. It comprises a system of decisions and responsibilities defined by an agreed-upon model, which outlines how an organization can take action, what type of actions can be pursued, and when those actions should occur in relation to data and information. Dutta (2016) describes this field as the combination and definition of standards, processes, activities, and controls over enterprise data to ensure that the data are available, integrated, complete, usable, and secure within the organization. Organizations are striving to define and implement resolutions that manage the quality and master data, ensuring truthfulness, quality, and completeness—specifically non-transactional data, data at rest, while often disregarding transactional data, data in motion (Dutta, 2016).

As highlighted by Abraham et al. (2019), while data governance is increasingly recognized as being critical to the success of organizations, there is still no unanimous agreement within the scientific community regarding its scope. Current literature and other relevant sources often focus on specific aspects of the discipline, such as data protection, security, and the data lifecycle. Alternatively, some sources provide limited reviews that focus on theoretical approaches without offering frameworks and tools to improve and strengthen data governance and quality. Therefore, our approach to this topic ensures that this discipline is analyzed in a way that integrates both theoretical and practical concepts, tools, and frameworks, aiming to improve the overall effectiveness and efficiency of this field and its impact on organizations.

Archaeology and relevant conceptsResearch Question 1 (RQ1) focuses on the recognition that, for a given data governance framework and strategy, there are several concepts that organizations need to understand and embed in their operations. According to Gupta et al. (2020), a data governance framework should encompass strategies, knowledge, decision-making processes related to data management, relevant regulations, policies, data proprietorship, and data protection. One important concept is the notion of data lake, which is considered a centralized repository that allows an organization to collect and distribute large amounts of data. In a data lake, data can be both structured and unstructured, often with limited contextual information, such as the data's purpose, its timeliness, its owner, and how, or whether it has been used (Rivett, 2015).

Another vital concept corresponds to data lineage, which represents the traceability of a given data within an organization. Data lineage allows organizations to visualize and understand the impact of data throughout the organization's system and databases, as well as to trace data back to its primary source, including the stage where it is stored (Dutta, 2016). The literature highlights the importance of embedding data lineage in an organization's end-to-end processes to ensure accurate and complete traceability throughout the data lifecycle, including the systems and processes the data pass through (Dutta, 2016).

Bordey (2018) emphasizes the importance of understanding data lineage and how a firm can control and monitor the data life cycle. To assess a firm's data quality capability, Bordey proposes a set of questions that any firm should consider: i) What activities are being performed, and who is responsible for executing and monitoring them?; ii) What are the conditions and requirements for the planned and performed activities?; iii) Where can the necessary data be retrieved, and what exact phases are required to manage and use that data?; iv) What will be the result of this activity, and for whom is it being produced?; and v) What are the next steps after the result is delivered? (Bordey, 2018).

Moreover, to build and develop a robust and effective data governance strategy and framework, it is essential to establish foundational pillars that should be employed to provide a solid baseline for this field. We recognize that organizations remain vulnerable to data disasters and are hesitant to invest in prevention measures, such as robust and secure infrastructure designed to guard against potential system outages and data disasters, namely through the loss of data (Johnston, 2016).

Johnston (2016) identifies three fundamental topics for managing and maintaining a solid data governance strategy: data visibility, federated data for better governance, and data security. The author emphasizes that defining the baseline for a data governance strategy and framework is crucial. According to this author, the first pillar should be data visibility, which encompasses the activity an organization should go through to understand “what you have, where it is, and how to recover it, when necessary” (Johnston, 2016, p. 52). In addition, Johnston describes the principles organizations should comply with to secure their data: i) deleting low-value data that that has little to no business value, such as outdated, or duplicated data, or data that has exceeded its retention period; ii) organizing valuable data by storing it in controlled repositories using information technology tools; iii) enforcing rigorous data security procedures and mechanisms to ensure data privacy and the protection of financial, personal, and other types of data, while also ensuring it is secure and properly backed-up; and finally, iv) maintaining and controlling access, user privileges and their management (Clarke, 2016). Similarly, Mcintyre (2016) suggests that every organization should implement data redundancy measures to ensure an organization is prepared for a disaster and recovery. This includes setting Recovery Point Objectives (RPOs), which determine how much updated and current data the firm is willing to lose, and Recovery Time Objectives (RTOs), which define how long the firm can go without access to its data assets.

Similarly, Rivett (2015) describes that context, or cataloging of data, is a layer that can answer critical questions and provides the organization with greater visibility over their data and information. This visibility allows organizations to perceive their data, determine their usage, identify ownership roles, and know who to contact in case of data errors. According to Rivett, a business can only define and implement an effective data governance strategy—one that includes appropriate data management procedures to ensure proper governance and compliance—if they have complete visibility of the data within the organization's infrastructure.

In addition to data visibility, Johnston (2016) emphasizes the need for organizations to focus on federated data to improve organizational governance. The process of federating data involves retrieving data from different databases or sources and standardizing it into a single, unified source for front-end analysis and visualization. This process allows companies to analyze and access data from a single source without needing to modify, move, or recover data across different infrastructures (Johnston, 2016). Johnston also identifies data security as the third pillar, which includes protecting and securing data, defining extensive policies, and applying encryption and authentication mechanisms to ensure that only authorized individuals can access the data (Johnston, 2016). Similarly, Rivett (2015) examines the importance of context and governance in implementing a data governance strategy. This author stresses that organizations need to be able to address questions related to data responsibilities, the up-to-date status of data, data meaning and usefulness, and the technologies used for management and storage.

Similarly, Sifter (2017) argues that for a data governance framework to achieve excellence, it must be supported by five essential attributes. The first attribute is related to data accuracy, ensuring that all data flows are managed, controlled, audited, and monitored across the organization's system landscape. Sifter also highlights the importance of data consistency and availability, which are essential for enabling business stakeholders to access data whenever necessary. The other three key attributes, according to Sifter (2017), include: i) the ability of organizations to maintain a single repository for essential data storage, ii) a commitment to maintaining data quality and availability across all processes, and iii) effective planning activities that address data requests by business stakeholders with suitable swiftness and repeatedly whenever necessary. Additionally, it is essential to ensure that data governance aligns with the organization's Master Data Management (MDM). MDM encompasses a set of practices and techniques whose primary goal is to increase and sustain data quality and usage throughout the organization's environment and processes (Vilminko-Heikkinen & Pekkola, 2019).

Benefits and added valueIn response to Research Question 2 (RQ2), and because organizations increasingly focus on fostering robust data governance practices to support their personnel, business processes activities, and technology infrastructure, they are likely to achieve positive outcomes that create value for the overall business and its stakeholders. Sifter (2017) explains that data governance can enhance an organization's human capital by providing more trained, equipped, and knowledgeable personnel, particularly in roles that require ownership and accountability in governance and data management. This approach is not about restricting access to data for certain privileged users, but rather about enabling all users within the organization to access and utilize data governed by appropriate controls (Riggins & Klamm, 2017).

Similarly, this author suggests that an organization's processes will also improve because they are more controlled and monitored for consistency and efficiency due to the strong support of established policies, procedures, and practices. As a result, data governance provides organizations with a set of tools and directives aimed at ensuring that the correct data are accessed and analyzed by the appropriate people whenever and wherever decisions need to be made (Riggins & Klamm, 2017). Additionally, an organization's technology infrastructure will be strengthened as companies strive to optimize and update their systems across the entire environment, rather than just within individual business units (Sifter, 2017). In fact, with better use and understanding of the data they manage, companies are more likely to make well-supported decisions, thereby unlocking greater potential from their data (Burniston, 2015). Consequently, accountability and reliance on data are enhanced when organizations take responsibility for their data and systems, leading to better-designed and controlled decision-making processes (Sánchez & Sarría‐Santamera, 2019). Therefore, robust data management and governance can significantly improve organizational transparency (Cerrillo-Martínez & Casadesús-de-Mingo, 2021)

Moreover, Riggins and Klamm (2017) highlighted several benefits that organizations can gain from data governance including: i) the creation of a mutual, company-wide approach to data processes, which helps generate a single source of truth for the organization's data; ii) the definition and recognition of data controls and policies, which when aligned with organizational needs, foster data quality, accessibility, and security; iii) the development of a central repository for data management, aimed at defining a common terminology and taxonomy within the organization; iv) the establishment of real-time reports and standardized reporting techniques; and v) the enhancement of decision-making processes through the use of trusted data.

Furthermore, the importance of addressing data governance and quality is becoming increasingly prominent in both organizational practices and among regulatory bodies. Shabani (2021) notes that the European Union recently announced a proposal for a new Data Governance Act aimed at facilitating data sharing across various fields while establishing a bridge with the GDPR (General Data Protection Regulation). According to Shabani, robust data governance mechanisms and secure data-sharing practices should be adopted to guarantee compliance with rules and directives from regulatory bodies, such as the GDPR (Shabani, 2021). The author argues that providing organizations with a strong regulatory framework for data governance, which addresses all core elements of this field, can empower both organizations and individuals to better control and enhance their data usage and sharing (Shabani, 2021). Similarly, Janssen et al. (2020) propose that organizations should adopt a framework to regulate and control data sharing, stating that organizations must include requirements and standards for data sharing, formalize agreements, contracts, and service level agreements (SLAs), define authorization levels and approval workflows, and implement audit mechanisms to ensure compliance with relevant legislation and agreements. Kopp (2020) adds that when organizations recur to outsourcing activities, they must clearly describe and characterize the activities being performed as outlined in the formal contract.

Data governance frameworksAreas of applicationConcerning Research Question 2 (RQ2), it is important to recognize that a given data governance strategy and framework can be applied across a wide range of areas and situations. These applications can serve as benchmarks for developing a solid and robust data governance program (Hassan & Chindamo, 2017). For instance, Perrin (2020) describes the recent impact of data governance in the advertising industry, where data fuel decisions made by marketers and brand managers to guide decision-making processes and create brand experiences for consumers. Perrin highlights the significance of current data management and usage regulations, such as the CCPA (California Consumer Privacy Act) and the GDPR (General Data Protection Regulation). Given that advertising relies on vast amounts of data for decision-making and brand promotion, organizations should consider benchmarking their data governance strategies against those in other industries and areas, whether similar, or different from their own. This approach can help them identify key learning points and best practices (Dencik et al., 2019).

Similarly, Perrin (2020) stated that data governance in advertising must encompass data efficacy, transparency, and consumer control. While data can be considered a new currency, increasingly stringent regulations are imposing stricter restrictions on data management and usage. Therefore, organizations must implement a data governance framework and strategy that aligns with the applicable regulations. This includes promoting internal processes to understand the different types of data within the organization, how they are collected, and how they are audited for efficacy and compliance. In the advertising industry, it is crucial for companies to integrate data governance into their operations, because failing to comply with regulations, or jeopardizing consumer trust could make it “nearly impossible to rebuild” that trust (Perrin, 2020, p. 35).

Sifter (2017) describes the importance of data governance in financial institutions, such as insurance companies, financial services, and banks. According to the author, a successful data governance program must address people, processes, and technologies. Sifter notes that these organizations often struggle with managing data quality and access to both data and technology. The author views data governance as a crucial tool for preventing data corruption and issues, maintaining long-term data integrity, and producing vital business data and assets that support operations and business management (Sifter, 2017).

Existing strategies, tools and frameworksRegarding RQ2, Sifter (2017) argues that for a data governance initiative to achieve excellence, it requires support from five key components: foundational elements, data portfolio management, implementation management, engineering and architecture, and operations and support. For the foundational elements, Sifter states that it is important for an organization to establish a charter and vision that can be controlled and assessed over time. This should include clearly defined ownership roles and responsibilities regarding data governance.

For the data portfolio management component, Sifter (2017) argues that organizations must be capable of designing, building, defining, and maintaining an up-to-date data inventory. This inventory should provide a comprehensive overview of how data flows within the organization's technologies, including how data are secured, preserved, and managed. For instance, a data inventory, also known as a data dictionary, or glossary, acts as a catalog of all the data used within the organization. It serves as a reference point for data and assigns data ownership. It resembles a data repository, with detailed metadata, including format, type, technical specifications (e.g., character limits, possible blanks, among others), and accountability and quality criteria and metrics (Clarke, 2019). The author highlights the importance of defining and regularly updating a data inventory to improve an organization's ability to maintain a centralized repository of data definitions, structures, and relationships, which is essential for consistency and clarity across the organization (Clarke, 2019).

Regarding implementation management, Sifter (2017) considers it essential to determine and define methods related to project, change, and access management within an organization. These methods should also facilitate the optimized management of human capital and training programs. Additionally, Sifter (2017) argues that engineering and architecture should support a data governance framework by establishing policies, procedures, and mechanisms across various aspects of the organization. This includes software management, quality assurance and auditing, change and security management, and the management of the technology landscape that characterizes the organization's operations and activities.

Lastly, Sifter (2017) emphasizes the need for organizations to have robust operations and support mechanisms, allowing their personnel to have clear communication channels whenever there is a need for assistance, or in the case of an incident. Additionally, Dutta (2016) proposes a framework to ensure the quality of data in motion, or transactional data. We believe that each of the steps outlined in this framework can be adapted to enhance an organization's data governance framework. Dutta presents a different approach to data governance, which is illustrated in Fig. 13. This approach is structured around the design of a conceptual framework consisting of six interrelated dimensions:

Framework for data quality of data in motion. Adapted from Dutta (2016).

In Stage 1, Discover, the organization must identify and acknowledge all critical information and how it flows within the technological and operational architecture. This involves defining and developing metrics and their foundations. The organization needs to inventory all data across existing systems, databases, and data warehouses, understanding, registering, and documenting data lineage, including sources from both internal systems and external systems and providers (Dutta, 2016). In this stage, it is also important for the organization to establish metric standards and implement techniques to improve data quality through collaboration with data owners.

In Stage 2, the organization should focus on identifying and defining the risks associated with data quality, including data domains and both data at rest and in motion. This involves formalizing issues related to data quality features, as well as recognizing the risks, potential gaps, and pain points that characterize the organization's current environment (Dutta, 2016). By identifying these risks, the organization will be in a position to develop a clear approach to evaluate, mitigate, respond to, and treat them properly.

Data transparency, reliability, and usability can be considered part of the data observability feature in a data governance program. This concept involves all the mechanisms and processes that allow employees to effectively monitor, assess, and produce insights from their data (Schmuck, 2024). According to Schmuck, data observability serves as a solution within a data governance program and encompasses characteristics such as data modeling and design, data quality and its architecture, metadata registry, data warehousing, and business intelligence, data integration and interoperability, and document management (Schmuck, 2024).

In Stage 3, Design, organizations must develop their information analysis techniques and establish processes for managing exceptions, errors, and incidents. To improve these processes, organizations should use automated techniques rather than traditional approaches like sample sizing. They should also implement controls designed to monitor real-time data and processes, such as the success of batch routines (Dutta, 2016). By designing these approaches, organizations should prepare for Stage 4, which involves deploying a set of actions and activities based on the prioritized risks, namely those that are most important and impactful to the environment. The implementation of these actions must involve various components of the organization, including technology, people, and processes, to ensure the effective deployment of these initiatives.

The last Stage, Stage 4, corresponds to Monitoring, where the organization's primary goal is to observe and review the framework that was applied to its context. This includes evaluating data quality metrics, incident management process, risk controls, and any activities that may influence and jeopardize the success of the defined framework (Dutta, 2016).