Although behavioral interventions are powerful tools for parents and teachers, they are unlikely to result in lasting change if the intervention agents find them unacceptable. After developing effective behavior intervention plans for classroom use, we compared social validity of those interventions using three measures: concurrent-chains selections from the intervention consumer (students), verbal report of the intervention agent (teachers), and maintenance of the intervention over time. All three measures of social validity identified an intervention that was acceptable to the intervention consumer and intervention delivery agent. These findings are discussed in terms of applied implications for assessing social validity.


Social validity, or the extent to which consumers of our science and practice believe that we are making valuable contributions, has been measured in behavior-analytic work since the 1970s (Kazdin, 1977; Wolf, 1978). Despite this long history, social validity remains an understudied area of behavior analysis, in part because of its relatively subjective measurement. Most systematic measures of social validity consist of rating scales (e.g., the Intervention Rating Profile; Witt & Elliot, 1985) and questionnaires (e.g., Gresham & Lopez, 1996). These scales directly measure consumers’ verbal behavior only, which may be problematic if the consumers are not accurate reporters. Additionally, measuring social validity through verbal report alone may not predict the extent to which behavior-analytic procedures are acceptable solutions to social problems.

To address these potential limitations, several authors have argued for the use of direct measurement of social validity (Hanley, 2010; Kennedy, 2002). This direct measurement can take at least two forms. One direct measure of social validity is the extent to which consumers maintain behavior-analytic interventions over time (Kennedy, 2002). Unlike measures of verbal report, examining maintenance as a direct measure of social validity may help us to identify common features of procedures that are likely to be adopted and persist in a specific environment.

Another direct measure of social validity is the extent to which consumers choose our interventions. Measurements of choice have been used to allow direct consumers (those personally experiencing the intervention), particularly consumers with limited or no verbal skills, to select which procedure they prefer (e.g., Hanley, Piazza, Fisher, Contrucci, & Maglieri, 1997). Consumer preference for interventions has typically been assessed using a modified concurrent-chains procedure. During the initial link of the procedure, consumers select between stimuli that were previously associated with each intervention option. The consumer then experiences the selected intervention during the terminal link of the chain. This kind of modified concurrent-chains procedure effectively evaluated consumer preference for different reinforcement schedules (e.g., Hanley et al., 1997), teaching procedures (e.g., Slocum & Tiger, 2011), and other intervention components.

There are several possible benefits to choice-based measures of social validity with direct consumers. First, such measures may allow consumers to select an option that best meets their momentary needs, even if those needs change over time. Choice procedures may allow consumers to select the intervention components that are most valuable to them in the moment, thus accounting for shifts in preference or motivating operations. Second, children may prefer situations in which they are permitted to choose over situations that are adult-directed (Fenerty & Tiger, 2010; Schmidt, Hanley, & Layer, 2009; Tiger, Hanley, & Hernandez, 2006; Tiger, Toussaint, & Roath, 2010). Allowing consumers to choose the interventions they experience may dignify the treatment process by allowing input from the client (Bannerman, Sheldon, Sherman, & Harchik, 1990).

There may be benefits to evaluating social validity of interventions with the behavior-change agents (indirect consumers) in addition to the direct consumers who experience the intervention. Allowing indirect consumers to participate in the social validity process provides those individuals with a way to select against procedures that they do not find acceptable (Hanley, 2010). Establishing social validity with indirect consumers is important because treatment implementation may be unlikely to continue if those responsible for implementing the intervention do not also find the procedures acceptable.

To date, few studies have evaluated the social validity of interventions with both direct and indirect consumers, and studies have not evaluated the use of consumer choice and maintenance data to assess the validity of Behavior Intervention Plans (BIPs). Additionally, direct measurement of social validity has not been extended to children with ADHD and their teachers. Yet, improving the acceptability or validity of intervention plans may improve the extent to which teachers implement those plans with fidelity (Mautone, DuPaul, Jitendra, Tresco, Vile Junod, & Volpe, 2009), thereby improving student outcomes (St. Peter Pipkin, Vollmer, & Sloman, 2010). To address this gap in the literature, we evaluated the social validity of two multicomponent BIPs using three measures: student choice for procedures, teachers’ verbal reports, and maintenance of intervention over time.

Method

Participants and Setting

Three students who attended an alternative education program and two classroom teachers participated in this study. Zane and Kelvin were diagnosed with Attention Deficit Hyperactivity Disorder (ADHD) and were 6 and 7 years old, respectively. Harmony was an 8-year-old girl diagnosed with mild intellectual disability, ADHD, Post-Traumatic Stress Disorder (PTSD), and phonological disorder. All three participants used complex sentences to communicate, and had an extensive history of engaging in chronic and severe problem behavior that was resistant to intervention. The two classroom teachers, Jamie and Stacy, each had a Master’s degree in Elementary Education with certifications in both general and special education and were Board Certified Behavior Analysts. Jamie had been teaching for 13 years and Stacy had been teaching for seven years. Both teachers had been teaching in the alternative education program for approximately two years.

Prior to the start of this study, all three students had participated in an evaluation comparing the efficacy of two different BIPs on problem behavior (i.e., aggression, disruption, inappropriate language, and noncompliance). Both plans were multicomponent interventions that addressed multiple functions of problem behavior, and both BIPs produced similar reductions in problem behavior. Table 1 shows core components of each BIP.

Table 1. General Components of Each Behavior Intervention Plan

| BIP 1 | BIP 2 |
|---|---|
| Materials: Timer, student-specific academic materials, dry erase marker, point card, bin with 4 high preference toys, bin with 4 moderate-to-low preference toys, prize box with small trinkets (e.g., stickers, sucker, eraser, pencils) | Materials: Timer, student-specific academic materials, picture of the student’s face with a magnetic back, a magnetic dry erase board split in half with the word “work” written on one side of the board and the word “break” written on the other side, dry erase marker, I-Pad, break area with preferred toys and activities |

All sessions were conducted in the students’ classroom within the alternative education program. During each session, up to two teachers and eight students were present in the classroom. Sessions lasted the entire school day. The teachers were responsible for implementing the BIPs throughout the study.

Procedures

Student Choice

We used a concurrent-chains procedure (Hanley et al., 1997) to evaluate each student’s relative preference for the two BIPs. The teachers were trained to implement each of the plans before the start of the study. Both BIPs were associated with specific materials and all three students were familiar with these materials. We selected one item from each of the BIPs to represent that BIP during choice trials. We used a point card for BIP 1 and a picture card for BIP 2. We selected these items because they were relatively salient stimuli associated with the plans, were approximately the same size and shape, and the teachers thought that they were unlikely to be differentially preferred independent of the BIP with which they were associated.

Prior to evaluating students’ preference for the different BIPs, the teacher conducted two forced-choice sessions (one session for each BIP) to expose students to the different BIPs associated with selecting each card, and to ensure that students had recent experience with each of the BIPs. During forced-choice sessions, the teacher placed the two cards (the point card and picture card) in front of the student. The teacher pointed to each card and read a script (available from the first author) that briefly described the main components of each BIP. Next, the teacher randomly selected one of the BIPs and instructed the student to hand her the card associated with that intervention. The teacher then implemented that BIP for the rest of the school day (approximately 5 hrs). The next day this procedure was repeated with the other BIP.

After the two forced-exposure days, students were allowed to select the BIP that would be implemented for the day. During student-choice sessions, the teacher presented the two cards to the student, read the script describing the main components of each BIP, and then instructed the student to choose a card. The student selected a BIP by handing the associated card to the teacher. Once the student selected a BIP, the teacher implemented that BIP for the remainder of the school day. If the student had attempted to select both cards, the teacher would have re-presented the cards and asked the student to select only one. However, this never occurred.

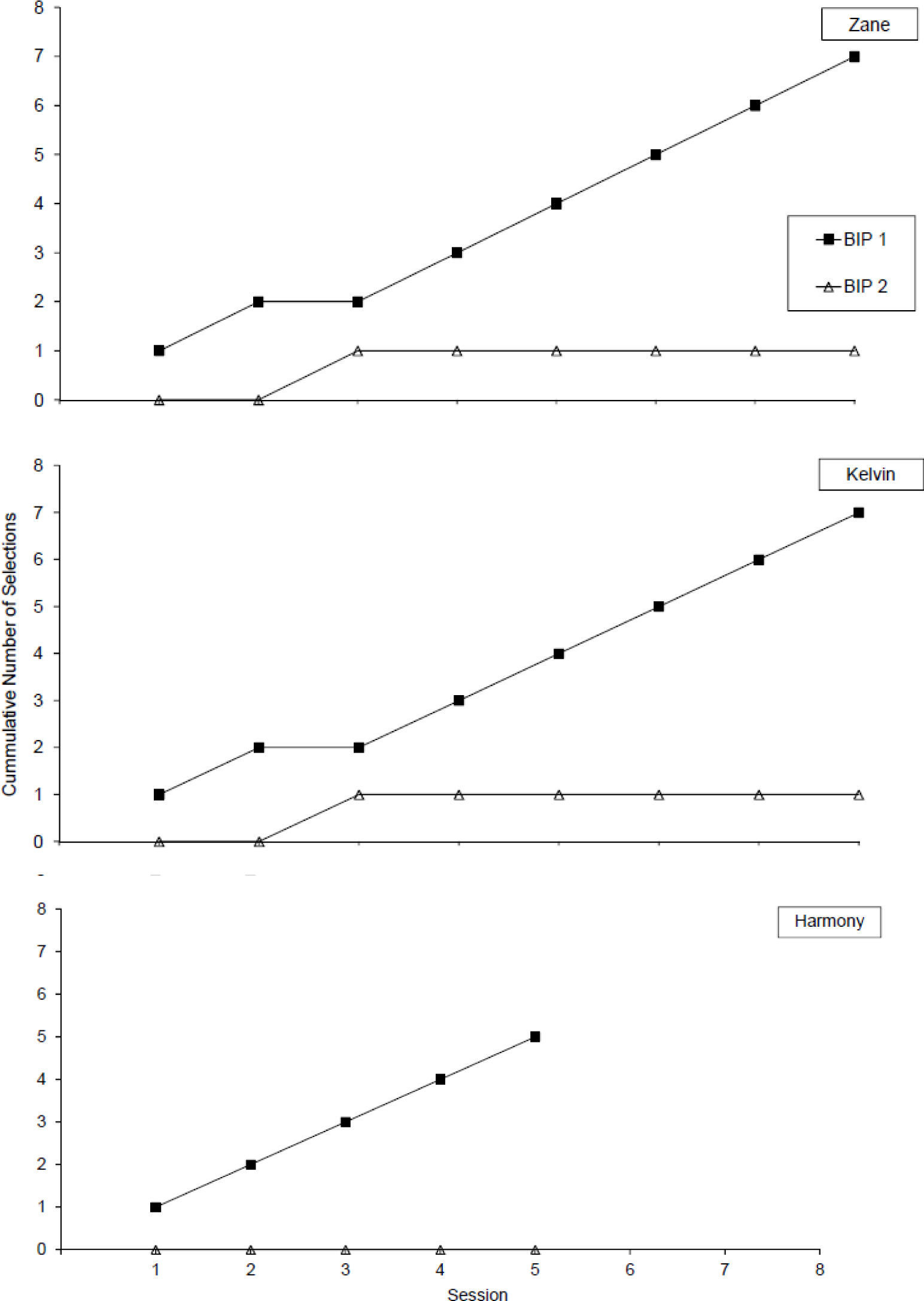

During each session, the teachers collected data on students’ BIP choices, defined as selecting the card associated with a specific BIP and handing it to the teacher. We calculated the cumulative number of selections for each BIP by adding the total number of selections across sessions. Student-choice sessions continued until the student selected the same BIP across five consecutive school days. After the fifth consecutive selection of the same BIP, the teachers adopted that BIP as part of the student’s Individualized Education Plan.
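The cumulative tally and the five-consecutive-selections criterion described above can be sketched in a few lines of Python. This is an illustration only: the function names and the choice sequence are hypothetical and are not the study's data.

```python
from itertools import accumulate


def cumulative_selections(choices, bip):
    """Running count of sessions in which `bip` was selected."""
    return list(accumulate(1 if choice == bip else 0 for choice in choices))


def sessions_to_criterion(choices, run_length=5):
    """Return (session number, BIP) at which the same BIP has been chosen on
    `run_length` consecutive sessions, or None if the criterion is never met."""
    streak = 0
    previous = None
    for session, choice in enumerate(choices, start=1):
        streak = streak + 1 if choice == previous else 1
        previous = choice
        if streak >= run_length:
            return session, choice
    return None


# Hypothetical choice sequence (NOT the study's data), one entry per school day.
choices = ["BIP 1", "BIP 1", "BIP 2", "BIP 1", "BIP 1", "BIP 1", "BIP 1", "BIP 1"]

bip1_cumulative = cumulative_selections(choices, "BIP 1")
bip2_cumulative = cumulative_selections(choices, "BIP 2")
criterion = sessions_to_criterion(choices)
print(bip1_cumulative, bip2_cumulative, criterion)
```

In this made-up sequence, the single BIP 2 selection resets the streak, so the criterion is met only after five further consecutive BIP 1 selections.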

We collected treatment integrity data on the teachers’ correct implementation of BIPs as a secondary measure. Treatment integrity data were collected during an average of 23% of the student-choice sessions across participants. Each observation was divided into six 10-min intervals. At the end of each interval, we scored the implementation of each component of a BIP as either correct or incorrect. We calculated treatment integrity by taking the number of BIP components implemented correctly and dividing it by the total number of components implemented correctly plus the number of components implemented incorrectly, and multiplying by 100.
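As a worked illustration of the treatment integrity formula, the sketch below pools component scores across the six intervals of one observation. The function name and the counts are hypothetical; only the formula (correct divided by correct plus incorrect, multiplied by 100) comes from the description above.

```python
def treatment_integrity(correct, incorrect):
    """Percentage of scored BIP components that were implemented correctly."""
    total = correct + incorrect
    if total == 0:
        raise ValueError("no components were scored")
    return 100 * correct / total


# Hypothetical observation: (correct, incorrect) component counts for each of
# six 10-min intervals. These numbers are illustrative, not study data.
intervals = [(4, 0), (3, 1), (4, 0), (4, 0), (3, 1), (4, 0)]
total_correct = sum(c for c, _ in intervals)
total_incorrect = sum(i for _, i in intervals)

integrity = treatment_integrity(total_correct, total_incorrect)
print(f"{integrity:.1f}%")
```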

Teacher Report

We assessed the extent to which teachers found both the choice procedure and the child-selected BIP to be acceptable. Teacher acceptability was measured immediately after the student-choice phase concluded. Each teacher reported on the child or children with whom she worked most often. Jamie reported on the extent to which she found the choice procedure and BIP acceptable for Harmony. Stacy reported on the acceptability of the choice procedure and BIP for Zane and Kelvin. Teachers were provided one week to complete the social validity measures, and were asked to complete the measures independently of each other.

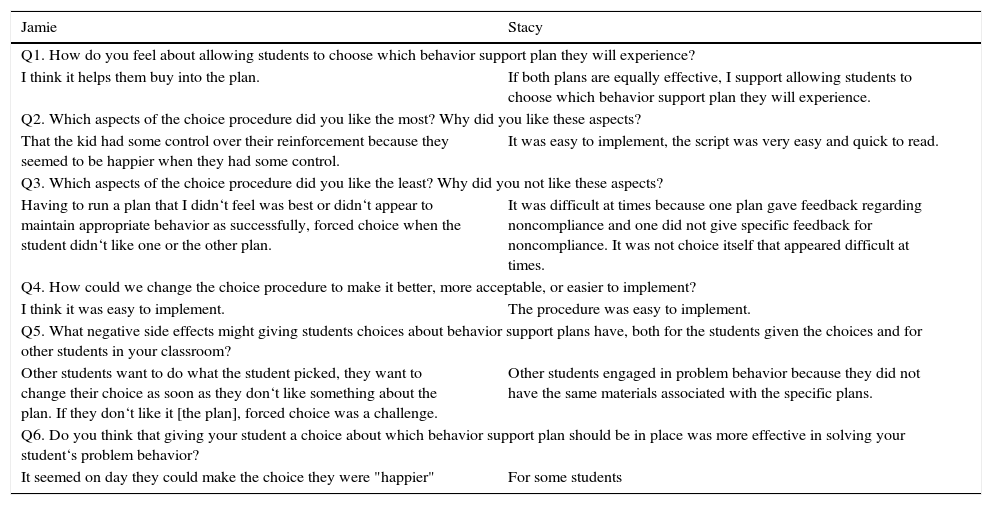

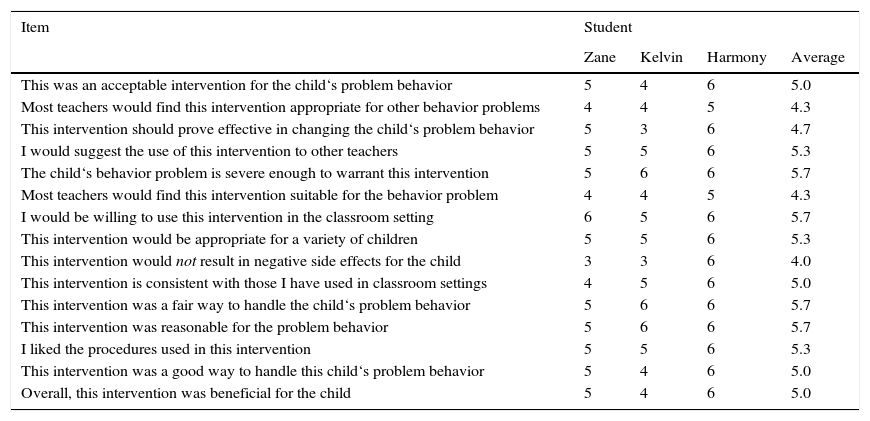

Each teacher was asked to complete two measures. The first measure we used was an open-ended questionnaire based on the one described by Gresham and Lopez (1996) to determine the acceptability of the choice procedures (see Table 2). The questionnaire asked teachers how they felt about allowing children to choose a BIP, the aspects of the procedure they liked the most, the aspects of the procedure they liked the least, how the procedure could be made better or easier, the negative side effects that children might experience, and the efficacy of the choice procedure for reducing problem behavior. The second measure was a modified version of the Intervention Rating Profile-15 (IRP-15; Martens, Witt, Elliot, & Darveaux, 1985), which we used to determine how acceptable the teachers found the child-selected BIP (see Table 3). Teachers rated the child-selected BIP in 15 areas, using a 1 to 6 Likert scale, with 1 indicating “strongly disagree” and 6 indicating “strongly agree.”

Table 2. Teacher Responses to Open-Ended Questions about Validity of Choice Procedure

| Jamie | Stacy |
|---|---|
| Q1. How do you feel about allowing students to choose which behavior support plan they will experience? | |
| I think it helps them buy into the plan. | If both plans are equally effective, I support allowing students to choose which behavior support plan they will experience. |
| Q2. Which aspects of the choice procedure did you like the most? Why did you like these aspects? | |
| That the kid had some control over their reinforcement because they seemed to be happier when they had some control. | It was easy to implement, the script was very easy and quick to read. |
| Q3. Which aspects of the choice procedure did you like the least? Why did you not like these aspects? | |
| Having to run a plan that I didn’t feel was best or didn’t appear to maintain appropriate behavior as successfully, forced choice when the student didn’t like one or the other plan. | It was difficult at times because one plan gave feedback regarding noncompliance and one did not give specific feedback for noncompliance. It was not choice itself that appeared difficult at times. |
| Q4. How could we change the choice procedure to make it better, more acceptable, or easier to implement? | |
| I think it was easy to implement. | The procedure was easy to implement. |
| Q5. What negative side effects might giving students choices about behavior support plans have, both for the students given the choices and for other students in your classroom? | |
| Other students want to do what the student picked, they want to change their choice as soon as they don’t like something about the plan. If they don’t like it [the plan], forced choice was a challenge. | Other students engaged in problem behavior because they did not have the same materials associated with the specific plans. |
| Q6. Do you think that giving your student a choice about which behavior support plan should be in place was more effective in solving your student’s problem behavior? | |
| It seemed on day they could make the choice they were "happier" | For some students |

Table 3. Teacher Responses to the Intervention Rating Profile-15 Regarding the Selected Intervention

| Item | Zane | Kelvin | Harmony | Average |
|---|---|---|---|---|
| This was an acceptable intervention for the child’s problem behavior | 5 | 4 | 6 | 5.0 |
| Most teachers would find this intervention appropriate for other behavior problems | 4 | 4 | 5 | 4.3 |
| This intervention should prove effective in changing the child’s problem behavior | 5 | 3 | 6 | 4.7 |
| I would suggest the use of this intervention to other teachers | 5 | 5 | 6 | 5.3 |
| The child’s behavior problem is severe enough to warrant this intervention | 5 | 6 | 6 | 5.7 |
| Most teachers would find this intervention suitable for the behavior problem | 4 | 4 | 5 | 4.3 |
| I would be willing to use this intervention in the classroom setting | 6 | 5 | 6 | 5.7 |
| This intervention would be appropriate for a variety of children | 5 | 5 | 6 | 5.3 |
| This intervention would not result in negative side effects for the child | 3 | 3 | 6 | 4.0 |
| This intervention is consistent with those I have used in classroom settings | 4 | 5 | 6 | 5.0 |
| This intervention was a fair way to handle the child’s problem behavior | 5 | 6 | 6 | 5.7 |
| This intervention was reasonable for the problem behavior | 5 | 6 | 6 | 5.7 |
| I liked the procedures used in this intervention | 5 | 5 | 6 | 5.3 |
| This intervention was a good way to handle this child’s problem behavior | 5 | 4 | 6 | 5.0 |
| Overall, this intervention was beneficial for the child | 5 | 4 | 6 | 5.0 |

One month following the completion of the student-choice sessions, we conducted a maintenance observation in the classroom. During this observation, we collected treatment integrity data on the teachers’ implementation of the BIP that the student chose most often during the choice sessions. The purpose of this observation was to evaluate the extent to which teachers continued to (a) implement the BIP selected by students during the choice sessions, and (b) implement the components of the BIP accurately.

Interobserver Agreement

Teachers collected data on the student’s selection of an intervention by writing the selection on a data sheet provided to them for that purpose. A secondary observer (one of the study authors) independently scored BIP selections during an average of 23% of the choice sessions across students. We compared the primary and secondary observers’ data on a session-by-session basis and calculated IOA for students’ BIP selections by dividing the number of sessions with an agreement by the total number of sessions and multiplying by 100. We scored an agreement if both observers scored the same BIP selection during a session, and a disagreement if the observers scored different BIP selections for a given session. The IOA scores on BIP selections were 100% for all students.
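The session-by-session IOA calculation can be sketched as follows. The function name and the observer records are hypothetical and serve only to illustrate the formula (sessions with agreement divided by total sessions, multiplied by 100).

```python
def session_ioa(primary, secondary):
    """Session-by-session interobserver agreement, as a percentage.

    `primary` and `secondary` hold each observer's recorded BIP selection
    for the same sessions, in the same order.
    """
    if len(primary) != len(secondary):
        raise ValueError("observers must score the same sessions")
    if not primary:
        raise ValueError("no sessions were scored")
    agreements = sum(p == s for p, s in zip(primary, secondary))
    return 100 * agreements / len(primary)


# Hypothetical records for three doubly scored sessions (not study data).
teacher_record = ["BIP 1", "BIP 2", "BIP 1"]
observer_record = ["BIP 1", "BIP 2", "BIP 1"]

ioa = session_ioa(teacher_record, observer_record)
print(f"IOA = {ioa:.0f}%")
```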

Two researchers independently scored the IRP-15 measures. The researchers agreed on each teacher rating provided on the IRP-15 for each student (IOA=100%), and ensured that IRP-15 and questionnaire results were transcribed accurately.

Results

The results of student choices are shown in Figure 1. All students showed a strong preference for one of the BIPs (BIP 1). Data for Zane are shown in the top graph. Zane selected BIP 2 during only the third choice period. Kelvin’s data are shown in the second graph. Like Zane, Kelvin selected BIP 2 during the third session; notably, Zane’s third session and Kelvin’s third session were not conducted on the same day. Harmony’s data are shown in the bottom graph. Harmony always selected BIP 1.

The results of the teachers’ verbal reports are summarized in Tables 2 and 3. Table 2 shows the teachers’ responses to the six open-ended questions that assessed their acceptability of the student-choice procedure. The teachers reported both positive and negative aspects of the choice procedure. Some of the positive aspects of the choice procedure included ease of implementation and that the students seemed to be happier because the choice procedure gave them some control over reinforcement. Some negative aspects of the choice procedure identified by the teachers included problem behavior that occurred when students could not select the BIP. This problem behavior was reported to occur both for the student participants (e.g., on forced-choice days) as well as other students in the classroom who were not participating in the evaluation. In general, both teachers seemed to find the choice procedure acceptable.

Table 3 summarizes the teachers’ ratings of treatment acceptability and perceived effectiveness of BIP 1 across all three children. In general, the teachers’ ratings of the BIP were positive. The mean rating across all questions and all students was 5.1 (range, 3.0 to 6.0). Across all three students, the teachers slightly agreed to strongly agreed that the BIP was an acceptable intervention for the child’s problem behavior (M=5.0). The teachers also agreed to strongly agreed that the intervention would be appropriate for a variety of children and that they liked the procedures used in the BIP (M=5.3). Finally, the teachers slightly agreed to strongly agreed that the overall BIP was beneficial for the child (M=5.0).
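The summary statistics above can be reproduced from the individual IRP-15 ratings. In the sketch below, the ratings are copied from Table 3 in item order; the variable names are ours and carry no meaning beyond this illustration.

```python
from statistics import mean

# IRP-15 ratings from Table 3, listed in item order (items 1 through 15).
ratings = {
    "Zane":    [5, 4, 5, 5, 5, 4, 6, 5, 3, 4, 5, 5, 5, 5, 5],
    "Kelvin":  [4, 4, 3, 5, 6, 4, 5, 5, 3, 5, 6, 6, 5, 4, 4],
    "Harmony": [6, 5, 6, 6, 6, 5, 6, 6, 6, 6, 6, 6, 6, 6, 6],
}

# Mean rating per item across the three students (the "Average" column).
item_means = [mean(scores) for scores in zip(*ratings.values())]

# Grand mean and range across all 45 ratings.
all_ratings = [score for scores in ratings.values() for score in scores]
grand_mean = mean(all_ratings)
lowest, highest = min(all_ratings), max(all_ratings)

print(round(grand_mean, 1), lowest, highest)
```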

During the one-month follow-up observations, the teachers continued to implement the BIP selected by the students, and mean treatment integrity during these observations was above 90% (range, 91% to 97%) for all three students. Thus, teacher nonverbal behavior corresponded to their verbal behavior regarding the acceptability of the intervention. That is, immediately following the choice phase, both teachers reported that the BIP selected by the child was acceptable, and these verbal reports were confirmed by maintenance of the intervention over time.

Discussion

In the current study, we used three different measures of social validity to assess the acceptability of a BIP with both direct and indirect consumers. All three measures of social validity (student selection, teacher verbal report, and teacher maintenance of implementation) identified an intervention that was acceptable to all parties. To our knowledge, this is the first study to incorporate three different measures of social validity, and one of the only studies to evaluate social validity of procedures that were implemented across an entire school day. When the students were given a choice between two BIPs, all three children reliably selected one of the interventions, and this intervention was reportedly acceptable to the teachers. The teachers reported finding the choice procedure acceptable and manageable for classroom use. The nonverbal behavior of the teachers also suggested that the BIP selected by the students was acceptable because they continued to implement the intervention with integrity over time.

The results of the current study showed that high levels of treatment acceptability for a BIP were obtained across all three measures of social validity. Future studies should evaluate the consistency with which multiple measures of social validity converge. If multiple measures consistently converge, then the easiest or most efficient method of assessing social validity may be sufficient to ensure the acceptability of interventions. For example, assessing social validity through the teachers’ verbal report required the least amount of time when compared to the other social validity measures we assessed. Thus, verbal reports may be a preferable measure if they are found to consistently match other, direct measures of acceptability.

There are currently no guidelines regarding how to select interventions when multiple measures of social validity do not converge, or when the most acceptable intervention is not the most efficacious. We had existing evidence that both interventions were equally efficacious for the students. However, clinicians may not always have a priori information about the efficacy of potential interventions. Alternatively, an intervention known to be less effective may be more preferred by one or more stakeholders. When social validity and efficacy do not align, careful consideration must be given to the context in which the intervention will be implemented. Whenever possible, effective treatments should be developed that incorporate components with high social validity.

Previous research has typically relied on verbal reports as a measure of social validity (Spear, Strickland-Cohen, Romer, & Albin, 2013). In the current study, the verbal reports of teachers were confirmed by direct measure of treatment integrity over time. Direct measurement of integrity may be a useful addition to the literature on measurement of social validity. This addition may be particularly important because individuals’ verbal reports may not match their observed behavior. In the current study, both the direct and indirect measures of social validity indicated that the teachers found the behavioral interventions acceptable. Future studies should examine the extent to which these measures correspond when acceptability on one measure is low.

Our measurement of social validity for the teachers was limited in at least two ways. First, our only direct measure of teachers’ social validity was through the continued use of the intervention over time. We could have also directly measured social validity by replicating our concurrent-chains procedure with the teachers. Future studies could evaluate the extent to which teachers’ choices for BIPs match those of the students. Second, we only had teachers rate the acceptability of the BIP selected by the students. Although the teachers rated the selected BIP highly, it is possible that teachers would have found both interventions to be equally acceptable.

Quantifying agreement between different measures of social validity also warrants further investigation. We obtained global agreement between different measures of social validity, but found it difficult to quantitatively compare across the measures. For example, how much treatment integrity must be maintained over time for the results of this kind of social validity to be said to correspond with high ratings on a social validity questionnaire? How much endorsement is needed on an indirect measure for the intervention to be considered valid? Across what timespan should direct measures of social validity be collected to be an accurate indicator of the acceptability of the treatment?

We obtained only a direct measurement of social validity from the students. Yet, indirect social validity measures have been developed for use with children (e.g., Children’s Intervention Rating Profile; Witt & Elliot, 1985). To our knowledge, there are no direct comparisons of children’s verbal reports of treatment acceptability and nonverbal selections. However, previous research suggests that there may be a high degree of correspondence between verbal and nonverbal measures of stimulus preference for children who have age-appropriate language (e.g., Northup, Jones, Broussard, & George, 1995), suggesting that correspondence between direct and indirect measures of validity is possible with young informants. Future studies may wish to directly evaluate the extent to which students’ verbal reports of social validity correspond to their choices in a concurrent-chains arrangement.

Overall, the results of the current study suggest that direct measures of social validity can be applied to complex behavior intervention plans for elementary students who engage in chronic and severe challenging behavior. Our results suggest that direct measures of social validity may be possible as part of classroom procedures for special-education students, and that such measures can incorporate both student and teacher responses. Despite these promising initial outcomes, there is still much work to be done to determine best practice for evaluating social validity in complex educational environments.

At the beginning of the day the teacher should meet with the target student, show him/her the point card and provide the following instructions:

“While you are working you will earn smiles for being safe, respectful, and responsible.”

“You can earn a smile for being safe if you have safe hands, you stay in your area (i.e., you stay inside the taped area), and you use the materials in the classroom appropriately (for example, you keep your desk on the ground, and you keep your papers and pencils on your desk).”

“You can earn a smile for being respectful if you use nice words when talking to your teachers and friends and you have a quiet voice while you work.”

“You can earn a smile for being responsible if you do your work and you follow your teacher’s directions.”

“If you get all 3 smiles by being safe, respectful, and responsible, then you will get to have a break with items in the bin with either 2 or 3 smile faces!”

“If you get 2 smiles, then you will get to have a break with the items in the bin with 2 smiles.”

“If you get less than 2 smiles, then you will not get a break, and will have to continue working at your desk and try to work for smiles for the next break time for being safe, responsible, and respectful.”

“If you get a total of (goal number) smiles by the end of the day, then you will be able to pick a prize from the prize box.”

At the end of the day the teacher should meet with the target student, show him/her the point card and review his/her goal for that day and the number of smiles earned:

“Today you earned ____smiles for being safe, respectful, and responsible while you were working. Your goal was to earn ____smiles.”

“Great job reaching your goal! I am so proud of you and you can pick a prize from the prize box!”

“You did not reach your goal today so you do not get to pick a prize, but you can work hard tomorrow to reach your goal.”

At the beginning of the day the teacher should meet with the target student, show him/her the break/work board and his/her picture and provide the following instructions:

“At the start of work, your picture will be on the break side of the board.”

“If you have safe hands, you stay in your area (i.e., you stay inside the taped area), and use the materials in the classroom appropriately (for example, you keep your desk on the ground, and you keep your papers and pencils on your desk), then your picture will stay on the break side of the board.”

“When the timer goes off if your picture is still on the break side of the board, then you will get to take a break in the break area. For every break you get in a row, you will get a tally above your picture, and if you have 2 or more tallies above your picture, you can play with an I-pad on your break.”

“If while you are working you do not have safe hands, you do not stay in your area (i.e., you step outside of the tape), or you do not use materials appropriately your picture will be moved to the work side of the board. And any tallies above your picture will be erased.”

“When the timer goes off if your picture is on the work side of the board, then you will have to stay at your desk and work, and you can try and earn the next break.”
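The break/work board rules in the script above amount to a small state machine, sketched below in Python. The class and method names are hypothetical, and two points are assumptions rather than details stated in the script: the tally is added before the break begins, and the picture returns to the break side at the start of every work period.

```python
from dataclasses import dataclass


@dataclass
class BreakBoard:
    side: str = "break"  # the picture starts each work period on the break side
    tallies: int = 0     # breaks earned in a row

    def rule_violation(self):
        """Unsafe hands, leaving the area, or misusing materials."""
        self.side = "work"
        self.tallies = 0  # any tallies above the picture are erased

    def timer_goes_off(self):
        """Return the outcome of this work period and reset for the next one."""
        if self.side == "break":
            self.tallies += 1  # assumption: tally is added before the break
            outcome = "break with I-Pad" if self.tallies >= 2 else "break"
        else:
            outcome = "keep working"
        self.side = "break"  # assumption: picture resets for the next period
        return outcome


# Hypothetical day: two clean work periods, one with a violation, one clean.
board = BreakBoard()
outcomes = [board.timer_goes_off(), board.timer_goes_off()]
board.rule_violation()
outcomes.append(board.timer_goes_off())
outcomes.append(board.timer_goes_off())
print(outcomes)
```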