A systematic review (SR) is an article that provides a “synthesis of the available evidence”: it reviews the quantitative and qualitative aspects of primary studies with the aim of summarising the existing information on a particular topic. After collecting the articles of interest, the researchers analyse them and compare the evidence they provide with that of similar studies. An SR is justified when there is uncertainty about the effect of an intervention because the available evidence on its real usefulness is conflicting; when the magnitude of the effect of an intervention needs to be known; and when the behaviour of an intervention in subgroups of subjects needs to be analysed.

The aim of this article is to provide an update on the basic concepts, indications, strengths and weaknesses of SRs, as well as on how an SR is developed, the most important potential biases to be taken into account in this type of design, and the basic concepts of meta-analysis. Two examples of SRs are also included; these should be of use to surgeons, who often come across this type of design when searching for scientific evidence in biomedical journal databases.

Systematic reviews (SR) enable us to stay up to date on different topics of interest in a timely manner. Nevertheless, this type of study does not always carry level 1 evidence, nor does it guarantee the validity, veracity, methodological quality, reliability or reproducibility of its results.1 It must be kept in mind that some SR draw their study populations from randomized clinical trials (RCT) and may reach level of evidence 1a, whereas others draw on low-quality RCT, cohort studies or other observational studies. In the latter cases the level of evidence is lower, which may mislead readers who do not consider these details when reading such articles.1

SR are studies whose population consists of already-published articles; in other words, they are studies of studies. An SR compiles the information generated by clinical investigations on a specific topic, which is sometimes assessed mathematically with a meta-analysis. The results are then used to draw conclusions that summarize the comparative effects of different medical interventions.

SR use strategies to limit bias and random error. These include an exhaustive search for all relevant articles, reproducible selection criteria, an evaluation of the design and characteristics of each study, and a synthesis and interpretation of the results.

Systematic reviews should be done objectively, rigorously and meticulously from a qualitative and quantitative standpoint. Methodological and mathematical tools are used to combine the data compiled from primary studies while maintaining the individual effect of each study included. In this way, the weight of each can be determined in the calculation of the combined effect (based on the sample size and methodological quality of each study) as the evidence that is generated is synthesized.

As is the case with all articles, SR should be assessed according to their internal validity, the magnitude of their results and their external validity. To do so, there are critical reading guidelines2,3 such as the CASPe program, which has designed a guide with key points,4 as well as tools for improving the precision of reporting. These include the QUOROM initiative, created for meta-analyses (MA) of RCT,5,6 and the PRISMA statement for reporting SR and MA.7

Reasons for Systematic Reviews

The vast production of research articles (more than 2 million per year)8 is a problem for clinicians: it has been calculated that at least 17 articles per day would need to be read in order to keep up with the latest developments.9 In addition, the articles are not always of the utmost quality, which sometimes results in more contradiction than clarification. Thus, the essential reason for publishing SR is quite practical: we need SR in order to integrate such a large amount of information and to provide a rational basis for decision-making in health care.10,11 Other reasons for developing SR are the following:

- There is uncertainty about the effect of an intervention because the evidence on its actual utility is conflicting.

- There is a desire to quantify the actual effect of an intervention.

- There is a need to analyze the behavior of an intervention in subgroups of subjects. For example, an RCT designed to determine the effectiveness of omeprazole in preventing stress-related upper gastrointestinal tract bleeding in critically ill patients could not answer the question of whether the intervention is particularly effective in the subgroup of subjects on mechanical ventilation or in those with cranio-encephalic trauma.

- The effect of the intervention being studied is small or moderate. For example, if we wanted to compare the effect of two surgical techniques with an RCT and we estimated that the difference in effectiveness between the two was 5%, we would need to treat 3208 patients (1604 with each technique) in order to have an 80% probability of reaching a P value <.05.12 When a treatment effect is small, a significant P value may arise by chance alone or from biases between the compared groups.13–15
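The sample-size reasoning in the last point can be sketched with the usual normal-approximation formula for comparing two proportions. This is only an illustration: the baseline event rates (50% versus 45%) and the function name `patients_per_group` are assumptions, since the text gives only the 5% difference, the 80% power and the P<.05 threshold. The result therefore lands in the same order of magnitude as the figure quoted above without necessarily reproducing it.

```python
from math import ceil
from statistics import NormalDist


def patients_per_group(p_control: float, p_new: float,
                       alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate patients per arm to detect p_control vs p_new (two-sided test)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_beta = NormalDist().inv_cdf(power)           # quantile matching the desired power
    variance = p_control * (1 - p_control) + p_new * (1 - p_new)
    difference = p_control - p_new
    return ceil((z_alpha + z_beta) ** 2 * variance / difference ** 2)


# Hypothetical event rates: 50% with one technique versus 45% with the other.
n = patients_per_group(0.50, 0.45)
print(f"about {n} patients per group, {2 * n} in total")
```

Doubling the expected difference to 10% cuts the required sample to roughly a quarter, which is why small or moderate effects are so hard to demonstrate in a single trial.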

Strengths

This type of research design is efficient. It can increase statistical power and the precision of estimates, improve the consistency and generalizability of the results, and allow the published information to be appraised rigorously.9

By combining the information from several primary or individual studies, SR are able to analyze the consistency of the results. Many primary studies have small samples and therefore insufficient statistical power. Integrating studies that address the same question increases the sample size and, with it, the statistical power.13

Some argue that an SR is like comparing apples and oranges; others think that this very characteristic increases the external validity or generalizability of the results. Similar effects seen in different settings, with different inclusion and exclusion criteria for the study subjects, give us an idea of how robust the results of an SR are and how transferable they are to other settings.16

Weaknesses

If studies of poor methodological quality, which cannot ensure that potential biases have been minimized, are included, the SR will produce unrealistic results (it must be remembered that the articles are the individuals being studied, so that, in the analysis, the total number of articles is the sample size).17

When the primary studies are RCT, incorrect randomization or allocation without a concealed sequence, incorrect blinding, and losses to follow-up that leave a final population different from the one originally allocated will notably distort the results.9,15,16

Care must also be taken when interpreting the results due to, among other things, the heterogeneity of the primary studies, not only in terms of different design types but also in terms of their potentially diverse methodological quality. In fact, some believe that SR should be considered more of a tool for generating hypotheses than high-quality evidence.18

On the other hand, SR and MA are methodological tools that require knowledge, practice and experience in search and review methods, as well as in the compilation, application and interpretation of the results obtained.19

Other problems of SR have to do with the reviewers. The authors may not specify how the search was done or how the information was assessed, and, when that information is missing, readers cannot repeat and verify the results and conclusions of the review.20

In recent years, there has been a great increase in SR in all clinical practice settings. Nonetheless, research into the quality of SR has demonstrated that not all reviews are truly systematic, methodological quality is variable, and biases are evident.19 In other words, while SR have provided hierarchization and summaries of knowledge in a series of situations, it is also necessary to mention that biomedical journal databases include numerous examples of poor-quality SR, either in methodology or subject matter, which confuse clinicians more than they help.

One of many examples of these problems is the article by McCulloch et al.,21 an SR whose objective was to evaluate survival and perioperative mortality after gastrectomy for stomach cancer with D1 vs D2 lymphadenectomy. This SR has both methodological and technical problems. On the methodological side, the authors report working with 2 randomized clinical trials (CT), 2 non-randomized CT and 11 cohort studies; however, when the characteristics of the included studies are examined in detail, they turn out to be CT, cohort studies and retrospective case series (apparently mistaken for cohort studies). On the technical side, all the included studies date from the last century: one case series was published in 1975 and the other publications appeared between 1993 and 1999, while perioperative care has obviously changed in the last 15 years. Moreover, the concept of D2 has changed in recent years, which directly affects postoperative morbidity and possibly postoperative mortality and, therefore, the survival of these patients. These elements led us to read with caution the results and conclusions, which suggested certain benefits of D2 with a supposed level of evidence of 1a. In light of our critical reading, the level of evidence should instead be defined as “unclassifiable”, because none of the classifications considers SR that combine different design types.

Due to the issues that we have mentioned, systematic reviews should be assessed with a critical eye before deciding whether the conclusions are based on appropriate internal and external validity.

The Stages of Systematic Reviews

Formulating the Problem

As in any research, the first step is to identify the problem and formulate a very well defined question about it. The mnemonic “PICO” is very useful for this: P is the health problem or patient being studied, I is the intervention to be performed, C is the comparison (what is currently done for the problem, against which the intervention is compared) and O is the outcome.

Localization and Selection of Primary Studies

This step involves defining the article selection criteria, the population characteristics and the intervention performed. For the search, key words should be selected, either MeSH terms or free terms, together with the Boolean operators to be used. With these words, the search begins in SR meta-search engines such as the Cochrane Library and TripDatabase and then continues in common databases (MEDLINE, EMBASE, ScienceDirect, SciELO, LILACS, etc.). The search should not be limited to MEDLINE alone, as it only represents approximately 60%–70% of the published material.
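As a rough sketch of how MeSH terms, free terms and Boolean operators fit together, the snippet below assembles a PubMed-style query: synonyms for one concept are joined with OR, and the concepts are joined with AND. The helper names and the chosen terms are illustrative assumptions built around the topic of Example 1; this is not the search strategy used in any of the reviews cited here.

```python
def concept_block(terms: list[tuple[str, str]]) -> str:
    """Join the synonyms for one concept with OR, each tagged with its search field."""
    return "(" + " OR ".join(f'"{term}"[{field}]' for term, field in terms) + ")"


def build_query(concepts: list[list[tuple[str, str]]]) -> str:
    """Join the concept blocks with AND so that every concept must be present."""
    return " AND ".join(concept_block(terms) for terms in concepts)


# Illustrative terms only: a MeSH heading plus a free-text synonym per concept.
population = [("Cholecystectomy, Laparoscopic", "MeSH Terms"),
              ("laparoscopic cholecystectomy", "Title/Abstract")]
intervention = [("Antibiotic Prophylaxis", "MeSH Terms"),
                ("prophylactic antibiotics", "Title/Abstract")]
outcome = [("Surgical Wound Infection", "MeSH Terms"),
           ("surgical site infection", "Title/Abstract")]

query = build_query([population, intervention, outcome])
print(query)
```

Writing the strategy down in this explicit form also makes it easy to report in full, so that other researchers can repeat the search.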

In addition to what is published in these and other databases, the so-called “gray literature” should ideally be searched as well. This comprises material that is not indexed in Index Medicus or other databases (theses, medical conference abstracts, pharmaceutical industry reports, etc.). It is estimated that “gray literature” represents approximately 10% of the information published about any given problem.

Evaluation of the Methodological Quality of the Studies

This entails assessing internal validity and any possible biases by using published guidelines such as the standard guide published by the Cochrane Collaboration.10 This phase should be done by at least two independent researchers who are blinded in order to avoid evaluation biases.22,23

Extracting Data

The data are compiled with a template that captures all the relevant information from the primary articles (year of publication, authors, journal, main and secondary results and their methodological evaluation).24

Analysis and Presentation of the Results

The role of the reviewers is to try to explain the possible causes of variation among the results of the primary articles, which may be due simply to chance or to differences in study design, sample size, or the way the exposure, intervention or outcomes were measured. The results can be interpreted from a qualitative and a quantitative standpoint (with a meta-analysis).25

Presentation of the Results

When writing the report, it must be kept in mind that reviews are based on systematization. Therefore, all the steps of the process used for developing the review should be explained clearly and in detail so that any reader who wants to repeat the study is able to do so. There are several guidelines for correctly writing systematic reviews, such as the QUOROM initiative5,6 (SR with MA), MOOSE26 (SR of observational studies with MA) or the PRISMA statement.7 A flowchart for the selection of the articles is essential, as are graphic representations of the results of the studies included and their MA.8

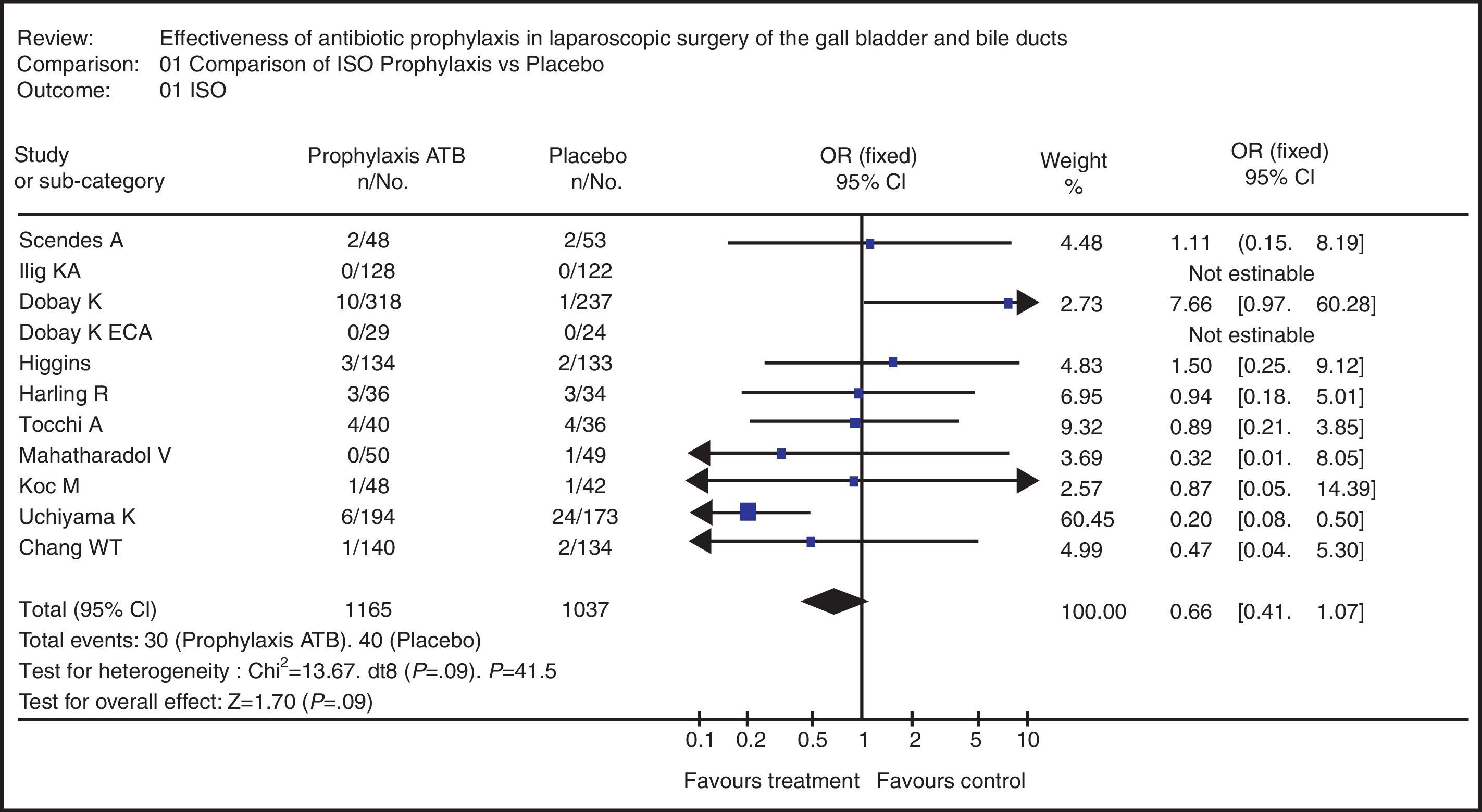

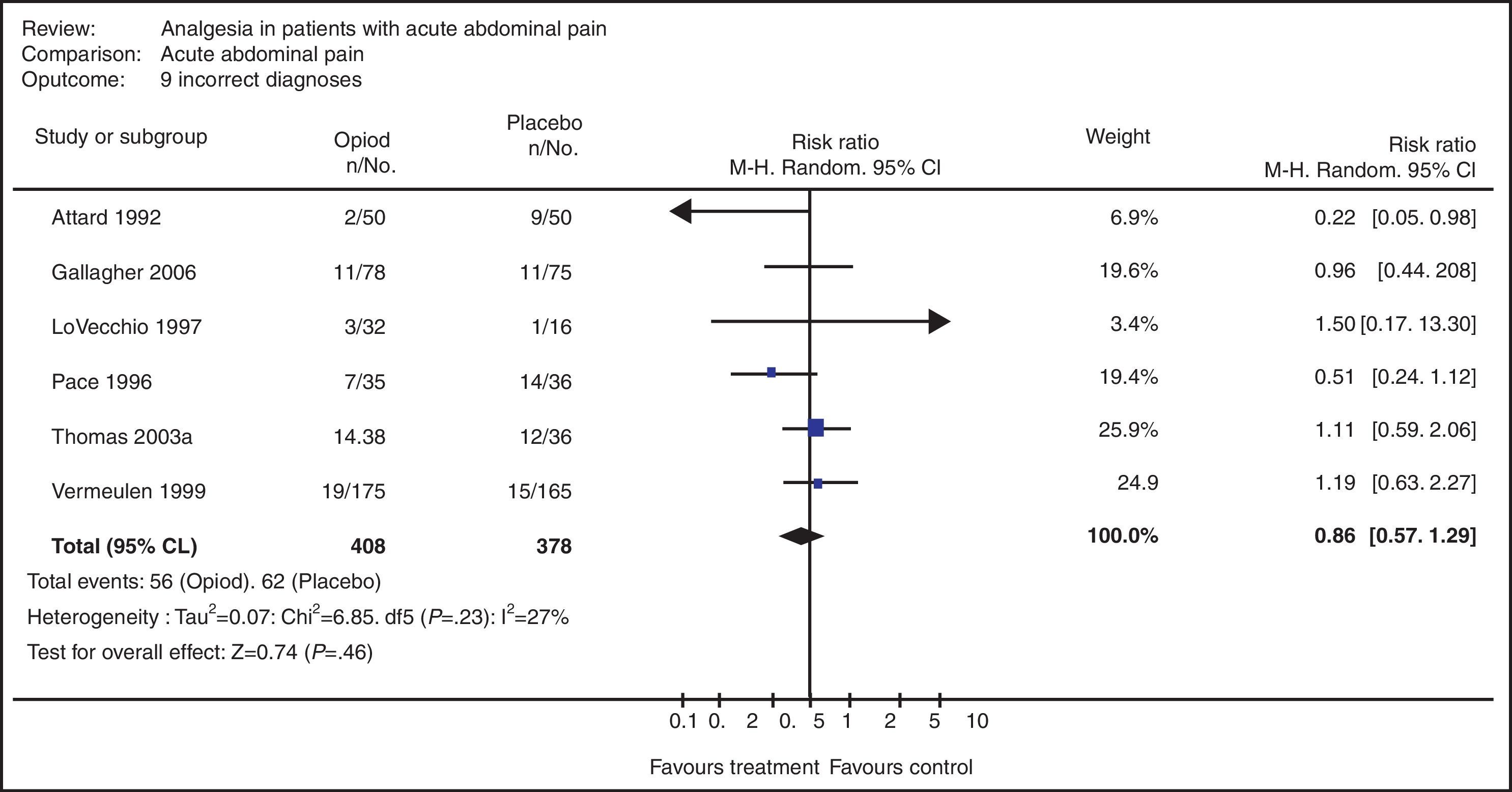

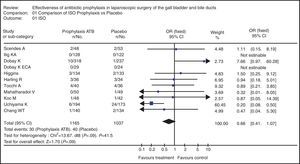

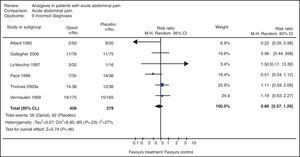

In the examples of Figs. 2 and 3, the result of each primary article is represented as a point whose size reflects the weight (largely the sample size) contributed by that study. The point sits on a horizontal line that represents the confidence interval of that study, drawn in relation to a vertical line of no effect that divides the chart into two areas. If the confidence interval crosses the vertical line, the result of that study is not statistically significant. In these examples, results to the left of the vertical line are positive or beneficial, and those to the right are negative or harmful. At the bottom of the chart is the result of the statistical analysis of the degree of heterogeneity of the studies. Finally, a rhombus symbolizes the result of the MA: its center marks the combined estimate and its horizontal extent represents the confidence interval of that estimate.

Ethical Considerations

The identities of the authors and centers that generated the primary studies should be masked from the reviewers, and this masking should be maintained until the end of the study. This guarantees the privacy of the authors and minimizes observer bias. In addition, the results should be assessed independently in order to avoid improper manipulation of the research.27

Biases in Systematic Reviews

Publication Bias

Occasionally, studies in which an intervention is not effective are not published. Therefore, SR that are not able to include unpublished studies may overestimate the actual effect of an intervention.17,22,33

Selection Bias

This refers to systematic differences between the groups of patients being compared with regard to prognosis or probability of response to treatment. When such differences exist, the differences found between the groups cannot be unequivocally attributed to the intervention being studied, as they may largely be due to other differences between the groups. Random allocation with adequate concealment protects against selection bias by ensuring that the groups are comparable in everything except the intervention administered.17,20,23

Observer Bias

This is not considered very important in the context of SR, as all the articles and authors must be reported. Nevertheless, the identity of the candidate studies can be concealed from the reviewers during selection. Doing so is worthwhile because reviewers may have a tendency to favor or disfavor known authors.20

Meta-analysis

Described in 1976 by Gene Glass, the term meta-analysis (MA) comes from the Greek word meta (meaning after) and analysis (description or interpretation). It involves the statistical analysis of the collected results extracted from primary or individual studies with the aim of integrating the findings obtained.20

MA have two phases. The first entails calculating the measured effect for each study and its confidence interval. The second is to calculate the overall, summary or combined intervention effect as a weighted average of the effects obtained in individual studies.28
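The two phases can be sketched as follows for a dichotomous outcome. The sketch assumes inverse-variance weighting on the log odds ratio scale, which is one common way of forming the weighted average; the text does not specify a weighting scheme. The three studies are invented purely for illustration.

```python
from math import exp, log, sqrt
from statistics import NormalDist

Z95 = NormalDist().inv_cdf(0.975)  # about 1.96, for 95% confidence intervals


def log_odds_ratio(events_t, total_t, events_c, total_c):
    """Phase 1: effect (log odds ratio) and standard error for one study."""
    a, b = events_t, total_t - events_t   # treated arm: events, non-events
    c, d = events_c, total_c - events_c   # control arm: events, non-events
    effect = log((a * d) / (b * c))
    se = sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    return effect, se


def pool_fixed(effects):
    """Phase 2: weighted average of study effects, weights = 1 / variance."""
    weights = [1 / se ** 2 for _, se in effects]
    pooled = sum(w * e for w, (e, _) in zip(weights, effects)) / sum(weights)
    pooled_se = sqrt(1 / sum(weights))
    return pooled, pooled_se


# Hypothetical studies: (events, patients) in the treated arm, then in the control arm.
studies = [(4, 100, 6, 100), (10, 250, 14, 240), (3, 80, 5, 85)]
effects = [log_odds_ratio(*s) for s in studies]
pooled, pooled_se = pool_fixed(effects)
low, high = exp(pooled - Z95 * pooled_se), exp(pooled + Z95 * pooled_se)
print(f"pooled OR {exp(pooled):.2f} (95% CI {low:.2f} to {high:.2f})")
```

Because larger studies have smaller variances, they receive more weight, and the combined estimate is more precise than any single study.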

The objective of MA is to integrate the studies and obtain overall information from the results provided by each article. To do so, it is first necessary to define what type of variable the result of interest is. If it is a continuous variable (days of hospitalization, survival, etc.), we should calculate the effect size (Fig. 2). In this manner, the results of the primary studies are expressed in a common unit of measurement that can be compared or integrated.20 On the other hand, if the result of interest is a dichotomous variable (alive or dead, complicated or uncomplicated, etc.), then relative measures such as the odds ratio or the relative risk (both obtained from a cross tabulation) should be used, together with absolute measures such as the absolute risk reduction and the number needed to treat. The relative measures express the effect or result observed in one group compared with the effect in the other group.
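For a dichotomous outcome, the relative and absolute measures mentioned above all follow from the same 2×2 cross tabulation. The function below is a minimal sketch; its name and the example counts are hypothetical.

```python
from math import ceil


def two_by_two_measures(events_t, total_t, events_c, total_c):
    """Relative and absolute effect measures from a 2x2 table of event counts."""
    risk_t = events_t / total_t                # risk in the treated group
    risk_c = events_c / total_c                # risk in the control group
    odds_t = events_t / (total_t - events_t)   # odds in the treated group
    odds_c = events_c / (total_c - events_c)   # odds in the control group
    arr = risk_c - risk_t                      # absolute risk reduction
    return {
        "relative_risk": risk_t / risk_c,
        "odds_ratio": odds_t / odds_c,
        "absolute_risk_reduction": arr,
        # The number needed to treat is only meaningful when treatment reduces risk.
        "number_needed_to_treat": ceil(1 / arr) if arr > 0 else None,
    }


# Hypothetical trial: 10/100 events with treatment versus 20/100 with control.
measures = two_by_two_measures(10, 100, 20, 100)
for name, value in measures.items():
    print(name, value)
```

With these invented counts the relative risk is 0.5 while the odds ratio is about 0.44, a reminder that the two relative measures are not interchangeable when events are common.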

There is a problem, however, that must be kept in mind in this stage of MA: the heterogeneity of the primary studies, which, if present, reduces the accuracy of the final result. In these cases, a subgroup analysis is recommended, using the articles that are most similar for each study subgroup. The heterogeneity of the primary studies may occur as a consequence of the application of definitions or the use of dissimilar selection criteria among the original studies.29

There are two statistical models used to obtain the estimated effect in a group of primary studies: the fixed effects and random effects models. The fixed effects model only includes the imprecision of each study as a source of variation. The random effects model includes two sources of variation: the imprecision of the estimate from each study and study-to-study variation. Despite these differences, there is no agreement regarding which model is best; however, there is agreement that if there is some heterogeneity, it does not seem reasonable to use a fixed-effects model.28–30
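The difference between the two models can be illustrated numerically. The sketch below uses Cochran's Q and the I² statistic to describe heterogeneity and the DerSimonian-Laird estimator for the between-study variance. These specific techniques are an assumption on our part, chosen because they are widely used; the text does not name them. The effects and standard errors are invented.

```python
from math import sqrt


def fixed_and_random(effects, std_errors):
    """Compare fixed-effect and random-effects pooling of study effects."""
    w = [1 / se ** 2 for se in std_errors]            # fixed-effect weights
    fixed = sum(wi * e for wi, e in zip(w, effects)) / sum(w)

    # Cochran's Q: weighted squared deviations from the fixed-effect estimate.
    q = sum(wi * (e - fixed) ** 2 for wi, e in zip(w, effects))
    df = len(effects) - 1
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0

    # DerSimonian-Laird estimate of the study-to-study variance (tau squared).
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c)

    # Random-effects weights add tau squared to each study's own variance.
    w_re = [1 / (se ** 2 + tau2) for se in std_errors]
    random = sum(wi * e for wi, e in zip(w_re, effects)) / sum(w_re)
    return {"fixed": fixed, "se_fixed": sqrt(1 / sum(w)),
            "random": random, "se_random": sqrt(1 / sum(w_re)),
            "Q": q, "I2": i2, "tau2": tau2}


# Hypothetical log odds ratios and standard errors from four studies.
result = fixed_and_random([-0.9, -0.1, 0.4, -0.6], [0.20, 0.25, 0.30, 0.15])
for name, value in result.items():
    print(f"{name}: {value:.3f}")
```

When the studies disagree, the random-effects estimate has a larger standard error than the fixed-effect one, so its confidence interval is wider; when they agree, the two models give the same answer.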

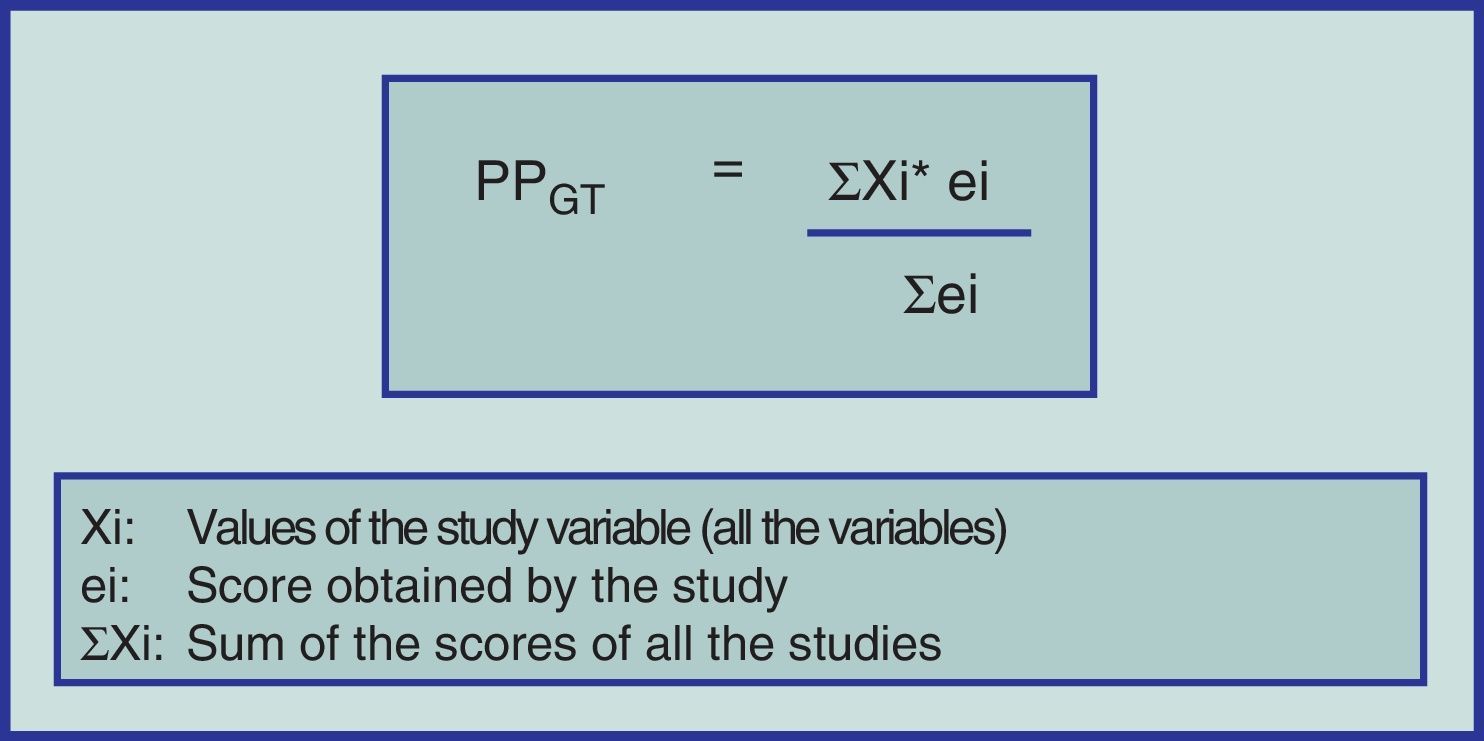

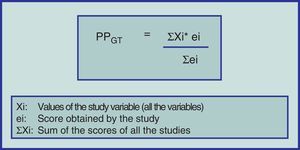

Until now, we have only given a brief explanation of the MA format for studying CT with or without random assignment and, occasionally, cohort studies. However, alternative methodologies have been developed to carry out SR with different design types (including case series) and subsequently compare the results of two or more interventions by meta-analyzing the information. To do this, “weighted averages” can be calculated, based on the methodological quality of each primary study, for each variable to be studied (Fig. 1).31–33

Example 1

An SR was used to evaluate the effectiveness of prophylactic antibiotics in laparoscopic cholecystectomy with regard to the incidence of surgical site infection (SSI). CT and cohort studies of patients over the age of 18 were analyzed. The Cochrane, MEDLINE, SciELO and LILACS databases were searched using MeSH and free terms. The search found 77 articles, of which 17 met the inclusion criteria; the complete article could be obtained for only 11 of them.33

When methodological quality was assessed using the MINCIR methodology and weighted averages,23 an average of 18.5 points was found, and the population of the studies was 2271 patients (1196 in the prophylactic antibiotic arm and 1077 who received placebo).

The MA gave an odds ratio of 0.726 (95% CI 0.429–1.226). Because the confidence interval includes 1, the MA provides no evidence that the use of prophylactic antibiotics protects against the development of SSI in patients who underwent laparoscopic cholecystectomy (Fig. 2). On the chart, it can be observed that there is slight heterogeneity among the primary studies (P=0.09) and that the main rhombus crosses 1.33
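The reading of this result can be checked with a short calculation. The sketch below assumes that the reported interval is symmetric on the log scale, as is usual for odds ratios, and recovers an approximate standard error and two-sided P value from the published figures. The variable names are ours, and the P value is a back-calculation rather than a number reported in the review.

```python
from math import log
from statistics import NormalDist

normal = NormalDist()
z95 = normal.inv_cdf(0.975)  # about 1.96

# Figures reported for the meta-analysis in Example 1.
odds_ratio, ci_low, ci_high = 0.726, 0.429, 1.226

# The CI spans 2 * z95 standard errors on the log scale.
se_log_or = (log(ci_high) - log(ci_low)) / (2 * z95)
z = log(odds_ratio) / se_log_or
p_value = 2 * normal.cdf(-abs(z))   # two-sided P value against OR = 1
includes_null = ci_low <= 1.0 <= ci_high

print(f"SE of log OR: {se_log_or:.3f}")
print(f"z: {z:.2f}, approximate two-sided P: {p_value:.2f}")
print("confidence interval includes 1:", includes_null)
```

The back-calculated P value is well above 0.05, which matches the fact that the rhombus crosses the line of no effect.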

Fig. 2. Meta-analysis of the systematic review on the use of prophylactic antibiotics vs placebo in patients with cholelithiasis who underwent laparoscopic surgery in terms of the variable “surgical site infection”.33

Example 2

An SR was designed and carried out to determine whether the use of opioid analgesics (OA) during the diagnostic work-up of patients with acute abdominal pain (AAP) increases the risk of diagnostic error compared with the administration of placebo.34

A search was done in the Cochrane, MEDLINE and EMBASE databases using MeSH terms, Boolean operators and limits. Only randomized CT were included, with no restrictions on language or date of publication.

The search identified 322 pertinent articles, of which only 59 (18.3%) met the selection criteria. Of these 59 articles, 51 were found to meet exclusion criteria when reviewed in detail. The final analysis therefore considered 8 studies, which provided the MA with a total of 699 study subjects (363 with OA and 336 with placebo).

The MA found no evidence that the use of opioids increases incorrect diagnoses (Fig. 3). The chart shows that there is no heterogeneity among the primary studies (P=0.23) and that the main rhombus crosses 1. Furthermore, the MA of other variables showed that the use of OA during the diagnostic work-up of patients with AAP is useful in terms of patient comfort and does not delay decision-making.34

Fig. 3. Meta-analysis of the systematic review on the use of opioid analgesics vs placebo in the diagnosis of patients with acute abdominal pain in terms of the variable “diagnostic error”.34

This study has been partially funded by the DI09-0060 Research Grant from the Universidad de La Frontera.

Conflict of Interests

The authors have no conflict of interests to declare.

Please cite this article as: Manterola C, et al. Revisiones sistemáticas de la literatura. Qué se debe saber acerca de ellas. Cir Esp. 2013;91:149-55.