The generation and evaluation of scientific evidence and explanations is a fundamental scientific competency that science education should foster. As a learning strategy, self-explaining refers to students’ generation of inferences about causality, which in science can be related to making sense of how and why phenomena happen. Substantial empirical research has shown that activities that elicit self-explaining enhance learning in the sciences. Despite the potential of self-explaining, college instruction often presents chemistry as a rhetoric of conclusions, thereby instilling the view that chemistry is a mere collection of facts. In addition to a frail understanding of the concept, two other factors may contribute to the underuse of self-explaining activities in college chemistry: the lack of an accessible corpus of literature and the lack of research related to chemical education. This paper intends to contribute to improving the understanding of self-explaining in chemistry education and to describe the current state of research on self-explaining in tertiary-level science education. This work stems from preliminary research on ways to promote engagement in self-explaining during chemistry instruction and to assess how different levels of engagement influence learning of specific chemistry content.

The generation and evaluation of scientific evidence and explanations is a fundamental competency that science teaching seeks to cultivate. As a learning strategy, self-explanation refers to students’ generation of causal inferences. In science, this relates to making sense of how and why phenomena occur. A substantial body of empirical research has shown that activities that promote self-explanation improve science learning. Despite the potential of this type of strategy, university instruction commonly presents chemistry as a rhetoric of conclusions, thereby instilling the idea that chemistry is a mere collection of facts. A fragile understanding of the concept, lack of access to the literature, and the scarcity of research related to the field of chemical education are some of the factors that contribute to the limited use of self-explanations. This article aims to contribute to improving the understanding of self-explanations in chemistry education and to describe the current state of research on the topic at the level of university teaching. This work is based on preliminary research that studies ways to promote self-explanations during chemistry instruction and to evaluate how different levels of their use influence the learning of chemistry content.

“Questions — apart from rhetorical ones — may be considered to invite answers, but not all questions invite explanations”

Introduction

The prevalent trend in college chemistry instruction in the twentieth century relied on what Lemke (1990) described as the classroom game, which places students in a passive role (Byers & Eilks, 2009) and is characterized by instruction-centered and teacher-centered, non-interactive lecturing (Kinchin et al., 2009; Barr & Tagg, 1995; Cooper, 1995). In this model, which persists to date, the instructor does most of the sense-making and explaining, and learning is often trivialized to knowing the correct answers or producing well-rehearsed answers when prompted. This dogmatic instructional approach, which we identify with Schwab and Brandwein’s rhetoric of conclusions (1962), perpetuates the view of science as a mere collection of facts. Chamizo (2012) underscores the negative impact of reducing education to a means of informing in an era when information is ubiquitous and continuously produced, disseminated and accessible. Furthermore, he maintains that the aim of education should be “to help students to reason through scientific thinking rather than regurgitate the conclusions of science” (Chamizo, 2012).

Many chemistry educators have joined in calling attention to the need for a shift in paradigm and in promoting “understanding chemistry as a way of thinking” (Talanquer & Pollard, 2010). Likewise, the US National Research Council suggests that the generation and evaluation of scientific evidence and explanations is a fundamental scientific competency that science education should foster (Granger et al., 2012). Employing instructional methods that prompt learners to engage directly in sense-making supports this objective. Sound empirical evidence shows that prompting students to do more self-explaining is an effective strategy to this end (Durkin, 2011). Self-explaining refers to students’ generation of inferences about causal connections between objects and events (Siegler & Lin, 2009). In science, this may be summarized as a fundamental aspect of doing science: making sense of how and why actual or hypothetical phenomena take place.

Despite the widely accepted research evidence supporting the use of self-explaining, chemistry instruction at the tertiary level rarely utilizes this type of strategy. It is only natural that many college science instructors, educated in a content-centered and teacher-centered tradition, bring to the learning environment the beliefs and practices associated with their own experience as students (Byers & Eilks, 2009), thereby perpetuating the use of traditional methods for teaching science (Deslauriers et al., 2011). In the teacher education literature, Lortie (1975) described this phenomenon as “apprenticeship of observation.” For many, this term condenses the idea that, as a consequence of having experienced instruction as students (the apprenticeship period), individuals are prone to teach the way that they were taught. Understandably, instructors for whom no formal pedagogical training mediates the transition from student to instructor may be more predisposed to this outcome. This default option is intuitive and imitative, and it generates the false sense of expertise that many discipline-based science educators will agree abounds in college science departments (Sandi-Urena & Gatlin, 2013).

Understanding the differences between explaining-to-oneself as a learning strategy and reproducing a shared and scientifically accepted explanation to test factual knowledge (or how much attention pupils pay to lecture) is essential in improving instruction. This understanding may help chemistry educators identify the potential benefits of self-explaining in promoting science learning, as recommended by policy makers and educational experts. Furthermore, one would expect that empirical evidence gathered in college chemistry learning environments will make a stronger case for the implementation of self-explaining strategies by college chemistry educators. This paper intends to contribute to improving the understanding of self-explaining in chemistry education and to describe the current state of research on self-explaining in tertiary-level science education. This work stems from preliminary research into methods to promote engagement in self-explaining during chemistry instruction and to assess how different levels of engagement influence learning of specific chemistry content.

Self-explaining, an overview

Research findings have shown that implementing activities that elicit self-explaining improves learning (Chi et al., 1994) and enhances authentic learning in the sciences (Chi, 2000; Atkinson et al., 2003; Songer & Gotwals, 2012). Similarly, research suggests that self-explaining influences many aspects of cognition, including acquisition of problem-solving skills and conceptual understanding (Siegler & Lin, 2009). The act of self-explaining, by its very nature, requires the reader to be aware of the comprehension process, thereby influencing metacognition (McNamara & Magliano, 2009).

After recent experiences discussing self-explaining with chemistry instructors, we feel it necessary to clarify what self-explaining is not. Instructors who have been mostly exposed to and immersed in the Instruction Paradigm (Barr & Tagg, 1995) often equate the successful transmission of knowledge, the cornerstone of traditional views of teaching, with the students’ ability to reproduce the teacher’s explanations upon the appropriate prompt. Often, simply uttering or writing the statement, rule, or theory associated with the question suffices as explanation. Students who become good players of the classroom game may resort to stringing together key words, often producing almost unintelligible sentences, in a bid to score at least partial credit; unfortunately, this strategy may often work. Of course, this applies to the rare cases where college introductory chemistry assessment requires written responses and not just recognizing the most likely correct answers from a set of multiple-choice options.

Other agents participating in the instructional process may reinforce the deeply rooted belief that knowing and reproducing a learned answer counts as both explanation and evidence for learning. Our introductory quote of Taber and Watts (2000) succinctly stresses this difference: Questions invite answers, but not all answers are explanations. To exemplify this point, it may suffice to take a quick look at exercises, solved problems and other learning tools in current textbooks and many online homework systems.

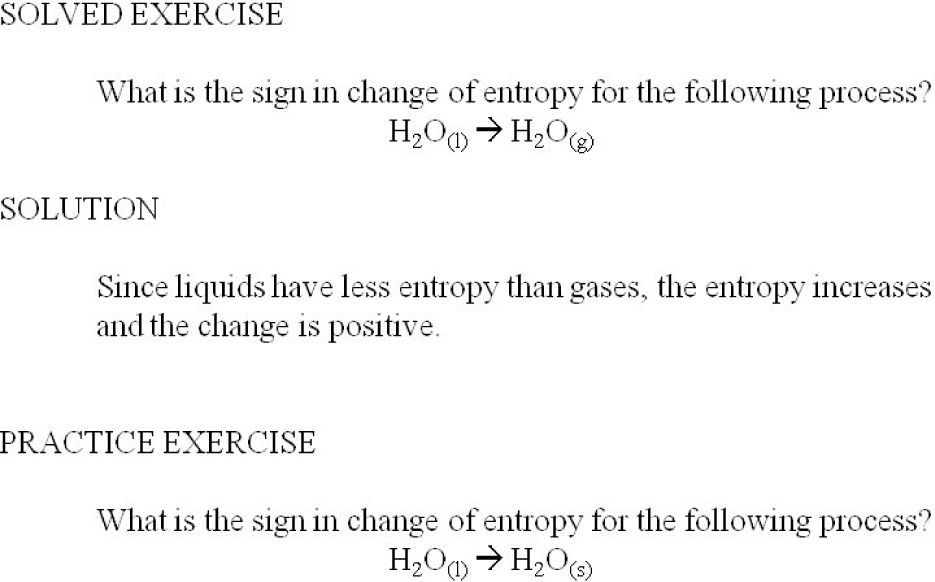

Although created by us, Figure 1 shows an example that accurately resembles those in typical general chemistry textbooks. Evidently, in this particular case it is correct to state that the change in entropy is negative. Likewise, alluding to the decrease in entropy as a consequence of the initial state, water vapor, having greater entropy than the final state, liquid water, is correct, too. However, the process of associating the question with one of several prepackaged answers does not constitute self-explaining, and we would argue it does not constitute explaining at all. Taber and Watts (2000) characterized such responses as pseudoexplanations that are more concerned with “I know that is because” than with “that is because.” Unfortunately, in the traditional ways of looking at learning chemistry, parroting that “gases have more entropy than liquids” (even attributing substance nature to entropy) may be an acceptable form of explaining.

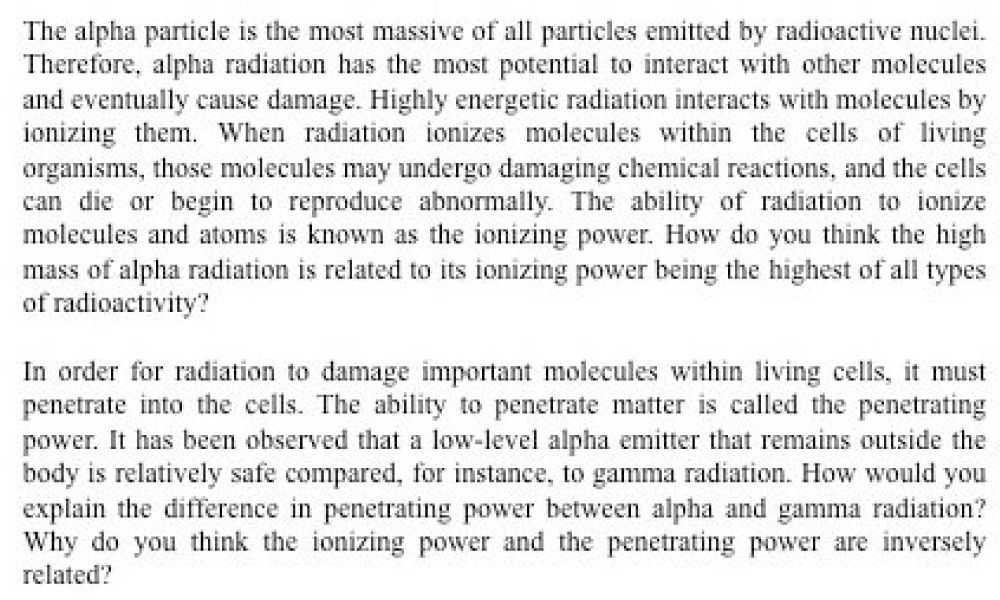

While we do not intend to undermine the relevance of appropriately using well-established scientific explanations in arguments or recalling important information, we do intend to distinguish the practice of regurgitating others’ explanations from self-explaining, which is characterized by the interpretation of evidence to generate explanations. In fact, the National Research Council identifies “the ability to know, use, and interpret scientific explanations of the natural world” as a fundamental scientific competency (Granger et al., 2012; Michaels et al., 2008). As chemistry educators, we expect chemistry learners to become familiar with and proficient in the use of the models and theoretical frameworks of our science, and, in fact, the processes that lead to this proficiency are closely related to self-explaining. Figure 2 shows a short self-explaining task that we are testing with general chemistry students. This task is presented to the students after the discussion of the discovery of radioactivity and framed as an in-class learning activity.

In this simple task, learners are challenged to interpret some basic information in order to explain the relationship between a particle’s mass and its ionizing power and, ultimately, to self-explain why larger particles would cause more damage to cells. Then a phenomenon is presented: the relative order of penetrating power. Learners are required to make causal inferences to explain this relative order. The constructive cognitive activities in which the students engage in this process may facilitate the modification of available prior knowledge and understanding and the construction of new knowledge (Ploetzner et al., 1999).

On the other hand, instructional approaches that favor a passive learning mode (e.g., providing explanation through lecturing) are not conducive to this type of constructive activity. Traditional approaches promote students’ simple creation of another encyclopedic entry in their repertoire of learned answers, that is, they invoke direct storage of the presented information as the main cognitive process (Fonseca & Chi, 2010). Teaching the conclusions of science nurtures the negative cycle of expectations that places students in a passive role where they see themselves as receptors of knowledge in the form of answers for examinations and their instructors as walking encyclopedias (Willcoxson, 1998).

The matter of what may constitute an explanation in chemistry has been tackled by Taber and Watts (2000). These authors focused on the nature of students’ responses to questions and the distinguishing characteristics that make some of them mere responses while others are framed as explanations. Their analytical framework allows further characterization of pseudoexplanations and real explanations. Other studies have continued the investigation of the qualities, nature and structure of explanations in college chemistry courses, thus attempting to fill a void in the understanding of explanations within specific academic domains (Talanquer, 2007; Talanquer, 2009; Stefani & Tsaparlis, 2009).

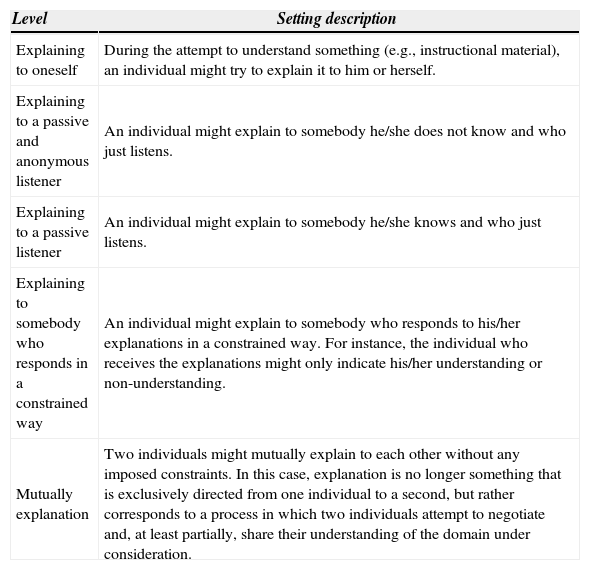

Whereas these studies look at the competence of students in generating explanations of diverse nature and qualities through the response to an assessment instantiation, we are more concerned with the learning facet. That is, we are concerned with self-explaining as a learning strategy and its potential impact on learning domain-specific concepts, known as the self-explaining effect (Chi et al., 1989). We restrict the discussion of self-explaining in this paper to the construction of knowledge and understanding from the generation of explanations to oneself (Fonseca & Chi, 2010). Others have contrasted explanations to oneself with forms of interactive explanation. For instance, Ploetzner and collaborators (1999) described five different levels of interactivity (Table 1) and compared empirical research along these interactivity levels to establish the differences between explaining to oneself and explaining to others and their potential benefits.

Table 1. Levels of interactivity in the generation of explanations (Ploetzner et al., 1999).

| Level | Setting description |
|---|---|
| Explaining to oneself | During the attempt to understand something (e.g., instructional material), an individual might try to explain it to him or herself. |
| Explaining to a passive and anonymous listener | An individual might explain to somebody he/she does not know and who just listens. |
| Explaining to a passive listener | An individual might explain to somebody he/she knows and who just listens. |
| Explaining to somebody who responds in a constrained way | An individual might explain to somebody who responds to his/her explanations in a constrained way. For instance, the individual who receives the explanations might only indicate his/her understanding or non-understanding. |
| Mutual explanation | Two individuals might mutually explain to each other without any imposed constraints. In this case, explanation is no longer something that is exclusively directed from one individual to a second, but rather corresponds to a process in which two individuals attempt to negotiate and, at least partially, share their understanding of the domain under consideration. |

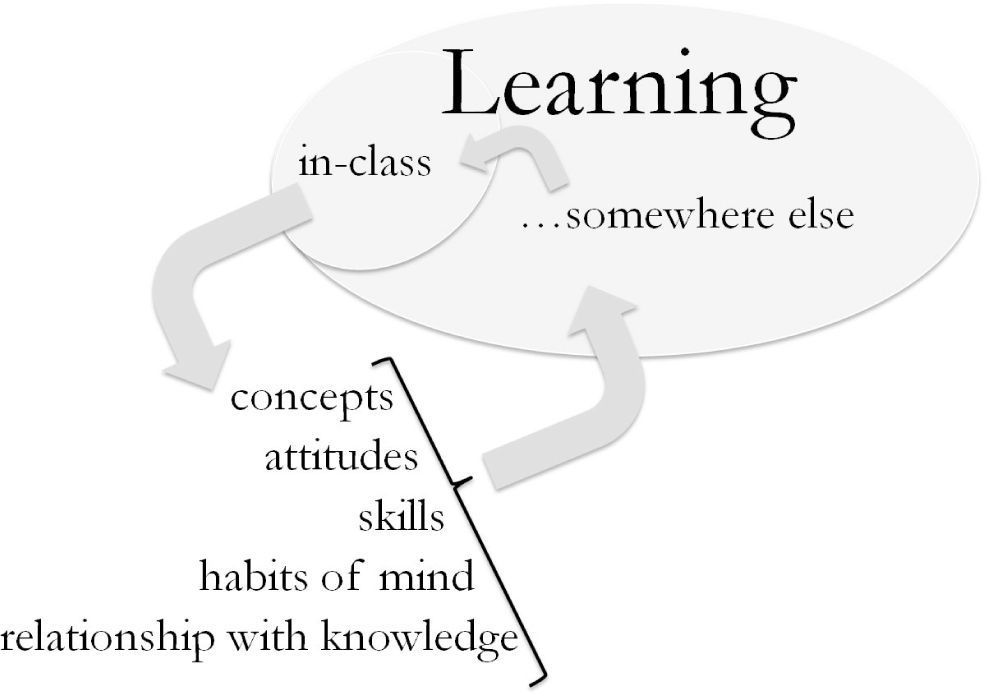

We support collaborative learning in its many expressions. However, we also acknowledge that our students do most of their learning outside the formal environments of our classrooms and laboratories, somewhere else and away from our direct influence (Figure 3). Preliminary results on study habits at our institution showed that 87% of students enrolled in General Chemistry 1 in the Fall of 2012 did most of their unsupervised learning individually. In reporting the percentage of time that they studied with others outside the classroom, 48% reported “no considerable amount of time,” 21% “up to one quarter of the time,” and 18% “up to one half of the time.” Although the National Survey of Student Engagement (NSSE) does not directly address study habits outside the classroom, its 2012 Report shows that more than half of the Physical Sciences majors who responded to the survey never or only sometimes “worked with classmates outside of class to prepare class assignments” (National Survey of Student Engagement, 2013).

In the past decades, some fruitful efforts have resulted in the implementation of diverse pedagogical approaches and strategies in the chemistry classroom and laboratory, often based on collaborative, small-group settings (Towns & Kraft, 2011; Padilla Martínez, 2012). As depicted in Figure 3, habits of mind and learning strategies practiced during supervised collaborative learning activities may transfer to students’ independent and individual learning. By the same token, promoting learning strategies at the individual level that students can then take with them somewhere else is of utmost relevance in the classroom setting. This reality, that most learning occurs away from instructor supervision when the learners are unaccompanied, led us to focus on self-explaining experiences as a means to develop transferable learning skills.

Okita, Bailenson and Schwartz (2007) have noted that the “mere belief of social interaction improves learning” and that these beliefs can be induced through prompting. Therefore, although unaccompanied experiences exclude direct social interaction, prompts may be designed to modify the perception of the learner so that the experience gains an indirect social nature. As suggested by Ploetzner et al. (1999), “we may adapt our explanations even when the listener is imagined.” Research findings indicate that self-explaining learning strategies can be learned and developed (Fonseca & Chi, 2010). We maintain that they may become habitual, with students incorporating them as part of their personal relationship with knowledge and learning. Moreover, Chi and collaborators have found that the frequency of self-explaining is a predictor of the amount of learning (Chi et al., 1994), thereby underscoring the relevance of promoting independent use of the strategy.

We are interested in investigating the extent to which self-explaining, as a learning strategy, can be manipulated within the conceptual domain of chemistry and its potential to impact chemistry learning. We strongly believe that this work will inform instructors’ views and decisions in relation to the development and implementation of self-explaining in college chemistry courses.

Self-explaining research in STEM tertiary education

Research reports on self-explaining date back to the early 1980s, span a variety of knowledge domains such as biology and history (e.g., McNamara & Kintsch, 1996; Roscoe & Chi, 2008), and have included participants from all educational levels (kindergarten to college graduates). In this section, we intend to provide an overview of the work directly related to college science in general and chemistry specifically. We have identified 31 reviews on self-explaining: seven on studies done with children, 23 on findings with mixed pre-college and college participants from diverse majors, and one that exclusively addresses college mathematics (Durkin, 2011). Two of the mixed pre-college and college reviews include STEM majors (Graesser, McNamara & Kurt, 2005; Schraw, Crippen & Hartley, 2006). We also found 11 published proposals for the development of learning tools and curriculum design based on self-explaining. Only one of them exclusively addresses college-level education, and it focuses on procedural understanding of mathematics (Broers, 2008).

We did not identify any review specific to research done in college-level science education. Therefore, we embarked on a comprehensive and systematic literature search to gather studies related to self-explaining in science education, which yielded 57 journal articles. This search utilized an inclusion/exclusion process in the Educational Full Text and ERIC databases and was completed in September 2012. In the first analysis stage, we focused on the study design and context of the studies to extract and condense information about the methodological approaches and populations of interest. Below, we present descriptive information that sheds light on the current state of self-explaining research in tertiary STEM education.

Publications through the years

Judging by the steady increase in the number of articles, interest in the field has grown. Papers published in the 10-year period between 2002 and 2012 quadrupled the number of publications in the previous 20 years (1978–2001). Moreover, a third of the total number of articles in the resulting database (19 out of 57) appeared in the last three years of our review timeframe (2010–2012). In our view, this surge is indicative of a revitalized interest in researching STEM self-explaining at the college level. On the other hand, the absolute number of papers may indicate that this is still an under-researched topic with much work yet to be done.

Journals of choice

The 57 articles included in our review were published in 25 journals. Only 13 journals (52%) have published more than one article in this field, and only seven journals (28%) have published more than two. Inspection of the journals suggests authors’ preferences for journals in educational psychology, education, and instruction. Nonetheless, in the period from 2008 to 2012, the increase in the number of articles was also reflected in the participation of more journals, with 15 publications contributing. In addition, eight of the 12 journals with a single publication made their debut contribution in the last five years.

One may propose that a more diverse choice of journals will carry a broader and more diverse readership. Furthermore, the diversity and uniqueness that each editorial board brings may reduce possible biases towards innovative or divergent ideas or research directions. However, the absence of discipline-based education research (DBER) journals in the resulting database is disconcerting, since college science instructors do not typically access specialized educational journals outside their discipline.

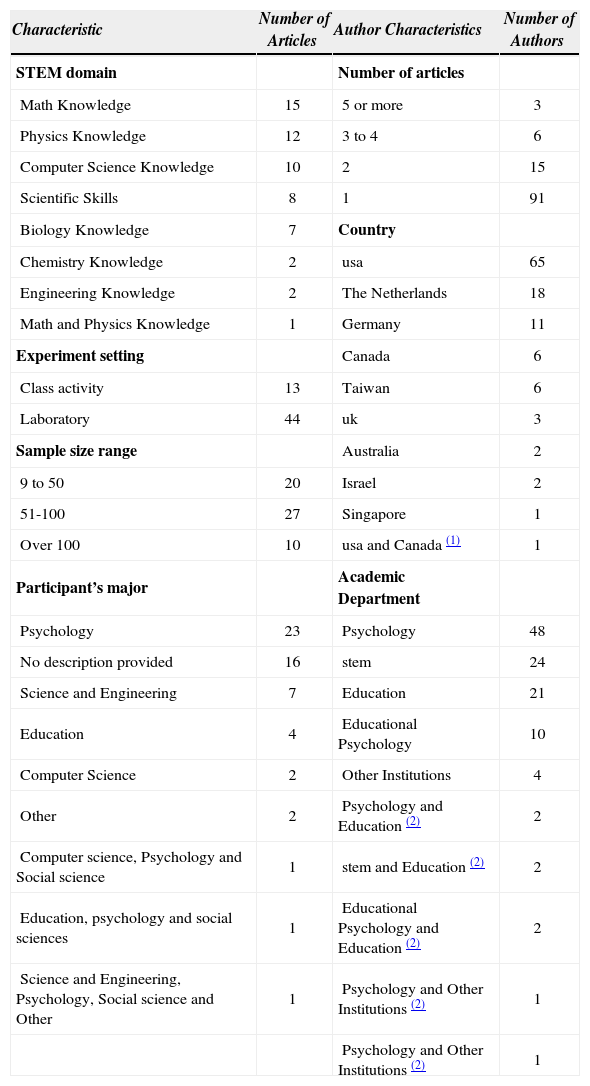

Authors and affiliations

There are 115 contributing authors in the 57 journal articles that compose the database (Table 2). However, for the vast majority this was the only contribution: only twenty-four (21%) authored more than one article and only nine (8%) authored three or more. As in any other emerging research field, a shortage of trained researchers with specific expertise leads to a small expert community and factors into the rate of publication. Nonetheless, the current surge of interest may cause a change in this trend in the future.
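The author percentages quoted above can be re-derived from the author counts reported in Table 2. The following Python snippet is a minimal sketch added for illustration, not part of the original analysis; the names `authors_by_article_count` and `percent` are hypothetical, and the nine most prolific authors are taken to be the two top bins of the table (3 to 4 articles, and 5 or more).

```python
# Number of authors grouped by how many of the 57 articles they authored,
# transcribed from Table 2 (variable names are illustrative assumptions).
authors_by_article_count = {"1": 91, "2": 15, "3 to 4": 6, "5 or more": 3}

total_authors = sum(authors_by_article_count.values())
more_than_one = total_authors - authors_by_article_count["1"]
top_two_bins = (
    authors_by_article_count["3 to 4"] + authors_by_article_count["5 or more"]
)


def percent(part, whole):
    """Share of `whole` represented by `part`, rounded to the nearest percent."""
    return round(100 * part / whole)


print(f"Total authors: {total_authors}")
print(f"More than one article: {more_than_one} ({percent(more_than_one, total_authors)}%)")
print(f"Top two bins: {top_two_bins} ({percent(top_two_bins, total_authors)}%)")
```

Keeping the raw counts in a single dictionary makes it easy to rerun the check if the database is extended beyond the 2012 cut-off.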

Table 2. Characteristics of article database.

| Characteristic | Number of articles | Author characteristics | Number of authors |
|---|---|---|---|
| STEM domain | | Number of articles | |
| Math Knowledge | 15 | 5 or more | 3 |
| Physics Knowledge | 12 | 3 to 4 | 6 |
| Computer Science Knowledge | 10 | 2 | 15 |
| Scientific Skills | 8 | 1 | 91 |
| Biology Knowledge | 7 | Country | |
| Chemistry Knowledge | 2 | USA | 65 |
| Engineering Knowledge | 2 | The Netherlands | 18 |
| Math and Physics Knowledge | 1 | Germany | 11 |
| Experiment setting | | Canada | 6 |
| Class activity | 13 | Taiwan | 6 |
| Laboratory | 44 | UK | 3 |
| Sample size range | | Australia | 2 |
| 9 to 50 | 20 | Israel | 2 |
| 51-100 | 27 | Singapore | 1 |
| Over 100 | 10 | USA and Canada (1) | 1 |
| Participant’s major | | Academic department | |
| Psychology | 23 | Psychology | 48 |
| No description provided | 16 | STEM | 24 |
| Science and Engineering | 7 | Education | 21 |
| Education | 4 | Educational Psychology | 10 |
| Computer Science | 2 | Other Institutions | 4 |
| Other | 2 | Psychology and Education (2) | 2 |
| Computer science, Psychology and Social science | 1 | STEM and Education (2) | 2 |
| Education, psychology and social sciences | 1 | Educational Psychology and Education (2) | 2 |
| Science and Engineering, Psychology, Social science and Other | 1 | Psychology and Other Institutions (2) | 1 |
| | | Psychology and Other Institutions (2) | 1 |

The research stems mostly from institutions in the US, The Netherlands and Germany, which represent 82% of the authors (Table 2). Although smaller in number, work originating in Canada and the UK is well disseminated and, based on the citation rates, has influenced work by others. Four of the twenty-two articles published since 2009 came out of Taiwan, Singapore and Australia, thus suggesting this research is making forays into other regions.
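As a quick check, the 82% share can be recomputed from the country counts in Table 2. The Python sketch below is ours and only illustrative; the dictionary name `authors_by_country` is hypothetical, and we assume that the single author with a joint USA and Canada affiliation is left out of the three-country sum.

```python
# Author counts by country of affiliation, transcribed from Table 2.
# Assumption: the joint "USA and Canada" author is not counted in the top three.
authors_by_country = {
    "USA": 65,
    "The Netherlands": 18,
    "Germany": 11,
    "Canada": 6,
    "Taiwan": 6,
    "UK": 3,
    "Australia": 2,
    "Israel": 2,
    "Singapore": 1,
    "USA and Canada": 1,
}

total_authors = sum(authors_by_country.values())
top_three = sum(
    authors_by_country[country]
    for country in ("USA", "The Netherlands", "Germany")
)
share = round(100 * top_three / total_authors)

print(f"{top_three} of {total_authors} authors ({share}%)")
```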

Affiliations to departments of psychology, education and educational psychology are predominant in this field (Table 2). Twenty-one of the total 57 articles exclusively listed authors with affiliation to departments of psychology and 12 more listed collaborations between departments of psychology and other departments. This frequency suggests that the departments of psychology bear a considerable weight in the field. Fifteen articles involved 27 authors affiliated with STEM departments: chemistry, physics, computer science, engineering, and math. Eleven of these articles involved interdepartmental collaborations where STEM authors partnered with researchers from departments such as psychology, education, and educational psychology. In our view, this marginal participation of discipline-based researchers, coupled with the lack of papers published in DBER journals, undermines the potential of implementing self-explaining in college science education. Furthermore, the lens of science educators and DBER experts could add novel perspectives to the field.

Domain knowledge

Most studies focus on a small subset of knowledge domains: math, computer science and physics. This subset accounts for 38 of the 57 studies (Table 2). Also, the citation rates suggest that biology and computer science studies influence research more strongly than the remaining five domains. In contrast, engineering and chemistry had the lowest count with two articles each. In the case of chemistry, the articles appeared in 2004 and 2007, and both were written by the same pair of authors: one educational researcher and one chemistry professor. This finding underscores the significance of promoting such work in chemistry education and its potential impact. Eight of the 57 articles addressed the effect of self-explaining on scientific skills (e.g., science text reading, critical thinking skills, argumentation skills) in the context of college STEM education. Six of these articles have appeared since 2004, which suggests an emerging interest in this sub-field.

Study setting

We classified the studies as class activity or laboratory based on the setting where they took place (Table 2). In class activities, the data collection was embedded within the classroom setting of a course (e.g., lecture or academic laboratory) and did not disrupt students’ normal activities. In the case of a laboratory setting, the participants engaged in an activity that was not part of an enrolled class. Such cases include participants working on activities that were not related to the course domain; interviews with think-aloud protocols; and studies with volunteers contacted through advertisement. From a student perspective, the class activity setting was a natural class environment, whereas the laboratory setting was a study environment defined and controlled by the researcher.

The use of laboratory setting designs predominates in STEM research on self-explaining at the college level; 44 of the 57 studies used this setting. There was little focus on research in naturalistic class settings. Interestingly, eight of the 13 articles that used class activity settings have appeared since 2010, and they represent 42% (n=19) of the total number of articles published since that year. This shift in focus points to the value of investigating self-explaining in settings that better resemble students’ actual learning environments. Likewise, the shift stresses the importance of engaging science educators in this kind of research. The study of more naturalistic settings and the participation of instructors and DBER experts may contribute new perspectives that would inform research and enhance the applicability of self-explaining as a learning strategy in college.

Sample size

Twenty-seven of the studies (47%) used sample sizes between 51 and 100 participants, with only ten studies (18%) having samples larger than 100 participants (Table 2). It may be easier to accommodate a larger number of participants in class activity settings as compared to laboratory settings. This may explain why six of the ten studies with a sample greater than 100 used class activity settings. In the case of laboratory settings, 16 studies used samples between 9 and 50, and 24 used samples between 51 and 100.
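The sample-size figures above are mutually consistent, which can be verified with a few lines of arithmetic. The Python sketch below is an illustrative cross-check rather than part of the original analysis; all variable names are hypothetical, and the counts are taken from Table 2 and from the paragraph above.

```python
# Studies per sample-size band, transcribed from Table 2.
studies_by_sample_size = {"9 to 50": 20, "51 to 100": 27, "over 100": 10}
total_studies = sum(studies_by_sample_size.values())

# Percentage of all studies in each band, rounded to the nearest percent.
shares = {
    band: round(100 * count / total_studies)
    for band, count in studies_by_sample_size.items()
}

# Laboratory-setting counts: 44 laboratory studies overall (Table 2), of which
# 16 and 24 fall in the two smaller bands according to the text.
lab_total, lab_small, lab_medium = 44, 16, 24
lab_over_100 = lab_total - lab_small - lab_medium

# The remaining large-sample studies must have used class activity settings.
class_over_100 = studies_by_sample_size["over 100"] - lab_over_100

print(shares)
print(f"Large-sample studies: {lab_over_100} laboratory, {class_over_100} class activity")
```

The derived count of large-sample class activity studies agrees with the six reported in the text.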

Participants’ major

Sixteen of the 57 articles did not provide the participants’ majors (Table 2). This unreported group represents 15% of the combined total of 4517 participants in the 57 studies. The classification “Other” includes majors such as health sciences, medicine, and business. The most frequent among the reported majors, psychology and psychology-related, accounted for 26 of the 41 articles. In fact, 48% of the total 4517 participants in all studies were psychology students. Furthermore, in only eight of the 41 articles were the participants from science and engineering majors (e.g., computer science, engineering, science majors), meaning 23% of the 4517 participants in all studies were from STEM disciplines. It is worth emphasizing here that despite focusing on self-explaining in STEM education, the majority of participants recruited for these studies came from non-STEM majors. This finding draws an interesting picture of the current state of this field. Either there is a low availability of STEM majors to participate in these research studies, or there is a study design preference by researchers to include students from non-STEM majors.

Conclusion and practical implications

Policy (Rising Above the Gathering Storm Committee, 2010) and research reports (Ruiz-Primo et al., 2011) support and encourage the reform of science instruction and the implementation of evidence-based approaches to improve science education. It is intriguing that in spite of this insistence from policy makers and educational researchers, the penetration of educational reform in chemistry departments continues to be limited, to say the least. Even at institutions that house chemical education research divisions, one wonders how much of this consensus permeates into practice.

One may think of the case of self-explaining as an exemplar of this disconnect between what educational researchers have figured out and the practice of chemistry instruction. The first part of this paper illustrates that research across domains consistently supports the benefits of the self-explaining effect on learning and problem solving. Moreover, self-explaining is a learnable strategy. So the question emerges: what are the practical obstacles keeping educators from implementing modifications to their daily instruction and gradually moving away from playing the learning game?

In our view, self-explaining, like other constructive instructional strategies, has failed to gain recognition within mainstream chemistry education due to the lack of awareness of its potential to promote learning. As suggested above, too often the concept of self-explaining is mistakenly equated with the production of well-rehearsed explanations provided by others. Access to clear and pertinent research information may help close this gap in understanding. However, as the second part of this paper demonstrated, for all practical purposes, there is no research on the self-explaining effect in chemical education.

To date, participation of chemical education researchers is dismal, and participation of students in naturalistic chemistry learning environments is lacking. It is not surprising then that the published research appears in specialized journals that fall far from the sphere of expertise and interest of most chemistry educators. This is consistent with a recent review analysis by Henderson, Beach and Finkelstein (2011) of the scholarship regarding how to promote change in instructional practices used in undergraduate STEM courses. Through their analyses, these researchers sharply point out that “the research communities that study and enact change are largely isolated from one another.”

We believe that this paper will contribute to improving the understanding of self-explaining in chemistry education. By describing the current state of research on self-explaining in tertiary-level science education, this work provides strong support for conducting research in the context of real college science learning environments. In our research group, we have undertaken this challenge and are developing studies that intend to fill that void. As a first approach, we are studying how tasks can be manipulated to modify student engagement in self-explaining in actual large-enrollment general chemistry courses and how this adjustable engagement may impact learning chemistry concepts. We hope this work will contribute to promoting the use of self-explaining as an instructional strategy by chemistry educators.