The impact factor, a measure of the “mean citedness” of articles in a scientific journal, is a tool for comparing journals that was once used by only a few bibliometricians. In recent years, however, the impact factor has become a popular instrument for research evaluation. Bureaucrats in universities and research agencies use it as a surrogate for the actual citation count to measure the scientific production of researchers and research groups.

Using the impact factor to measure scientific production is currently a highly debated matter, even in this journal.1-4 Along with other researchers,1-10 Eugene Garfield,11 the man who came up with the impact factor, considers the procedure to be inadequate. The European Association of Science Editors12 officially recommends that journal impact factors should not “be used for the assessment of single papers, and certainly not for the assessment of researchers or research programs, either directly or as a surrogate”.

Nevertheless, the two major Brazilian research agencies, CAPES and CNPq, are applying the impact factor for the evaluation of postgraduate programs and individual researchers.1-4 The following illustrates the weakness of the impact factor as a measure of science and shows that it actually has a negative impact on science.

A journal's impact factor for a specific year is defined as a quotient. The numerator is the number of times the journal's articles from the two preceding years were cited, during the specific year, in articles from more than 9,000 journals. The denominator counts the “citable” articles published in the journal in the two preceding years. In general, “citable” articles are “original contributions” and “review articles”, but this definition is a main point of debate, which is discussed below.
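The quotient described above can be sketched in a few lines of Python. This is only an illustration of the definition, not the procedure actually used to compile the published figures; the function name `impact_factor` and the example counts are hypothetical.

```python
def impact_factor(citations: int, citable_items: int) -> float:
    """Impact factor of a journal for a given year Y.

    citations:     citations received in year Y by the journal's items
                   from years Y-1 and Y-2 (the numerator)
    citable_items: "citable" items the journal published in Y-1 and Y-2
                   (the denominator)
    """
    if citable_items <= 0:
        raise ValueError("the journal needs at least one citable item")
    return citations / citable_items


# Hypothetical journal: 600 citations in year Y to items from the two
# preceding years, during which 200 citable items were published.
example_if = impact_factor(citations=600, citable_items=200)
print(f"impact factor: {example_if:.3f}")
```

Because the result depends on only two counts, anything that changes either count changes the impact factor, whether or not the quality of the journal has changed.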

Eugene Garfield13 reported that, out of 38 million items cited between 1900 and 2005, only 0.5% were cited more than 200 times, and about half of the items were not cited at all. Citations of articles in any specific journal are very heterogeneous, which produces a highly skewed histogram. Different models have been suggested for this distribution, based on power laws, stretched exponential fitting, and the non-extensive thermostatistical Tsallis formalism.14

The impact factor is an arithmetic mean, which would only make sense for a Gaussian distribution. Thus, it is not an appropriate representation of the “average” number of citations of an article in a journal. When one or several articles receive a high number of citations, the impact factor will rise considerably one year later and remain elevated for one more year. Therefore, the impact factor of some smaller journals that publish only a few articles per year may oscillate by up to 40% between consecutive years. Cellular Oncology is one example of a journal with such marked oscillations in its impact factor.15

Beginning in 2009, all manuscripts accepted by the International Journal of Cardiology must contain a citation to an article on ethical authorship published in the same journal in January 2009.16 The immediacy index (which measures the citations in the same year) of the International Journal of Cardiology rose from 0.413 in 2008 to 2.918 in 2009, and a significant boost in the impact factor will occur in 2010 and 2011. Acta Crystallographica Section A published a very highly cited review article in 2009,17 which caused a 2,330% increase in the impact factor in the following year. These examples illustrate the lack of robustness of the impact factor against single outliers.
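The sensitivity of an arithmetic mean to a single outlier can be shown with a small Python sketch. The citation counts below are invented for illustration and do not describe any of the journals named above; the median is included only as a point of comparison.

```python
from statistics import mean, median

# Hypothetical citation counts for ten articles of a small journal.
typical = [0, 0, 0, 1, 1, 2, 2, 3, 4, 7]

# The same journal after publishing one very highly cited article.
with_outlier = typical + [500]

mean_before, median_before = mean(typical), median(typical)
mean_after, median_after = mean(with_outlier), median(with_outlier)

print(f"mean:   {mean_before:.2f} -> {mean_after:.2f}")
print(f"median: {median_before:.2f} -> {median_after:.2f}")
```

In this invented data set, one article moves the mean by more than an order of magnitude, while the median barely changes.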

The highly skewed distribution of citations within a journal means that we cannot expect the impact factor of a journal to provide an accurate estimate of the number of citations that an individual future article will receive. Figure 1 shows the author's scientific articles (abstracts excluded) published between 1995 and 2006 in journals indexed in Web of Science. In this diagram, there is only a very weak correlation between the journal's impact factor for the publication year and the number of citations in Web of Science in the following four years (Spearman's rank order correlation, r = 0.27; p = 0.048; n = 54). The low Spearman's correlation coefficient shows that the impact factor cannot predict citations to a single article and should not be used as a proxy for citation counts.

Figure 1. There was only a very weak correlation (Spearman's rank order correlation, r = 0.27; p = 0.048) between the number of citations accumulated in four years and the impact factor of the journal. These data are based on 54 consecutive publications (without indexed abstracts) of the author published between 1995 and 2006 and indexed in Web of Science.
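For readers who want to repeat this kind of analysis on their own publication list, the following is a minimal sketch of Spearman's rank order correlation in plain Python. It is not the software used for Figure 1, which the text does not specify; the helper names and the example values are hypothetical, and no p-value is computed.

```python
import math


def average_ranks(values):
    """Rank values from 1..n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman's rank correlation: Pearson correlation of the ranks."""
    if len(x) != len(y):
        raise ValueError("both sequences must have the same length")
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical data: journal impact factor in the publication year and
# citations received by each article in the following four years.
journal_if = [1.2, 3.4, 0.8, 5.1, 2.2, 2.9]
citations_4y = [5, 2, 9, 12, 0, 7]
print(f"Spearman r = {spearman(journal_if, citations_4y):.2f}")
```

A rank-based coefficient is a natural choice here because citation counts are highly skewed, so a few extreme articles would otherwise dominate the result.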

The public discussion of the impact factor and its use for scientific funding has led to some competition between scientific journals. For many editors, it is important to increase the impact factor to climb the ranking scale, attract good manuscripts, and obtain subscriptions from library selection committees.

Impact factors are used as cut-off points for the classification of scientific journals. When governmental institutions, such as CAPES in Brazil, arbitrarily elevate the cut-off levels for funding purposes,1,2 the existence of some smaller national journals may be jeopardized. Because of bureaucratic pressure, authors will only try to publish their manuscripts in journals with the highest impact factors in their research field, rather than looking for the journal with the most suitable audience for their topic.

The impact factor is a quotient, so it can be elevated by increasing the numerator or decreasing the denominator. Because review articles are cited more often than original contributions, many journals have increased the number of reviews. For example, in 1989 the Journal of Clinical Pathology published 230 original articles and 5 reviews. In 2009, however, the journal published 184 original articles and 38 reviews.18

A common practice for boosting the impact factor is to cite articles that the journal published in the preceding two years. Usually this is achieved through contributions published in the same journal (so-called journal “self-cites”), in the form of reviews or editorial comments, or by directly encouraging the authors of original papers to cite recent articles from the journal.

Some journals advertise or publish editorials on their impact factors. Yet, a journal's percentage of self-cites, which contribute to the impact factor, is seldom discussed.19 Recently, Thomson Reuters started to publish impact factors minus journal self-cites. Although some journals have reported increased impact factors, the unbiased impact of these journals (i.e., impact minus self-cites) did not change. For example, neither the Journal of the American Medical Directors Association (impact factor without self-cites in 2008 was 1.544 compared with 1.495 in 2009) nor Oral Oncology (impact factor without self-cites in 2008 was 2.400 compared with 2.377 in 2009) improved their unbiased impact, which was in sharp contrast to their editorial messages claiming an increased impact factor.20,21
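The relation between the impact factor, the impact factor without self-cites, and the percentage of self-cites can be sketched as follows. The function name `self_cite_summary` and the numbers are hypothetical and do not refer to any journal named in this article; the sketch assumes that self-cites are simply subtracted from the numerator.

```python
def self_cite_summary(total_citations, self_citations, citable_items):
    """Return the impact factor with and without journal self-cites.

    total_citations: all citations counted in the numerator
    self_citations:  the part of them coming from the journal itself
    citable_items:   the denominator of the impact factor
    """
    if citable_items <= 0:
        raise ValueError("citable_items must be positive")
    if not 0 <= self_citations <= total_citations:
        raise ValueError("self_citations must lie between 0 and total_citations")
    share = self_citations / total_citations if total_citations else 0.0
    return {
        "impact_factor": total_citations / citable_items,
        "impact_factor_without_self_cites":
            (total_citations - self_citations) / citable_items,
        "self_cite_share": share,
    }


# Hypothetical journal: 300 counted citations, 129 of them self-cites,
# and 100 citable items in the two preceding years.
summary = self_cite_summary(300, 129, 100)
for name, value in summary.items():
    print(f"{name}: {value:.3f}")
```

Reporting both values side by side makes it visible when a rise in the impact factor comes mainly from the journal citing itself.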

A more refined impact factor manipulation procedure was observed in the International Journal of Neural Systems.15,18 Its impact factor rose from 0.901 in 2008 to 2.988 in 2009. This was due to both an impressive increase in self-cites, which are used for impact factor calculation (2008: 1%; 2009: 43%), and the editor quoting 92 publications (all only from 2007 and 2008!) from his journal in manuscripts that he authored or co-authored in other journals. For example, in a single article, 48 publications from the International Journal of Neural Systems appeared in the reference list.22 When these citations and the journal self-cites are disregarded, the International Journal of Neural Systems no longer shows an increased impact factor.

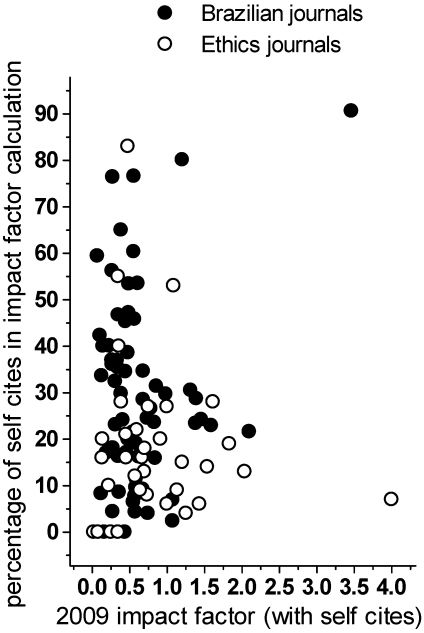

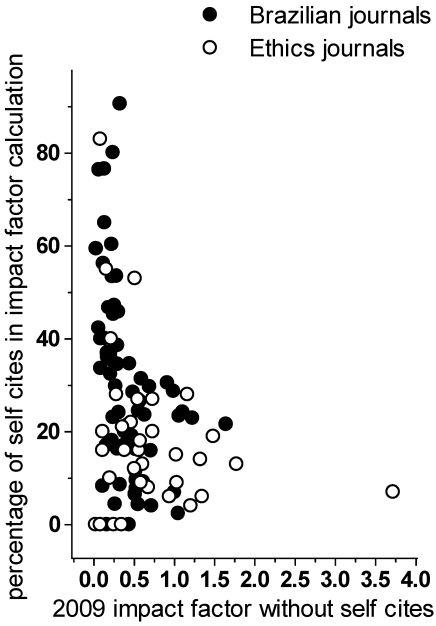

The intensity of self-cites varies widely among journals. As an illustration, we show the contribution of self-cites to the calculation of the impact factor of all journals edited in Brazil compared with all journals from the category “ethics” in the Thomson Reuters Journal Citation Reports15 (Figures 2 and 3). Brazilian journals showed significantly (p = 0.0015; Mann-Whitney U test) more self-cites (n = 64; median 27.5%) than journals in the “ethics” category (n = 34; median 15.5%); however, the impact factors were not significantly different (p > 0.1). When comparing the impact factors without self-cites and the percentages of self-cites, there was a significant negative correlation among the Brazilian journals (Figure 3). Obviously, the pressure or temptation to manipulate the impact factor through self-citations is more pronounced in low-impact-factor journals.

Journals with a considerable percentage of self-cites make comparisons based on the impact factor senseless. In 2008, Thomson Reuters began to eliminate journals from the impact factor list (so-called “title suppression”) if they “were found to have an exceptionally high self-citation rate”.23

Because the exact rules for this sanction were not provided, we investigated the citation history of the journals that were eliminated,15 and we found that all of these journals showed self-citation rates of at least 70%. If Thomson Reuters applies the same rules in 2011, at least four Brazilian journals and one ethics journal will be “suppressed”.

A popular impact factor manipulation method is to reduce the denominator (the “citable” publications from the last two years). One simple way is to diminish the number of “original papers” and accept some of them as “letters”. Another way is to increase the number of editorials, because “letters” and “editorials” do not generally count as “citable” articles; however, the citations in these articles still count towards the numerator of the impact factor.
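The effect of shrinking the denominator can be illustrated with a short Python sketch. All numbers are invented, the function name is hypothetical, and the sketch assumes that relabelling papers as “letters” leaves the citations counted in the numerator unchanged.

```python
def impact_factor_after_reclassification(citations, citable_items,
                                         reclassified_as_letters):
    """Impact factor before and after moving items out of the denominator.

    citations:               citations counted in the numerator (unchanged)
    citable_items:           original number of "citable" items
    reclassified_as_letters: items relabelled so they no longer count
    """
    if not 0 <= reclassified_as_letters < citable_items:
        raise ValueError("reclassified items must be fewer than citable items")
    before = citations / citable_items
    after = citations / (citable_items - reclassified_as_letters)
    return before, after


# Hypothetical journal: 400 counted citations and 200 citable items,
# of which 40 original papers are relabelled as letters.
before, after = impact_factor_after_reclassification(400, 200, 40)
print(f"impact factor: {before:.2f} -> {after:.2f}")
```

In this invented case, the impact factor rises by a quarter although not a single additional citation was received.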

The main problem, however, is that Thomson Reuters does not provide a clear-cut definition of a “citable” item, which has provoked a lot of discussion. Surprisingly, the composition of the denominator can be negotiated. David Tempest, deputy director at Elsevier, said “I would certainly say to publishers that if they have any doubts, work with Thomson to get some agreement”.24

The editors of the Public Library of Science (PLoS) journal PLoS Medicine discussed the impact factor with Thomson Reuters and reported that the impact factor of PLoS Medicine could have varied between less than 3 and 11 depending on the definition of “citable item”. The editors said,25 “We came to realize that Thomson Scientific has no explicit process for deciding which articles other than original research articles it deems as citable. We conclude that science is currently rated by a process that is itself unscientific, subjective, and secretive.”

Thus, we can see that the popularization of the impact factor as a rapid and cheap, although clearly unscientific, method for the evaluation of researchers or research groups has stimulated a dynamic interaction between bureaucrats (looking for a quick, mathematically simple method of science evaluation), researchers (forced to publish in journals with the highest possible impact factor), editors (constantly trying to increase their journal's impact factor), and Thomson Reuters (indexing and de-indexing journals, negotiating the impact factor calculations, and operating through rules that are not always transparent).

This is a vicious circle in which the measurement process strongly influences the measured variable through a feedback loop, which finally leads to system instability. Our examples demonstrate that there is increasing pressure to manipulate the impact factors. System instabilities, such as excessive self-cites and “title suppressions”, are currently evident and will probably increase in the future.

What are the consequences for the participants of the vicious circle?

In the past, unmanipulated impact factors could be regarded as an estimate of the “prestige” of a journal inside a certain scientific field and the journal's ability to connect researchers and research groups.5 With increasing manipulation, however, the impact factors reflect the engagement and cleverness of editorial boards; thus, they lose their scientific value.

Editors will be tempted to estimate the citation potential of manuscripts, which will cause them to prefer trendy mainstream science. In addition, critical and highly innovative contributions may no longer be published if they are only interesting to a small scientific group. Impact factor calculations will also become more relevant in determining the splitting or merging of journals.

Although some journals may show impressive impact factor jumps due to intensive manipulations, others will disappear. In the worst-case scenario, journals could seek to maximize their manipulation of the impact factor without getting “suppressed” from the impact factor list.

Researchers will look for trendy fields in science and choose journals with the highest possible impact factors instead of journals with the best audience for their research. Research fields with lower impact factors will get less funding and fewer students, and these fields will continuously lose importance in the global scientific context, which will cause them to search for ways to boost their impact factor and start the cycle all over again.

On the bright side, research funding agencies are becoming aware of the negative effects of impact factors. In 2010, the German Foundation for Science (Deutsche Forschungsgemeinschaft) published new guidelines for funding applications, which clearly discouraged the use of quantitative factors (e.g., the impact factor or number of publications) in research evaluation.26

We hope that CAPES and CNPq will also recognize the detrimental effect of the impact factor as an instrument for research evaluation.