In this paper, we introduce a novel image reconstruction algorithm with Least Squares Support Vector Machines (LS-SVM) and Simulated Annealing Particle Swarm Optimization (APSO), named SAP. This algorithm introduces simulated annealing ideas into Particle Swarm Optimization (PSO), which adopts cooling process functions to replace the inertia weight function and constructs the time variant inertia weight function featured in annealing mechanism. Meanwhile, it employs the APSO procedure to search for the optimized resolution of Electrical Capacitance Tomography (ECT) for image reconstruction. In order to overcome the soft field characteristics of ECT sensitivity field, some image samples with typical flow patterns are chosen for training with LS-SVM. Under the training procedure, the capacitance error caused by the soft field characteristics is predicted, and then is used to construct the fitness function of the particle swarm optimization on basis of the capacitance error. Experimental results demonstrated that the proposed SAP algorithm has a quick convergence rate. Moreover, the proposed SAP outperforms the classic Landweber algorithm and Newton-Raphson algorithm on image reconstruction.

As one of the electrical process tomography imaging technologies, Electrical Capacitance Tomography (ECT) is featured in lower costs, no-irradiative and non-invasive methods, etc., and applicable to the visible measurement of two-phase and multiple-phase flows (York, 2001; Griffiths, 1988). The principle of ECT can be described as: different objects have different permittivities. If the concentration and the composition of the component phase are changed, the permittivity will change to fit the mixture. Variation in permittivity will cause a change of the capacitance measurements and the capacitance measurements reflect the size and distribution of the medium phase concentration of the mixture. On this basis, using a corresponding image reconstruction algorithm can reconstruct the distribution of the test area of the pipeline. Because ECT is non-linearity and the number of capacitances independently measured are much less than the number of pixels for image reconstruction, there is no resolution for the reverse problem. Furthermore, the sensitivity field of ECT is featured in “soft field”, i.e. sensitivity is not evenly distributed, the reverse problem equation is in a seriously abnormal state (Yang, 1997). Therefore, image reconstruction algorithm has been the bottleneck for the further development of ECT, and a high precise image reconstruction algorithm is required.

The existing ECT image reconstruction algorithms can be divided into two mainly types: non-iterative algorithm and iterative algorithm. As one of the typical non-iterative algorithms, Linear Back Projection (LBP) is simple and quick, but unsatisfying in imaging precision. So LBP is only used as a qualification method (Peng, Lu, & Yang, 2004). Iterative methods include: Tikhonov regularization method (Peng, Jiang, Lu, & Xiao, 2007), Landweber algorithm (Yang, Spink, York, & McCann, 1999), Newton-Raphson algorithm (Yang & Peng, 2003) and Conjugate Gradient method, etc. (Wang, Zhu, & Zhang, 2005). Tikhonov method may cause detailed distortion of the reconstructed images due to over-smoothness of regularization functions. As a widely used method in recent years, Landweber returns satisfying results only with large number of iterations as to complex flow patterns. Newton-Raphson algorithm is featured in local convergence, but the iterative convergence can’t be guaranteed if the initial value is not selected appropriately. Conjugate gradient method is applicable to positive definite matrix and thus it can’t obtain better effects when it is applied to complex flow patterns.

In this paper, we introduce an image reconstruction algorithm with LS-SVM and APSO, which is named as SAP. The proposed SAP is described as follows: firstly, we construct LS-SVM and excise the error between the capacitances arising from sensitivity matrix and the actual capacitance measurements; then based on the error, we constructed the fitness function and simulated annealing mechanism for particle swarm optimization; finally, we search for the optimum solution for image reconstruction with APSO.

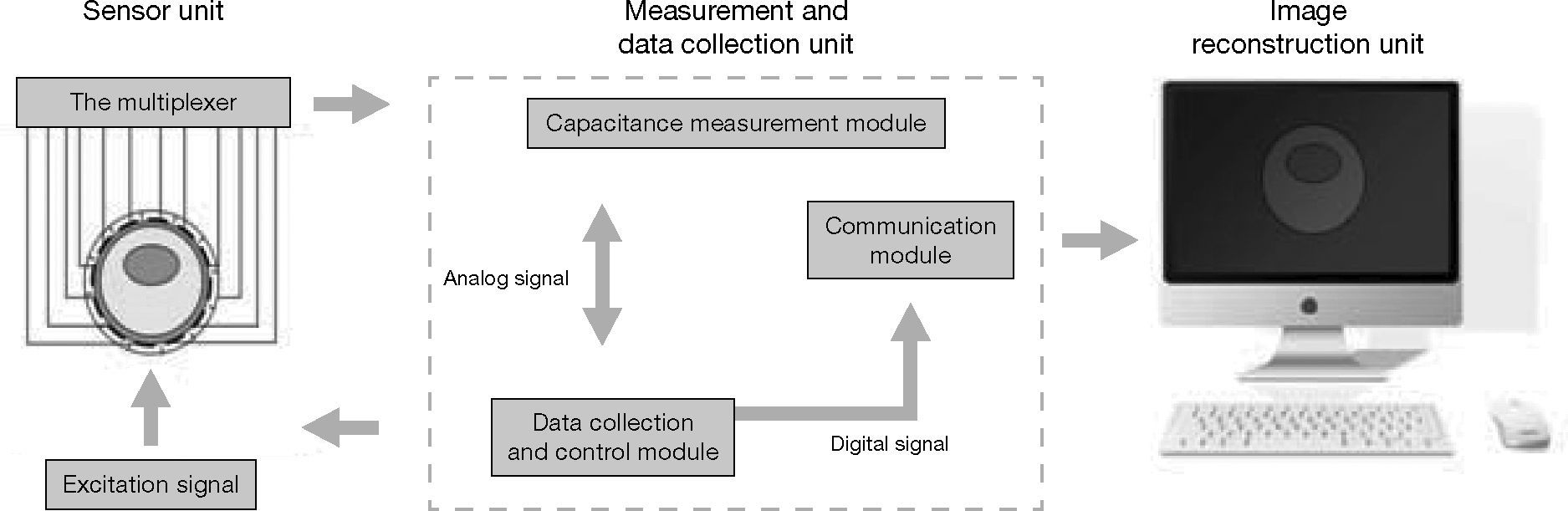

2ECT systemAs shown in Figure 1, ECT System is mainly consisted with three units: a capacitance sensor unit, a measurement and data collection unit, and an image reconstruction unit. By utilizing capacitive fringe effect, the sensor can produce a corresponding capacitance for a medium with certain permittivity. The combination of all sensing electrodes may provide multiple capacitance measurements, which can be taken as the projection data for image reconstruction. The capacitance measurement and data collection unit primarily functions as rapidly, stably and accurately measuring minor capacitance. It changes in various arrays of electrode couples, and transmits the acquired data to a computer. This unit is mainly comprised of three modules: a capacitance measurement module, a data collection control module, and a communication module. The capacitance measurement module is used to realize switching of capacitance to voltage (CV), to measure minor capacitance and effectively inhibit stray capacitance. Currently, two of the most mature methods to measure capacitance are as follows: capacitance charge-discharge method and AC-based CV switching circuit (Yang, 1996; Yang, 2001) The data collection control module generally takes DSP as the control core and takes ADC for data acquisition. Data communication adopts USB2.0 Technology (Yang et al., 2010). As ECT System has more measurement channels, it is difficult for a single DSP to meet real-time requirements. Therefore, CPLD or FPGA is generally adopted to conduct auxiliary control of DSP (Ma, Wang, & Tian, 2006). ECT image reconstruction unit is composed of two parts: hardware and software. Hardware indicates a general-purpose computer, and software indicates image reconstruction algorithm.

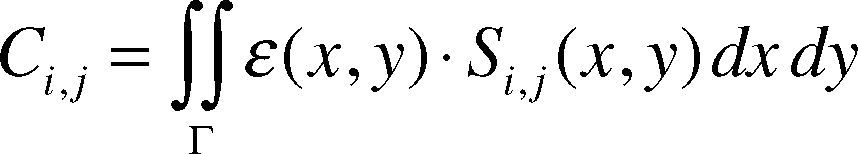

3ECT image reconstructionECT image reconstruction process includes forward and reverse questions to be resolved. As the forward question, capacitance values of all electrode pairs on basis of the permittivity distribution and excitation voltages of the known sensitivity field. The mathematic model of forward question of ECT is expressed as follows (Yang & Peng, 2003):

where Ci,j is the capacitance between the electrode pair of i-j, ¿(x,y) is the permittivity distribution on cross-section of pipes, Si,j(x,y)is the sensitivity functions when the capacitance between electrode pair of i-j is distributed on the cross-section of pipe, and G is the electrode surface. It can be seen that the sensitivity of the electrode in a point is related to its position, namely the sensitivity is not evenly distributed within the sensitivity field, which is the so-called effects of “soft field”.

The capacitance sensor comprising of n electrodes can provide M = n(n – 1)/2 independent capacitances. With M equations similar with equation (1), such equations shall be linearized and discredited to get:

where C is a normalization capacitance vector of M dimension, G is N dimension normalized permittivity distribution vector, i.e. the grey level of pixels for visualization, and S is M×N factor matrix, reflects influence of medium distribution variation on capacitance C, and is called as sensitivity matrix (Tikhonov & Arsenin, 1977).

Where Si,j(ℓ) is the ℓth unit of electrod pair to i-j sensitivity matrix, Ci,jℓ is the measurement capacitance, Ci,jl is the capacitances when the sensor is full of lower permittivity mediums, and Ci,j(h) is the capacitances when the sensor is full of higher permittivity mediums.

The reverse question is to reconstruct permittivity distribution diagram in the sensitivity field with capacitance measurements and sensitivity matrix S representing grey levels of pixels. Currently, most of the image reconstruction algorithms are achieved with the basis of:

There is no annalitic resolution for equation (4). Firstly, permittivity distribution is not linear with the capacitance measurements at boundaries in sensitivity field. Secondly, the data M is independently measurements and far less than the number of pixels N, therefore the solution of equation (4) is not unique. Furthermore, the equation (4) is ill posed, and the resolution is not stable. Minor error of C may have significant influences on G (Yeung & Ibrahim, 2003.). In addition, the matrix S is not truly constant, but varies with the actual permittivity distribution. Therefore, we try to resolve reverse question of ECT with a heuristic algorithm to achieve high-precise imaging in this paper.

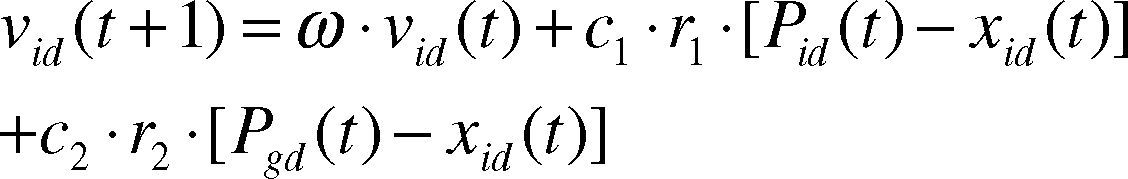

4Image reconstruction algorithm on basis of simulated annealing particle swarm optimization4.1Conventional particle swarm optimization algorithmParticle swarm optimization (PSO) is a well known heuristic algorithm, which is firstly proposed by Kennedy and Eberhart (1995) and is sourced from studies on food-catching of birds. Many kinds of PSO algorithms have been widely studied and have made certain achievements in the last twenty years (Rezazadeh, Ghazanfri, & Sadjadi, 2009; Arce, Covarrubias, Panduro, & Garza, 2012; Gerardo, Mauricio, Nareli, Ricardo, & Jessus, 2009). In PSO system, each alternative resolution is called as a “particle”. Particles are co-existing and shall be optimized. That is because each particle should “fly” towards to a better position in the question space according its own experiences to explore the best resolution. The mathematic expression of PSO is shown as follows (Eberhart & Shi, 2000).

In this paper, we presume the space is D-dimension and the scale of particles are m. The position of the ith particle is Xi = (xi1, xi2,…, xiD). The best position of the ith particle in the “flying” history is Pi= (pi1, pi2,…, piD), and we presume the best value of Pi(i = 1,2,…,m) is located at Pg; the velocity of the ith particle is the vector of Vi= (vi1, vi2,…, viD); the position of ith particle will change according to the following equations:

Where c1 and c2 are positive constants and called as speedup factors; r1 and r2 are two random numbers between [0,1]; w is called as the inertia factor; i is the ith particle 1 ≤ i ≤ m, d is the dth dimension of each particle 1 ≤ d ≤ D. The initial position and speed of particle swarm is generated randomly, and then iterated according to equations (5) and (6). The position variance range is [xd,min, xd,max] and the speed variance range is [vd,min, vd,max]. The boundary value shall be taken if the dimension of xid or vid exceeds the boundary.

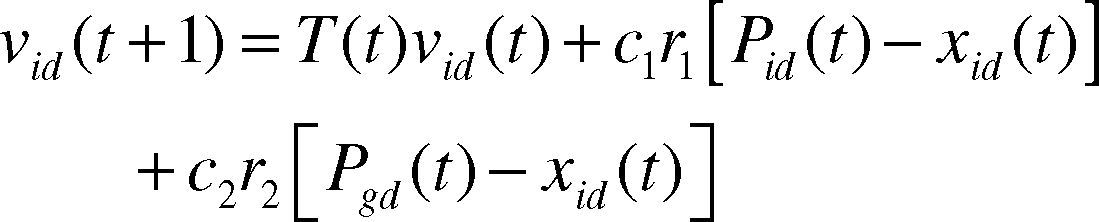

4.2A simulated annealing particle swarm optimization algorithmThe inertia factor v in equation (5) keeps particles with the movement inertia of the last generation, and thus particles intend to extend the search range. When v is larger, the last speed has significant influence and thus has better overall search capabilities; otherwise, the last speed has less influence and thus the local search capability is strong. Local optimization can be skipped by dynamically adjusting v. In their studies, Shi and Ebethartln pointed out that the inertia factors reduce gradually with numbers of iteration and thus questions caused by equivalent factor are improved (Eberhart & Shi, 1998). In other words, if the resolution after movement of particles are inferior to the solution before movement of particles, the movement shall be accepted at a certain possibility, which shall reduce gradually when time passes. However, the study result of Shi and Ebethartln did not definitely give a mathematics definition; they only give a qualitative description with their experiences.

Simulated annealing algorithm is another widely used iterative heuristic algorithm. The powerful feature of its intrinsic hill climbing capability (Kirkpatrick, 1983; Sait, Sheikh, & ElMaleh, 2013). On basis of the ideas of Simulated annealing, we introduced a cooling process function (Lin, 2001) to give a quantitative description on the process that the possibility reduces with the reduction of numbers of iteration.

4.3Selection of fitness functionsFor each iteration of PSO, the fitness function is used to determine whether the position of particle is satisfied or dissatisfied. When PSO is applied to the image reconstruction of ECT, the fitness function is generally taken as follows:

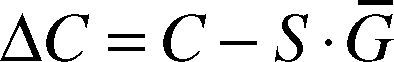

Where C is the normalization measurement capacitance vector, S is a sensitivity matrix and G is position vector of particle (i.e. the required permittivity distribution vector).

Due to the matrix S is not truly constant, but varies with the actual permittivity distribution. PSO taking takes equation (9) as the fitness function, which can achieve an overall optimization of convergence and there may be larger error between the optimization resolution and actual distribution of permittivity. The fitness function in this paper is given as the following:

Where ΔC is the output when LS-SVM takes C as input. The fitness function takes use of the results predicted by LS-SVM so as to eliminate errors arising from that different flow patterns under the same sensitivity matrix S.

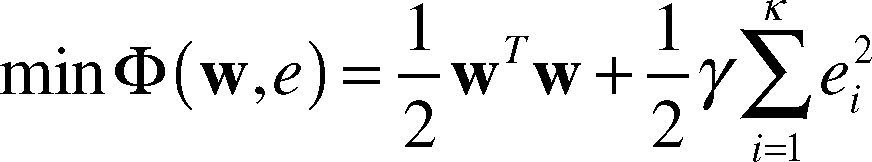

5Least squares support vector machine and its applications in image reconstruction5.1Least squares support vector machineLS-SVM is a kernel function study machine complying with Structural Risk Minimization (SRM) algorithm of the least square algorithm and principle of SRM (Gu, Zhao, & Wu, 2010). The concept is described as follows: specify sample collection {xi,yi}ki=1 map n-dimension input vector Xi and output vector Yi from the original space Rn to the high dimension special space Rn+ through non-linear transformation Φ(x). In this space the optimization linear decision function is given as the following:

Where, W is a hyper plane weight vector, and b is a polarization item. For LS-SVM, the question to be optimized is as follows:

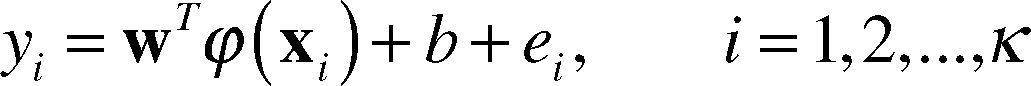

Where, ei is an error variant; ϒ > 0 is a punitive parameter controlling the punishment for samples exceeding errors. LS-SVM converts the inequality constraints into equality constraints (Ott, Grebogi, & Yoke, 1990). For LS-SVM for regression estimate, the constraints are as follows:

According to KKT conditions, the Lagrange factor αi ∈ R (i.e. support vector factor) is introduced to construct Lagrange function.

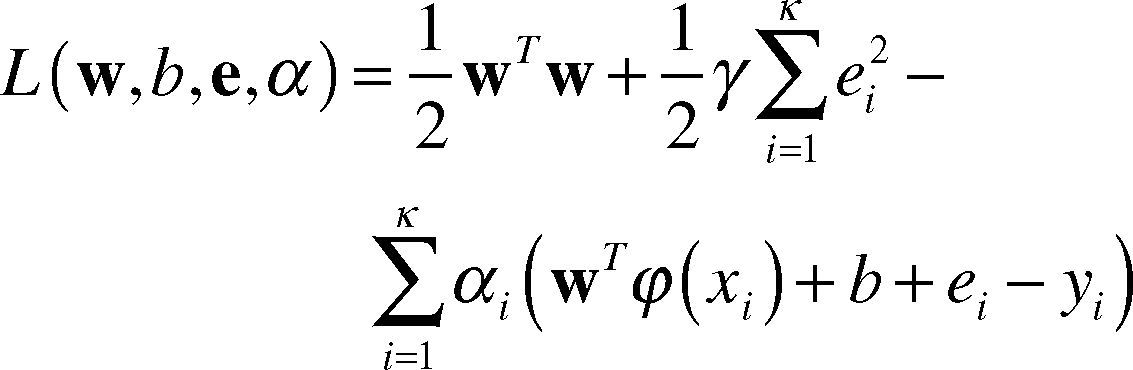

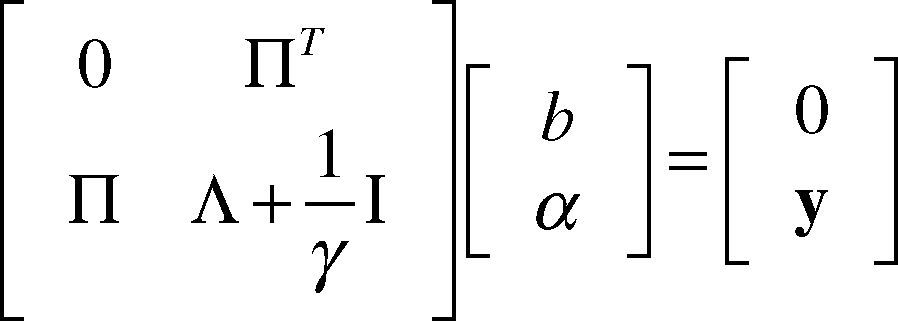

According to the optimization conditions, we make the partial derivative of L against w, b, e and a as zero, and eliminate variant w and e and thus get the following linear equation:

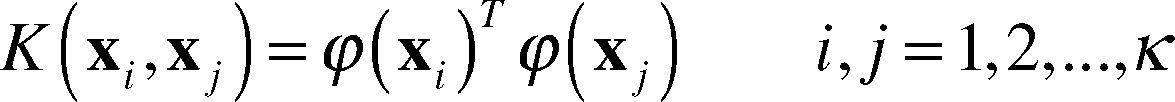

Where, y = [y1, y2,…, yk], a = [a1, a2,…, ak] and P is column vectors with k dimension as 1 and ∧ij=φxiTφxj According to the principle of Mercer, the mapping function Φ() and kernel function Φ() existing and thus:

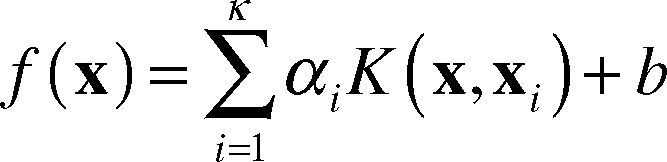

The function estimates expression of the least square algorithm is as follows:Where, ai(i = 1,2,…,k) and b are obtained from equation (15), the specific forms of f(x) is subject to types of function K(x,xi). There are many kinds of kernel functions. In this paper, we take the kernel function of radial basis (i.e. Gaussian) with higher regression capabilities which is defined as follows:

Where, s is Gaussian kernel parameter.

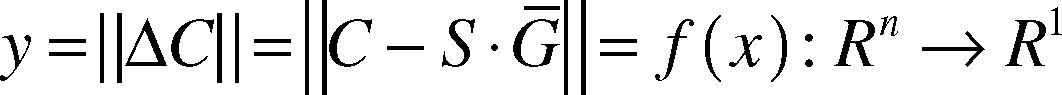

5.2Functions of LS-SVM in ECT image reconstructionAs described above, due to the soft field characteristics of ECT, the capacitance calculated through equation (2) and the actual measurement of capacitance have the following e-rrors:

where, G¯ is actual permittivity distribution vector. In order to get the capacitance error ΔC under any permittivity distribution, we take use of LS-SVM to exercise certain of quantities of samples with LS-SVM. Taking samples of vectors into equation (19) and get:

where the input vector x is normalization capacitance vector, and the output y is the norm of samples of capacitance error. When carrying out exercises with LS-SVM, the input sample collection is actual capacitance vector under all kinds of flow patterns, and output sample collection is the norm of samples of capacitance error under all kinds of flow patterns.

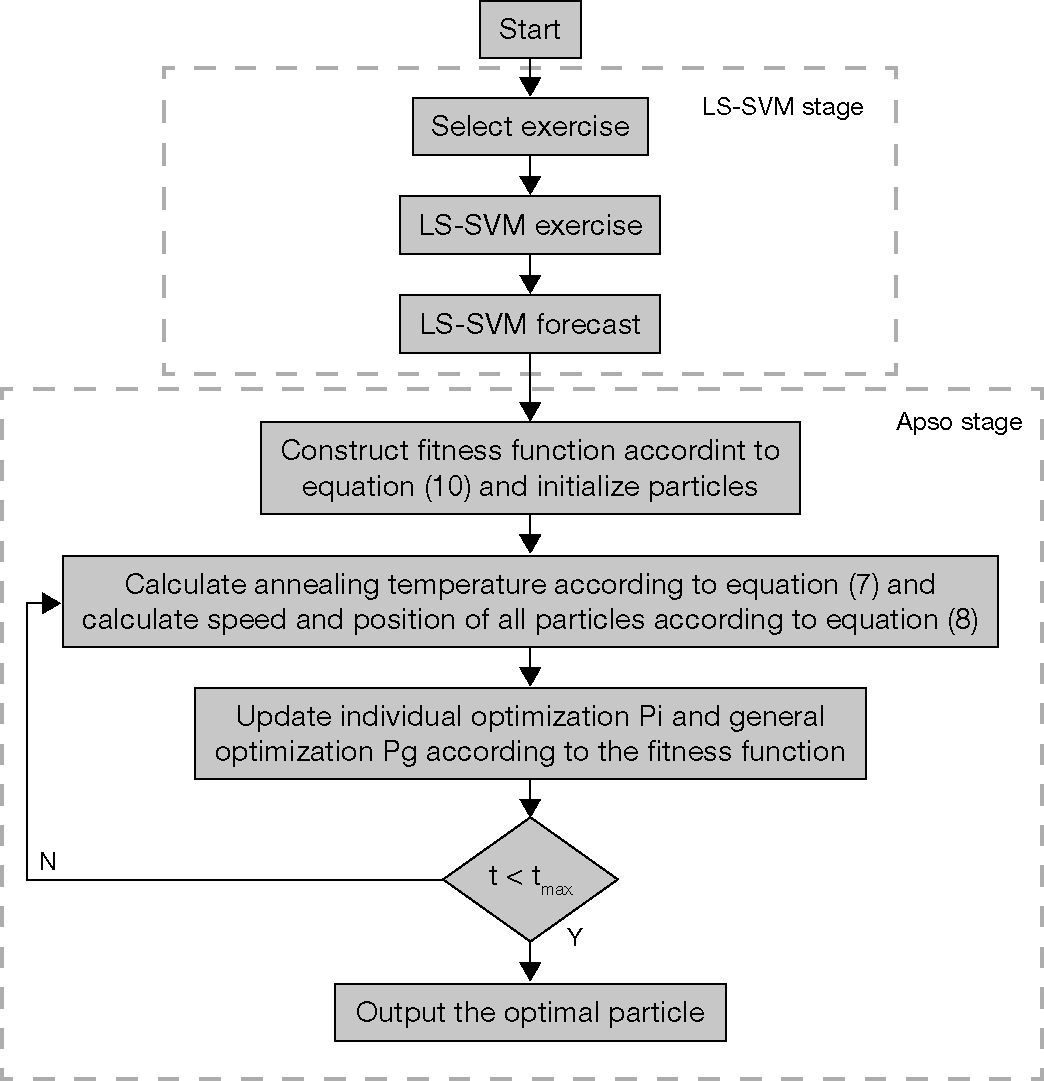

6Simulation results and analysis6.1Algorithm flow processThe SAP algorithm comprises of LS-SVM exercise forecast stage and APSO search stage. The algorithm flow process is shown as Figure 2, in which t is current iteration times of APSO.

In LS-SVM stage, 40 groups of samples including 4 kinds of flow patterns are used as exercise samples, the punitive parameter g and the kernel parameter s shall be selected through experiences. Based on the exercise results of LS-SVM, forecast of the test samples and capacitance error ΔC are achieved. In this paper, we select 8 electrode capacitance sensors to get 28 separated capacitance measurements and thus the input sample data of LS-SVM xi is 28-dimension (i = 1,2,…,40). Capacitance measurements can be obtained with finite element methods. In finite subdivision, we take triangle unit to subdivide the imaging area into 800 units, and we take finite subdivision unit as the pixel unit of images and the permittivity distribution G¯ under all kinds of flow patterns of sample is an 800-dimension vector.

In APSO stage, we firstly construct fitness function according to equation (10), then initialize particle swarm. The swarm scale is set as m=40, the number of dimensions is same as the number of pixels in image domain, i.e. D = 800. The maximum iteration number is tmax. We set up limit position of particles and variance range between limit speed and particle speed. Finally, we search for the optimum solution for image reconstruction with APSO.

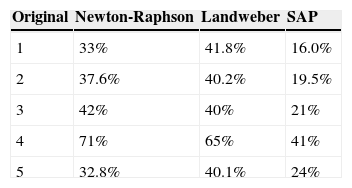

6.2Experimental results and evaluation on algorithmIn order to validate effectiveness of the algorithm, we take SAP algorithm to make image reconstruction for typical flow patterns (i.e. core flow, bubble flow, laminar flow, and circular flow), and then compared them with the imaging results of Newton-Raphson algorithm and Landweber algorithm. The simulation and calculation is carried out with MATIAB 7.10 on Intel Pentium 820 MHz CPU (RAM 512 MB). The experimental results are shown in Table 1. In imaging area, the dark area is even medium of permittivity 40 and the other areas is air (i.e. permittivity 1.0).

As shown in Table 1, we can see that imaging results with Landweber algorithm and Newton-Raphson algorithm are near to the original; however, there are too many false images. Obviously, the quality of images obtained with SAP is much better, for which the resolution of images is much higher and there is nearly no false image.

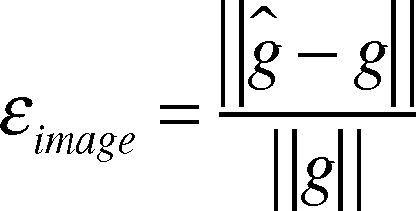

When the quality of image is analyzed, the relative image error shall be used as evaluation index of image quality, which is defined as follows:

where, gˆ is permittivity distribution vector obtained with reconstruction algorithm, and g is permittivity distribution vector in the original. i·i is a vector sample norm, which here is taken as 2.

The experimental results of the relative image error are shown in Table 2. From Table 2 we can see that the quality of reconstruction image with SAP for all above flow types are significantly improved by comparing with Newton-Raphson and Landweber algorithms.

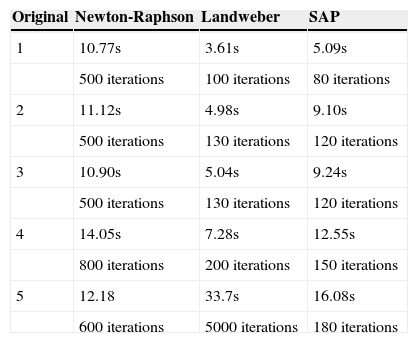

The elapsed time required for reconstruction of the three different algorithms are shown in Table 3. Obviously, the number of iterations for landweber and Newton-Raphson algorithms are greater than that for SAP algorithm. It is interesting to see from Table 3 that, in four cases, the elapsed time for the SAP algorithm is less larger than that for the Landweber algorithm, and is shorter than that for the Newton-Raphson algorithm.

Elapsed time (in seconds).

| Original | Newton-Raphson | Landweber | SAP |

|---|---|---|---|

| 1 | 10.77s | 3.61s | 5.09s |

| 500 iterations | 100 iterations | 80 iterations | |

| 2 | 11.12s | 4.98s | 9.10s |

| 500 iterations | 130 iterations | 120 iterations | |

| 3 | 10.90s | 5.04s | 9.24s |

| 500 iterations | 130 iterations | 120 iterations | |

| 4 | 14.05s | 7.28s | 12.55s |

| 800 iterations | 200 iterations | 150 iterations | |

| 5 | 12.18 | 33.7s | 16.08s |

| 600 iterations | 5000 iterations | 180 iterations |

The chaotic movement is a kind of non-periodic bounded dynamic activity sensitive to initial conditions in definite systems. The chaotic movement is featured in false randomness, ergodicity and sensitivity to boundaries, etc. (Liu, Wang, & Tan, 2006). Chaotic theory has been widely used in particle swarm Optimization algorithm (Alatas, Akin, & Ozer, 2009). When it is determined that PSO algorithm is under premature convergence conditions, the diversity of groups and sustaining searching capabilities of particles can be improved with the chaotic search.

7.1Chaotic annealing particle swarm optimizationMany rules can cause chaos and the representative chaotic model is Logistic equation (Chen & Zhao, 2009).

where u is absorption factor, k is iteration times, and d is dimensions in question. When u = 4,zd0 ∈ (0,1), the equation (22) will be completely under chaos conditions and Zdk=1 shall tour through (0,1) along with the iteration process.

Application of the chaotic search in SAP algorithm is represented in the following two procedures:

- 1.

Initialization of algorithm: generally, the scale of chaotic group mCHOAS is 0.3 times of the original group scale. We need set up the maximum iterations kmax and give initial values to zdk with minor differences separately and then get d chaotic variables zdk with different tracks.

- 2.

Searching with chaotic variables: the chaotic variables are mapped from chaotic space to the solution space for optimization of questions and chaotic search. After each step of iteration, a solution may be created for the solution space.

With kmax iteration, the solution mapped from chaotic variables will tour through the solution space for optimization questions and thus make full optimization.

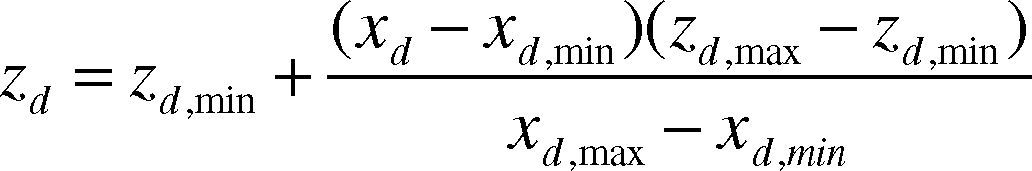

The mapping relationship between the variable xd ∈ [xd,min, xd,max] and chaotic variable zd ∈ [zd,min, zd,max] shall be specified by equation (23) and (24) (Krilc, Vesterstrom, & Riget, 2002).

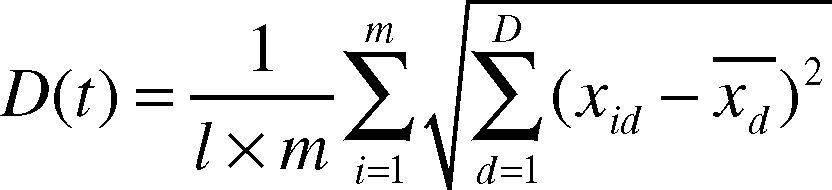

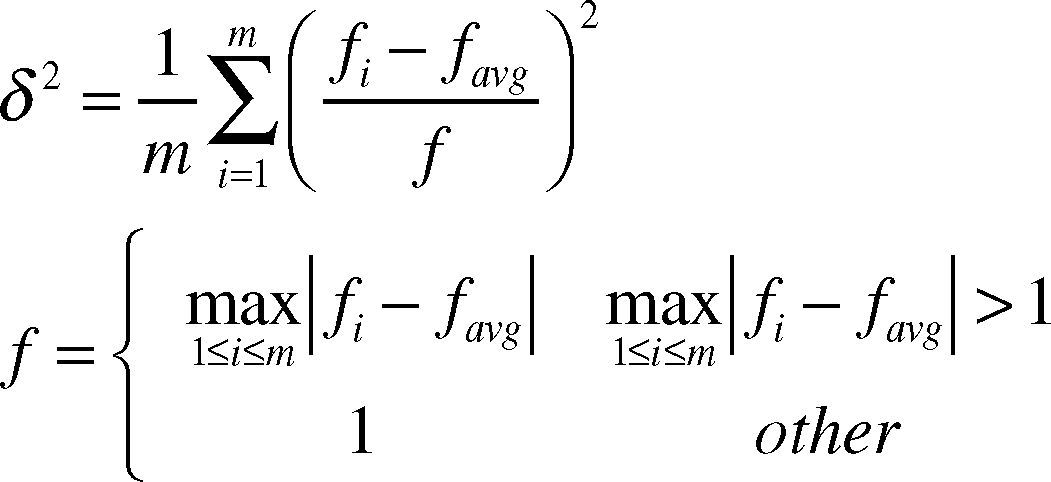

The chaotic search is carried out when the particle swarm algorithm is under premature convergence conditions, i.e. making secondary search in small field neighboring local optimal values so as to get away from local optimal values. Premature convergence is used to search for the overall optimal values with particles. All particles are trending to be collected to the same extreme point, thus the diversity of particles is gradually decreased. If such extreme value is locally optimal one, the algorithm is under premature convergence conditions. In this paper, we take the average particle distance D(t) and the fitness value variance δ2 (Lv & Hou, 2004) as the conditions for determination of premature convergence.

where l is the length of diagonals of the search space, m is group scale, D is the dimension of solution space, xid is dth dimension coordinates of ith particle, and x¯d is the average value of xid.

where fi is the value of fitness function of ith particle, favg is average value of fitness functions of all particles. When δ2 or D(t) is less than the given thresholds, it may be considered that the algorithm has been under premature convergence conditions.7.2Performance analysis of CAPSO algorithmIn order to analyse the performance of chaotic search in SAP algorithm, we take SAP algorithm to make image reconstruction for typical flow patterns (core flow, bubble flow, laminar flow and circular flow), and then compared them with the imaging results of CAPSO algorithm. The imaging results are shown in Table 4. From Table 4, we can see that imaging results of the two kinds of algorithms are almost the same. It indicates that SAP algorithm embedding chaotic search did not significantly improve the imaging precision.

In the worst case, the time complexity of the SAP and CAPSO algorithms are O(tmaxx3xmx2D) and O(tmaxx3xmx2DxmchaosxkmaxxD), where, m is group scale, D is the dimension of particles, tmax is the max iterations, mchaos is the chaotic group scale, and kmax is the maximum chaotic iterations.

Obviously, the time complexity of the CAPSO algorithm is much greater than that of the SAP algorithm.

8ConclusionsIn this paper, we have introduced an ECT image reconstruction algorithm on basis of LS-SVM and APSO, named SAP algorithm. In order to propose this algorithm, we introduced annealing ideas into particle swarm optimization algorithm, taking cooling process function to replace inertia factor and constructing the time variant inertia weight function featured in annealing mechanism. As errors caused by the fixed sensitivity matrix for ECT reverse questions, we took LS-SVM to exercise for the errors and apply exercise results to the improvement of APSO. Then the optimized resolution of reconstructed images was searched. The experimental results demonstrated that using SAP algorithm can get high precise reconstructed images.

Finally, in order to further improved the precision of SAP algorithm, chaotic search process was merged into SAP. Experimental results demonstrated that though SAP algorithm embedding chaotic search did not significantly improve the imaging precision. However the time complexity of the algorithm is greatly improved.

This work is financially supported by Projects 51405381 and 51475013 from the National Natural Science Foundation of China, Project 201314 supported by Engagement Foundation of Xi’an University of Science and Technology, and a Project supported by Scientific Research Foundation for Returned Scholars, Ministry of Education of China 2011508