The digital ecosystem continues to expand around the world and is revolutionising the way markets are researched. Indeed, consumer experiences are advertised and disseminated through so many channels and media that it has become a major challenge for researchers and marketing practitioners to collect, process and generate valuable information to support strategic and operational decisions. In this article, the authors explore how advances in quantum computing, which can be used to process huge amounts of data quickly and accurately, could offer an unprecedented opportunity for researchers to address the challenges of the digital ecosystem. Three studies are presented to define the state of the art and future expectations of quantum computing in market research and business. By means of a bibliometric analysis of 209 publications and a content analysis of the 30 highest-impact articles, we describe the present landscape, and also forecast the future with the help of in-depth interviews with eight experts. The findings reveal that the US and China are at the forefront of scientific development, but the contributions from four other countries (India, the UK, Canada and Spain) are also in double figures. However, graphical analysis identifies four poles of development: the US orbit, which includes Canada and Spain; the Chinese orbit, which includes India; the UK orbit; and the Australian orbit. In terms of expectations, the experts agree on the opportunities offered by quantum computing, but there is less consensus as to how long it will take to develop.

The advent of quantum computing processors is heralding a new era of opportunity for the business world, in which complex problems can be solved with unprecedented speed and efficiency (Bova et al., 2021). Ever since Feynman (1982) highlighted the need for a quantum computer (QC) to solve simulation problems in physics and chemistry, as classical computers were exponentially expensive for such endeavours, work to find a reasonable solution has been ongoing (Preskill, 2018). The compromise solution is called noisy intermediate-scale quantum computing (NISQ). Until recently, this was limited to laboratory studies, but then the first commercial NISQ devices appeared, developed by companies such as IBM, Amazon, Microsoft (Bayerstadler et al., 2021) and D-Wave, with its Leap™ quantum cloud service (D-Wave, 2021), ushering in the era of industrialisation of quantum computing.

Major global powers, such as the United Kingdom, Germany, the United States and China, are conducting research programmes in quantum technologies (Ménard et al., 2020). The main reasons for funding these projects are to ensure digital sovereignty, safeguard national security and sustain industry competitiveness (Bayerstadler et al., 2021). In other words, they view QC as a means to preserve their industrial capacity.

QC has also attracted interest in the field of business management because of the opportunities that it might generate (Bova et al., 2021; Ruane et al., 2022) due to its potential to solve currently intractable optimisation, machine learning and simulation problems in a short time (Kagermann et al., 2020). For example, Grover (1996) showed that the application of the wave algorithms (with wavelet properties) of quantum mechanics, thanks to their ability to perform several operations simultaneously, can provide faster solutions to a wider range of unstructured search problems. Bova et al. (2023) point out that these problems also occur in business applications. For example, Orús et al. (2019) propose the use of quantum optimisation algorithms to set up stock portfolios, find opportunities for currency arbitrage, and perform credit ratings. Bova et al. (2021) divided the possibilities of quantum algorithms into four sectors: cybersecurity systems, materials and pharmaceutical design, banking and finance management, and manufacturing improvement.
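The unstructured search result attributed to Grover (1996) can be illustrated with a small classical simulation. The sketch below is not taken from the cited works: the function name `grover_search`, the list size of eight items and the marked index are hypothetical choices for illustration. It tracks the amplitudes of all items and shows that roughly (π/4)·√N iterations are enough to concentrate almost all the probability on the marked item, whereas a classical search needs on the order of N look-ups.

```python
import math

def grover_search(n_items, marked):
    """Classically simulate Grover's search over n_items amplitudes."""
    # Start in the uniform superposition: every item has the same amplitude.
    amps = [1.0 / math.sqrt(n_items)] * n_items
    # About (pi / 4) * sqrt(N) iterations maximise the success probability.
    iterations = int(math.floor(math.pi / 4 * math.sqrt(n_items)))
    for _ in range(iterations):
        # Oracle step: flip the sign of the marked item's amplitude.
        amps[marked] = -amps[marked]
        # Diffusion step: reflect every amplitude about the mean amplitude.
        mean = sum(amps) / n_items
        amps = [2 * mean - a for a in amps]
    # Measurement probabilities are the squared amplitudes.
    probs = [a * a for a in amps]
    return iterations, probs

iterations, probs = grover_search(n_items=8, marked=5)
most_likely = max(range(len(probs)), key=probs.__getitem__)
print(iterations, most_likely, round(probs[most_likely], 3))
```

With eight items, two iterations suffice to make the marked item by far the most likely measurement outcome. A simulation like this costs time proportional to N on a classical machine, so it only illustrates the mechanism and does not reproduce the speed-up.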

Although the market for quantum solutions to management problems is still in its infancy, with the first tests of Sycamore or Xanadu in 2022 focused on solving "esoteric" mathematical problems that have no practical applications (Madsen et al., 2022), it is expected to expand substantially in the next decade. A BCG (Boston Consulting Group) report estimates that this market will be worth more than $450 billion per annum in the next decade (Langione et al., 2019).

QC's computational power and different approach to generating results will also revolutionise market research in the digital marketing domain. The emergence of the digital ecosystem is posing enormous challenges for marketing practitioners. They must learn to manage new business models and new ways of generating revenue (Sorescu et al., 2011); to communicate via new channels and social platforms (Bolton et al., 2013); and to generate information from the increasing proliferation of basically unstructured data (Kumar et al., 2013). For example, the digital marketplace not only features B2C or B2B exchanges, but also a prolific C2C marketplace supported by platforms such as LuLu, eBay and YouTube (Leeflang et al., 2014). However, the management of online communication is different from offline. For example, offline communication campaigns involve paying a company for the contracted services, while online communication functions differently, as it is only paid for when clicking on the link to the website (Abou Nabout et al., 2012). At first glance, online communication might seem much more efficient, but there is a widespread perception among marketing practitioners that it does not easily translate into financial impact, due to the use of a number of partial and excessively indirect metrics that make it difficult for causal relationships to be established (De Haan et al., 2013). For example, it is common to attribute a sale to the last click on the banner, completely ignoring the customer's journey and the many stimuli that may have shaped the attitude and the purchase decision (Lemon & Verhoef, 2016). The problem becomes even more complex when companies use multiple online sales and promotion channels and media, and want to estimate the specific contribution of each of these channels to sales (Leeflang et al., 2014).

In short, the digital ecosystem has opened a widening gap between the complexity of digital markets and the ability of marketers to understand and cope with that complexity (Day, 2011; Leeflang et al., 2014). Therefore, new tools are needed for market research, with new metrics, and new data collection, processing and analysis systems to achieve results that will help to make the right decisions (Sáez-Ortuño et al., 2023d). QC will allow these problems to be tackled in a different way, drawing on the principles of quantum physics. This will entail new statistical analyses that were previously unaffordable and new ways of presenting the results to decision-makers, who will have to change their mentality in order to propose possible solutions.

While the increasing capacity of digital computers is facilitating the processing of huge databases, so-called big data (Grover et al., 2018), QC will take this to a whole new dimension. For example, in Google's first real test conducted in 2019, a 53-qubit processor called Sycamore was able to solve a problem in 200 seconds that would have taken a traditional computer 10,000 years (Arute et al., 2019). QC, unlike classical computing, works with qubits. While bits are units of information that can store a zero or a one, quantum bits, also called qubits, can represent any combination of zeros and ones simultaneously. Consequently, 53 qubits correspond to a computational state space of dimension 2^53 (approximately 10^16) (Arute et al., 2019).
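The size of the state space quoted above follows from the fact that n qubits span 2^n basis states. The short sketch below checks the arithmetic. The function name is hypothetical, and the memory estimate assumes 16 bytes per complex amplitude, which is an illustrative assumption rather than a figure from Arute et al. (2019).

```python
import math

def state_space_dimension(n_qubits):
    """Number of basis states (amplitudes) spanned by n_qubits."""
    return 2 ** n_qubits

dim = state_space_dimension(53)
print(dim)              # exact integer value of 2**53
print(math.log10(dim))  # order of magnitude, just under 16

# Assumption: one complex amplitude stored as two 64-bit floats (16 bytes).
bytes_needed = dim * 16
print(bytes_needed / 1e15, "petabytes to hold the full state vector")
```

The estimate shows why storing the full state of even a few dozen qubits becomes impractical on classical hardware.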

This study explores the possibilities of QC for addressing market research problems arising from the digital ecosystem. This is done in three phases: 1) a literature review to reveal the state of the art; 2) a content analysis to determine the main drivers of this technology, and 3) qualitative research with experts to project these drivers into the future.

The article is structured as follows. It begins with a brief description of the origins of quantum computing, which lie in quantum mechanics. This is followed by a description of the threefold methodology for exploring the adaptation of this technology to market research for business. It then describes the three studies, and ends with the discussion and conclusions.

Origin of quantum computers

QCs were proposed in the early 1980s, and were expected to surpass the computational capacity of classical computers for solving concrete problems (Benioff, 1980). This is not surprising, since QCs apply the basic principles of quantum mechanics at their core (Bova et al., 2021). For example, Shor (1994) proposed Las Vegas-type (randomised) algorithms to run on a QC for finding discrete logarithms and factoring integers. These two problems are considered difficult to solve with a traditional computer, and have been used as the basis for several proposed cryptosystems.

But what are these principles? Basically, that quantum mechanics enables probabilistic calculations of the observed characteristics of the displacements of elementary particles, and, moreover, that they follow a wave function in their displacement. In other words, to estimate the future displacement of a particle according to quantum mechanics, we will obtain as a result a probability density distribution according to a wave function (Griffiths, 1995).

The quantum concept was proposed in the early 20th century as a means to solve the problem of electromagnetic radiation from a black body because frequencies were detected in the emitted light that could not be explained by classical theory (Bub, 2000). A black body is one that perfectly absorbs and then re-emits all the radiation incident on it. In the world of classical physics, the radiation emitted by a black body was perfectly continuous. According to the results generated by the equations of classical electrodynamics, the sum of all the frequencies emitting thermal radiation in an object generated an amount of energy that tended to infinity. This result contradicted logic. Max Planck, a German physicist, proposed a mathematical stratagem that consisted of substituting a discrete sum of these frequencies instead of calculating their integral, thus solving the problem of the tendency to infinity (cited by Rydnik, 2001). He also hypothesised that electromagnetic radiation is absorbed and emitted by matter in the form of "quanta" of light (Rydnik, 2001).

This quantum proposal emerged when the laws and basic elements that made up classical physics had already been established, so the new proposals were not very well received. The essential elements were particles (atoms) and fields of study had been established where electrical, magnetic and gravitational forces were analysed (Weinberg, 2017). However, as Bub (2000) explains, the basic principles of quantum mechanics were not easy to establish, as they had to overcome strong opposition and disagreement regarding something that challenged a certain theoretical consensus on the behaviour of electromagnetic force, which is one of the four fundamental forces of nature and is the dominant one in interactions between atoms and molecules (Belot, 1998). According to classical theory, the atom consists of a nucleus of protons and neutrons surrounded by a swarm of electrons orbiting around it. Each moving electron will emit more intense light by virtue of its acceleration. This light was recognised as being the result of heating due to self-sustained oscillations of electric and magnetic fields (Weinberg, 2017). However, Einstein in 1905 considered these light waves to be like streams of massless particles, which later came to be called "photons". In other words, he established that electromagnetism was an essentially non-mechanical theory (Bub, 2000).

Almost two decades later, De Broglie (1923) and Schrödinger (1926) theorised that electrons could only occupy discrete orbits around the nucleus and, moreover, that they behaved as if they were waves (Weinberg, 2017). That is, the Newtonian idea that electrons were like small planets rotating around the nucleus was abandoned in favour of a system similar to that of sound waves (Rydnik, 2001). In addition, two types of frequency were considered to be at work in these waves, the fundamental mode and higher harmonics, the frequencies of the higher harmonics being integer multiples of the fundamental frequency. Thus, the beam of light emitted by an orbiting electron is displaced by integer multiples of the fundamental frequency of the electron's orbital motion. Furthermore, both the higher harmonic and fundamental wave frequencies are emitted simultaneously (Bub, 2000).

These simultaneously occurring combinations of frequencies cause electrons to generate probability waves. This is known as the Born rule, which associates to each point in space a probability distribution and, to the ensemble, a probability density function (Rydnik, 2001). In other words, uncertainty had been incorporated into the theory, as one could not predict with certainty the position that an electron occupies when performing an experiment, and this conveys a sense of imperfect knowledge (Weinberg, 2017). Heisenberg, in 1927, tried to capture this uncertainty by means of his principle of indeterminacy, which posits that when conducting an experiment with a subatomic particle, a definite position and a definite velocity cannot be measured at the same time (cited by Rydnik, 2001). The measurement of a position (momentum) destroys the possibility of measuring its momentum (position) precisely. That is, if you know exactly where a subatomic particle is, you have no idea what it is doing (Rydnik, 2001).
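In standard textbook notation, which is not taken from the sources cited above, the Born rule and the indeterminacy principle can be written compactly. Here ψ(x) denotes the wave function of a single particle in one dimension, σ_x and σ_p are the standard deviations of position and momentum, and ħ is the reduced Planck constant. The one-dimensional setting is an assumption made for simplicity.

```latex
\begin{align}
  P(a \le x \le b) &= \int_a^b |\psi(x)|^2 \, dx,
  \qquad \int_{-\infty}^{\infty} |\psi(x)|^2 \, dx = 1, \\
  \sigma_x \, \sigma_p &\ge \frac{\hbar}{2}.
\end{align}
```

The first line states that the squared modulus of the wave function acts as a probability density. The second states that reducing the spread in position necessarily increases the spread in momentum, and vice versa.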

Although an electron does not collide with an atom like a billiard ball, and can instead bounce in any direction, it is most likely to move in the direction in which the wave is strongest. So, for example, a system consisting of a single particle could be represented as a cloud of wave-shaped points distributed across a space and, at each point, an assigned number reflecting the probability of the particle occupying that position (Weinberg, 2017). In other words, the new quantum mechanics called into question the determinism of previous physical theories that came to be known as "classical". In a letter he wrote to Born in 1926, Einstein lamented that: "Quantum mechanics is very impressive. But an inner voice tells me that it is not yet the real thing. The theory produces a good deal but hardly brings us closer to the secret of the Old One. I am at all events convinced that He does not play dice." (Quoted by Pais (1982)).

Since then, there have been two approaches to quantum mechanics: the "realist" and the "instrumentalist". The former, advocated by Einstein and his allies, attempted to undermine the view of uncertainty by proposing, on the one hand, the existence of "hidden variables" that shaped entities behind the wave, and, on the other hand, through an extensive development of mental experiments, which are imaginative devices by which hypothetical scenarios are proposed and reasoning is used to try to obtain a solution. The most famous is the Einstein-Podolsky-Rosen paradox, which, in an attempt to explain the principle of locality, argues that when investigating the information generated by one particle, it would apparently be transmitted instantaneously to a second particle. This possibility of instantaneous remote actions makes localisation impossible and, moreover, does not allow for deterministic measurements and predictions about what will happen. Consequently, this approach suggests that quantum mechanics is a theory under construction and therefore incomplete (Pais, 1982).

The second approach, led by Bohr and his allies, and also known as the "Copenhagen interpretation", proposes that all we can know about the quantum world is the effects that we can observe after an intervention. Hence, the Copenhagen interpretation incorporates the indeterminacy principle, which states that one cannot simultaneously know with absolute precision the position and momentum of a particle. Furthermore, it is proposed that the uncertainty described in the Heisenberg principle does not reflect science's inability to unravel the laws governing nature, but that uncertainty is a law of nature. In an experiment to measure (observe) the displacement of a subatomic particle, it makes no sense to speculate about where it actually is, its momentum or the direction of its spin, but rather to consider that particles exist in a superposition of potential states (Pais, 1982).

The Copenhagen interpretation is considered the traditional or orthodox one. From these premises, some physicists who take an instrumentalist view maintain that the probabilities inferred from the wave function are objective. In quantum mechanics, however, these probabilities do not exist until the researcher decides what he or she wants to measure, such as the probability density of a subatomic particle spinning in a particular direction. Unlike classical physics, in quantum mechanics one has to choose the fact that one wants to measure, since one cannot measure everything simultaneously (Weinberg, 2017).

This instrumentalist view derived from the Copenhagen interpretation has made the development of QC possible. The adoption of a wave function, however simple, always provides much more information than a simple binary choice and makes computers that store and process information in this type of wave function much more powerful than ordinary digital computers. However, little is known about how this computational power is being applied to solve business-related problems and, in particular, market research questions. In order to shed some light on these facts, a bibliometric study is proposed to obtain an overview of the state of the art.

Methods

Bibliometric studies are quantitative studies that use bibliographic material, such as authors, source institutions or universities, countries, keywords, citations and co-citations, among others, to reveal the fundamental elements in the evolution of a field of knowledge (Broadus, 1987; Pritchard, 1969). All these studies start from the principle that the bibliographic information recorded for an article captures its essence and is consequently an adequate representation of the knowledge it provides. Thus, the study of a set of bibliographic information within a field of knowledge will represent the knowledge structure of the discipline, i.e. the basis on which the content and results of the research are developed (Culnan et al., 1990; Samiee & Chabowski, 2012). However, although thousands of articles are published every year in most fields, only those that are published in the highest impact journals and those that are widely cited over time end up forming the basis of the discipline (Samiee & Chabowski, 2012).

Although several studies have conducted bibliometric analyses of the applications of QC in a general field, for example, Sood (2023) or Coccia et al. (2024), there are none on its applications in the field of business and market research. For example, Sood (2023) used a sample of 5096 articles published between 2013 and 2022 in the WoS (Web of Science database) to find that interest in QC underwent a significant upturn from 2018 onwards. In other words, the topic did not arouse much interest until only about five years ago. Sood also proposes a classification of the fields of study into five domains: quantum communication (QCo), quantum algorithm and simulation (QAS), quantum enhanced analysis methods (QEAM), quantum cryptography and information security (QCIS) and quantum computational complexity (QCC). Of these, the QAS domain is the one that has generated the largest number of publications and, within this domain, market research appears as a topic. By country, the top contributors are China, the USA, India and Australia (Sood, 2023). From another perspective, Coccia et al. (2024) analyse a sample of 14,132 articles from WoS considering three time periods (1990–2000, 2001–2010 and 2011–2020), with the aim of observing whether the topics of study remain the same or evolve over time. Their results suggest that in the first of those decades there was a dispersion of topics around QC (e.g., Quantum Memory, Adiabatic Quantum Computing), but later the focus shifted towards topics based on innovative applications for markets (e.g., Quantum Image Processing, Quantum Machine Learning, Blind Quantum Computing) (Coccia et al., 2024).

In contrast to most studies that only consider one method of analysis, we will investigate the intellectual structure of QC applied to market and business research from a multi-method approach. We achieve this by applying a standard bibliometric analysis, a content analysis of the references and a qualitative investigation of the themes highlighted in the above analyses. This multi-method procedure sets a precedent for future background research on topics representing disruptive change, such as QC, consisting of two types of analysis: (1) examining bibliometrics descriptively and with content analysis and (2) studying salient research streams through in-depth analysis. The focus of our research is on applications of QC for market research and business problem solving. The specific objectives are: to identify the main journals, topics, authors and countries that are shaping the trends in QC knowledge in the field; to develop a better understanding of the topics that have generated recent changes in the literature; and to look ahead at the most salient issues.

Study 1

Following standard protocol, this study uses bibliometric indicators (Garfield, 1955) such as the number of publications as an estimator of productivity and the number of citations as an estimator of influence (Ding et al., 2014; Svensson, 2010). Although the selection period is usually subjective, in this study we have taken as our starting point the moment when the number of citations exceeded twenty. Thus, the period analysed was from 2008 to 2024 and the data were extracted from the Web of Science (WoS). The numbers are shown in Table 1.

Table 1. Annual citation structure of publications.

Abbreviations: >500, >200, >100, >50, >20, >10, >5, >1 = Number of papers with more than 500, 200, 100, 50, 20, 10, 5 and 1 citations.

Although the original idea of our research was to analyse the possible precedents of using quantum computing to conduct market research, the results of the combination of the words "marketing", "marketing research" with "quantum computer" did not generate a sufficient database to conduct any analysis, so we extended it to Business in all fields. Thus, the data collection process identified all articles using the combination of three terms: quantum* (Topic) and computing* (Topic) and Business* (All Fields). Similar to other studies, each article was identified based on keyword searches in four critical fields: title, abstract, author keywords and reference-based article identifiers (Cornelius et al., 2006; Sáez-Ortuño et al., 2023b; Samiee & Chabowski, 2012). Once the publications had been extracted, they were reviewed by the authors to determine their suitability for inclusion in the database for analysis. For example, some publications that did not address the topic of business in the abstract were excluded. The resulting number of publications was 209, which formed the database for extracting co-citation data. To complement the analysis, a graphical mapping of the bibliographic material is proposed (Cobo et al., 2011; Sinkovics, 2016) using VOS visualisation software (Van Eck & Waltman, 2010). The VOS viewer collects bibliographic data and provides graphical maps in terms of bibliographic coupling (Kessler, 1963), co-citations (Small, 1973), co-authorship and co-occurrence of author keywords.
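The two link types named above, co-citation and bibliographic coupling, can be illustrated with a small sketch. It is not the procedure used by the VOS software. The paper identifiers and reference lists below are hypothetical toy data, and the function names are chosen only for illustration. Two cited works are co-cited when they appear together in one reference list, while two citing papers are bibliographically coupled when their reference lists overlap.

```python
from collections import Counter
from itertools import combinations

# Hypothetical toy data: each citing paper mapped to the set of works it cites.
references = {
    "paper_A": {"Shor1994", "Grover1996", "Preskill2018"},
    "paper_B": {"Shor1994", "Grover1996"},
    "paper_C": {"Grover1996", "Preskill2018"},
}

def cocitation_counts(refs):
    """Count how often each pair of cited works shares a reference list."""
    counts = Counter()
    for cited in refs.values():
        # Sorting gives every pair a single canonical (alphabetical) order.
        for pair in combinations(sorted(cited), 2):
            counts[pair] += 1
    return counts

def coupling_strength(refs, paper_a, paper_b):
    """Bibliographic coupling: number of references two citing papers share."""
    return len(refs[paper_a] & refs[paper_b])

cocit = cocitation_counts(references)
print(cocit[("Grover1996", "Shor1994")])                    # cited together by A and B
print(coupling_strength(references, "paper_A", "paper_C"))  # shared references of A and C
```

In a map built from such counts, pairs with higher counts would be drawn closer together or joined by thicker lines.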

The set of selected studies, 209, generated 11,926 citations as illustrated in Table 1. This table is directly related to Fig. 1, which illustrates the evolution of the number of articles published in the period from 2008 to 2024. The figure shows three basic stages: from 2008 to 2010, where the number of publications per year was below five and the number of citations was under a hundred; the period from 2011 to 2019, where the number of publications still failed to reach double digits, but citations soared (e.g. three articles in 2012 generated 1419 citations, 7 in 2014 generated 1924 citations, and just 5 in 2018 generated 3205 citations); and the last five years, where the highest volume of publications is concentrated. In other words, the graph shows that the topic has only truly begun to arouse interest in the last five years. These results corroborate findings from previous studies about the take-off in the volume of publications in the last five years (Sood, 2023) as well as a concentration of topics of interest in application to the business world (Coccia et al., 2024). However, the distribution of citations is interesting. Based on the premise that the more a study is cited, the greater its influence (Samiee & Chabowski, 2012), the 2012–2019 period can be perceived as the one when the fundamental principles of quantum computing were built, forming the basis for subsequent discoveries, which is reflected in the high number of citations.

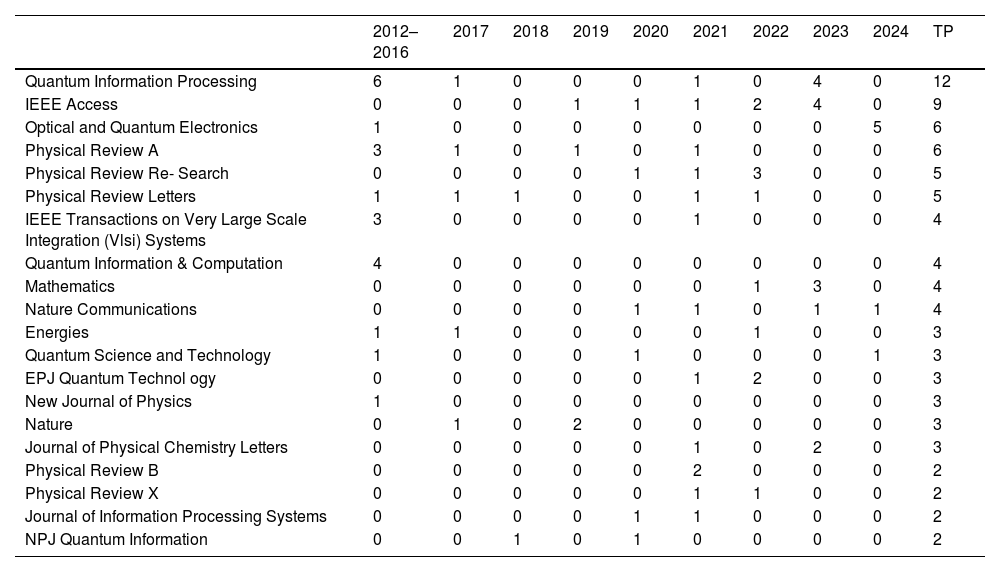

Regarding the list of journals that have published the largest volume of articles on the research topics, Table 2 lists the top 20 and is connected to Fig. 2, which presents a citation analysis of the journals made by VOS (Van Eck & Waltman, 2010). Following previous recommendations in bibliometric research, we consider the journals that are the most influential in their field, namely Quantum Information Processing, IEEE Access, Electronics, Physical Review A and Physical Review Letters (Table 2), which also play a central role in the co-citation network shown in Fig. 2. Furthermore, given the objective nature of citation and co-citation data, the emerging maps are true representations of the proximities of cited works (Wasserman & Faust, 1994). Thus, when journals are located closer together, they are jointly contributing to shaping a particular area within quantum computing, while those that are further apart represent emerging paradigms in specialised fields such as energy, chemistry, etc. In addition, the size of the nodes represents the number of citations received by each journal, while the thickness of the connecting lines indicates the strength of the co-citation relationship. Fig. 2 illustrates the existence of five clusters, each dominated by a representative journal. The central role of the journals Physical Review Letters and Quantum Information Processing (green cluster) and Nature (blue cluster) stands out, while the more distant clusters, led by journals such as the Journal of High Energy Physics (violet cluster), the Journal of Chemical Physics (yellow cluster) and Lecture Notes in Computer Science (red cluster), are not among the top twenty journals.

Table 2. Top 20 journals.

Abbreviations: TP = total papers.

The analysis of the data clearly reveals a wide range of fields of analysis and applications derived from quantum computing. In order to further specify the lines of research action in this discipline and to identify the references responsible for the construction of the emerging paradigms, the 30 most cited articles (i.e. the most influential) have been selected for analysis of their content (Table 3). Although limiting the analysis of a topic to the most influential publications has methodological support (e.g., Samiee & Chabowski, 2012), it is also essential to establish a selection criterion that balances potential articles by year of publication (e.g., Ramos-Rodríguez & Ruíz-Navarro, 2004). In our case, the selected article needed to have more than three citations per year to be included. Although all the articles refer to some concept linked to the business world, the links are generally rather indirect. However, among this selection, there are three articles that propose direct applications to the business world: Chatterjee et al. (2021), Cheung et al. (2021), and Kusiak (2019).
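The selection rule described above (keep articles with more than three citations per year, then take the thirty most cited) can be sketched as follows. The records are hypothetical, and the way years are counted is an assumption: the text does not state how citations per year were computed, so the sketch counts the publication year as one year of exposure and uses 2024 as the reference year.

```python
def citations_per_year(total_citations, pub_year, ref_year=2024):
    """Average citations per year since publication."""
    # Assumption: the publication year itself counts as one year of exposure.
    years = max(ref_year - pub_year + 1, 1)
    return total_citations / years

def select_influential(papers, min_cpy=3.0, top_n=30):
    """Keep papers above the citations-per-year threshold, most cited first."""
    eligible = [
        p for p in papers
        if citations_per_year(p["tc"], p["year"]) > min_cpy
    ]
    return sorted(eligible, key=lambda p: p["tc"], reverse=True)[:top_n]

# Hypothetical records: identifier, publication year, total citations (tc).
papers = [
    {"id": "P1", "year": 2012, "tc": 900},
    {"id": "P2", "year": 2010, "tc": 30},
    {"id": "P3", "year": 2021, "tc": 40},
]

selected = select_influential(papers)
print([p["id"] for p in selected])
```

Normalising by age in this way prevents older articles from being favoured simply because they have had longer to accumulate citations.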

Table 3. The 30 most cited documents.

Abbreviations: C/Y = Citations per year. TC = total citations.

Again, we use two bibliometric methods to determine the most cited authors: the frequency ranking (Table 4) and the spatial representation of links and nodes with VOS (Fig. 3). Table 4 shows the authors ordered by the number of publications, but also includes the number of citations and several derived relationships. Recalling that the number of publications is an estimator of productivity and the number of citations of influence (Ding et al., 2014; Svensson, 2010), the correlation between them was estimated as a criterion for the consistency of both indicators. The correlation coefficient between number of publications and citations is r = 0.134, and that of TC/TP and H is r = −0.001, indicating a low degree of coherence. Thus, while the ranking by number of publications is led by Tavernelli, Ivano; Bravyi, Sergey; Gambetta, Jay M.; and Kandala, Abhinav, the cluster structure, shown in Fig. 3, displays a spatial representation of four groups led by different authors. One led by Shor, Peter W. (the blue cluster) focused on mathematical modelling; another led by McClean, Jarrod R., and Kandala, Abhinav (the green one) focused on chemistry and molecular analysis; somewhat further away, the group led by Nielsen, Michael A. (the red one) focused on geometric development and polynomial equations; and, on the opposite side, totally distant from the rest, the one led by Glover, Fred (the yellow one) had a very particular focus on the development of Quadratic Unconstrained Binary Optimisation models. However, neither Shor, Peter W., nor McClean, Jarrod R., nor Nielsen, Michael A., are among the thirty most cited authors.
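The consistency check described above relies on a correlation coefficient between two indicator columns. The sketch below assumes that Pearson's r is the coefficient intended, which the text does not state explicitly, and uses hypothetical publication and citation counts rather than the values in Table 4.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of products of deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Square roots of the summed squared deviations of each variable.
    dev_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    dev_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (dev_x * dev_y)

# Hypothetical author-level data: total papers (TP) and total citations (TC).
total_papers = [5, 4, 4, 3, 3]
total_citations = [120, 900, 60, 1500, 45]

r = pearson_r(total_papers, total_citations)
print(round(r, 3))
```

Pearson's r always lies between −1 and 1. Values near zero mean that ranking authors by productivity says little about their ranking by influence.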

Table 4. Top 30 leading authors.

Abbreviations: TP = total papers; TC = total citations; H = h-index; TC/TP = ratio of citations divided by publications.

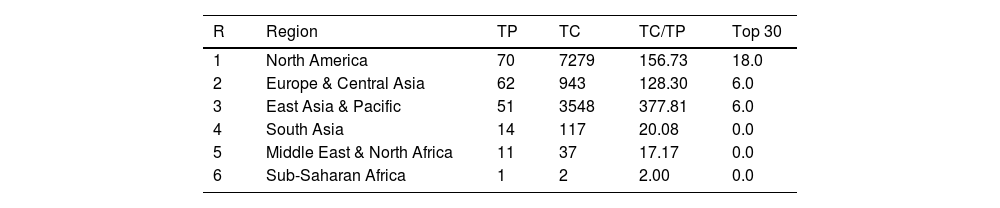

To complete the analysis, an examination of the most productive and influential countries is included. The country analysis is shown in Table 5, Fig. 7, and Table 7, grouped by supranational region. Table 5 shows a ranking of countries by number of publications, citations, population and various derived relationships. The estimated correlation between number of publications and citations gives an r = 0.948, indicating a high degree of consistency in the proposed ranking. The data collected indicate that there are two countries that lead scientific developments in this field, the USA and China, but four countries, India, the United Kingdom, Canada and Spain, have a two-digit number of publications. However, the analysis of the clusters in Fig. 7 clearly shows four groups: red, in the US orbit, where Canada and Spain are included; green, in the Chinese orbit, where India is included; blue, in the UK orbit; and yellow, in the Australian orbit. Table 7 synthesises these results by showing the three most relevant supranational regions: North America, Europe & Central Asia, and East Asia & Pacific.

Table 5. The most productive and influential countries.

Abbreviations: TP = total papers; TC = total citations; H = h-index; TC/TP = ratio of citations divided by publications; Population = thousands of inhabitants; TP/POP = Total papers per million inhabitants; TOP 30 = the 30 most cited papers.

The analysis by institutions is shown in Table 6 and Fig. 4. Table 6 describes a highly complex distribution, for although it is ordered by number of publications, it is very poorly correlated with the distribution of citations (r = 0.19). In other words, the ordering by number of publications is not very consistent. However, when considering the relationship between citations and publications, IBM Thomas J. Watson Research Center, California Institute of Technology, the University of Melbourne, and the University of Macau stand out. Fig. 4 supports interpretation by shedding further light on these relationships. Seven clusters are visible: the red cluster, led by the University of Waterloo and Princeton University; the yellow cluster, led by Massachusetts Institute of Technology (MIT); the green cluster, led by the University of Oxford; the brown cluster, led by IBM Thomas J. Watson Research Center; the blue cluster, led by the National Physical Laboratory; the orange cluster, led by the University of Colorado; and finally, the violet cluster, led by Edhec Business School.

The most productive and influential institutions.

Abbreviations are available in previous tables except for: ARWU = Academic Ranking of World Universities and QS = Quacquarelli Symonds University Ranking.

Publications by supranational regions.

Abbreviations: TC/TP = ratio of citations divided by publications.

Finally, the keyword analysis was carried out using two graphs produced with the VOSviewer software: Fig. 5 shows the co-occurrence of author keywords and Fig. 6 shows the co-occurrence of all keywords. Fig. 5 presents an orbital map of the 100 strongest connections, with a threshold of two documents, which shows a galactic structure formed by two crowns. There is a concentration of nodes around "Quantum Computing", with some relevant satellites such as "Artificial Intelligence", while orbiting at the edge of the galaxy is a crown of motley terms of little relevance, such as "Intelligent Manufacturing" and "Neuro Decision-making". This graph indicates that this is a developing field of knowledge: the generic terms that make up the theoretical basis, namely "Quantum Computing", "Optimisation" and "Software Evolution", predominate in the centre, but their application to specific fields is still very scarce, with only "Artificial Intelligence" and "Atom Relay Switch" standing out, while numerous applications such as "3G Mobile Communication" and "Healthcare and Bioinformatics" are being explored.
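The counting step that underlies a keyword co-occurrence map can be sketched as follows. This is only an illustration of raw co-occurrence counting with a minimum-document threshold; it does not reproduce the normalisation, clustering or layout performed by VOSviewer, and the function name and the toy keyword lists are hypothetical.

```python
from collections import Counter
from itertools import combinations


def cooccurrence(docs_keywords, min_docs=2):
    """Count how often two keywords appear in the same document.

    Keywords that occur in fewer than `min_docs` documents are dropped
    before counting, mirroring a threshold of two documents.
    """
    doc_freq = Counter()
    for keywords in docs_keywords:
        doc_freq.update(set(keywords))
    kept = {k for k, n in doc_freq.items() if n >= min_docs}

    links = Counter()
    for keywords in docs_keywords:
        present = sorted(set(keywords) & kept)
        for a, b in combinations(present, 2):
            links[(a, b)] += 1
    return links


# Hypothetical keyword lists, one per document.
toy_docs = [
    ["quantum computing", "optimisation"],
    ["quantum computing", "optimisation", "artificial intelligence"],
    ["quantum computing", "artificial intelligence"],
    ["neuro decision-making", "quantum computing"],
]
toy_links = cooccurrence(toy_docs)
for pair, weight in toy_links.most_common():
    print(pair, weight)
```

In this toy input, "neuro decision-making" appears in only one document and therefore falls below the threshold, which is how peripheral terms are filtered out before a map is drawn.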

Study 2

A content analysis was performed on the abstracts of the thirty articles selected in Table 3. This is a qualitative, systematic and replicable technique for synthesising a long text, either from an interview or a piece of writing, into a small number of words following specific coding rules (Berelson, 1952; Krippendorff, 2018). Although content analysis is not limited to the study of texts, as coding also exists of print advertisements (Spears et al., 1996), of music included in television commercials (Allan, 2008) or of actions associated with gender roles in television advertisements (Verhellen et al., 2016), the most commonly used data source is written text (Krippendorff, 2018).

Content analysis basically entails three approaches: lexical analysis (nature and richness of vocabulary), syntactic analysis (verb tenses and modes) and thematic analysis (themes and frequency) (Oliveira et al., 2013). These approaches have been used both separately and complementarily in various applications such as identification of authors of anonymous texts, detection of changes in public opinion, or brand image assessments, among others (Stemler, 2000). In this study it was used to examine trends and patterns in documents, i.e. to analyse abstract themes. Although there are software packages that can be used for content analysis, such as MAXQDA® and NVivo®, given that neither of them replaces the role of the researcher in the coding process (Oliveira et al., 2013; Krippendorff, 2018) and that the number of abstracts, 30, is manageable, our analysis was carried out by the researchers themselves.

The procedure consisted of identifying and coding phrases or expressions that refer to the themes proposed in the analysed paper, according to the usual procedure followed in the literature (Sáez-Ortuño et al., 2023c; Tuomi et al., 2021). Coding followed a three-step procedure. First, abstracts were read and phrases or expressions related to the topic of the study were highlighted. Second, the highlighted sentences were coded, resulting in 62 initial codes. Third, the codes were grouped into between 8 and 10 categories and, in order to improve internal validity, two of the researchers read, highlighted and coded the thirty abstracts separately (Sáez-Ortuño et al., 2023c; Zaman et al., 2023). To complement this last step, the degree of convergence in coding was checked using Cohen's κ estimate and, in both cases, indicated good agreement (value above 0.80) (Landis & Koch, 1977). Certain discrepancies were discussed and the resulting eight final codes are shown in Table 8.
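For readers unfamiliar with the agreement statistic, Cohen's κ compares the observed proportion of agreement between two coders with the agreement expected by chance, κ = (p_o − p_e) / (1 − p_e). The following is a minimal sketch under that standard definition; the function name and the example labels are hypothetical and do not correspond to the codes used in this study.

```python
from collections import Counter


def cohen_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders who labelled the same items."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must label the same non-empty set of items.")
    n = len(coder_a)

    # Observed agreement: share of items given the same label by both coders.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n

    # Chance agreement: product of each coder's marginal label proportions.
    freq_a = Counter(coder_a)
    freq_b = Counter(coder_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)

    if expected == 1.0:
        # Both coders used a single identical label throughout; kappa is
        # undefined here, and returning 1.0 is a convention of this sketch.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical labels assigned by two coders to four abstracts.
toy_a = ["algorithms", "algorithms", "big data", "big data"]
toy_b = ["algorithms", "algorithms", "big data", "algorithms"]
print(cohen_kappa(toy_a, toy_b))
```

In this toy case the coders agree on three of four items (p_o = 0.75) while chance agreement is p_e = 0.5, so κ is well below the raw agreement rate; this is why κ rather than simple percentage agreement is reported.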

Coding of the content of the 30 papers.

Quantum computing has inspired a whole new generation of scientists, including physicists, engineers and computer scientists, to fundamentally change the landscape of information technology. Although it is a recent technique, numerous articles recall the initial emergence of the quantum physics revolution, and the subsequent advances that have led to the current state of knowledge (Preskill, 2018; Havlíček et al., 2019).

The field is still described as being in its infancy, as the available devices have limited capacities (between 5 and 79 qubits) and, moreover, are mostly located in laboratories (Havlíček et al., 2019; Córcoles et al., 2020). However, the lattice structure of the cloud is pushing the boundaries of what could be simulated in the past, enabling exploration of the first quantum systems (Tacchino et al., 2020). These constraints have led to a focus on solving problems that are not overly complex. For example, molecular studies involving only hydrogen and helium molecules have been performed (Kandala et al., 2017). The resolution of difficult problems is expected to require processors with millions of qubits and relatively low error rates (Córcoles et al., 2020). Mitigation of the errors generated by these devices appears to be one of the hard-to-solve problems, for which purposes corrective mechanisms such as Noisy Intermediate-Scale Quantum (NISQ) have been proposed (Preskill, 2018). However, even with limitations, the problem-solving capability of medium-sized quantum computers is considered to be superior to that of classical ones (Havlíček et al., 2019; Córcoles et al., 2020). It is also stimulating to consider the possibility of solving problems that are considered intractable even on the fastest classical computers (Kandala et al., 2019; Córcoles et al., 2020).

Fundamental concepts

Given the novel nature of the concept, theoretical development on its ontology, methodology and epistemology is required. Quantum computing uses quantum bits (qubits) instead of bits and hence represents a different paradigm in the way calculations are performed and problems are solved compared to standard classical computers (Kandala et al., 2017; Havlíček et al., 2019). Quantum physics procedures are described for achieving processor acceleration (including entanglement between qubits) so that correlations can be explored in problems with wave functions in a way that generates the correct answer at the end of the computation by means of constructive interference (several waves are added together, creating another wave with a larger amplitude) (Córcoles et al., 2020). These procedures contrast with the standard computational picture, in which every fundamental information unit is definitely in state 0 or 1 (Ollitrault et al., 2020; Bravyi et al., 2021).
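The contrast between a bit and a qubit, and the role of interference, can be illustrated with a minimal single-qubit simulation. This is a sketch in plain Python, not code for a real quantum device: the state is a pair of amplitudes, the Hadamard gate is the standard 2×2 matrix, and the helper names are hypothetical.

```python
from math import sqrt

# Standard Hadamard gate as a 2x2 matrix.
HADAMARD = [
    [1 / sqrt(2), 1 / sqrt(2)],
    [1 / sqrt(2), -1 / sqrt(2)],
]


def apply_gate(gate, state):
    """Multiply a 2x2 gate by a two-amplitude state vector."""
    return [sum(gate[r][c] * state[c] for c in range(2)) for r in range(2)]


def probabilities(state):
    """Measurement probabilities are the squared magnitudes of the amplitudes."""
    return [abs(amplitude) ** 2 for amplitude in state]


zero = [1.0, 0.0]                          # the qubit is definitely in state 0
superposed = apply_gate(HADAMARD, zero)    # equal superposition of 0 and 1
back = apply_gate(HADAMARD, superposed)    # second gate makes amplitudes interfere

print(probabilities(superposed))
print(probabilities(back))
```

After the first gate, a measurement would return 0 or 1 with equal probability. After the second gate, the two contributions to state 1 have opposite signs and cancel, while the contributions to state 0 add up, so the outcome 0 is recovered with probability close to one. Quantum algorithms arrange such cancellations and reinforcements so that the correct answer is the most likely measurement outcome.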

However, quantum computers are fragile and susceptible to noise, which leads to errors and incorrect responses. Given the number of operations required to implement any quantum algorithm, it is imperative for any quantum computation to incorporate some means of error correction (Fowler, 2015; Preskill, 2018; Kandala et al., 2019). The problems generated by the use of a set of gates are also discussed. Although gates are used to reduce processing overhead by moving information from one processor to another, they require more effort in terms of calibration and stabilisation (Scherer et al., 2017; Preskill, 2018). Numerous experiments have attempted to optimise these processes (Bravyi et al., 2017; Scherer et al., 2017, 2021).

Algorithmic design and development

Some authors consider that the main advantage of quantum computers is the possibility of working with very complex algorithms rather than the ability to perform fast operations (Amy et al., 2013). Therefore, getting the most out of quantum computers requires the design of sufficiently complex algorithms that cannot be efficiently simulated on a classical computer (Córcoles et al., 2020). Many algorithms for quantum computing can be simulated on classical computer lattice (cloud) systems, but this has limited capacity, as estimation quickly becomes more difficult as more qubits of information are added (Fowler, 2015).
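The reason classical simulation becomes harder with each added qubit can be shown with a back-of-the-envelope calculation. The sketch below assumes a dense state-vector simulation, in which an n-qubit state requires 2^n complex amplitudes, and assumes 16 bytes per amplitude (two 64-bit floats); other simulation methods have different costs, and the function name is hypothetical.

```python
def statevector_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory needed to store a dense n-qubit state vector.

    Assumes one complex amplitude per basis state (2**n of them) and
    16 bytes per amplitude; both are assumptions of this sketch.
    """
    return (2 ** n_qubits) * bytes_per_amplitude


for n in (10, 20, 30, 40, 50):
    gib = statevector_bytes(n) / 2 ** 30
    print(f"{n} qubits: {gib:,.6f} GiB")
```

Under these assumptions every additional qubit doubles the memory requirement, so 30 qubits need 16 GiB while 50 qubits need about 16 million GiB, which is beyond the memory of any single classical machine.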

Also, the design of algorithms needs to address the noise problems of quantum computers, for which purpose the use of quantum error correction (QEC) codes is proposed. Experiments and simulations are conducted to test their performance, providing estimates of what will ultimately be required to operate various algorithms in fully robust (fault-tolerant) computing (Fowler et al., 2012; Fowler, 2015). To this end, open-source collaborations such as Qiskit have been proposed for the design of robust algorithms and systems (Córcoles et al., 2020).
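The basic idea behind error correction, namely trading redundancy for reliability, can be illustrated with a repetition code decoded by majority vote. This sketch is a classical analogue of the simplest bit-flip code and is not one of the codes evaluated in the cited studies: actual QEC detects errors through syndrome measurements without reading the encoded data, and must also handle phase errors. Function names are hypothetical, and independent bit flips with probability p and an odd block length are assumed.

```python
from math import comb


def encode(bit, n=3):
    """Repeat one logical bit n times (n is assumed to be odd)."""
    return [bit] * n


def decode(bits):
    """Recover the logical bit by majority vote."""
    return int(sum(bits) > len(bits) / 2)


def logical_error_rate(p, n=3):
    """Probability that more than half of n bits flip, each independently with probability p."""
    return sum(
        comb(n, k) * p ** k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )


received = encode(1)
received[0] ^= 1                      # a single bit flip is corrected
print(decode(received))

for n in (1, 3, 5, 7):
    print(n, round(logical_error_rate(0.1, n), 6))
```

With a physical error probability of 0.1, the three-bit code fails only when two or three bits flip, which lowers the logical error rate to 0.028, and longer blocks lower it further. The same trade-off explains why fault-tolerant quantum computing is expected to need many physical qubits for each logical qubit.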

Data analysis

As the speed of information growth exceeds Moore's Law, as it did at the beginning of this century, data overload is both a major challenge for researchers and a source of problems for the population as a whole (Chen & Zhang, 2014). For researchers, there is the problem of dealing with completely unstructured information, while the general public faces uncertainty about the origin, veracity and motivations of information, particularly regarding the spread of ‘fake news’ (Kusiak, 2019). An illustrative example of this concern is that of studies on medical diagnosis and treatment. The availability of electronic medical records has increased the volume and complexity of information available on each patient, leading to an increase in the number of medical alerts, with the consequent ‘alert fatigue’, thus necessitating the design of algorithms to facilitate rapid diagnosis and accurate treatment proposals (Dilsizian & Siegel, 2014). At the moment, the range of data that can be analysed is very limited. For example, quantum computing has been used to improve the performance of a binary classifier (Havlíček et al., 2019) or for stochastic Chebyshev approximations to perform spectral sums of mathematical matrices (Han et al., 2017).

Applied quantum computing

The range of studies is still quite limited. Applications have been reported in chemistry (Córcoles et al., 2020; Havlíček et al., 2021), medicine (Dilsizian & Siegel, 2014) and physics (Abramsky & Brandenburger, 2011; Scherer et al., 2017), as well as some direct applications in management and business. The latter have arisen in contexts such as digital production and manufacturing organisations (Chatterjee, 2021) or logistics and cybersecurity (Cheung et al., 2021).

Big data

Some authors propose that quantum computing could be used to tackle more recent problems, such as the challenges associated with Big Data in terms of data capture, storage, analysis and visualisation (Chen & Zhang, 2014). Abramsky and Brandenburger (2011) address the problems arising from information entanglement, which is often a key computational resource, in the search for solutions and propose a new combinatorial condition, which generalises the "parity tests" that are commonly found in the literature.

Machine learning

Another tool that quantum computing hopes to advance is machine learning. For example, it is hoped that the performance of kernel methods in pattern design can be improved through the use of support vector machines (SVMs) (Havlíček et al., 2019). Applications in quantum machine learning (Fowler et al., 2012) and quantum simulation (Preskill, 2018) have also been explored. Havlíček et al. (2019) demonstrate how quantum state space can be used as a dimension of features that can shape supervised learning. Adaptations and combinations of classical and quantum algorithms are also considered. For example, Chen et al. (2021) propose a quantum neural network architecture for wave space classification, while Kandala et al. (2019) propose the combination of quantum and classical techniques to mitigate errors in noisy quantum processors running machine learning algorithms.

Artificial intelligence

Some articles look at the use of artificial intelligence in cardiac medicine and imaging, as well as the concept of personalised medicine. The combination of AI, big data and massively parallel computing offers the potential to create a revolutionary way to practice personalised and evidence-based medicine (Dilsizian & Siegel, 2014). Chatterjee et al. (2021) propose the identification of the socio-environmental and technological factors that influence industrial and digital technology companies to adopt technologies that integrate both AI and QC. This is a field of research that, although still in an embryonic state, looks set to be one of the great challenges of the future.

Study 3

More questions than answers emerged from the bibliometric study and the content summary of the thirty most cited papers. From these, we derived a guide of semi-structured questions to put to experts in the field. Eight interviews were conducted with experts on quantum computing projects between January and April 2024. These included researchers from public centres such as the BSC-CNS (Barcelona Supercomputing Center - Centro Nacional de Supercomputación), researchers at private companies such as Fujitsu International Quantum Center, Multiverse Computing, or Qilimanjaro Quantum Tech, and researchers and owners of quantum software startups. The interview script covered topics such as the current state of development of quantum computing, the role of support centres, the possible effects of its development on the business world, and, finally, advice for those starting out in this field.

To investigate this topic, a descriptive approach was used to narrate the interviewees’ training and research experience, together with an interpretive approach to the issues addressed (Miles et al., 2013; Ospina et al., 2018). The naturalistic interpretive method involves understanding the processes that lead to the development of the object (quantum computing), the entities that foster its development, as well as the effect of their environments and the possible economic and social implications it will have on the development of the object. This helps to shape an enriched account of the experts' view of reality, providing conceptual insights, trends and estimation of effects rather than a description of ‘realities (…) reduced to a few variables’ (Rynes & Gephart Jr., 2004, p. 455).

The interview was structured in two stages (Trinczek, 2009): (1st) Contextualisation phase. The study was briefly introduced, possible doubts were addressed, consent protocols were fulfilled and the interviewee was asked to describe his or her training and research career. (2nd) Argumentative and discursive phase based on a script consisting of four questions: 1) Impact of advanced algorithms and quantum computing on the current business landscape; 2) Challenges and limitations of quantum technologies; 3) Role of universities and research centres; 4) Advice for people currently training in this field.

The content synthesis process began by transcribing the answers to the questions, which was followed by thematic analysis, the latter consisting of identifying phrases or expressions that refer to the object of study, and coding them (Braun & Clarke, 2019). The coding process entailed three steps (Sáez-Ortuño et al., 2023c; Tuomi et al., 2021): 1st) sections or expressions related to the argumentative themes were highlighted; 2nd) codes were assigned to the argumentative themes; 3rd) themes were grouped into nine thematic clusters. To improve internal validity, two of the researchers read the first three interviews and coded them separately to compare the degree of agreement in the coding process, which was estimated using Cohen's κ, reaching values above 0.80 (Landis & Koch, 1977). Furthermore, the different coding criteria were discussed until a consensus was reached.

The results show, broadly speaking, that the development of quantum computing is still in its infancy, but there is an intense demand for transformation driven mainly by external pressures, and the need to solve complex problems, rather than internal, natural research development. Expectations for the medium-term future are optimistic. From the process of highlighting content, coding and clustering, nine thematic groups were formed:

Status of research on advanced algorithms and quantum computing

Both the content analysis of the abstracts and the feedback gathered from the interviewees point to the view that quantum computing is still significantly underdeveloped and presently offers only limited practical applications. The challenges identified include the need to either substantially modify existing algorithms or develop entirely new ones tailored to quantum hardware, the occurrence of unstable results that undermine reliability, and a utility that is very specifically constrained to addressing problems related to mathematical series. Despite these substantial hurdles, there is a palpable sense of optimism about the future capabilities of this emerging technology. The potential to solve complex and previously intractable problems is compelling enough for many companies to choose to invest resources and strategies for its eventual maturation and integration. This proactive approach by businesses underscores a broader expectation that, with further development and refinement, the technology could radically transform various industries by offering new solutions and efficiencies. These anticipated advances could lead to significant economic and operational improvements across sectors, driving a wave of innovation and competitive advantage for early adopters.

Indirect effects on market research

Although quantum computing has not yet been directly applied to market research, its potential to revolutionise this field is significant, given its capabilities to optimise complex problems, manage vast databases, and generate a multitude of potential solutions with respective probabilities. The intrinsic power of quantum computing lies in its ability to perform computations at speeds and accuracies unachievable by classical computers, making it ideally suited for handling the intricate algorithms often required in market analysis. This could indirectly enhance market research tools, facilitating more sophisticated data analysis techniques that allow for the individual modelling of consumer behaviours and preferences. Such detailed insights could enable companies to predict consumer buying tendencies with far greater precision, tailoring marketing strategies to meet nuanced consumer demands effectively. Furthermore, quantum computing could tackle classical optimisation problems like the travelling salesman problem, a key challenge in logistics, with newfound efficiency. By improving route optimisation, quantum computing could significantly streamline marketing logistics, reducing costs and delivery times, and thereby enhancing the overall efficiency of marketing operations. The ripple effects of such advancements could lead to more targeted and efficient marketing campaigns, optimised resource allocation, and ultimately, a more profound understanding of market dynamics. As quantum technology continues to evolve and integrate into various business processes, its indirect impact on market research and the broader realm of marketing strategy design could be transformative, paving the way for more data-driven, precise, and effective marketing decisions.

Influence on strategic business decisions

Quantum computing represents a profound shift in the landscape of strategic decision-making by offering innovative methods to tackle complex problems with unprecedented efficiency. Traditional decision-making processes are frequently marred by cognitive biases, leading to suboptimal outcomes. However, quantum computing's integration with advanced technologies such as machine learning and artificial intelligence (AI) systems presents a promising avenue to mitigate these biases. By harnessing the superior processing power and parallelism of quantum computers, these AI tools can analyse vast datasets and identify patterns far beyond the capabilities of classical computers, thus reducing the influence of human cognitive limitations and increasing the objectivity of decisions. For instance, in sectors like finance and logistics, quantum-enhanced algorithms could optimise portfolio management or streamline supply chain dynamics more effectively than ever before. Furthermore, these quantum-powered tools offer the potential to deliver not just faster but also more accurate insights, enabling managers to make well-informed decisions swiftly. Despite these promising prospects, it is important to recognise that quantum computing is still in its nascent stages, and its practical implications for business and strategy are not fully realised. The technology's current limitations, including issues related to error rates and qubit coherence, mean that while the theoretical benefits are clear, the real-world impact of quantum computing on decision-making processes has yet to be concretely assessed. Nevertheless, as the technology matures and these limitations are overcome, the potential for quantum computing to significantly enhance decision-making processes and drive strategic advantages is immense, heralding a new era of efficiency and precision in business management.

Challenges for businesses to integrate emerging technologies

The integration of emerging technologies such as quantum computing into business operations presents a host of significant challenges that go beyond the initial high costs and complex infrastructure requirements. First, the sheer complexity of quantum technology demands an in-depth understanding of the fundamentals of quantum mechanics and computing principles, which are markedly different from those of classical computing. This leads to the second major challenge: the scarcity of skilled personnel. Businesses must invest in extensive training programmes or recruit highly specialised talent capable of developing and operating quantum algorithms, which are essential for leveraging the unique capabilities of quantum computing. Furthermore, the identification of practical, viable applications for quantum computing within existing business models is not straightforward. While sectors like cryptography, drug discovery, and complex system simulations stand to benefit immensely, the integration of quantum capabilities into these fields requires significant research and development efforts. Another major challenge is the technological maturity of quantum computing. As it stands, quantum technology is still in its infancy with many unresolved issues, such as error rates and qubit coherence times, which limit practical applications. Businesses must navigate these uncertainties and continuously adapt to rapidly evolving technological advancements. Finally, there is the strategic challenge of bridging the gap between current digital capabilities and the advanced potential of quantum technologies. Businesses need to align their IT strategies with quantum computing advancements without disrupting their ongoing operations. This strategic integration requires foresight, planning, and potentially restructuring of business processes to incorporate and fully exploit the benefits of quantum computing in the future. This balancing act between innovation, practical application, and operational integrity defines the broader landscape of challenges that businesses face when integrating emerging technologies like quantum computing.

Addressing the skills gap in human resources

In the rapidly evolving landscape of scientific research and business management, keeping up to date with the latest guidelines and criteria is becoming increasingly difficult due to the sheer volume of information generated every day. This information overload can obscure critical trends and innovations necessary for effective decision-making in both fields. Quantum computing, with its potential to process vast datasets exponentially faster than classical computers, offers a promising solution to this challenge. Mixed systems that integrate quantum computing capabilities can potentially revolutionise the way data is analysed, allowing for the extraction and synthesis of relevant information much more efficiently. This capability is particularly crucial for researchers and business managers who need to sift through extensive databases to find actionable insights. However, harnessing the full power of quantum computing requires a specialised skill set that is currently scarce in the workforce. Complex quantum algorithms and the principles of quantum mechanics are not typically part of standard education curricula. Recognising this gap, some universities and education institutions have started to offer courses and programmes focused on quantum computing and its applications. However, there is a significant need for broader and more profound initiatives in education spheres to train a new generation of professionals. These programmes should not only provide fundamental knowledge in quantum mechanics but also practical skills for applying quantum computing to real-world problems in science and business. The development of such education programmes must be accelerated and expanded to meet the growing demand for quantum-savvy professionals. This should involve collaboration between academia, industry leaders, and governmental bodies to ensure that the curriculum remains relevant to the rapidly changing technological landscape. As quantum computing continues to mature and its applications become more widespread, the ability of professionals to effectively utilise this technology will become a critical factor for leading innovation and maintaining competitive advantage in various sectors.

Role of universities and research centres

Education and research institutions play a pivotal role in the advancement and adoption of quantum technologies, serving as vital hubs for both fundamental knowledge and practical experience. By offering specialised courses and laboratories equipped with quantum computing resources, these institutions not only educate the next generation of scientists and engineers but also provide them with crucial hands-on experience that is often lacking in more traditional education settings. Beyond their educational role, these institutions are at the forefront of fundamental research that explores the theoretical limits and practical applications of quantum computing. This research is essential for pushing the boundaries of what quantum computing can achieve, leading to breakthroughs that could revolutionise various fields, including cryptography, materials science, and complex system modelling. The collaborative efforts within these institutions (between experienced researchers, industry experts, and young scholars) can foster the kind of innovative ecosystem that is critical for the continual advancement of quantum technologies. This synergy not only accelerates the development of new quantum algorithms and improved quantum hardware but also ensures that the challenges and opportunities of quantum computing are well understood and integrated into broader scientific and technological contexts.

Vision for the future of quantum technologies and economic impact

The interviewees, comprising leading experts and innovators within the field of quantum technologies, express a uniformly optimistic outlook on the future impact of these emerging technologies on the global economy. They anticipate significant potential for disruptive change across a myriad of sectors, including finance, healthcare, logistics, and cybersecurity, once quantum computing has achieved full maturity and becomes more widely accessible. This optimism is rooted in the inherent capabilities of quantum technologies to process and analyse data at speeds unattainable by classical computers, potentially unlocking new paradigms in problem-solving and decision-making. For instance, in finance, quantum computing could dramatically enhance the speed and accuracy of risk assessment and fraud detection, while in healthcare, it might enable the analysis of vast genomic datasets far more quickly, leading to faster and more personalised medical treatments. Furthermore, in logistics, optimisation processes that currently take days could be completed in minutes, revolutionising the efficiency of supply chains worldwide. The interviewees also highlight the potential for quantum computing to bolster cybersecurity through the development of virtually unbreakable encryption. However, they acknowledge that significant challenges remain, including scaling the technology, reducing error rates, and ensuring broad accessibility and affordability. Despite these obstacles, the collective view of these experts suggests a transformative future, wherein quantum technologies catalyse a new era of economic growth and innovation.

Keeping up with rapidly changing trends

Businesses aiming to remain at the forefront of technological innovation, particularly in the rapidly evolving field of quantum computing, can employ several strategies to ensure they are well-informed and prepared for the impending changes. One effective approach is to form partnerships with research institutions that are leading quantum technology research. These collaborations can provide businesses with direct insights into cutting-edge advancements and early access to new quantum computing technologies and methodologies. Additionally, keeping a keen eye on industry news through reputable tech news platforms, journals, and conferences focused on quantum computing can help businesses to track general trends, breakthroughs, and the competitive landscape. Another proactive strategy involves the creation of specialised in-house teams dedicated to researching and understanding the potential impacts and applications of quantum computing within the business sector. These teams can focus on translating complex quantum mechanics into strategic business opportunities and preparing the company for integration of quantum technologies. Alternatively, for businesses without the resources to support full-time internal teams, consultation with specialised firms that offer expertise in quantum computing can be a valuable option. These firms can provide tailored advice on how quantum computing can be leveraged for specific business needs and can help develop strategies for adoption as the technology matures. By incorporating these strategies (partnering with academia, keeping up to date with industry news, and investing in either in-house expertise or consultancy services), businesses will not only be informed about developments in quantum computing but will also be positioned to effectively capitalise on this revolutionary technology as it progresses towards commercial viability. This proactive engagement will be crucial for businesses looking to harness the competitive advantages offered by quantum computing, ensuring that they are not left behind in what promises to be a transformative period in technological history.

Advice for researchers and students

The researchers underscore the importance of a comprehensive educational foundation in quantum technologies for students aiming to excel in this field. They stress that the rapidly evolving nature of quantum computing and its applications across various industries necessitates not only in-depth, specialised knowledge but also a versatile and adaptable skill set. To truly capitalise on the opportunities that quantum technologies are expected to bring, students should immerse themselves in a broad curriculum that covers the fundamental principles of quantum mechanics, quantum computing algorithms, and their practical implications in real-world scenarios. Moreover, the researchers advocate for an interdisciplinary approach to learning, encouraging students to explore how quantum technologies can be applied to diverse sectors such as cybersecurity, pharmaceuticals, finance, and materials science. For instance, in cybersecurity, quantum computing promises to revolutionise encryption methods, while in pharmaceuticals, it could accelerate drug discovery by simulating molecular interactions at an unprecedented scale and speed. By understanding these applications, students will be better prepared for a wider range of career opportunities, from quantum software development to strategic roles guiding the adoption of quantum technologies in traditional businesses. They are also advised to keep their career paths flexible, adapting to industry needs as they evolve in line with the progression of quantum technologies. This might mean continuing education through advanced degrees, participating in workshops and conferences focused on quantum computing, or engaging in hands-on projects and internships that provide practical experience with quantum systems. The researchers also suggest that students should keep abreast of emerging trends and breakthroughs in the field by means of academic journals, professional networks, and collaboration with peers and mentors. By cultivating a robust, flexible educational background, and staying informed about industry developments, students will be well-prepared to navigate the challenges and exploit the opportunities that arise as quantum technologies continue to develop and integrate into various aspects of the global economy. This preparation will not only make them valuable assets in their future workplaces but will also enable them to make their own contributions to the advancement of quantum technologies.

Discussion, conclusions and future research

Although QC technology is at an early stage of development, there are high hopes that it will solve complex problems and thereby enable innovations in physics, chemistry, medical treatments, cryptography, operations management and even market research, among others, which will help to sustain a competitive advantage for both companies and nations (Atik & Jeutner, 2021; Carberry et al., 2021). The bibliometric study has revealed the existence of four groups of countries vying for dominance of this technology: one led by the United States (with Canada and Spain), the second led by China (with India), the third by the United Kingdom and the fourth by Australia.

QC knowledge and technology are evolving in a complex manner, with interactions across different areas that can be classified into five categories: (1) the development of equipment, which includes the evolution of circuits and connection bridges, among other hardware-related elements; (2) algorithms, whether the design of new algorithms or the adaptation of old ones, as well as their implementation; (3) the modelling that can be applied with this technology, ranging from causal-relationship and simulation models to combinatorial, probabilistic and optimisation models; (4) applications of the technology to different fields of science and industry, such as physics, chemistry, renewable energies and even macroeconomic forecasting, among others; (5) applications in tools that can be used in market research, such as machine learning, quantum computing, image processing, big data and artificial intelligence.

The practice of market research is at a crossroads, as the e-commerce and virtual environment has simultaneously brought about increases in the volume of customers around the globe and a boom in the amount and complexity of information disseminated through social networks, for example in the form of comments about or ratings of establishments, businesses and products. This environment makes market research increasingly complex, chaotic and costly (Nunan & Di Domenico, 2013). However, the combination of AI, big data and quantum computing is expected to offer a revolutionary way to practise personalised market research that is cost-effective and based on empirical evidence. In addition, the ethical, legal, cultural and political issues that might arise from technological solutions enabling personalised marketing based on customised customer research will need to be addressed. For example, the analysis of market segmentation has been one of the main objectives pursued by the adoption of AI tools in data processing, content analysis and decision support systems (Saez-Ortuño et al., 2023a). Thus, with the increased analytical capacity that QC can offer, this methodology can continue to lead the way for other market research techniques and practices.

Concretely, several promising future lines of research can be delineated, which aim to leverage the unique capabilities of QC to enhance analytical precision, speed, and depth in understanding market dynamics:

(1) Quantum algorithms for consumer behaviour analysis: this line of research focuses on developing and optimising quantum algorithms that can analyse consumer behaviour more efficiently than classical algorithms. By leveraging the parallelism of quantum computing, researchers can model complex consumer interactions and preferences at a granular level, potentially transforming the way businesses predict buying patterns and personalise marketing strategies.
(2) Enhancement of real-time market simulations: quantum computing could drastically improve the simulation capabilities of market research. Future research could focus on creating quantum-enhanced simulation models that market researchers can use to test and predict market responses to different business strategies in real time, providing a competitive edge in rapidly changing markets.
(3) Quantum-enhanced machine learning for market segmentation: this research area would explore the integration of quantum computing with machine learning techniques to perform market segmentation. The goal would be to develop quantum machine learning models that can quickly process vast amounts of data to identify nuanced market segments, thereby enabling more targeted marketing and product development.
(4) Development of quantum-resistant cryptography for market research data: as market research increasingly relies on sensitive consumer data, protection of this information becomes paramount. Research into quantum-resistant cryptographic methods will be crucial to safeguard data against potential quantum computing threats, ensuring that consumer information remains secure in a post-quantum world.
(5) Cross-disciplinary studies on the impact of QC on the social sciences: investigation of how quantum computing could influence various aspects of the social sciences, including market research, will generate a broader understanding of its potential impacts. This could involve collaborative research between quantum physicists, data scientists, and social scientists to explore new methodologies for data collection and analysis.
(6) Quantum computing infrastructure for data handling and processing: research focused on developing robust quantum computing infrastructures tailored to market research applications. This includes both hardware improvements, such as more stable qubits and scalable quantum processors, and software advancements, such as user-friendly quantum programming environments and data handling frameworks.

These research initiatives seek not only to develop the underlying quantum technology but also to tailor its capabilities to meet the specific needs and challenges of modern market research. By financially nurturing these lines of research, the market research industry could be at the forefront of adopting and benefiting from the advancements in quantum computing. Although, as indicated above, QC technology is still in its infancy, researchers and R&D project managers know that the provision of financial resources to a line of research can be an accelerating factor in the development and diffusion of any technology (Roshani et al., 2021). This study brings together different lines of research that are being carried out around QC (e.g. quantum circuits and processors, software development, application to machine learning, etc.). Therefore, which of these innovations develops further will depend on the line that is financially nurtured.

Limitations

This study presents some interesting results. However, it also has several limitations. First, although a much more specific "marketing research" query was originally proposed, the use of broader, more ambiguous terms such as Business (All Fields) and quantum computing may have returned articles that are overly generic and only indirectly related to business. Second, the co-occurrence of author keywords shows a scatter map in the form of concentric waves without any dominant line. This indicates that the literature under review is in a state of constant evolution. Clearer lines are likely to emerge in the not-too-distant future that will illustrate research trends more accurately. Third, as a dominant line is formed, the lattice structure might change and even feature different networks of contacts. Hence the need to monitor developments as more data becomes available, in order to pin down the directions taken by QC research and their possible effects on the instruments used in market research.

CRediT authorship contribution statement

Laura Sáez-Ortuño: Writing – original draft, Validation, Project administration, Investigation, Conceptualization. Ruben Huertas-Garcia: . Santiago Forgas-Coll: Writing – review & editing, Writing – original draft, Supervision, Methodology, Formal analysis. Javier Sánchez-García: Writing – review & editing, Validation, Project administration. Eloi Puertas-Prats: Writing – review & editing, Software, Resources, Data curation.

We appreciate the invaluable collaboration of the following scientists: Pol Forn Díaz (Group Leader, Quantum Computing Technology Group at IFAE; CTO and co-founder at Qilimanjaro Quantum Tech), Artur Garcia Saez (Leading Researcher at Barcelona Supercomputing Center), Eugeni Graugés (Dean at Facultat de Física, Universitat de Barcelona), Bruno Juliá Díaz (Full Professor, Dpt. Quantum Physics and Astrophysics, Facultat de Física, Universitat de Barcelona), Almudena Justo Martínez and Alejandro Borrallo Rentero (Fujitsu International Quantum Center), Román Orús (Cofounder, Multiverse Computing), Marta Pascual Estarellas (Chief Executive Officer at Qilimanjaro Quantum Tech) and Axel Pérez Obiol Castaneda (Visitor at Barcelona Supercomputing Center).