The coronavirus disease caused by SARS-CoV-2 is a pandemic with millions of confirmed cases around the world and a high death toll. Currently, real-time polymerase chain reaction (RT-PCR) is the standard diagnostic method for determining COVID-19 infection. Various failures in detecting the disease from laboratory samples have raised doubts about the characterisation of the infection and its spread to contacts.

In clinical practice, chest radiography (CXR) and chest computed tomography (CT) are extremely helpful and have been widely used in the detection and diagnosis of COVID-19. CXR is the most common and widely available diagnostic imaging technique; however, reading by less qualified personnel, who are often overloaded with work, leads to a high number of errors. Chest CT can be used for triage, diagnosis, and assessment of severity, progression and response to treatment. Artificial intelligence (AI) algorithms have shown promise in image classification, showing that they can reduce diagnostic errors by at least matching the diagnostic performance of radiologists.

This review shows how AI applied to thoracic radiology speeds up and improves diagnosis, making it possible to optimise the workflow of radiologists. It can provide an objective evaluation and reduce subjectivity and variability. AI can also help optimise resources and increase efficiency in the management of COVID-19 infection.

Coronavirus disease (COVID-19), which is caused by severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2), is a pandemic with millions of confirmed cases and hundreds of thousands of deaths worldwide.1,2 This unprecedented global health crisis and the major challenges involved in controlling the virus prompted the World Health Organization to declare it a pandemic on 11 March 2020.2

Viral detection using real-time polymerase chain reaction (RT-PCR) is currently the standard method for diagnosing COVID-19 infection,3,4 but in the current emergency, the low sensitivity of the test (60–70%), asymptomatic infection (17.9–33.3%), insufficient availability of sample collection kits and laboratory testing supplies, and equipment overload have made it impossible to test all suspected patients. Given the highly contagious nature of the virus, this has jeopardised the diagnosis of COVID-19 infection and hampered contact tracing for unconfirmed cases.5–8

In clinical practice, easily accessible imaging tests such as chest X-ray (CXR) and computed tomography (CT) are extremely helpful and have been widely used for the detection and diagnosis of COVID-19. In China, numerous cases were identified as suspected COVID-19 on the basis of CT findings.9–14 Although CT has proven to be the more sensitive imaging technique (showing abnormalities in 96% of COVID-19 patients) and can reveal findings even before infection becomes detectable by RT-PCR, CXR is more accessible, does not require patient transfer, involves a lower radiation dose and costs considerably less.15,16

Given the limited number of available radiologists and the high volume of imaging procedures, accurate and fast artificial intelligence (AI) models can help provide patients with timely medical care.17 AI, particularly deep learning (DL), is an emerging technology in medical imaging that could actively contribute to combating COVID-19.18

The objective of this article is to review the literature published up to May 2020 on the main contributions of AI to the conventional chest imaging tests used in COVID-19.

AI and COVID-19 imaging tests

The term AI describes various technologies that perform processes mimicking human intelligence and cognitive functions. DL is characterised by the use of deep neural networks (many layers, each with many neurons) that are trained on large amounts of data. Computer algorithms based on convolutional neural networks (CNNs, a machine learning and processing paradigm modelled on the visual system of animals) have shown promise in image classification, in the segmentation of different entities and in the recognition of objects within images; a familiar example is facial recognition for security applications. Programming these networks does not require a well-defined solution to the problem. In imaging-based medical diagnosis, for example, there is no explicitly known solution, only numerous images that human experts have labelled with a specific diagnosis. The algorithms are trained on a set of examples whose output (outcome or label) is already known; image recognition systems learn from these outcomes and adjust their parameters to fit the input data. Once the model has been properly trained and its internal parameters have stabilised, it can make appropriate predictions for new, previously unseen data whose outcome is not yet known. These DL algorithms are capable of autonomous feature learning.19–22
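The supervised training loop described above (labelled examples in, parameters adjusted, predictions on unseen data out) can be sketched in a few lines. This is a minimal illustration, not a CNN: it assumes a single logistic "neuron" trained by gradient descent on two hypothetical, made-up image features, which is enough to show how parameters are fitted to known labels and then applied to new cases.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train(examples, labels, lr=0.5, epochs=2000):
    """Fit weights w and bias b to labelled examples by gradient descent on the
    cross-entropy loss (a single logistic neuron, not a deep network)."""
    n_features = len(examples[0])
    w = [0.0] * n_features
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(examples, labels):
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # derivative of the cross-entropy loss w.r.t. the logit
            for i in range(n_features):
                grad_w[i] += err * x[i]
            grad_b += err
        # Averaged gradient step: the parameters move to better fit the known labels
        w = [wi - lr * gw / len(examples) for wi, gw in zip(w, grad_w)]
        b -= lr * grad_b / len(examples)
    return w, b

def predict(w, b, x) -> float:
    """Probability of the positive class for a new, previously unseen case."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

# Hypothetical training set: two made-up features per image, label 1 = positive
X = [[0.05, 0.10], [0.10, 0.20], [0.08, 0.15], [0.40, 0.70], [0.55, 0.80], [0.35, 0.90]]
y = [0, 0, 0, 1, 1, 1]
w, b = train(X, y)
print(predict(w, b, [0.50, 0.75]), predict(w, b, [0.06, 0.12]))
```

A real CNN replaces the hand-picked features with convolutional layers that learn their own features from the pixels, but the fit-then-predict structure is the same.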

Studies of AI-supported image analysis have shown that the combination of physician and AI reduces diagnostic errors more effectively than either alone.23

There are several ways to perform segmentation, i.e. separating the lesion from the adjacent tissue to “cut out” the region of interest (ROI). The process carried out can be:

1. Manual (performed by the user): prone to intra- and inter-observer variability, and very laborious and time-consuming.
2. Semi-automatic: the volume is extracted automatically and the user edits the contours manually.
3. Automatic: fast and precise, performed by DL. Training a robust image segmentation network requires a sufficiently large volume of labelled data, which is not always readily accessible.

Segmentation is an essential step in image processing and analysis for the assessment and quantification of COVID-19, and the segmented regions can be used to extract characteristics useful for diagnosis or other applications. In COVID-19, complete data are often unavailable, and human knowledge may be required in the training loop, which involves interaction with radiologists.24
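As an illustration of how a segmented region feeds later analysis, the sketch below takes an image and a binary ROI mask (as any of the three segmentation approaches might produce) and extracts a few simple characteristics. The pixel values and mask are hypothetical toy data, and the chosen features (area, area fraction, mean intensity) are only examples of what can be derived from an ROI.

```python
def region_features(image, mask):
    """Extract simple characteristics from a segmented ROI.

    image: 2D list of pixel values (e.g. CT attenuation in HU)
    mask:  2D list of 0/1 flags with the same shape, 1 = inside the ROI
    """
    values = [v for img_row, m_row in zip(image, mask)
              for v, m in zip(img_row, m_row) if m]
    total = sum(len(row) for row in mask)
    if not values:
        return {"area_px": 0, "area_fraction": 0.0, "mean": None}
    return {
        "area_px": len(values),                # size of the region in pixels
        "area_fraction": len(values) / total,  # share of the whole image
        "mean": sum(values) / len(values),     # mean intensity inside the ROI
    }

# Hypothetical 3x3 slice (HU) and a mask produced by some segmentation step
image = [[-850, -820, -300],
         [-860, -400, -350],
         [-870, -880, -890]]
mask = [[0, 0, 1],
        [0, 1, 1],
        [0, 0, 0]]
feats = region_features(image, mask)
print(feats)
```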

CXR-based detection of COVID-19

CXR is the most common and widespread diagnostic imaging method; however, reading of X-ray images by less experienced and often overloaded staff can lead to a high error rate. During the pandemic, 20% of outpatient CXRs initially read as ‘normal’ were reportedly changed to ‘abnormal’ after a second reading.25

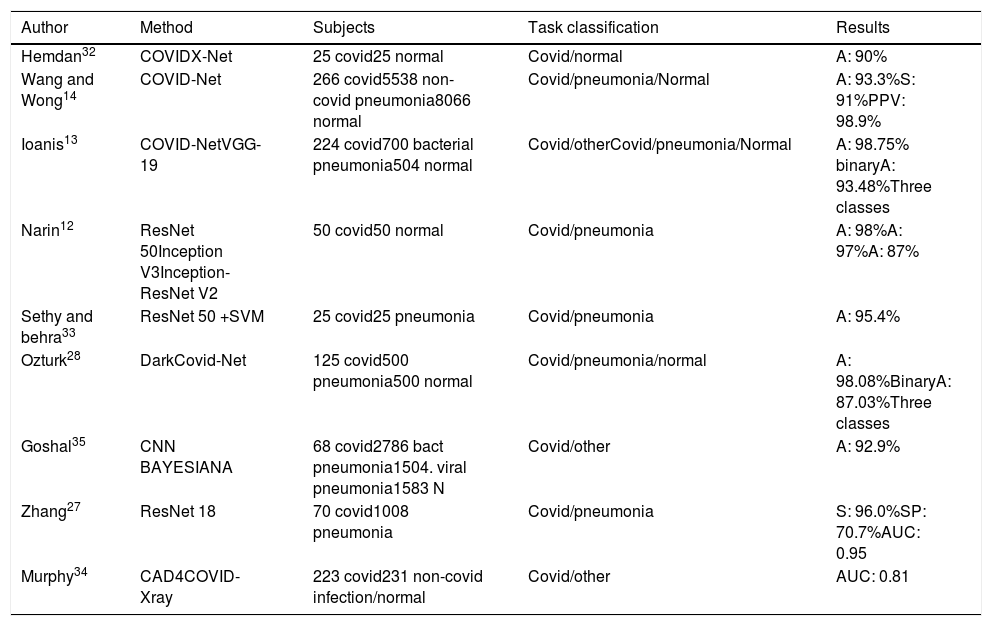

Although CXR is the first-line imaging method used to investigate cases of COVID-19, its sensitivity is lower than that of CT, since images may appear normal in early or mild disease: 69% of patients have an abnormal CXR at initial presentation, vs. 80% at some point after admission.26 The use of AI helps increase diagnostic sensitivity, as shown in Table 1.

Table 1. Artificial intelligence applied to chest X-ray.

| Author | Method | Subjects | Classification task | Results |
|---|---|---|---|---|
| Hemdan32 | COVIDX-Net | 25 COVID; 25 normal | COVID/normal | A: 90% |
| Wang and Wong14 | COVID-Net | 266 COVID; 5538 non-COVID pneumonia; 8066 normal | COVID/pneumonia/normal | A: 93.3%; S: 91%; PPV: 98.9% |
| Ioanis13 | COVID-Net; VGG-19 | 224 COVID; 700 bacterial pneumonia; 504 normal | COVID/other; COVID/pneumonia/normal | A: 98.75% (binary); A: 93.48% (three classes) |
| Narin12 | ResNet 50; Inception V3; Inception-ResNet V2 | 50 COVID; 50 normal | COVID/pneumonia | A: 98%; A: 97%; A: 87% |
| Sethy and Behera33 | ResNet 50 + SVM | 25 COVID; 25 pneumonia | COVID/pneumonia | A: 95.4% |
| Ozturk28 | DarkCovid-Net | 125 COVID; 500 pneumonia; 500 normal | COVID/pneumonia/normal | A: 98.08% (binary); A: 87.03% (three classes) |
| Ghoshal35 | Bayesian CNN | 68 COVID; 2786 bacterial pneumonia; 1504 viral pneumonia; 1583 normal | COVID/other | A: 92.9% |
| Zhang27 | ResNet 18 | 70 COVID; 1008 pneumonia | COVID/pneumonia | S: 96.0%; SP: 70.7%; AUC: 0.95 |
| Murphy34 | CAD4COVID-Xray | 223 COVID; 231 non-COVID infection/normal | COVID/other | AUC: 0.81 |

A: accuracy; S: sensitivity; SP: specificity; PPV: positive predictive value; AUC: area under the ROC curve.
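The metrics reported in the table all derive from the same 2×2 confusion matrix, with COVID-19 as the positive class. The sketch below computes them from hypothetical counts (not taken from any cited study) chosen to mimic an imbalanced test set, where few COVID-19 cases sit among many other images.

```python
def diagnostic_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Common diagnostic metrics from a 2x2 confusion matrix
    (positive class = COVID-19)."""
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),  # A
        "sensitivity": tp / (tp + fn),                # S (recall)
        "specificity": tn / (tn + fp),                # SP
        "ppv": tp / (tp + fp),                        # positive predictive value
        "npv": tn / (tn + fn),                        # negative predictive value
    }

# Hypothetical counts: 100 COVID-19 images and 900 other images
m = diagnostic_metrics(tp=90, fn=10, tn=810, fp=90)
print(m)
```

With these made-up counts, accuracy, sensitivity and specificity are all 0.9, yet the PPV is only 0.5, because the 90 false positives drawn from the large negative class equal the true positives. This is one reason accuracy alone can be misleading on small or imbalanced CXR datasets.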

CXR may play an important role in triage for COVID-19, particularly in low-resource settings. Zhang27 presented a model whose diagnostic performance was similar to that of CT-based methods. CXR can therefore be considered an effective tool for rapid, low-cost detection of COVID-19, although some limitations, such as the high number of false-positive results, still need to be addressed. Ozturk28 was able to diagnose COVID-19 in seconds from raw CXR images, using heat maps assessed by an expert radiologist to localise the regions most relevant to the model's decision.

Most current studies use CXR images from small databases, raising questions about the robustness of the methods and whether they generalise to other medical facilities. In this regard, Hurt29 applied the same algorithm to CXR images obtained in different hospitals and found a surprising degree of generalisation and robustness of the DL approach, despite considerable variation in X-ray technique between the outpatient and inpatient settings. Although these results are not an exhaustive proof of cross-hospital performance, they imply that cross-institutional generalisation is feasible, in contrast to what is generally perceived in the field.30

In addition, most AI systems developed so far are closed-source and not available for public use. Wang14 used COVID-Net, an open-source model developed and tailored for detecting COVID-19 cases from CXR images, and applied it to COVIDx, an open-access, constantly updated benchmark dataset with the largest number of publicly available COVID-19-positive cases. The prototype was built to make one of three predictions: non-COVID-19 infection, COVID-19 viral infection or no infection (normal), thereby helping to prioritise PCR testing and decide which treatment strategy to employ. COVID-Net had lower computational complexity and higher sensitivity than other algorithms, with a screening accuracy of 92.4%. Using the same public database, which contained fewer than 100 COVID-19 images, Minaee31 trained four neural networks to identify COVID-19 on CXR images, most of which achieved a sensitivity of 97% (±5%) and a specificity of around 90%. Other studies describe classification rates of up to 98%.12,13,32,33

The use of AI seeks to at least match the diagnostic performance of radiologists. Murphy34 demonstrated that the performance of an AI system in detecting COVID-19 pneumonia was comparable to that of six independent radiologists, at an operating point of 85% sensitivity and 61% specificity, with RT-PCR as the reference standard for the presence or absence of SARS-CoV-2 infection. The AI system classified CXR images as COVID-19 pneumonia with an AUC of 0.81, significantly outperforming each reader (p<0.001) at their highest possible sensitivities.
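The AUC and the "operating point" mentioned above are two views of the same model scores: the AUC summarises ranking quality over all thresholds, while an operating point fixes one threshold and reports the resulting sensitivity and specificity. A minimal sketch, assuming hypothetical model scores for five positive and five negative cases, and using the Mann-Whitney formulation of the AUC:

```python
def auc(scores_pos, scores_neg):
    """AUC as the probability that a random positive case scores higher than a
    random negative one, ties counting 1/2 (Mann-Whitney U / (n_pos * n_neg))."""
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(scores_pos) * len(scores_neg))

def operating_point(scores_pos, scores_neg, target_sensitivity):
    """Highest threshold whose sensitivity reaches the target.
    A case is called positive if score >= threshold.
    Returns (threshold, sensitivity, specificity), or None if unreachable."""
    for t in sorted(set(scores_pos + scores_neg), reverse=True):
        sens = sum(s >= t for s in scores_pos) / len(scores_pos)
        if sens >= target_sensitivity:
            spec = sum(s < t for s in scores_neg) / len(scores_neg)
            return t, sens, spec
    return None

# Hypothetical model outputs (probability of COVID-19)
pos = [0.9, 0.8, 0.75, 0.6, 0.4]
neg = [0.7, 0.5, 0.3, 0.2, 0.1]
print(auc(pos, neg), operating_point(pos, neg, 0.8))
```

Raising the target sensitivity lowers the threshold and, in general, costs specificity, which is the trade-off behind reporting a high-sensitivity operating point such as 85% sensitivity with 61% specificity.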

Many DL methods focus exclusively on improving classification or prediction accuracy without quantifying the uncertainty of the decision. Knowing the degree of confidence in a diagnosis is essential for doctors to trust the technology. Ghoshal35 proposed a Bayesian convolutional neural network (BCNN) that combines prior knowledge with the observed data to estimate diagnostic uncertainty when predicting COVID-19, allowing stronger conclusions to be drawn. Bayesian inference improved the detection accuracy of the standard model from 85.7% to 92.9%. The authors also generated relevance maps to show the image locations driving the predictions and improve understanding of the results, facilitating more informed decision-making.
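One widely used approximation to a Bayesian neural network is Monte Carlo dropout: the model is run many times with random weights dropped, and the spread of the resulting probabilities is read as uncertainty. The sketch below assumes this technique on a toy logistic model with hypothetical weights; it is not necessarily the exact procedure used by Ghoshal, whose BCNN may differ in detail.

```python
import math
import random

def mc_predictions(weights, bias, x, n_samples=200, drop_p=0.5, seed=0):
    """Monte Carlo dropout on a toy logistic model: each stochastic forward pass
    randomly drops input weights (inverted dropout) and records the probability."""
    rng = random.Random(seed)
    probs = []
    for _ in range(n_samples):
        z = bias
        for w, xi in zip(weights, x):
            if rng.random() >= drop_p:        # keep this weight
                z += w * xi / (1.0 - drop_p)  # rescale kept weights
        probs.append(1.0 / (1.0 + math.exp(-z)))
    return probs

def summarise(probs):
    """Mean predicted probability, its standard deviation across passes,
    and the predictive entropy of the mean (in bits; 1.0 = maximally unsure)."""
    mean = sum(probs) / len(probs)
    var = sum((p - mean) ** 2 for p in probs) / len(probs)
    eps = 1e-12
    entropy = -(mean * math.log2(mean + eps)
                + (1 - mean) * math.log2(1 - mean + eps))
    return mean, math.sqrt(var), entropy

# Hypothetical weights and input features for one image
probs = mc_predictions([2.0, -1.0, 0.5], 0.1, [1.0, 0.3, 0.8])
mean, std, entropy = summarise(probs)
print(round(mean, 3), round(std, 3), round(entropy, 3))
```

A prediction with a similar mean but a large standard deviation or entropy would be flagged as less trustworthy and could be referred for closer human review.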

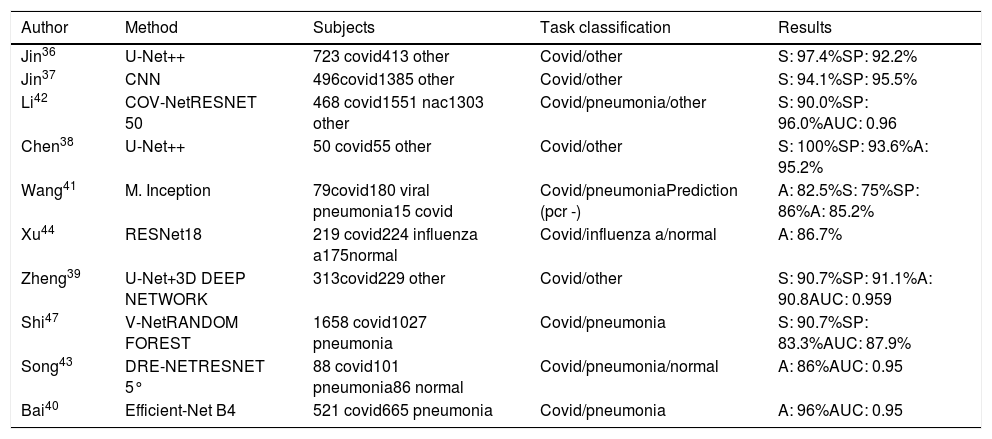

Chest CT-based detection of COVID-19

Chest CT can be used to answer various questions in the hospital setting: it can help triage patients, aid diagnosis, and assess disease severity, progression and response to treatment based on quantification. Table 2 shows studies in which CT scans were used for the diagnosis of COVID-19.

Table 2. Artificial intelligence applied to thoracic CT images for diagnosis.

| Author | Method | Subjects | Classification task | Results |
|---|---|---|---|---|
| Jin36 | U-Net++ | 723 COVID; 413 other | COVID/other | S: 97.4%; SP: 92.2% |
| Jin37 | CNN | 496 COVID; 1385 other | COVID/other | S: 94.1%; SP: 95.5% |
| Li42 | COV-Net; ResNet 50 | 468 COVID; 1551 CAP; 1303 other | COVID/pneumonia/other | S: 90.0%; SP: 96.0%; AUC: 0.96 |
| Chen38 | U-Net++ | 50 COVID; 55 other | COVID/other | S: 100%; SP: 93.6%; A: 95.2% |
| Wang41 | M-Inception | 79 COVID; 180 viral pneumonia; 15 COVID | COVID/pneumonia; prediction (PCR−) | A: 82.5%; S: 75%; SP: 86%; A (PCR−): 85.2% |
| Xu44 | ResNet 18 | 219 COVID; 224 influenza A; 175 normal | COVID/influenza A/normal | A: 86.7% |
| Zheng39 | U-Net + 3D deep network | 313 COVID; 229 other | COVID/other | S: 90.7%; SP: 91.1%; A: 90.8%; AUC: 0.959 |
| Shi47 | V-Net; random forest | 1658 COVID; 1027 pneumonia | COVID/pneumonia | S: 90.7%; SP: 83.3%; AUC: 0.879 |
| Song43 | DRE-Net; ResNet 50 | 88 COVID; 101 pneumonia; 86 normal | COVID/pneumonia/normal | A: 86%; AUC: 0.95 |
| Bai40 | EfficientNet B4 | 521 COVID; 665 pneumonia | COVID/pneumonia | A: 96%; AUC: 0.95 |

CAP: community-acquired pneumonia; PCR−: negative initial RT-PCR; A: accuracy; S: sensitivity; SP: specificity; AUC: area under the ROC curve.

To achieve an early and rapid discriminatory diagnosis, i.e. to confirm or rule out disease, various systems use segmentation as a pre-classification step, achieving high accuracy and even shortening radiologists' reading times by 65%.36–39
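The two-stage structure described above (segment first, then classify from the segmented output) can be sketched with toy stand-ins for both stages. Everything here is hypothetical: the HU window used as a "segmenter" and the 10% lesion-fraction cut-off used as a "classifier" are illustrative assumptions, whereas the cited systems use trained networks such as U-Net++ for stage 1 and a CNN for stage 2.

```python
def segment_lesions(hu_slice, lung_mask, low=-750, high=-300):
    """Stage 1 (toy stand-in for a segmentation network): flag lung pixels whose
    attenuation lies in an assumed ground-glass-to-consolidation HU window."""
    return [[1 if m and low <= v <= high else 0 for v, m in zip(row, mrow)]
            for row, mrow in zip(hu_slice, lung_mask)]

def classify(hu_slice, lung_mask, cutoff=0.10):
    """Stage 2 (toy stand-in for a classifier): decide from the segmented lesion
    fraction rather than from the raw image. Returns (label, fraction)."""
    lesion = segment_lesions(hu_slice, lung_mask)
    lung_px = sum(map(sum, lung_mask))
    fraction = sum(map(sum, lesion)) / lung_px if lung_px else 0.0
    return ("suspicious" if fraction >= cutoff else "not suspicious"), fraction

# Hypothetical 3x3 slice (HU) with every pixel inside the lung mask
lung = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
hu = [[-850, -860, -500],
      [-870, -600, -880],
      [-890, -900, -910]]
print(classify(hu, lung))
```

Restricting the classifier to the segmented lung and lesion regions is what lets these pipelines ignore irrelevant anatomy and, as reported, speed up reading.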

COVID-19 and non-COVID-19 pneumonia share similar CT characteristics, which makes accurate differentiation challenging. Bai's model40 achieved higher test accuracy (96% vs. 85%, p<0.001), sensitivity (95% vs. 79%, p<0.001) and specificity (96% vs. 88%, p=0.002) than the radiologists. In addition, with AI assistance the radiologists achieved a higher average test accuracy (90% vs. 85%, p<0.001), sensitivity (88% vs. 79%, p<0.001) and specificity (91% vs. 88%, p=0.001). Comparing their model with two expert radiologists, Wang41 found that the model showed much greater accuracy and sensitivity. Screening takes about 10s per case and can be performed remotely via a shared public platform. The method differentiated COVID-19 from viral pneumonia with an accuracy of 82.5%, vs. 55.8% and 55.4% for the two radiologists, and correctly predicted COVID-19 with an accuracy of 85.2% on CT images from patients whose initial microbiological tests were negative.

To distinguish COVID-19 from community-acquired pneumonia (CAP), Li42 also included CAP and other non-pneumonia CT examinations to test the robustness of the model, achieving a sensitivity of 90% and a specificity of 96%, with an AUC of 0.96 (p<0.001). A deep learning method used by Song43 achieved an accuracy of 86.0% in classifying the type of pneumonia and an accuracy of approximately 94% in distinguishing pneumonia from its absence. Xu44 achieved an overall accuracy of 86.7% in distinguishing COVID-19 pneumonia from influenza-A viral pneumonia and healthy cases using deep learning techniques.

Singh45 used multi-objective differential evolution to tune the initial parameters of a CNN, with the aim of improving workflow efficiency and saving time for healthcare professionals. Li et al.,46 using a system installed in their hospital, improved detection efficiency by alerting technicians within 2 min of the CT examination, and noted that automatic lesion segmentation on CT was also helpful for the quantitative evaluation of COVID-19 progression.

Shi47 took a different approach, segmenting CT images and manually computing various quantitative features, which were used to train the model according to infection size and proportion. This method yielded a sensitivity of 90.7%, although the detection rate was low in patients with a small infection size.

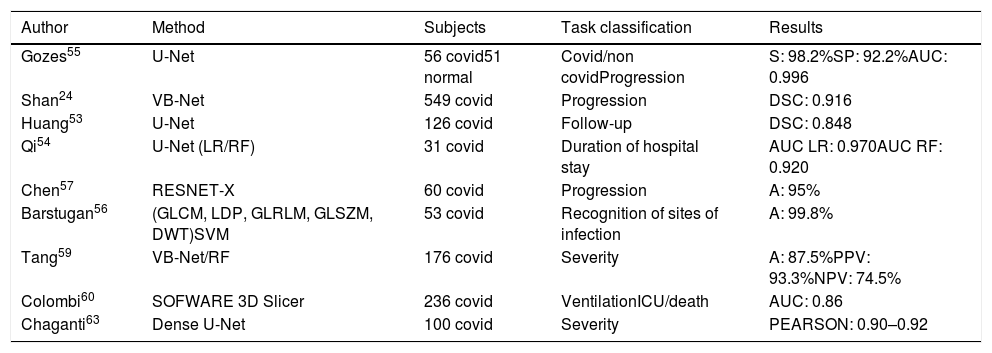

Quantification

Accurately assessing the extent of disease is a challenge. In addition, follow-up at intervals of 3–5 days is often recommended to assess disease progression. In the absence of computerised quantification tools, radiological reports rely on qualitative assessments and rough descriptions of the infected areas. Manual calculation of the scores poses a dual challenge: the affected regions are either recorded accurately, which is time-consuming, or assessed subjectively, which results in low reproducibility. Respiratory severity scales recently proposed for COVID-19 have shown a correlation between disease progression and severity.10,48–52

Accurate and automated score measurements would address speed and reproducibility issues, as shown in Table 3.
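Once lesions and lobes are segmented automatically, a severity score reduces to arithmetic on voxel counts. The sketch below assumes one commonly used lobar CT scheme (each of the five lobes scored 0–5 by percentage involvement: 0 none, 1 <5%, 2 5–25%, 3 26–49%, 4 50–75%, 5 >75%, for a total of 0–25); the scales cited above may define their bands differently, and the voxel counts are hypothetical.

```python
def lobe_score(percent: float) -> int:
    """Map % involvement of one lobe to a 0-5 score (assumed banding)."""
    if percent <= 0:
        return 0
    if percent < 5:
        return 1
    if percent <= 25:
        return 2
    if percent < 50:
        return 3
    if percent <= 75:
        return 4
    return 5

def total_severity(lesion_voxels: dict, lobe_voxels: dict) -> int:
    """Total score (0-25) from automatically segmented voxel counts per lobe."""
    return sum(lobe_score(100.0 * lesion_voxels[lobe] / lobe_voxels[lobe])
               for lobe in lobe_voxels)

# Hypothetical voxel counts for the five lobes
lobes = {"RUL": 1000, "RML": 500, "RLL": 1200, "LUL": 1100, "LLL": 1000}
lesions = {"RUL": 0, "RML": 10, "RLL": 360, "LUL": 220, "LLL": 800}
print(total_severity(lesions, lobes))
```

Because the same segmentation always yields the same score, this kind of automated calculation addresses the reproducibility problem of subjective visual scoring.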

Table 3. Artificial intelligence applied to thoracic CT images for quantification.

| Author | Method | Subjects | Classification task | Results |
|---|---|---|---|---|
| Gozes55 | U-Net | 56 COVID; 51 normal | COVID/non-COVID; progression | S: 98.2%; SP: 92.2%; AUC: 0.996 |
| Shan24 | VB-Net | 549 COVID | Progression | DSC: 0.916 |
| Huang53 | U-Net | 126 COVID | Follow-up | DSC: 0.848 |
| Qi54 | U-Net (LR/RF) | 31 COVID | Duration of hospital stay | AUC (LR): 0.970; AUC (RF): 0.920 |
| Chen57 | ResNet-X | 60 COVID | Progression | A: 95% |
| Barstugan56 | GLCM, LDP, GLRLM, GLSZM, DWT features + SVM | 53 COVID | Recognition of sites of infection | A: 99.8% |
| Tang59 | VB-Net/RF | 176 COVID | Severity | A: 87.5%; PPV: 93.3%; NPV: 74.5% |
| Colombi60 | 3D Slicer software | 236 COVID | Ventilation; ICU/death | AUC: 0.86 |
| Chaganti63 | Dense U-Net | 100 COVID | Severity | Pearson r: 0.90–0.92 |

DSC: Dice similarity coefficient; LR: logistic regression; RF: random forest; NPV: negative predictive value; GLCM: grey-level co-occurrence matrix; LDP: local directional pattern; GLRLM: grey-level run-length matrix; GLSZM: grey-level size zone matrix; DWT: discrete wavelet transform.

Using an automated DL-based quantification tool, Huang53 compared the percentage of lung opacification on baseline and follow-up CT images to monitor progression. In patients ranging from mild to critical disease, opacification percentages differed significantly between clinical groups at baseline and generally increased significantly between baseline and follow-up CT. The results were reviewed by two radiologists; the good agreement between the system and the radiologists, and between the radiologists themselves (kappa coefficient 0.75), suggests that such tools could potentially eliminate subjectivity in the assessment of pulmonary findings in COVID-19.
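The kappa coefficient quoted above measures agreement corrected for the agreement expected by chance. A minimal sketch of unweighted Cohen's kappa follows, applied to hypothetical severity grades from two readers; whether the cited study used an unweighted or a weighted variant is not stated here.

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa: (observed - expected) / (1 - expected)."""
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: product of each rater's marginal frequency per category
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    return (observed - expected) / (1 - expected)

# Hypothetical grades assigned by two readers to the same 10 scans
a = ["mild", "mild", "moderate", "severe", "mild",
     "moderate", "severe", "mild", "moderate", "severe"]
b = ["mild", "moderate", "moderate", "severe", "mild",
     "moderate", "severe", "mild", "mild", "severe"]
print(round(cohens_kappa(a, b), 3))
```

Here the readers agree on 8 of 10 scans (0.80), but chance alone would produce 0.34 agreement given their marginal frequencies, so kappa is (0.80 − 0.34)/(1 − 0.34) ≈ 0.70.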

Qi54 developed models to predict the length of hospital stay in patients with pneumonia, distinguishing short-term (≤10 days) from long-term (>10 days) stays. Depending on the method used, these models predicted length of stay effectively, with an AUC between 0.92 and 0.97, a sensitivity of 0.89–1.0 and a specificity of 0.75–1.0.

Other quantification systems, in addition to providing excellent accuracy, improve the ability to distinguish between different COVID-19 presentations, can analyse large numbers of CT scans and can measure progression throughout follow-up.55–58 Manual assessment of severity is known to delay treatment planning, and visual quantification of disease extent on CT correlates with clinical severity.52 Tang59 therefore proposed a model to classify COVID-19 severity as non-severe or severe. A total of 63 quantitative features were computed, including the volume of ground-glass opacity (GGO) regions and its ratio to total lung volume, a ratio found to correlate closely with severity.

Using a similar principle, Colombi60 quantified well-aerated lung at baseline chest CT, looking for predictors of ICU admission or death. Well-aerated lung was quantified both visually (%V-WAL) and with open-source software (%S-WAL). Involvement of four or more lobes was more frequent in patients who were admitted to the ICU or died than in those who were not (16% vs. 6%, p<0.04). After adjustment for patient demographics and clinical parameters, well-aerated lung <73% at baseline chest CT was associated with the greatest probability of ICU admission or death (OR 5.4, p<0.001). Software-based quantification showed similar results, supporting the robustness of these findings. Although visual assessment of well-aerated lung showed good interobserver agreement (ICC 0.85) in a research setting, automated software measurement could, in theory, offer greater reliability in clinical practice. Quantification of well-aerated lung is known to be useful for estimating alveolar recruitment during ventilation and for predicting the prognosis of patients with acute respiratory distress syndrome (ARDS), and well-aerated lung regions can serve as a surrogate for functional residual capacity.61,62
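Software quantification of well-aerated lung amounts to counting the lung voxels that fall within an attenuation window and expressing them as a percentage of the segmented lung. The sketch below assumes a −950 to −700 HU window for well-aerated lung and applies the 73% cut-off mentioned above; the window is an assumption for illustration, the voxel values are hypothetical, and the software pipeline used in the study may differ.

```python
def percent_well_aerated(lung_hu, low=-950, high=-700):
    """%WAL: share of segmented lung voxels whose attenuation lies within an
    assumed well-aerated window (default -950 to -700 HU)."""
    if not lung_hu:
        raise ValueError("empty lung segmentation")
    aerated = sum(low <= v <= high for v in lung_hu)
    return 100.0 * aerated / len(lung_hu)

def high_risk(lung_hu, cutoff=73.0):
    """Flag a baseline scan whose %WAL falls below the cut-off."""
    return percent_well_aerated(lung_hu) < cutoff

# Hypothetical lung voxels: 60 well aerated, 30 opacified, 10 hyperlucent
voxels = [-900] * 60 + [-500] * 30 + [-980] * 10
print(percent_well_aerated(voxels), high_risk(voxels))
```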

Shan24 automatically quantified regions of interest (ROIs) and their volume ratios relative to the lung, assessing disease progression quantitatively by visualising the distribution of lesions and predicting severity from the percentage of infection (POI). The system was evaluated by comparing automatically segmented infection regions with manually delineated ones: total segmentation time fell dramatically from 4–5h to 4min, with excellent agreement (Dice similarity coefficient, $\mathrm{DSC} = \frac{2\,\mathrm{TP}}{2\,\mathrm{TP} + \mathrm{FP} + \mathrm{FN}} = 0.916$, where TP, FP and FN are true-positive, false-positive and false-negative voxels) and a mean POI estimation error of 0.3% for the whole lung.

Chaganti63 quantified abnormal areas using two severity measures: the overall proportion of lung volume affected by disease and the degree of involvement of each lung lobe. Because high-density opacities such as consolidation correlated with more severe symptoms, the measurements captured both the extent of disease and the presence of consolidation, providing valuable information for prioritising risk and predicting prognosis and response to treatment. Pearson's correlation coefficient between predicted and reference measurements was 0.90–0.92, with virtually no false positives. In addition, automated processing took 10s per case, compared with the 30min required for manual annotation. Other papers focused on response to treatment based on imaging findings over time, monitoring of changes during follow-up and the possibility of recovery from disease.
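The Dice similarity coefficient and the percentage of infection used to evaluate such systems can be computed directly from binary masks. A minimal sketch, assuming flattened 0/1 voxel lists and hypothetical toy masks:

```python
def dice(pred, truth):
    """Dice similarity coefficient between two binary masks (flattened 0/1
    lists): DSC = 2TP / (2TP + FP + FN). Two empty masks count as perfect."""
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if t and not p)
    denom = 2 * tp + fp + fn
    return 1.0 if denom == 0 else 2 * tp / denom

def percent_of_infection(infection_mask, lung_mask):
    """POI: infected voxels inside the lung as a percentage of lung voxels."""
    lung = sum(lung_mask)
    if lung == 0:
        raise ValueError("empty lung mask")
    infected = sum(1 for i, l in zip(infection_mask, lung_mask) if i and l)
    return 100.0 * infected / lung

# Hypothetical masks: manual (truth) vs automatic (pred) infection segmentation
truth = [1, 1, 1, 1, 0, 0, 0, 0]
pred = [1, 1, 1, 0, 1, 0, 0, 0]
lung = [1] * 8
print(dice(pred, truth), percent_of_infection(pred, lung), percent_of_infection(truth, lung))
```

In this toy case the automatic mask misses one infected voxel and adds one false one, giving TP = 3, FP = 1, FN = 1 and a DSC of 0.75, while its POI (50%) happens to match the manual POI exactly. This is why overlap (DSC) and volume (POI) errors are reported separately.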

Conclusions

AI applied to the interpretation of radiological images can streamline and improve diagnosis while optimising the workflow of radiologists. Although CXR is less sensitive than CT, efforts to improve its diagnostic yield are of the utmost interest, since it is the most common and widely used imaging method. AI makes it possible to monitor disease progression, provides an objective assessment based on quantitative information, reduces subjectivity and variability, and allows resources to be optimised through its potential ability to predict the length of hospital stay. Used as support in clinical practice and in conjunction with other diagnostic techniques, it could help increase efficiency in the management of COVID-19 infection.

Conflict of interest

The authors declare that they have no conflict of interest.