A new class of applications can now be envisaged with the emergence of both mobile ad hoc computing and ubiquitous computing, and such applications pose a number of new, unsolved challenges. Examples include automatic car control systems and air traffic control systems. Applications of this kind have real-time constraints and are characterised by being highly mobile and proactive, i.e. able to operate without human intervention. Moreover, they require multiple-source multicasting. However, current approaches mainly focus on supporting continuous flows in low-mobility environments where single-source multicasting is assumed. In this paper, we present the QoSMMANET (QoS Management in Mobile Ad hoc Networks) framework, which offers QoS support for real-time event systems in highly mobile ad hoc environments. Our approach is validated by a number of experiments carried out in the ns-2 network simulator.

Over the last few years we have seen the proliferation of embedded mobile systems such as mobile phones and PDAs. Ubiquitous computing, in which multiple cooperating, possibly embedded, controllers are used, is also taking off. A new kind of application can now be envisaged with the emergence of both mobile ad hoc computing and ubiquitous computing. Applications of this kind are characterised by being highly mobile and proactive, i.e. able to operate without human intervention. Examples include automatic car control systems, in which cars operate independently and cooperate with each other to avoid collisions. Another example is an air traffic control system in which thousands of aircraft are proactively coordinated to keep them at safe distances from each other, direct them during takeoff and landing, and avoid traffic congestion. Such applications have real-time constraints and use event-based communication.

Real-time event systems in mobile ad hoc environments pose a number of new, unsolved challenges. Such environments are highly unpredictable. A peer-to-peer communication model is generally used in ad hoc networks and, importantly, nodes act as routers to reach nodes that are out of transmission range. Communication delays between nodes may therefore vary unexpectedly as the number of hops to the destination changes. In addition, a geographical area may unexpectedly become congested, resulting in a lack of communication resources.

Moreover, periods of disconnection may occur at any time due to the conditions of the geographical area: the transmission signal can be severely affected by bad weather and by obstacles such as trees, hills and buildings. In the worst case, a network partition may occur, leaving one or more nodes unreachable.

Furthermore, since these applications are event-based, they require multiple-source multicasting: every node needs to transmit control information such as its position or a sudden emergency stop.

Current approaches mainly focus on supporting continuous flows in low-mobility environments where single-source multicasting is assumed. Hence, new efforts are required to support the kind of applications described above. In this paper, we present the QoSMMANET (QoS Management in Mobile Ad hoc Networks) framework, which offers QoS support for real-time event systems in highly mobile ad hoc environments. Node mobility is expressed in terms of node velocity. Our approach is validated by a number of experiments carried out in the ns-2 network simulator. Note that we do not focus on the specific case of Opportunistic Networks [1], but on the more general case of Mobile Ad Hoc Networks (MANETs). Unlike our setting, Opportunistic Networks assume sparsely scattered mobile nodes that tolerate long delays, and they target other kinds of applications such as disaster recovery [1] and wildlife monitoring [2]. Security issues are outside the scope of the QoSMMANET framework, whose main concerns are support for real-time transmissions and high node mobility in MANETs; they could, however, be addressed as proposed in [3].

The QoSMMANET framework addresses routing in the network using the proposed Probabilistic Flooding Protocol (see Section 3.1), which is based on a flooding mechanism that limits packet redundancy. This protocol requires no routing tables, and retransmission is performed with a pre-determined probability p.

The presented experimental work focuses on analysing the performance of the QoSMMANET framework with respect to parameters such as node velocity, number of nodes (network density) and coverage area. These parameters are described in Section 4.

The paper is structured as follows. Section 2 discusses related work. The QoSMMANET framework is presented in Section 3. The experimental scenarios are presented in Section 4. Section 5 presents the results and analysis of the experiments. Finally, conclusions are drawn in Section 6.

2 Related work

Several efforts have been carried out to provide QoS management support in mobile ad hoc networks. We divide the related research literature into categories based on unicast, multicast, and broadcast protocols.

Unicast Routing Protocols

Initial efforts concern signalling protocols in charge of resource reservation [4], [5], [6], [7], [8], [9]. Some of these approaches support both real-time and best-effort services, e.g. [6], [7], [8]. Adaptation mechanisms are also supported: for instance, in [7] flows are degraded when resources are scarce and scaled up when resources become available. In [6], an admission controller estimates the bandwidth available for real-time traffic. More recent efforts take into account the neighbourhood contention area, thereby avoiding false session admissions [9], [10], [11], [12].

Although these efforts are very valuable, they also have a number of drawbacks. Most approaches are limited to providing at most two service classes, i.e. real-time and best effort. Moreover, these protocols assume the use of a unicast routing protocol such as AODV, which is not efficient in high-mobility environments since established routes may rapidly become invalid. The reviewed approaches mainly address QoS support for continuous flows in low-mobility environments, whereas we focus on providing QoS support for event-based communication in high-mobility scenarios.

Multicast Routing Protocols

Initial approaches to supporting QoS in multicast sessions include [13], [14], [15], [16]. In [17], the authors present a comparative study of five multicast protocols: AMRoute [13], ODMRP [14], AMRIS [15], CAMP [16], and flooding. ODMRP performed well in most experimental scenarios, and it was concluded that mesh-based protocols performed much better (i.e. achieved a higher packet delivery ratio) than tree-based protocols in high-mobility scenarios. This is because mesh-based protocols provide redundant routes, whereas tree-based protocols must buffer or drop packets until the tree is reconfigured when a route breaks. More recently, multicast protocols with better performance have emerged [18], [19], [20], [21], [22], [23], [24], [25], [26]. However, most of these protocols were designed for single-source multicasting; therefore, having multiple sources (as in the scenarios we target) imposes a high overhead that reduces the packet delivery ratio [27].

Probabilistic Broadcasting Approaches

In [28], the authors present a probabilistic broadcasting approach that dynamically adjusts the rebroadcasting probability. This approach is similar to our protocol in that the probability changes dynamically. However, our broadcasting protocol bounds the number of rebroadcasts to four, thus reducing the possibility of unnecessary rebroadcasts and network congestion.

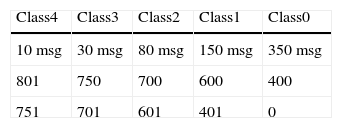

This bound represents the maximum number of hops over which a packet can be forwarded. It can be configured according to the network diameter, taking into account network density and node mobility. For the presented experiments, we consider a maximum of four rebroadcasts to be a reasonable default value (see Table 1).

The work in [29] presents a flooding protocol used to find routes, whereas our work uses probabilistic flooding as the routing protocol itself. In [30], the authors present HybridCast, a deterministic and probabilistic broadcast protocol. The main drawback of this work is that the reliability of packet delivery decreases as the network load increases; in contrast, our framework maintains a high packet delivery ratio even as the network load increases.

In [31], the authors present DAPF, a flooding algorithm, and claim that it can significantly reduce message overhead and latency while maintaining comparable reachability. Their approach is suited to sparser network scenarios, whereas we focus on medium- and high-density networks.

3 The QoSMMANET framework

As discussed in the previous section, event-based communication in highly mobile networks involves a number of issues. To the best of our knowledge, these issues are not addressed in the literature in an integrated way but rather individually, and in some cases existing work focuses on low mobility or on single transmission sources. We therefore propose the QoSMMANET framework to consider these issues jointly, as shown in Figure 1. The framework consists of the following building blocks or modules:

- i) Routing Protocol Block. This module is in charge of enabling end-to-end connectivity. It is based on a probabilistic flooding mechanism and is intended to cope with the network dynamics derived from node mobility whilst limiting network congestion.
- ii) Traffic Differentiation: Queuing Discipline. This module provides packet differentiation and prioritisation. It supports two queuing methods: FIFO and WFQ (Weighted Fair Queuing).
- iii) Bandwidth Allocation Protocol: QoS Management Protocol. The main goal of this module is to balance network load based on end-to-end connectivity. Network traffic bottlenecks are identified and traffic flows are regulated accordingly.

The modules are discussed in detail in the following sections.

3.1 Routing Protocol: A Probabilistic Flooding Protocol

The main goal of the probabilistic flooding protocol is to use a flooding mechanism to increase network coverage under high node mobility whilst minimising network congestion.

The main rationale for using a flooding protocol instead of a multicast protocol, such as a tree- or mesh-based protocol, is that many multicast protocols do not behave well under high mobility [17], and even those that behave better are not suitable for handling multiple sources [27], as we require. We have designed a flooding protocol based on a probabilistic algorithm with a damping capability, which avoids keeping shared state in nodes. In essence, the protocol disseminates packets by flooding them between nodes.

This provides good performance under high mobility, and reliability through redundancy, but it also means that network resources are not well managed. For this reason, the protocol employs two additional mechanisms. The first is probabilistic forwarding of flooded packets: each node decides whether to forward a flooded packet according to a probability p ∈ [0,1], which is updated according to the number of nodes the packet has visited (i.e. its hop count). As we found experimentally through simulations, this effectively reduces the number of unnecessary duplicate packets without sacrificing reliability.

The second mechanism, called damping, aims to eliminate unneeded duplicates by having each node wait for a small, random time interval before actually forwarding a packet. During this interval, the node listens for neighbouring nodes that may flood the same packet. The first copy to arrive is forwarded, whilst all duplicates arriving within the waiting window are discarded.
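The two mechanisms can be illustrated with a small Python sketch of one node's receive path. It is a minimal illustration under stated assumptions, not the ns-2 implementation: `FloodingNode`, `on_receive` and the (source, sequence number) packet identifier are hypothetical names, the per-hop probabilities are assumed to be the polynomial values (1, 0.37, 0.14, 0.07, 0) given later in this section with the first value applying at hop 1, and `max_wait` (the upper bound of the damping delay) is an arbitrary illustrative value.

```python
import random
from dataclasses import dataclass, field
from typing import Dict, Optional, Set, Tuple

# Assumed per-hop forwarding probabilities: index 0 -> hop 1, index 1 -> hop 2, ...
# Beyond the end of the table the probability is 0 (no further rebroadcasts).
HOP_PROBABILITIES = [1.0, 0.37, 0.14, 0.07, 0.0]

PacketId = Tuple[int, int]  # hypothetical (source node ID, sequence number)


@dataclass
class FloodingNode:
    """Hypothetical per-node state for probabilistic flooding with damping."""
    max_wait: float = 0.01  # assumed upper bound of the random damping delay (s)
    rng: random.Random = field(default_factory=random.Random)
    seen: Set[PacketId] = field(default_factory=set)
    pending: Dict[PacketId, float] = field(default_factory=dict)  # packet -> send time

    def forwarding_probability(self, hop: int) -> float:
        """Probability of rebroadcasting a packet that has travelled `hop` hops."""
        if 1 <= hop <= len(HOP_PROBABILITIES):
            return HOP_PROBABILITIES[hop - 1]
        return 0.0

    def on_receive(self, pid: PacketId, hop: int, now: float) -> Optional[float]:
        """Handle a received flooded packet.

        Returns the time at which the packet is scheduled for rebroadcast, or
        None if it is a duplicate or the probabilistic test decides not to forward.
        """
        if pid in self.seen:
            # Duplicate (e.g. heard during the damping window): discard it.
            # Only the first copy of a packet is ever scheduled for forwarding.
            return None
        self.seen.add(pid)
        if self.rng.random() >= self.forwarding_probability(hop):
            return None  # probabilistic decision: do not rebroadcast
        send_at = now + self.rng.uniform(0.0, self.max_wait)  # damping delay
        self.pending[pid] = send_at
        return send_at


if __name__ == "__main__":
    node = FloodingNode(rng=random.Random(7))
    print(node.on_receive((56, 0), hop=1, now=0.0))    # always scheduled (p = 1)
    print(node.on_receive((56, 0), hop=2, now=0.001))  # duplicate: None
```

In this sketch the two mechanisms are independent: the probabilistic test decides whether a packet is forwarded at all, and damping only delays the forwarding of the first copy, so either one could be disabled on its own in an experiment.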

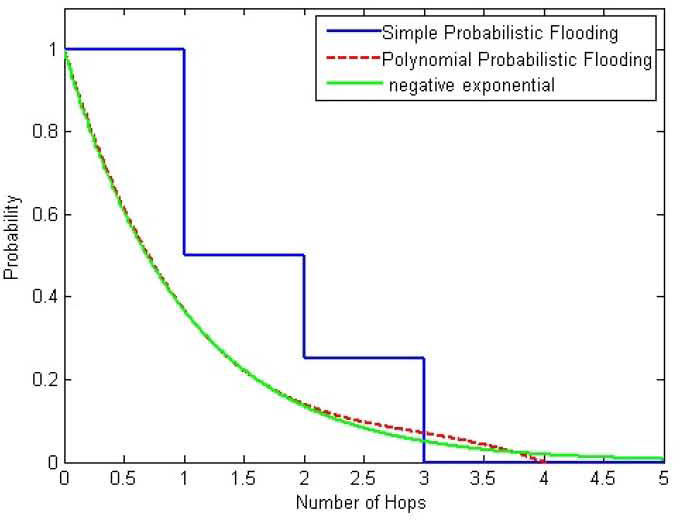

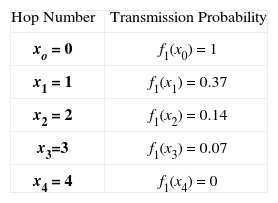

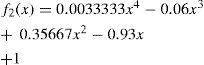

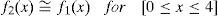

Two groups of probability values are studied for the retransmission process: i) “Simple Probabilistic Flooding”, which uses four probabilities (1, 0.5, 0.25, 0), where 1 is assigned when the packet visits the first hop, 0.5 at the second hop, and so on; and ii) a set of discrete values (1, 0.37, 0.14, 0.07, 0) derived from a negative exponential probability distribution (Equation 3.1).

As in the first group, each value is assigned to a hop number (see Table 1). This mechanism is referred to as “Polynomial Probabilistic Flooding” (Equation 3.2).

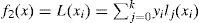

A probability distribution for this mechanism is obtained using Lagrange Polynomial Interpolation as follows:

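Let

$$P(h) = \sum_{j=1}^{k} p_j \, \ell_j(h)$$

and

$$\ell_j(h) = \prod_{\substack{m=1 \\ m \neq j}}^{k} \frac{h - h_m}{h_j - h_m},$$

where $(h_j, p_j)$, $j = 1, \dots, k$, are the hop numbers and their associated retransmission probabilities listed in Table 1.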

As shown in Figure 2, the Polynomial Probabilistic Flooding model is very close to a negative exponential function over the hop-number range of interest. It is important to stress that the rebroadcasting (forwarding) probability is not determined by the node itself but is associated with the number of nodes a transmitted packet has visited. The forwarding probability is obtained from the negative exponential function (Equation 3.1), and the associated hop numbers and retransmission probabilities are shown in Table 1.
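An interpolating polynomial can be evaluated directly in its Lagrange form, P(h) = Σ_j p_j ℓ_j(h) with ℓ_j(h) = Π_{m≠j} (h − h_m)/(h_j − h_m). The Python sketch below does this and prints one negative exponential alongside it for comparison. It assumes hop numbers 1 to 5 as the abscissae of the five polynomial values (1, 0.37, 0.14, 0.07, 0), and exp(−(h − 1)) is only an example of a negative exponential; neither choice is taken from Table 1 itself.

```python
import math
from typing import Sequence

# Assumed interpolation points: hop number h_j -> forwarding probability p_j.
HOPS = [1, 2, 3, 4, 5]
PROBS = [1.0, 0.37, 0.14, 0.07, 0.0]


def lagrange(x: float, xs: Sequence[float], ys: Sequence[float]) -> float:
    """Evaluate the Lagrange interpolating polynomial through (xs[j], ys[j]) at x."""
    total = 0.0
    for j, (xj, yj) in enumerate(zip(xs, ys)):
        basis = 1.0  # l_j(x) = prod over m != j of (x - x_m) / (x_j - x_m)
        for m, xm in enumerate(xs):
            if m != j:
                basis *= (x - xm) / (xj - xm)
        total += yj * basis
    return total


if __name__ == "__main__":
    for h in [1, 1.5, 2, 2.5, 3, 3.5, 4, 4.5, 5]:
        poly = lagrange(h, HOPS, PROBS)
        expo = math.exp(-(h - 1))  # example negative exponential, for comparison only
        print(f"hop {h:>3}: polynomial {poly:.3f}   exp(-(h-1)) {expo:.3f}")
```

By construction the polynomial passes exactly through every tabulated point; between points it is only an approximation, which is why a per-hop lookup of the tabulated values is sufficient at run time.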

In the experimental scenario of Section 4, nodes can be either fixed or mobile. Fixed nodes transmit information messages such as theatre and restaurant adverts and sports news.

Mobile nodes exchange control messages to avoid collisions. Messages are 9 bytes long and have the following format:

- a) Deadline (12 bits): an integer value in milliseconds. Only control messages have an associated deadline.
- b) Position (x, y): x coordinate (16 bits) and y coordinate (16 bits). It informs nearby nodes of the sender's current position.
- c) Hop counter (8 bits): the maximum number of hops over which a packet can be forwarded. It can be configured according to the network diameter, taking into account network density and node mobility.
- d) Stx (16 bits): informs nearby nodes of the node's supported transmission rate (see Section 3.3).
- e) Event Types (4 bits): defines the type of message. Control messages can be of type carLocation, emergencyStop, move or carBreak; information messages can be of type restaurantInfo, theatreInfo or sportNews. Control packets have higher priority, whereas information packets have lower priority (see Section 3.2).
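The 9-byte layout (12 + 16 + 16 + 8 + 16 + 4 = 72 bits) can be illustrated by packing the fields into a big-endian 72-bit integer. This Python sketch rests on assumptions: the fields are packed in the order listed, most significant first, with no padding; Stx is an unsigned integer (e.g. in kbps); and the 4-bit codes in the hypothetical `EventType` enumeration are invented, since the text names the event types but not their encodings.

```python
from dataclasses import dataclass
from enum import IntEnum


class EventType(IntEnum):
    """Hypothetical 4-bit codes for the event types named in the text."""
    carLocation = 0
    emergencyStop = 1
    move = 2
    carBreak = 3
    restaurantInfo = 4
    theatreInfo = 5
    sportNews = 6


# (field name, width in bits) in the order listed above; 72 bits = 9 bytes.
FIELDS = [("deadline", 12), ("x", 16), ("y", 16), ("hop", 8), ("stx", 16), ("event", 4)]


@dataclass
class Message:
    deadline: int  # ms
    x: int
    y: int
    hop: int
    stx: int
    event: EventType

    def pack(self) -> bytes:
        """Concatenate the fields bit by bit into 9 big-endian bytes."""
        value = 0
        for name, width in FIELDS:
            field_value = int(getattr(self, name))
            if not 0 <= field_value < (1 << width):
                raise ValueError(f"{name}={field_value} does not fit in {width} bits")
            value = (value << width) | field_value
        return value.to_bytes(9, "big")

    @classmethod
    def unpack(cls, data: bytes) -> "Message":
        """Inverse of pack(): peel fields off from the least significant end."""
        value = int.from_bytes(data, "big")
        decoded = {}
        for name, width in reversed(FIELDS):
            decoded[name] = value & ((1 << width) - 1)
            value >>= width
        decoded["event"] = EventType(decoded["event"])
        return cls(**decoded)


if __name__ == "__main__":
    msg = Message(deadline=100, x=1400, y=700, hop=4, stx=700, event=EventType.emergencyStop)
    raw = msg.pack()
    print(len(raw), raw.hex())
    print(Message.unpack(raw) == msg)
```

With 12 bits, the deadline field can express at most 4095 ms, which the range check in `pack` enforces.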

Fixed nodes only transmit events, periodically (e.g. every 500 ms), whereas vehicles transmit control events periodically (e.g. every 100 ms) and receive both control and information events.

The control event carLocation conveys the location of the vehicle, whereas emergencyStop indicates that the vehicle is braking abruptly. Similarly, move conveys the node's velocity and carBreak indicates that the vehicle is braking gradually.

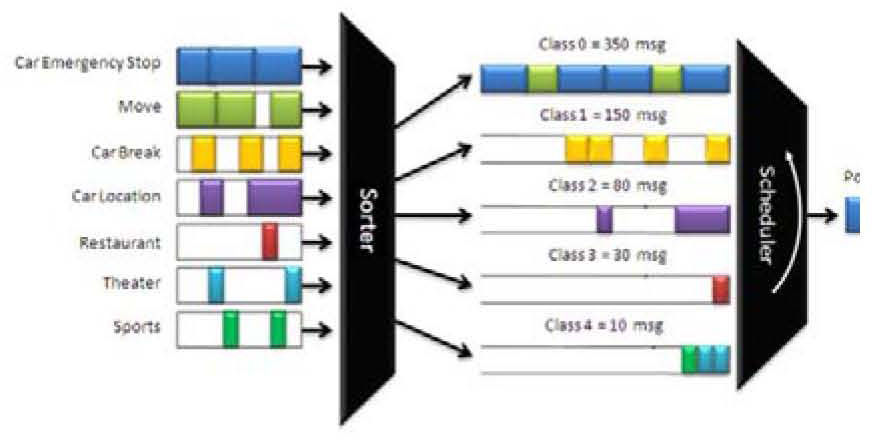

3.2 Traffic Differentiation: A Queuing Discipline

For this module, we consider two key components: i) packet differentiation; and ii) packet prioritisation. To address both, a simple approximation to Weighted Fair Queuing (WFQ) [32, 33] and Weighted Round Robin (WRR) [34] is proposed (see Figure 3).

This “Proposed Queue” assigns each class a packet quantum according to its priority. A quantum is defined as the maximum number of queued packets sent in each transmission turn; higher priority means a higher quantum.

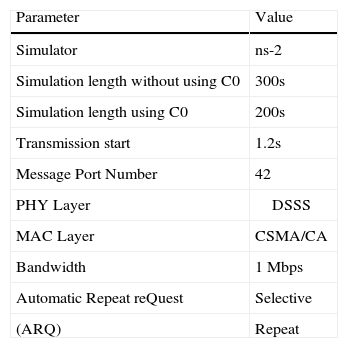

The number of classes to be defined is application-specific. For example, we have defined five classes for the experimental scenario (see Section 5). If every class uses its maximum quantum, the resulting channel usage percentages are (see Table 2): class0 = 56.45%, class1 = 24.20%, class2 = 12.90%, class3 = 4.83% and class4 = 1.62%. The channel usage percentage of a class is the number of packets sent from that class divided by the total number of packets sent from all five classes.
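The quantum-based service can be sketched in Python as one round-robin turn over per-class queues, serving class 0 first. The quanta used here are an assumption: (35, 15, 8, 3, 1) packets per turn were chosen because 35/62, 15/62, 8/62, 3/62 and 1/62 reproduce the percentages above to within rounding, but the actual values are those in Table 2. The function names are hypothetical.

```python
from collections import deque
from typing import Deque, List, Sequence, Tuple

# Hypothetical quanta (max packets per transmission turn) for classes 0..4.
QUANTA = [35, 15, 8, 3, 1]


def channel_shares(quanta: Sequence[int]) -> List[float]:
    """Percentage of the channel each class obtains when every class is backlogged."""
    total = sum(quanta)
    return [100.0 * q / total for q in quanta]


def serve_turn(queues: Sequence[Deque], quanta: Sequence[int]) -> List[Tuple[int, object]]:
    """One transmission turn: each class, highest priority (class 0) first,
    sends at most its quantum of queued packets. Returns (class, packet) pairs."""
    sent = []
    for cls, (queue, quantum) in enumerate(zip(queues, quanta)):
        for _ in range(min(quantum, len(queue))):
            sent.append((cls, queue.popleft()))
    return sent


if __name__ == "__main__":
    print([round(s, 2) for s in channel_shares(QUANTA)])
    queues = [deque(range(100)) for _ in QUANTA]  # all classes backlogged
    print(len(serve_turn(queues, QUANTA)))        # packets sent in one turn
```

With these quanta the computed shares are 56.45, 24.19, 12.90, 4.84 and 1.61%, which differ from the figures quoted above only in the last rounded digit. A class with an empty queue simply yields its slots in that turn, so the percentages are upper bounds that apply only under saturation.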

An alternative approach is to assign time quotas, rather than packet quotas, to the classes; that is, classes with higher priority are assigned larger time quanta. Class packet types are discussed later in Section 5.

3.3 Bandwidth Allocation Protocol: A QoS Management Protocol

The main goal of the QoS management protocol is to regulate traffic in a mobile ad hoc environment. Support is also provided to avoid the hidden terminal problem [35]. Network QoS management is achieved as follows.

First, every node is able to listen to traffic, since packets are disseminated using the probabilistic flooding protocol. Second, available bandwidth is fairly distributed among the nodes within a transmission area [35]. For this purpose, a fully distributed protocol is used.

Every node is associated with a supported transmission rate (Stx) and a downgraded transmission rate (Dtx). The Stx defines the maximum rate at which a node is able to receive messages. A node obtains this value by fairly allocating itself a portion of the bandwidth according to both the amount of traffic and the number of nodes it hears.

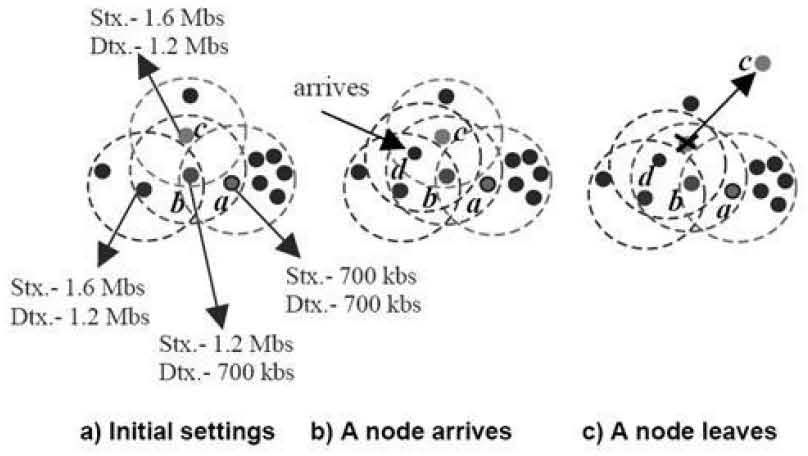

The Dtx determines the maximum rate at which a node can transmit messages without negatively affecting its neighbouring nodes. Each node periodically broadcasts its Stx and Dtx values to its neighbours (i.e. the nodes located within its transmission range) and sets its Dtx to the lowest Stx it receives. As an example, consider the scenario depicted in Figure 4 (a), where node a is located in a densely populated area and can receive packets at 700 kbps. Node b, which is in a less populated area, is unaware of the traffic behind node a. This situation could negatively affect the availability of network resources at node a: node b could transmit at a rate higher than 700 kbps, considering it suitable to do so because the traffic it senses is low. However, after the two nodes exchange a number of messages, node b becomes aware of the maximum supported rate of node a. Similarly, node c sets its transmission values according to the maximum supported values in its vicinity. Furthermore, the bandwidth of a node is further distributed among the node's service classes, so that each service class is also provided with its own Stx and Dtx values.

Consider now the case of node d arriving in the vicinity, as shown in Figure 4 (b). After a period of time, this node detects new traffic and requests the QoS settings (i.e. the Stx and Dtx values) from the nearest nodes. These values are provided and the QoS settings of node d are updated accordingly. Node d then announces its new settings, and the neighbouring nodes update their own settings, taking into account the bandwidth that the new node will use. Figure 4 (c) shows the case of node c leaving the area. When messages from node c are no longer received and a timeout expires, the neighbouring nodes assume that it has left. The bandwidth released by node c is then fairly distributed among the nodes within the vicinity; that is, each node allocates itself a portion of the bandwidth according to the QoS settings of its neighbouring nodes.
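The arrival and departure cases rely on soft state: settings learned from a neighbour are kept only while that neighbour keeps being heard. A minimal Python sketch of such a neighbour table follows. The class, its methods and the timeout value are hypothetical, and the bandwidth redistribution itself (Equation 3.3) is left to the caller.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class NeighbourTable:
    """Hypothetical soft-state record of one-hop neighbours' advertised QoS settings."""
    timeout: float  # assumed silence (s) after which a neighbour is presumed gone
    # neighbour ID -> (Stx, Dtx, time last heard)
    entries: Dict[str, Tuple[float, float, float]] = field(default_factory=dict)

    def on_advert(self, node: str, stx: float, dtx: float, now: float) -> bool:
        """Record a neighbour's periodic Stx/Dtx broadcast.

        Returns True when the neighbour was not known before (an arrival,
        as for node d in Figure 4 (b)), so settings can be recomputed."""
        is_new = node not in self.entries
        self.entries[node] = (stx, dtx, now)
        return is_new

    def expire(self, now: float) -> List[str]:
        """Forget neighbours not heard for longer than `timeout` (a departure,
        as for node c in Figure 4 (c)) and return their IDs, so the caller can
        redistribute the bandwidth they released."""
        gone = [n for n, (_, _, last) in self.entries.items() if now - last > self.timeout]
        for n in gone:
            del self.entries[n]
        return gone


if __name__ == "__main__":
    table = NeighbourTable(timeout=3.0)
    table.on_advert("c", stx=800.0, dtx=700.0, now=0.0)
    if table.on_advert("d", stx=650.0, dtx=650.0, now=1.0):
        print("new neighbour d: recompute settings")
    print(table.expire(now=3.5))  # c has not been heard since t = 0.0
```

Because every node broadcasts its settings periodically, a departure needs no explicit message: silence for longer than the timeout is treated as leaving.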

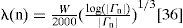

3.3.1 QoS Management Protocol Formalisation

A Mobile Ad Hoc Network (MANET) is a sextuple Ω = (M, V, v0, Γ, λ, δ), where M is a finite set whose elements are mobile nodes; V is a finite set whose elements are static nodes; v0 ∈ V is the static node that starts the communication; Γ is a finite set of one-hop neighbours, which can belong to different coverage areas, with Γ ⊆ M, Γ1 = {Y1, Y2, …, Yh}, Γ2 = {Y1, Y2, …, Yi}, …, Γn = {Y1, Y2, …, Yj}, h, i, j ∈ ℕ, where Γ1, Γ2 and Γn are the neighbour sets of nodes 1, 2 and n, respectively. A set of one-hop neighbours can have from 1 to z coverage areas; λ: Γ → Stx is a transcendent function of Yn of Ω; Stx is the maximum available bandwidth for a node to receive packets in a given area, and z = |Stx| is the number of coverage areas that a node belongs to. The Stx of node n with z coverage areas, Stxn = λ(n), is obtained as follows:

where |Γn| is the cardinality of the neighbour set of node n and W represents the whole network bandwidth. Equation (3.3) was proposed in [36]; the constant 2000 was obtained heuristically by the authors. Finally, δ = min(Stx) is referred to as the Dtx of Ω.

Algorithm to obtain Stx and Dtx

The following protocol is carried out periodically and whenever new nodes are detected within the neighbourhood:

Step 1. The cardinality of the coverage areas |Stx| is obtained.

Step 2. Each node calculates its Stx value.

Step 3. One-hop neighbours exchange their Stx values.

Step 4. All λ values and their corresponding δ values are calculated. The δ value is derived from all the coverage areas that the node belongs to.

Step 5. Each node adjusts its maximum transmission rate to δ.

The Stx and Dtx values can be used to identify the traffic bottleneck nodes.
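Steps 3 to 5 can be sketched in Python as a single synchronous round over a known topology. The sketch takes the Stx values as given inputs rather than computing them from Equation (3.3), merges a node's one-hop neighbours from all its coverage areas into one set, and, as our own assumption, lets a node that hears no neighbours keep its own Stx as its Dtx. The function name and the example rates (other than node a's 700 kbps from Figure 4 (a)) are hypothetical.

```python
from typing import Dict, Set


def compute_dtx(stx: Dict[str, float], neighbours: Dict[str, Set[str]]) -> Dict[str, float]:
    """Steps 3-5 for every node: after one-hop neighbours exchange their Stx
    values, each node limits its transmission rate (Dtx, i.e. delta) to the
    lowest Stx it has received."""
    dtx = {}
    for node, own_stx in stx.items():
        received = [stx[n] for n in neighbours.get(node, set())]
        # Assumption: a node that hears no neighbours keeps its own Stx.
        dtx[node] = min(received) if received else own_stx
    return dtx


if __name__ == "__main__":
    # Chain a - b - c, loosely following Figure 4 (a); only a's 700 kbps is from the text.
    stx = {"a": 700.0, "b": 900.0, "c": 800.0}
    neighbours = {"a": {"b"}, "b": {"a", "c"}, "c": {"b"}}
    print(compute_dtx(stx, neighbours))
```

In this example node b ends up with a Dtx of 700 kbps, so it no longer transmits faster than node a can receive, which is the situation described for Figure 4 (a). A node whose Stx is the minimum that determines its neighbours' Dtx is a natural candidate for a traffic bottleneck.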

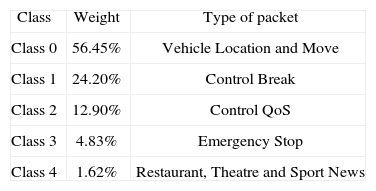

4 Experimental scenario

Our simulation scenario involves an automatic car control system in which cars are able to operate independently and exchange messages in order to avoid collisions. We consider a 1400 m × 1400 m urban area with four vertical streets, two horizontal streets and one main horizontal avenue. Vehicles are represented by 36 mobile nodes moving along the streets, whereas 64 static nodes represent hotspots sending information such as restaurant and theatre adverts and sports news. The QoSMMANET framework was evaluated by simulation in ns-2.

Each node has an ID between 0 and 99, assigned as follows. IDs 0 to 35 are mobile nodes: IDs 0 to 7 travel east along the main avenue, 8 to 15 travel west along the main avenue, 16 to 19 travel north along vertical1 street, 20 to 23 travel south along vertical street, 24 to 30 travel north along vertical3, and 31 to 35 travel south along vertical street. IDs 36 to 99 are static nodes. We defined five packet classes as follows: Class 0 (C0) contains move and carLocation packets, Class 1 (C1) carBreak packets, Class 2 (C2) QoS control packets, Class 3 (C3) emergencyStop packets and Class 4 (C4) blurb packets such as restaurantInfo, theatreInfo and sportNews.

The channel capacity is set to 1 Mbps and the packet length is 9 bytes. Although current wireless hardware supports higher bandwidths, we chose 1 Mbps as a means of obtaining congested operating conditions. Alternatively, we could have increased the amount of information transmitted by using a larger set of message types. The radio propagation model is the Two Ray Ground reflection model, which considers both the direct path and a ground-reflected path; the received power at distance d is predicted as in [37]. The antenna model is OmniAntenna at 1.5 GHz [38]. The IEEE 802.11 PHY uses Direct-Sequence Spread Spectrum (DSSS), and IEEE 802.11 is used as the MAC protocol.
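The Two Ray Ground model can be written as a short Python function. In ns-2 it is combined with the Friis free-space model: below the crossover distance d_c = 4π h_t h_r / λ, the Friis equation P_r = P_t G_t G_r λ² / ((4π)² d² L) is used, and beyond it P_r = P_t G_t G_r h_t² h_r² / (d⁴ L). The function name and the parameter values in the usage block (transmit power, antenna heights, carrier frequency, unit gains and system loss) are illustrative assumptions, not the settings listed in Table 3.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def two_ray_ground_pr(d: float, pt: float, freq_hz: float, ht: float, hr: float,
                      gt: float = 1.0, gr: float = 1.0, loss: float = 1.0) -> float:
    """Received power (W) at distance d (m) under the two-ray ground model,
    using Friis free space below the crossover distance."""
    wavelength = SPEED_OF_LIGHT / freq_hz
    crossover = 4 * math.pi * ht * hr / wavelength
    if d < crossover:
        # Friis free-space model: power falls with d^2
        return pt * gt * gr * wavelength ** 2 / ((4 * math.pi) ** 2 * d ** 2 * loss)
    # Two-ray ground reflection (direct + ground-reflected path): power falls with d^4
    return pt * gt * gr * ht ** 2 * hr ** 2 / (d ** 4 * loss)


if __name__ == "__main__":
    # Illustrative parameters only: 0.28 W transmit power, 914 MHz, 1.5 m antennas.
    for d in (50.0, 100.0, 200.0, 400.0):
        print(d, two_ray_ground_pr(d, pt=0.28, freq_hz=914e6, ht=1.5, hr=1.5))
```

Beyond the crossover distance, received power falls with the fourth power of distance rather than the square, so doubling the distance there costs a factor of 16 (about 12 dB). The two expressions give the same value at d_c, so the model is continuous.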

The transmission power consumption is 2 W, whilst reception consumes 1 W. The two-layer (PHY and MAC) simulation scenarios are based on the ns-2 model of the IEEE 802.11 standard. Some simulation parameters are shown in Table 3.

The simulation execution time is 300 seconds without using C0 and 200 seconds using C0. Each event type is associated with a particular class, as shown in Table 4.

As mentioned earlier, classes are associated with different weights (reserved bandwidth). Class 0, which includes vehicle location messages, has the highest bandwidth share, whereas class 4 has the lowest.

Fixed nodes generate messages containing adverts, which belong to class 4, as shown in Table 4.

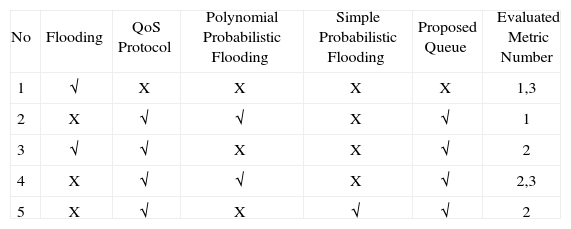

Several experiments are carried out to evaluate the proposed QoS framework, in which the elements of the framework are either enabled or disabled, as listed in Table 4.

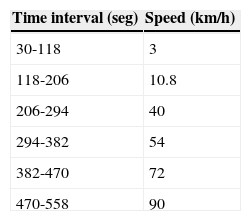

The framework is also evaluated for different node mobility values, with (experiment 3) and without (experiment 1) the proposed framework. In this mobility experiment, the speed of each mobile node is increased from 0 km/h to 72 km/h. Four speed values are evaluated for each experiment: 0 km/h (all nodes are fixed; used for reference and analysis purposes), 10.8 km/h (slow), 54 km/h (medium) and 72 km/h (high), which is considered the maximum speed in a metropolitan area. The general scenario (experiments 2, 4 and 5) uses the 100 nodes described above.

The packet length is six bytes, containing the following fields: event type, deadline, and the vehicle's position represented as x and y coordinates. Node 56 transmits 45 packets (IDs 0 to 44) at 0.5 s, node 58 transmits 16 packets (IDs 45 to 60) at 0.6 s, node 94 sends 16 packets (IDs 61 to 76) at 1.2 s, and finally node 88 sends 16 packets (IDs 77 to 92) at 2.1 s. In each simulation there are initially four coverage areas, each consisting of one transmitter and several receivers.

Coverage area membership is determined by an initial 100% packet delivery ratio (the membership condition): if a receiver obtains 100% of the initial packets transmitted by a given transmitter, it is considered a member of that transmitter's coverage area. The transmitters are nodes 56, 58, 88 and 94. The resulting coverage areas are: for node 56, nodes 0, 1, 2, 3, 4 and 5; for node 58, nodes 16, 17, 18 and 19; for node 88, nodes 24, 25, 26 and 27; and for node 94, nodes 31, 32, 33, 34 and 35. Coverage area membership changes as nodes move during the simulation, and the 100% packet delivery condition is applied at all times.
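The membership condition reduces to a subset test, as in the following Python sketch. The function name and the dictionary layout are hypothetical; the transmitter IDs and packet ID ranges come from the traffic description above (node 56: 0 to 44, node 58: 45 to 60, node 94: 61 to 76, node 88: 77 to 92), whereas the received sets are invented for illustration.

```python
from typing import Dict, Set


def coverage_members(sent: Dict[int, Set[int]],
                     received: Dict[int, Set[int]]) -> Dict[int, Set[int]]:
    """Membership condition: a receiver belongs to a transmitter's coverage area
    if it received every packet that the transmitter sent in the observed window.

    sent:     transmitter ID -> IDs of the packets it transmitted
    received: receiver ID    -> IDs of the packets it received
    """
    return {
        tx: {rx for rx, got in received.items() if pkts <= got}
        for tx, pkts in sent.items()
    }


if __name__ == "__main__":
    sent = {56: set(range(0, 45)), 58: set(range(45, 61)),
            94: set(range(61, 77)), 88: set(range(77, 93))}
    # Invented reception logs: node 0 got all of node 56's packets,
    # node 1 missed packet 44, node 16 got all of node 58's packets.
    received = {0: set(range(0, 45)), 1: set(range(0, 44)), 16: set(range(45, 61))}
    print(coverage_members(sent, received))
```

A receiver may satisfy the condition for several transmitters at once, which is consistent with a node belonging to more than one coverage area in the formalisation of Section 3.3.1. Re-evaluating the function over successive time windows reflects membership changing as nodes move.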

4.1 Performance Metrics

In order to evaluate the QoSMMANET framework in the context of real-time event systems in highly mobile ad hoc environments, the following metrics are considered. Some of them are suggested by the IETF MANET working group for routing/multicast protocol evaluation [39].

The metrics below are evaluated with respect to node speed and network load, since the main motivation of this research is to support real-time event systems in highly mobile ad hoc network environments.

Other important metrics such as jitter, Normalized Routing Load (NRL), battery (energy) consumption, load balancing, and scalability can be employed to address issues beyond the scope of this work.

1. One Way Delay (OWD): the time from the transmission of the first bit of a packet to the reception of its last bit at the destination node [40].
2. Packet delivery ratio: the ratio of the number of received packets to the number of transmitted packets. This value indicates the effectiveness of a protocol.
3. Number of deadline misses: the number of packets that do not reach their destination within their deadline.
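The three metrics can be computed from a per-packet trace, as in the following Python sketch. The `PacketRecord` structure and the function names are hypothetical (this is not an ns-2 trace format): the sketch assumes one record per (packet, intended receiver) pair with duplicates already removed, timestamps for the first transmitted bit and the last received bit, and an optional relative deadline. A packet that never arrives counts as a deadline miss if it had a deadline.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PacketRecord:
    """Hypothetical trace entry for one (packet, receiver) pair; times in seconds."""
    sent_at: float                    # first bit transmitted
    received_at: Optional[float]      # last bit received, or None if lost
    deadline: Optional[float] = None  # relative deadline; None for information packets


def one_way_delays(records: List[PacketRecord]) -> List[float]:
    """Metric 1: OWD of every delivered packet."""
    return [r.received_at - r.sent_at for r in records if r.received_at is not None]


def delivery_ratio(records: List[PacketRecord]) -> float:
    """Metric 2: received packets divided by transmitted packets."""
    if not records:
        return 0.0
    return sum(r.received_at is not None for r in records) / len(records)


def deadline_misses(records: List[PacketRecord]) -> int:
    """Metric 3: packets with a deadline that arrived late or not at all."""
    return sum(
        1
        for r in records
        if r.deadline is not None
        and (r.received_at is None or r.received_at - r.sent_at > r.deadline)
    )


if __name__ == "__main__":
    trace = [PacketRecord(0.0, 0.05, 0.1), PacketRecord(0.0, 0.2, 0.1),
             PacketRecord(0.0, None, 0.1), PacketRecord(1.0, 1.5)]
    print(one_way_delays(trace), delivery_ratio(trace), deadline_misses(trace))
```

Counting lost control packets as misses reflects the real-time view that a control event that never arrives is at least as harmful as one that arrives late.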

The analysis of the results is organised by metric, as presented next.

5.1 Experimental Results

One Way Delay (OWD) (Metric 1)

To analyse end-to-end OWD, we consider experiments 1 and 2 under the same network load conditions, as shown in Table 5.

The first experiment employs a FIFO Queue whilst experiment 2 uses the Proposed Queue.

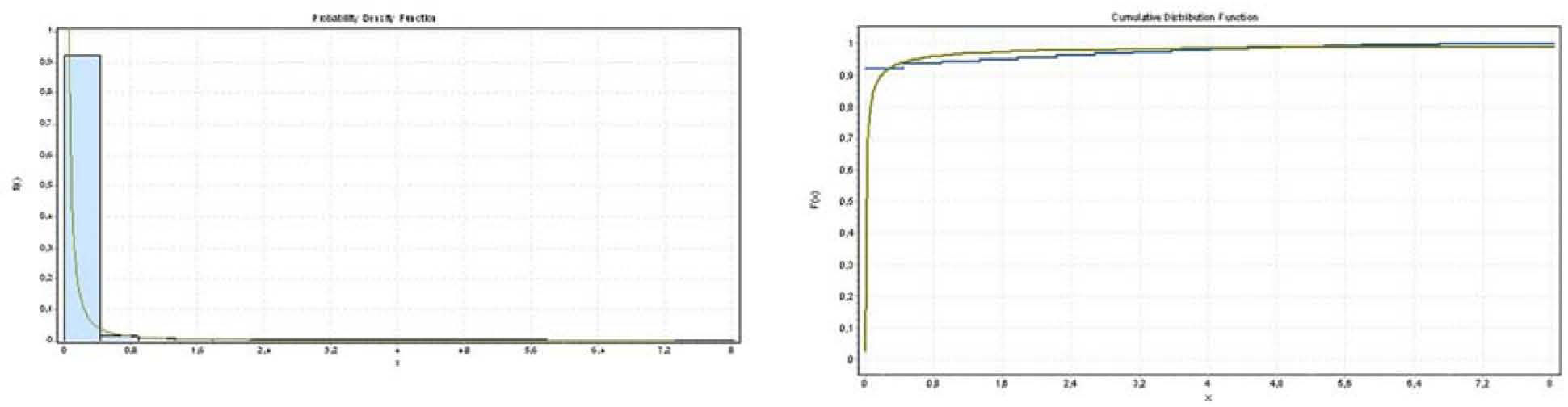

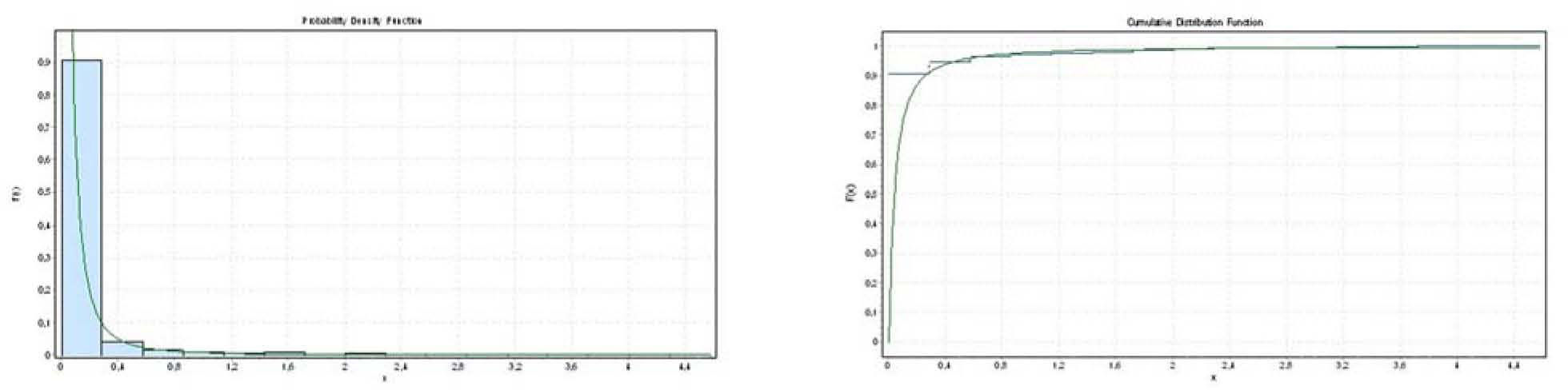

Figure 5 shows the delay probability distribution function when the FIFO Queue is enabled. The delay follows a typical long-tailed distribution [41].

The sample data size was 56,842, and the Kolmogorov-Smirnov method [42, 43, 44] was employed to assess the fit.

Figure 5 also shows the Cumulative Distribution Function (CDF), in which the blue curve represents the CDF of the measured end-to-end OWD and the green curve the CDF of the Pareto distribution [41]. Both curves are very close.
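A distribution fit and Kolmogorov-Smirnov check of this kind can be sketched in Python as follows. This is an illustrative reconstruction, not necessarily the procedure used to produce Figures 5 and 6: it fits a Pareto distribution by maximum likelihood (scale x_m set to the smallest sample, shape α = n / Σ ln(x_i / x_m)) and computes the Kolmogorov-Smirnov statistic D, the largest gap between the empirical CDF and the fitted CDF. The function names are hypothetical, and the synthetic samples in the usage block merely stand in for measured OWD values.

```python
import math
import random
from typing import List, Tuple


def fit_pareto(samples: List[float]) -> Tuple[float, float]:
    """Maximum-likelihood Pareto fit: returns (x_m, alpha).
    Assumes strictly positive samples that are not all equal."""
    xm = min(samples)
    log_sum = sum(math.log(x / xm) for x in samples)
    return xm, len(samples) / log_sum


def pareto_cdf(x: float, xm: float, alpha: float) -> float:
    return 0.0 if x < xm else 1.0 - (xm / x) ** alpha


def ks_statistic(samples: List[float], xm: float, alpha: float) -> float:
    """Kolmogorov-Smirnov distance D = sup |F_n(x) - F(x)| between the
    empirical CDF of the samples and the Pareto CDF."""
    xs = sorted(samples)
    n = len(xs)
    d = 0.0
    for i, x in enumerate(xs, start=1):
        f = pareto_cdf(x, xm, alpha)
        # The empirical CDF jumps from (i-1)/n to i/n at x: check both sides.
        d = max(d, i / n - f, f - (i - 1) / n)
    return d


if __name__ == "__main__":
    rng = random.Random(42)
    delays = [rng.paretovariate(2.5) for _ in range(5000)]  # synthetic stand-in data
    xm, alpha = fit_pareto(delays)
    print(f"x_m = {xm:.4f}, alpha = {alpha:.3f}, D = {ks_statistic(delays, xm, alpha):.4f}")
```

Because the parameters are estimated from the same samples that are tested, the standard Kolmogorov-Smirnov critical values are conservative for this D; they assume a fully specified reference distribution.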

For the Proposed Queue, Figure 6 shows the delay probability distribution function and the CDF; a Pareto distribution is also obtained. The same network load is used in both scenarios. However, with the Proposed Queue, packets are classified and prioritised as shown in Figure 3, which changes the characteristics of the delay distribution, as shown in Figure 6: the delay increases for low-priority packets and decreases for high-priority packets.

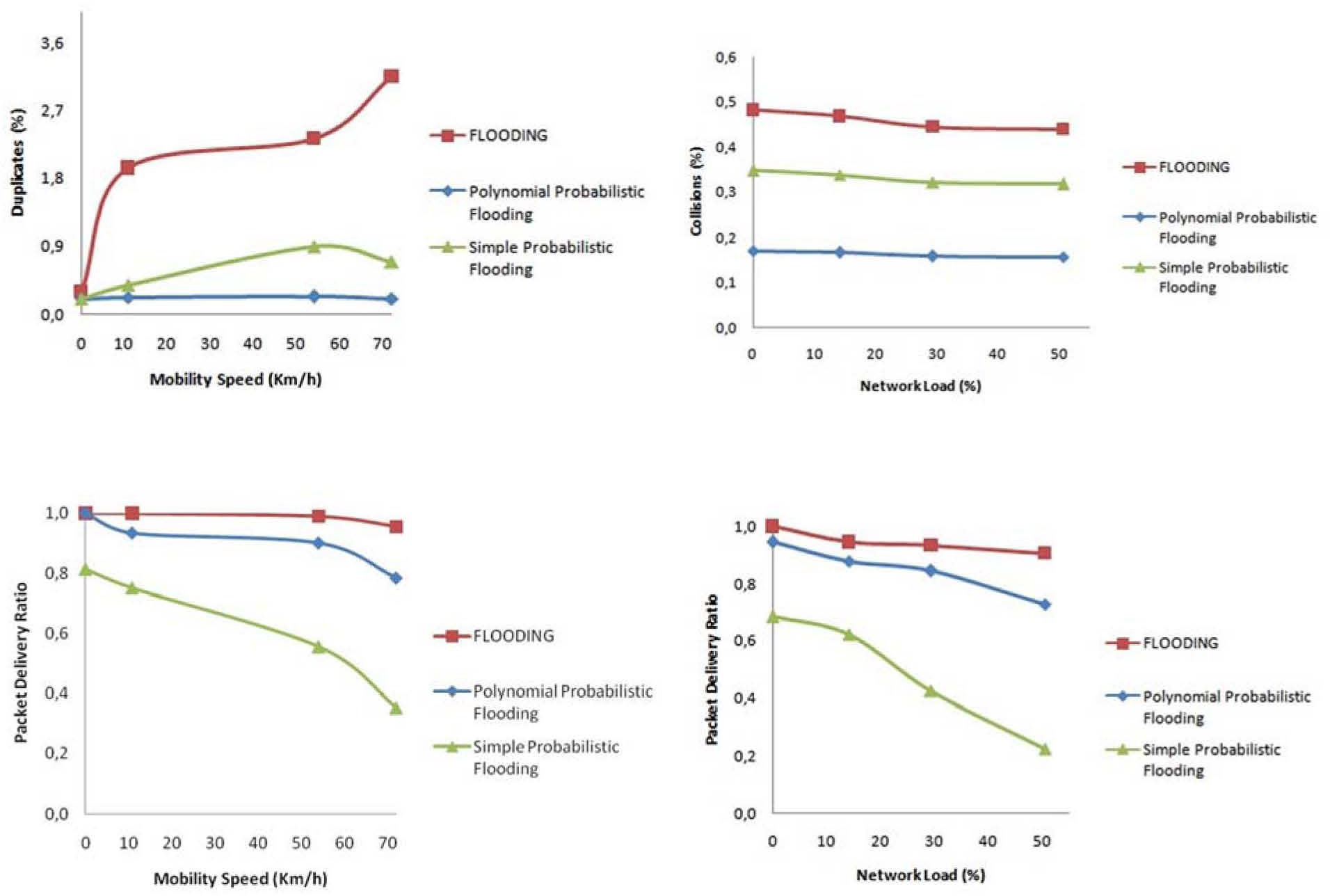

Packet delivery ratio (Metric 2)

The packet delivery ratio for the different evaluated speeds and experiments is shown in Figure 7. The packet delivery ratio decreases as speed increases. For a speed range of 0-70 km/h, the average packet delivery ratio of the polynomial probabilistic flooding protocol is 85%, i.e. only 15% of non-duplicated packets are lost. For the Flooding protocol, the average is 94%, a difference of 9 percentage points between the two protocols. However, this QoS impact can reasonably be dealt with by higher layers of the protocol stack, such as the transport, presentation and application layers. The number of packet duplicates is considerably higher for the Flooding protocol than for polynomial probabilistic flooding, and for most applications and scenarios the QoS impact of this redundant traffic would be hard to mitigate, especially under high network density and congestion.

Nevertheless, with the Flooding protocol (exp. 3) and the Polynomial Probabilistic Flooding protocol (exp. 4), this ratio degrades slowly. The Flooding protocol, however, pays a significant cost in terms of duplicates and collisions compared with the other two, as shown in Figure 7, and this would worsen as network node density increases. In Figure 7, collisions are represented as a percentage; in this representation, the collision percentage remains stable in all cases, decreasing only slightly as the network load increases. Although it cannot be seen in Figure 7, the number of collisions increases with network load for all protocols.¹ Note that the full QoSMMANET framework, consisting of the QoS Protocol, the Polynomial Probabilistic Flooding and the Proposed Queue, is enabled in exp. 4, whereas in experiments 3 and 5 the QoS Protocol and the Proposed Queue are enabled but the Polynomial Probabilistic Flooding is disabled.

The experimental scenario involves multiple-source multicasting under both low and high mobility. A relatively high packet delivery ratio is maintained while the number of duplicates is reduced, for several reasons. First, polynomial probabilistic flooding generates fewer duplicates than both the flooding and the simple probabilistic flooding approaches.

This is because the rebroadcast probabilities derived from a negative exponential distribution function are lower than those used by simple probabilistic flooding, so packets are less likely to be rebroadcast. Second, the queuing discipline and the QoS management protocol together reduce the number of collisions, so the packet delivery ratio remains relatively high under different network load conditions. In contrast, this would not be the case for the Flooding protocol in highly dense network scenarios. Note that although we consider event-based scenarios in which packets are not sent continuously, the transmission and retransmission rates, measured in packets sent per second, are high enough to cause congestion and reduce the packet delivery ratio.
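The paragraph above attributes the drop in duplicates to lower rebroadcast probabilities. The sketch below illustrates that mechanism under stated assumptions: this section does not give the exact rebroadcast function, its input or its parameters. The fixed probability `p = 0.7`, the rule `exp(-rate * x)` with `rate = 0.5`, and the meaning of `x` (taken here as an input `x >= 1`, such as the number of copies already overheard) are all hypothetical. Each node drops packets it has already seen and rebroadcasts a new packet with probability `p`. With independent decisions, the expected number of rebroadcasts is simply the sum of the probabilities.

```python
import math
import random


def fixed_probability(p: float = 0.7) -> float:
    """Simple probabilistic flooding: one fixed probability (hypothetical value)."""
    return p


def neg_exp_probability(x: float, rate: float = 0.5) -> float:
    """Hypothetical negative-exponential rule p = exp(-rate * x), for x >= 1."""
    return math.exp(-rate * x)


class RebroadcastFilter:
    """Per-node duplicate suppression plus a probabilistic rebroadcast decision."""

    def __init__(self, seed: int = 0):
        self.seen = set()
        self.rng = random.Random(seed)

    def on_receive(self, packet_id, p: float) -> bool:
        if packet_id in self.seen:
            return False                  # duplicate copy: never rebroadcast
        self.seen.add(packet_id)
        return self.rng.random() < p      # random() is in [0, 1)


def expected_rebroadcasts(probabilities) -> float:
    """Expected rebroadcast count when each node decides independently."""
    return sum(probabilities)


xs = range(1, 6)
print(expected_rebroadcasts(fixed_probability() for _ in xs))   # about 3.5
print(expected_rebroadcasts(neg_exp_probability(x) for x in xs))  # about 1.41
```

Lowering each node's probability reduces the expected number of redundant copies injected into the network. The cost is that a packet is more likely to miss some nodes, which matches the delivery-ratio gap reported for Figure 7.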

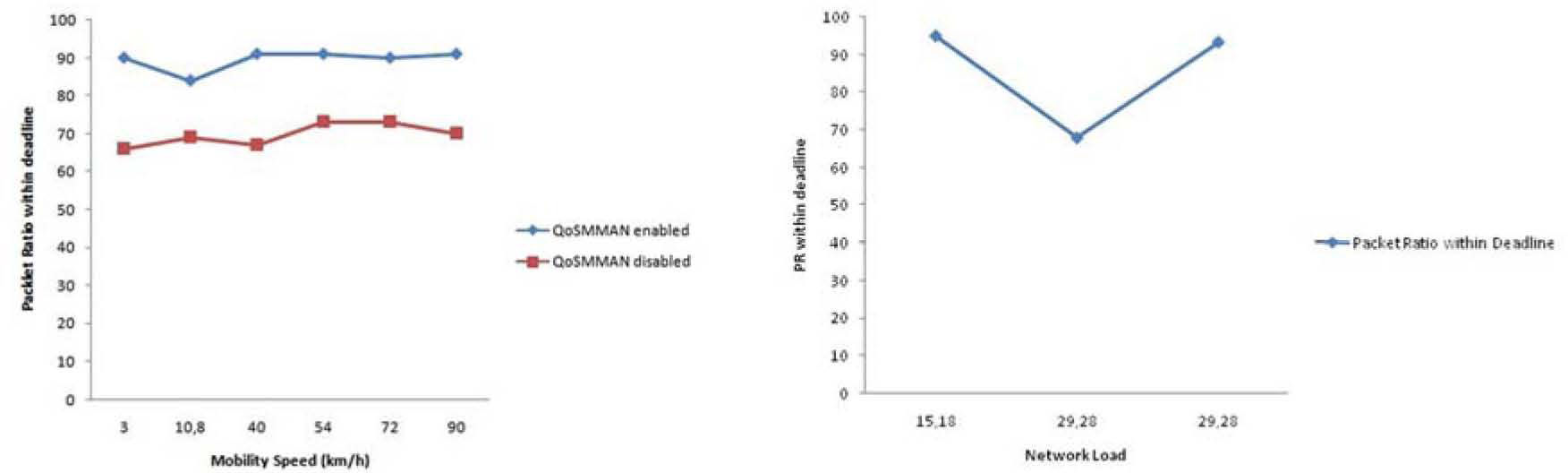

Number of deadline misses (Metric 3)

The deadline miss ratio is evaluated with respect to mobility speed and network load. For mobility speed, a different speed is associated with each time interval, as shown in Table 6. As depicted in Figure 8, enabling the QoSMMANET framework (exp. 4) yields a lower deadline miss rate than the scenario in which the framework is disabled (exp. 1). With the framework enabled, 90% of packets arrive within their deadline even under high mobility. With it disabled, only 70% of packets arrive in time.
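The section reports the deadline miss ratio as the share of packets that do not arrive in time. The Python sketch below shows one way to compute it from per-packet records. It assumes each record is one packet delivery to one receiver with a relative deadline, and it counts a packet that never arrives as a miss. Both choices, and the `PacketRecord` type itself, are hypothetical and not taken from the paper's evaluation code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PacketRecord:
    sent_at: float               # send time in seconds (simulation clock)
    deadline: float              # relative deadline in seconds (assumed field)
    arrived_at: Optional[float]  # arrival time, or None if never delivered


def deadline_miss_ratio(records) -> float:
    """Fraction of packets that arrive late or not at all (assumed definition)."""
    if not records:
        return 0.0
    misses = sum(
        1
        for p in records
        if p.arrived_at is None or p.arrived_at - p.sent_at > p.deadline
    )
    return misses / len(records)


records = [
    PacketRecord(0.0, 0.1, 0.05),   # on time
    PacketRecord(0.0, 0.1, 0.20),   # late
    PacketRecord(1.0, 0.1, None),   # lost
    PacketRecord(2.0, 0.1, 2.05),   # on time
]
print(deadline_miss_ratio(records))  # 0.5
```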

The deadline miss ratio is also evaluated with respect to network load. In this scenario the network load varies and the mobility speed is fixed at 72 km/h. As shown in Figure 8, the network load is initially 15.18% and the QoSMMANET framework is disabled (exp. 1). When the network load is increased to 29.28%, the deadline miss ratio increases. When the QoSMMANET framework is then enabled (exp. 4), the proportion of packets delivered within their deadline rises to nearly 100%.

Figure 7 shows fewer collisions, which means that transmitted packets are less likely to suffer delays. As a consequence, the deadline miss ratio improves. In addition, the queuing discipline directly benefits the higher-priority classes by reducing their queuing time, which increases the likelihood that their packets arrive within their deadline.
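The benefit to higher-priority classes can be shown with a generic multi-class priority queue. This section does not describe the internals of the Proposed Queue, so the sketch below substitutes a standard technique: strict priority scheduling with FIFO order within a class, plus a push-out rule. When the buffer is full, the push-out rule evicts the lowest-priority, most recently queued packet in favour of a more urgent arrival. The class numbering (0 = most urgent), the capacity and the eviction policy are all assumptions.

```python
import heapq
import itertools


class StrictPriorityQueue:
    """Hypothetical multi-class queue: class 0 is served first, FIFO within a class."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self._heap = []                  # entries: (priority_class, seq, packet)
        self._seq = itertools.count()    # tie-breaker preserving arrival order

    def enqueue(self, priority_class: int, packet) -> bool:
        entry = (priority_class, next(self._seq), packet)
        if len(self._heap) < self.capacity:
            heapq.heappush(self._heap, entry)
            return True
        # Buffer full: push out the lowest-priority, most recent packet
        # only if the arriving packet belongs to a strictly higher class.
        worst = max(self._heap)
        if entry < worst:
            self._heap.remove(worst)
            heapq.heapify(self._heap)
            heapq.heappush(self._heap, entry)
            return True
        return False                     # arriving packet is dropped

    def dequeue(self):
        return heapq.heappop(self._heap)[2] if self._heap else None

    def __len__(self):
        return len(self._heap)


q = StrictPriorityQueue(capacity=3)
q.enqueue(2, "bulk1")
q.enqueue(1, "ctrl1")
q.enqueue(2, "bulk2")
q.enqueue(0, "stop")      # evicts "bulk2"
q.enqueue(2, "bulk3")     # rejected: queue full of equal or higher classes
print([q.dequeue() for _ in range(len(q))])  # ['stop', 'ctrl1', 'bulk1']
```

Under load, urgent packets wait only behind packets of their own or higher classes, so their queuing delay stays short even when low-priority traffic fills the buffer.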

Finally, we followed the design science paradigm as our research approach [45]. Design science is a problem-solving paradigm that addresses research through two main processes: building and evaluating. According to design science, one possible contribution is designing an artifact (i.e., a system prototype) whereby "it may extend the knowledge base or apply existing knowledge in new and innovative ways".

Our main contribution concerns the latter. Although the routing and bandwidth allocation protocols proposed in this paper are unique, the paper did not focus on evaluating and comparing them with other protocols of the same type. Rather, it focused on integrating different protocols in a new and innovative way to provide QoS support for real-time event systems in highly mobile environments.

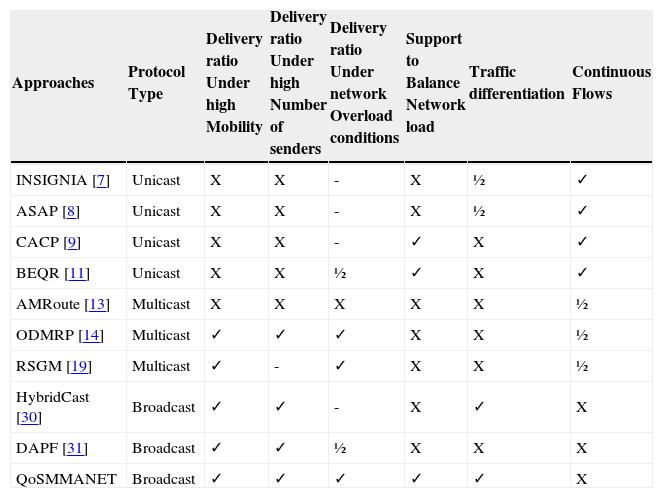

5.2 Discussion

In this section we present a qualitative analysis that compares QoSMMANET with other approaches, as shown in Table 7. Unicast routing protocols such as INSIGNIA, ASAP, CACP and BEQR do not maintain a good packet delivery ratio under high mobility. In contrast, most multicasting protocols and all broadcasting protocols provide good support for high mobility. Unicast routing protocols perform poorly under high mobility because established routes quickly become invalid.

Table 7: QoSMMANET vs. other approaches. ✓ indicates good support, ½ partial support, X little or no support, and - not applicable or information not found.

| Approach | Protocol type | Delivery ratio under high mobility | Delivery ratio under a high number of senders | Delivery ratio under network overload | Network load balancing | Traffic differentiation | Continuous flows |
|---|---|---|---|---|---|---|---|
| INSIGNIA [7] | Unicast | X | X | - | X | ½ | ✓ |
| ASAP [8] | Unicast | X | X | - | X | ½ | ✓ |
| CACP [9] | Unicast | X | X | - | ✓ | X | ✓ |
| BEQR [11] | Unicast | X | X | ½ | ✓ | X | ✓ |
| AMRoute [13] | Multicast | X | X | X | X | X | ½ |
| ODMRP [14] | Multicast | ✓ | ✓ | ✓ | X | X | ½ |
| RSGM [19] | Multicast | ✓ | - | ✓ | X | X | ½ |
| HybridCast [30] | Broadcast | ✓ | ✓ | - | X | ✓ | X |
| DAPF [31] | Broadcast | ✓ | ✓ | ½ | X | X | X |
| QoSMMANET | Broadcast | ✓ | ✓ | ✓ | ✓ | ✓ | X |

The routes defined by the multicasting protocol AMRoute are more rigid than those of other multicasting protocols such as ODMRP and RSGM. This is because AMRoute does not provide redundant routes, and packets are dropped or buffered until the tree is reconfigured. Similarly, unicast routing protocols do not support a high number of senders, whereas most multicasting and broadcasting protocols report a good packet delivery ratio in this case.

Only ODMRP, RSGM and QoSMMANET report a good packet delivery ratio under network overload conditions.

On the other hand, only a few approaches support network load balancing: CACP, BEQR and QoSMMANET.

Furthermore, only a few approaches consider traffic differentiation. INSIGNIA and ASAP support only two classes of traffic: non-real-time and real-time. Only HybridCast and QoSMMANET support multiple classes of service based on a priority system. Finally, unicast routing protocols are best suited for continuous flows. Overall, QoSMMANET provides good support for all the aspects evaluated except continuous flows, because it was designed mainly for event-based communication. ODMRP and RSGM provide good support for high mobility, a high number of senders and network overload conditions. However, unlike QoSMMANET, they support neither network load balancing nor traffic differentiation.

These two aspects are also essential for our target applications. For instance, in the automatic car control scenario, a highly populated area without load balancing support may cause critical control messages (e.g., an emergency stop) to arrive late or not at all. Likewise, under overload conditions, control messages may not arrive in time if traffic differentiation is not supported.

6 Conclusions

A new kind of application is emerging that imposes real-time constraints, is highly mobile and requires multiple-source multicasting. However, current approaches mainly focus on supporting continuous flows in low-mobility environments where single-source multicasting is assumed. Some approaches address these issues individually. We have presented the QoSMMANET framework, which addresses them in an integrated way. The main elements of our framework are a polynomial probabilistic flooding protocol, a queuing discipline and a QoS management protocol. The flooding protocol increases network coverage under high node mobility while minimising network congestion. The queuing discipline offers differentiated classes of service through packet differentiation and prioritisation. Lastly, the QoS management protocol balances traffic flows and avoids bottlenecks.

We evaluated the QoSMMANET framework in the ns-2 simulator. The experimental scenarios involved multiple-source multicasting under low and high mobility conditions. We compared the framework with both the flooding protocol and the simple probabilistic flooding protocol. The results show that it achieves a reasonable packet delivery ratio even under high mobility and varying network load, with the best performance in terms of collisions and packet duplicates. The latter would become a key advantage as network density increases. Moreover, our framework obtains a lower deadline miss ratio. This is particularly important for applications with timing constraints, such as those targeted in this work.

Our framework currently offers soft real-time QoS support. Future work includes extending the framework to offer stronger-than-soft real-time guarantees for hard real-time mobile ad hoc systems. Other issues that could be addressed include security, battery (energy) consumption, jitter, Normalized Routing Load (NRL), load balancing and scalability. We believe that better QoS support can still be offered to such systems by providing both a high probability of meeting deadlines and an adaptable, flexible infrastructure.


¹ To illustrate how the collision percentage relates to the number of collisions, consider three increasing network loads: (1) 100 packets with 10 collisions, (2) 2,000 packets with 200 collisions, and (3) 1,000,000 packets with 10,000 collisions. The collision percentages are 10%, 10% and 1%, respectively, even though the absolute number of collisions grows at each step.