In prior work, we have been developing a conceptual framework, called the Perspectives of Chemists, that attempts to capture how student understanding progresses in chemistry. The framework was developed in conjunction with Living by Chemistry (LBC), a chemistry curriculum project, and ChemQuery, an assessment system for secondary and university chemistry; both projects were funded by the National Science Foundation (NSF). ChemQuery is an assessment approach that uses this framework of key ideas in the discipline, together with criterion-referenced analysis, to map student progress. It includes assessment questions, a scoring rubric, question exemplars, and the framework itself, which we refer to as the Perspectives of Chemists. Empirical data are collected and analyzed with Rasch-family measurement models, a class of item response theory (IRT) models, to help interpret student performance (Wilson, 2005). With this approach, student learning progress within or between courses can be described, and individual differences in how students learn scientific concepts can be explored. Our purpose was to study how students learn so that, by knowing what they know, we would know how best to help them. At the time, our work represented an early description of a possible learning progression in chemistry, one that we feel is still relevant today. Therefore, this paper focuses on what we have learned about the pathway of student learning in chemistry through the development of the Perspectives framework within the ChemQuery assessment system.

As part of prior work, we have been developing a conceptual framework known as the Perspectives of Chemists, which seeks to capture a view of incremental learning in chemistry. This framework was developed together with the Living by Chemistry (LBC) curriculum project, which includes an assessment system for chemistry knowledge at the secondary, upper-secondary, and university levels known as ChemQuery. These projects have been funded by the National Science Foundation (NSF). ChemQuery is an assessment system based on a set of key ideas in the discipline, a scoring rubric, representative questions, and the Perspectives of Chemists conceptual framework. The system makes it possible to combine empirical data with Rasch-family measurement models (IRT) in order to analyze and interpret those data (Wilson, 2005). It also makes it possible to describe students’ learning progress within a chemistry course or across courses and to explore individual differences in their level of understanding of selected scientific concepts. Our purpose is to study how students learn in order to know what they know and to find better ways to support their learning. At the time, our work represented an early description of a learning progression in chemistry that we believe is relevant today. Therefore, this paper focuses on describing what we have learned about students’ learning trajectories in chemistry through the development of the Perspectives conceptual framework within the ChemQuery assessment system.

Over the past ten years, we, the Chemistry Education Group at the University of California at Berkeley, have been developing the ChemQuery assessment system to describe the path of student understanding in chemistry. This work began before the term “learning progressions” was coined, yet we feel that it provides an early and relevant model of how a pathway of understanding can emerge from the analysis of assessment data. Moreover, it represents a quite different approach to the development of learning progressions, one that relies on statistical analysis to support and inform a proposed pathway of understanding (Claesgens et al., 2009).

In our work we have viewed student learning through a variety of lenses, both qualitative and quantitative. From a large body of evidence collected at both the high school and college levels, we are finding that there are patterns in the way students develop understanding. As described in A Framework for K-12 Science Education (National Research Council [NRC], 2011), which synthesizes existing research to provide a coherent definition for the field of science education, learning progressions are developmental progressions “designed to help students build on and revise their knowledge and abilities … with the goal of guiding student knowledge toward a more scientifically based understanding.” Like other researchers working on learning progressions, our goal in developing the ChemQuery assessment system has been to describe a path of how deep and meaningful understanding in chemistry develops, in order to guide students in their learning. Our purpose was to study how students learn so that, by knowing what they know, we would know how best to help them.

Even though we are focused on chemistry, the lessons we are learning are quite general. Our work has required us to think innovatively about what we want to measure and how to measure it. The task has involved a constant discourse among educators, teachers, students, chemists, and measurement professionals, as well as considerable reflection and revision based on the data we have gathered with our assessment system. As stated in the Framework, “A learning progression provides a map of routes that can be taken to reach that destination” (NRC, 2011). This paper focuses on what we have learned about pathways of student learning from the development of the Perspectives framework within the ChemQuery assessment system.

ChemQuery

ChemQuery is an assessment system that uses a framework of key ideas in chemistry, called the construct, and criterion-referenced analysis using item response theory (IRT) to map student progress. It includes assessment questions, a scoring rubric, question exemplars, and a framework to describe the paths of student understanding that emerge from the analysis. Integral to criterion-referenced measurement is a focus on what is being measured, which is referred to as the construct. The construct captures the intention of the assessment, its purpose, and the context in which it is going to be used (Wilson, 2005). Starting from the construct is also a very different way to begin developing an assessment instrument or the pathway of a learning progression.

As instructors or test developers interested in measuring student performance, we usually start our assessment work by designing the questions or tasks that we want students to be able to perform. We collect information focused on what we want to assess rather than on narrating the development of student understanding. In contrast, when using a construct, student responses are analyzed and scored based not only on the specific targets we want to evaluate, but also on how we are going to help students get there. The construct allows us to acknowledge intentionally that what we teach, despite our best efforts, is not what students learn. It also allows us to narrate the development of understanding that occurs as students “learn” over the course of instruction, by providing a frame of reference for talking about the degrees and kinds of learning that actually take place. The variables of the construct provide both a visible representation of the level of understanding of the “big” ideas of a discipline and a trace of how students develop increasingly complex understanding of core concepts over time (NRC, 2011). This information is important both for students as they learn and for teachers as they try to figure out how to build upon current levels of student understanding.

Our approach to research on student understanding allowed us to use quantitative measures to test and refine a hypothesis of how learning develops, and to relate that hypothesis to qualitative evidence about learning. Integral to the task of developing a construct-referenced measurement tool are the iterations in the design process that allow the construct being measured to be tested and refined. Thus, both the account of how the Perspectives construct was developed and the results on student learning obtained through the ChemQuery assessment system provide useful information about how student understanding progresses.

The Perspectives of Chemistry as a “construct”

Describing what we want students to learn and what successful learning patterns look like is always a challenge. One approach to this task is to capture not only what mastery should look like, but also the patterns of progress in student understanding toward such mastery. In science education, this model of progress is described as a learning progression; in measurement theory, such progress is the “construct,” intended to express what we want to measure.

Often in educational and psychological research, the term “construct” refers to any variable that cannot be directly observed, such as intelligence or motivation, and thus needs to be measured through indirect methods. We might think that student understanding of key ideas in a discipline such as chemistry could be measured directly and would not need to involve constructs. However, understanding students’ developing perspective on the atomic view of matter, or the increasing complexity and sophistication of their problem-solving strategies surrounding reactivity, may involve exploring latent cognitive processes that are not directly observable and need to be inferred. For example, how students use a chemical model may be a latent variable for which both correct and incorrect answers to particular assessment questions can serve as markers or indicators of understanding.

Using the language of constructs reminds us that, when assessing student understanding, we are often attempting to measure thinking and reasoning patterns that are not directly manifested: we do not “open” the brain and “see” reasoning. Instead, observables such as answers to questions and tasks give us information about what that reasoning, or latent construct, may be. This is true whether we think of reasoning in terms of knowledge structures, knowledge states, mental models, normative and alternative conceptions, higher-order reasoning, or any of a number of other possible cognitive representations that are essentially latent. None of these mental constructs can be observed directly; they are accessible only through indirect manifestations that must be interpreted.

The Perspectives construct developed for this project attempted to specify some important aspects of conceptual understanding in chemistry. The construct, or larger framework of organizing ideas, helped us to a) theoretically structure and describe the relationships among the pieces of knowledge, and b) begin to describe how students learn.

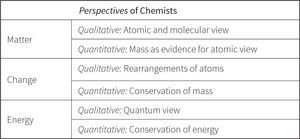

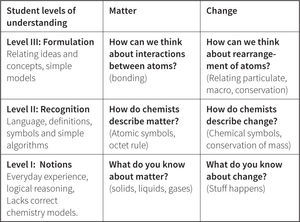

The Perspectives of Chemists: A Tale of Development

Design of the ChemQuery Assessment System entailed developing a model to organize the overarching ideas of the discipline of chemistry into a framework that is described as the Perspectives of Chemists. The Perspectives is a multidimensional construct map, which describes a hierarchy of conceptual understanding of chemistry ranging from novice levels to expert levels along proposed progress variables. Each variable in the Perspectives construct is then scaled to describe a proposed progression of how students learn chemistry over the course of instruction. The resulting Perspectives framework makes explicit the relationship between domain knowledge and how students make sense of ideas as they learn chemistry.

The Perspectives framework is designed to measure both the acquisition of domain knowledge and students’ ability to reason with this new knowledge as their understanding develops toward more correct and complete explanatory models in chemistry. Specifically, the framework is intended to describe how students learn and reason using models of chemistry to predict and explain phenomena. Therefore, one axis represents domain knowledge and the other represents the perceived progression of explanatory reasoning as students gain understanding in chemistry. The emphasis is on understanding, thinking, and reasoning with chemistry in ways that relate basic concepts (ideas, facts, and models) to analytical methods (reasoning). Simply stated, the aim of the organizing framework is to capture how students learn to reason like chemists as they develop normative explanatory models of understanding in chemistry.

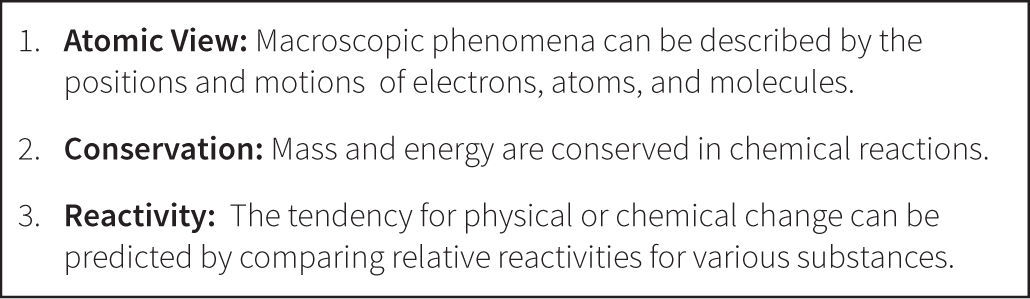

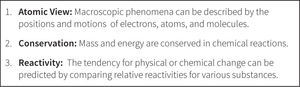

Since its initial conception, the Perspectives framework has undergone considerable revision. The development of the construct began as an attempt to inform curriculum design and to provide an assessment system for the Living by Chemistry (LBC) high school chemistry program developed at the University of California at Berkeley (Stacy, Coonrod, & Claesgens, 2009). The LBC team developed three guiding Principles of Chemistry to organize the design of the subject matter, as shown in Figure 1. The Principles (a particulate view of matter, conservation of mass and energy, and reactivity) were considered the “big ideas” in the discipline, or some of the primary models that chemists use to understand the field.

To serve as a framework for the ChemQuery assessment system, each of the proposed Principles in Figure 1 was used to build a construct by defining a succession of increasingly sophisticated ideas that described how student understanding of the big idea might develop. Collection of informant data and pilot testing then showed whether the construct held up under scrutiny or needed to be adjusted to better reflect the empirical data. The story of the development of the Perspectives framework is summarized in the following sections.

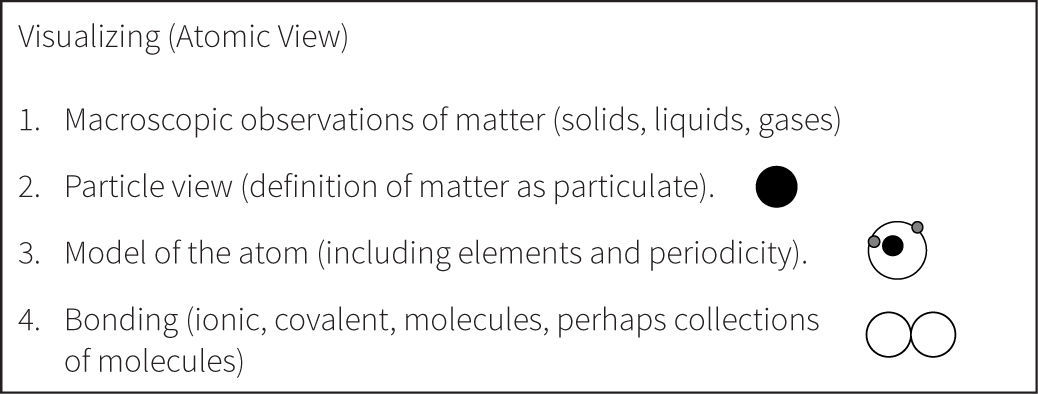

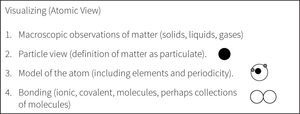

Towards an Atomic View

In the first iteration of the construct associated with Principle 1 in Figure 1, we assumed that student understanding would mirror the historical development of the particulate theory of matter. Research on student understanding finds that, like early chemists, students describe matter “based on immediate perceptual clues” (Krnel, Watson, & Glazar, 1998) and maintain a continuous rather than a particulate view of matter (Driver et al., 1994; Krnel et al., 1998). Thus, as summarized in Figure 2, we assumed that novice students would have a macroscopic, continuous view of matter; as they progressed in their studies, they would come to understand atoms as particles, and then that these particles contain protons, neutrons, and electrons. Finally, they would understand how atoms bond together. However, the data we collected revealed a distinctly different progression of student understanding.

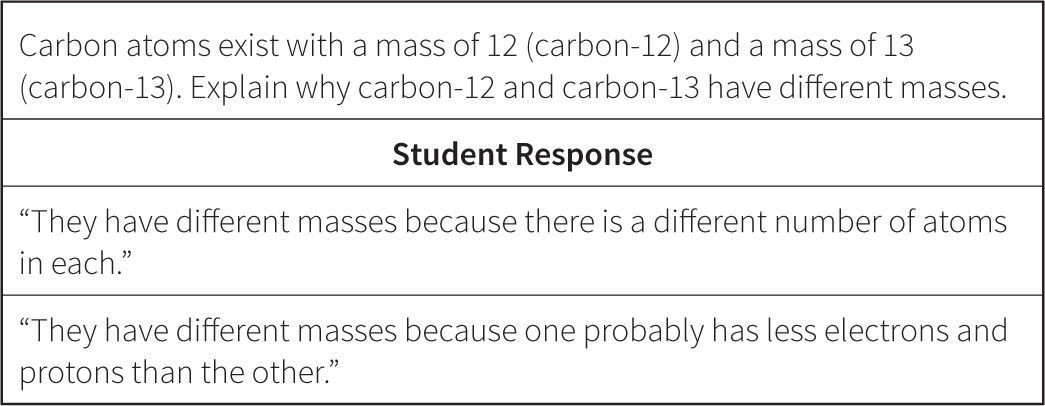

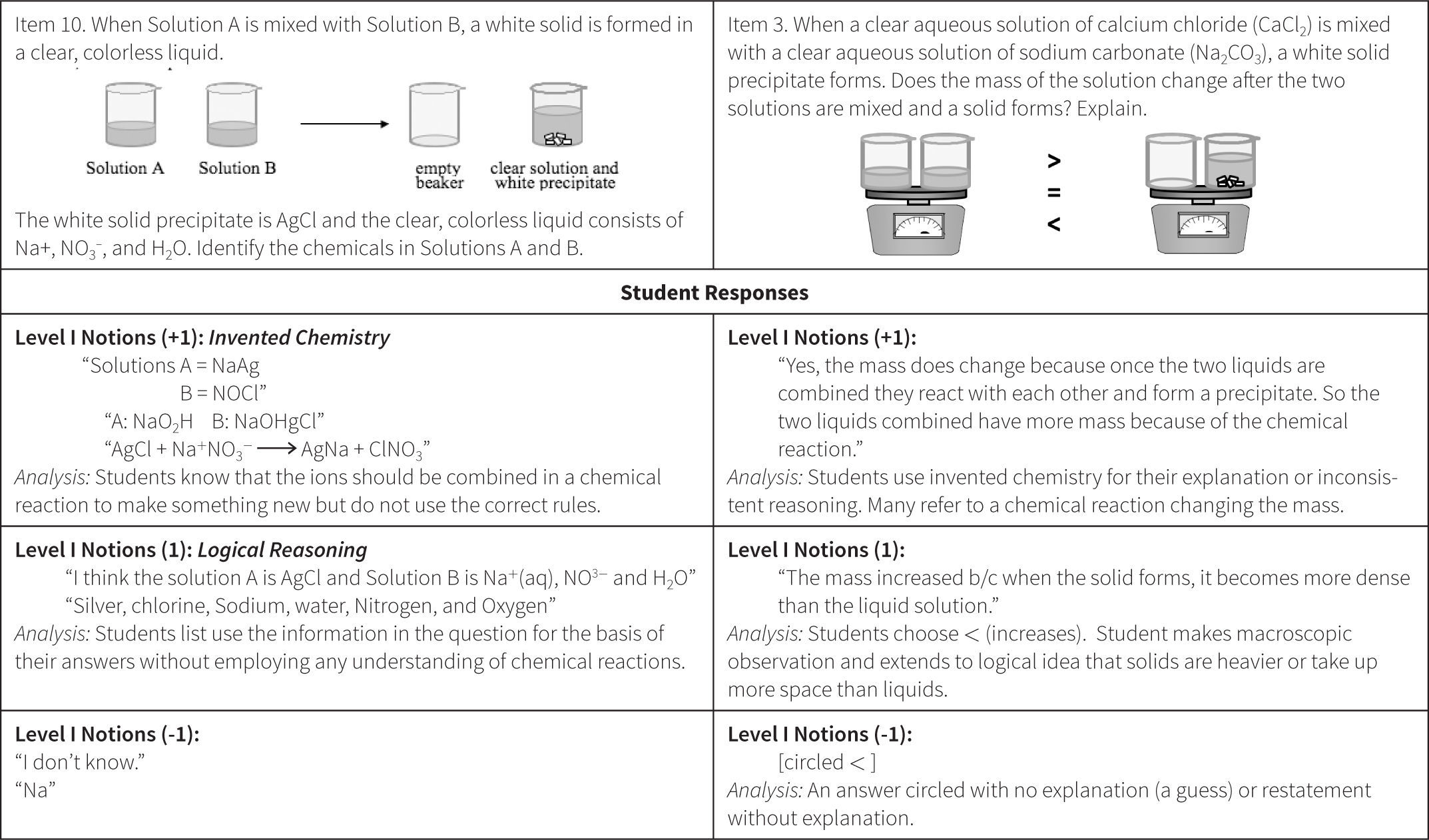

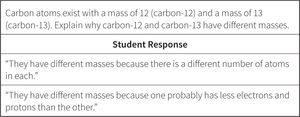

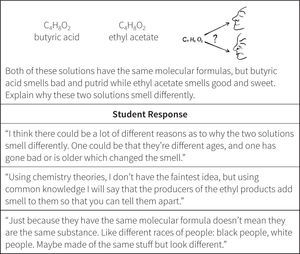

The Atomic View progress variable as initially proposed did not allow us to score effectively the answers of students who could only use simple rules or “name” something in their responses but could not successfully apply this knowledge. For example, in their answers to questions such as the one included in Figure 3, many students used the terms atom, proton, and electron without understanding their meaning. Such answers could simply have been scored as wrong or unsuccessful, but that would have ignored interesting information that seemed to reveal a variety of levels of student ability prior to achieving a traditional level of “success” on an item. These results led us to revise our proposed model of student understanding of the atomic view of matter in the Perspectives construct map.

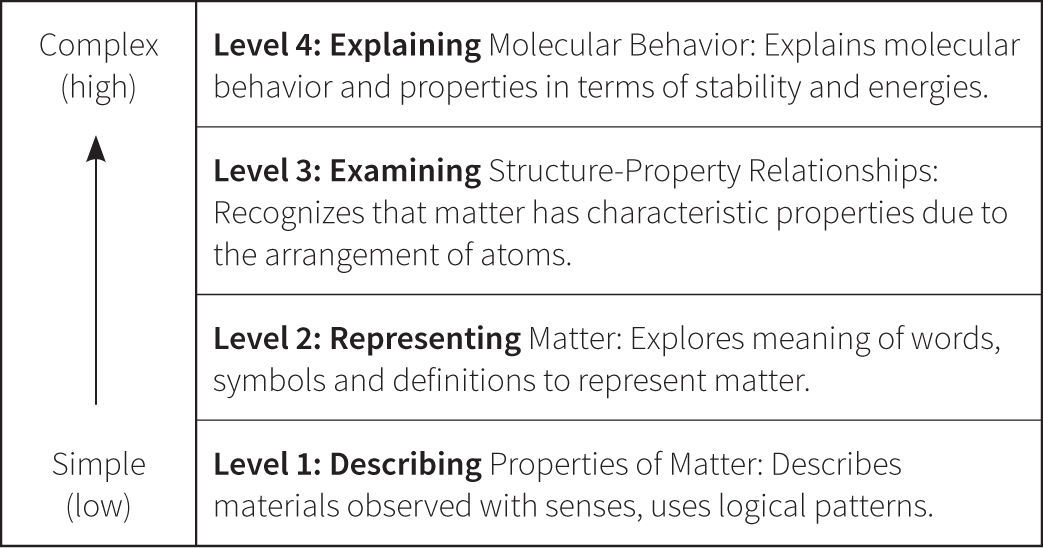

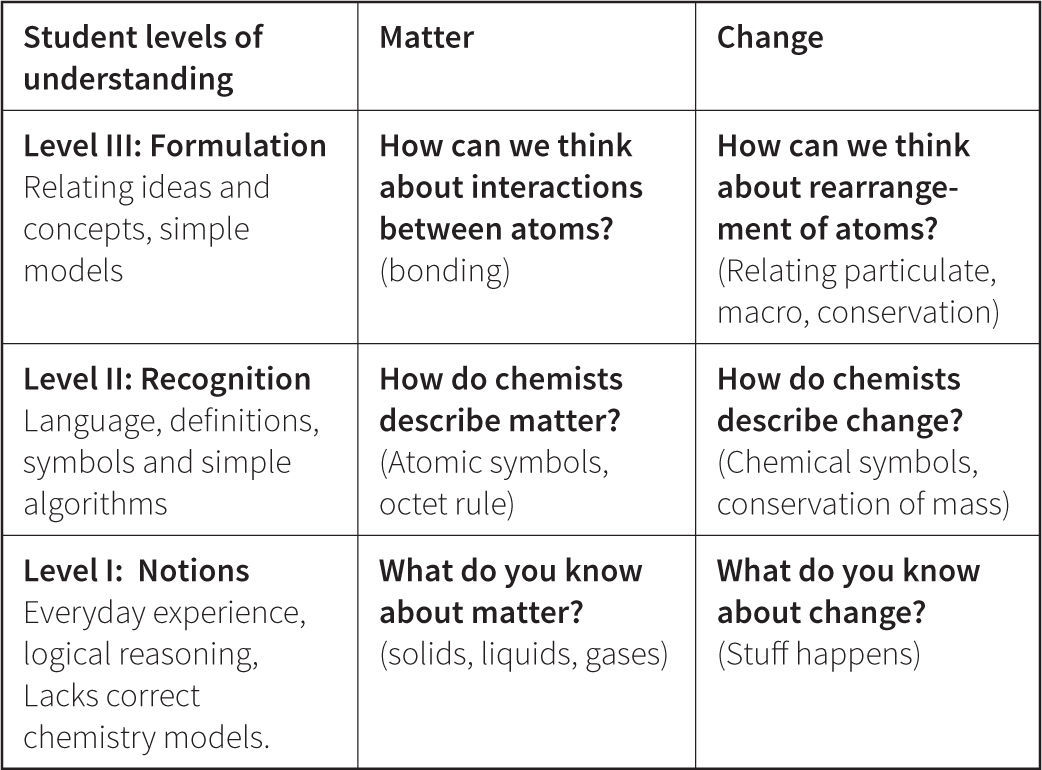

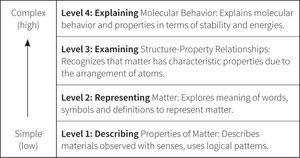

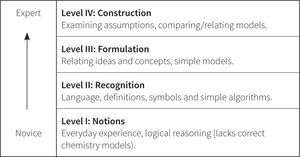

From Principles to Perspectives of Chemists

Based on the results of our pilot testing, our Principles of Chemistry approach shifted to the Perspectives of Chemists, to emphasize how students think and reason with chemistry knowledge that includes a particulate view of matter. The “new” construct map emphasized “habits of mind,” or process skills associated with scientific inquiry, such as observing, reasoning, modeling, and explaining (AAAS, 1993; NRC, 1996). In this iteration, the highest level of student understanding of chemistry was “explanation” of the properties of matter, as shown in Figure 4. At the first level of this new construct, students could describe or observe the world around them; at the next level, they could represent the world with “normative” chemistry terms; and as they progressed in their learning, they would eventually be able to explain the properties of matter.

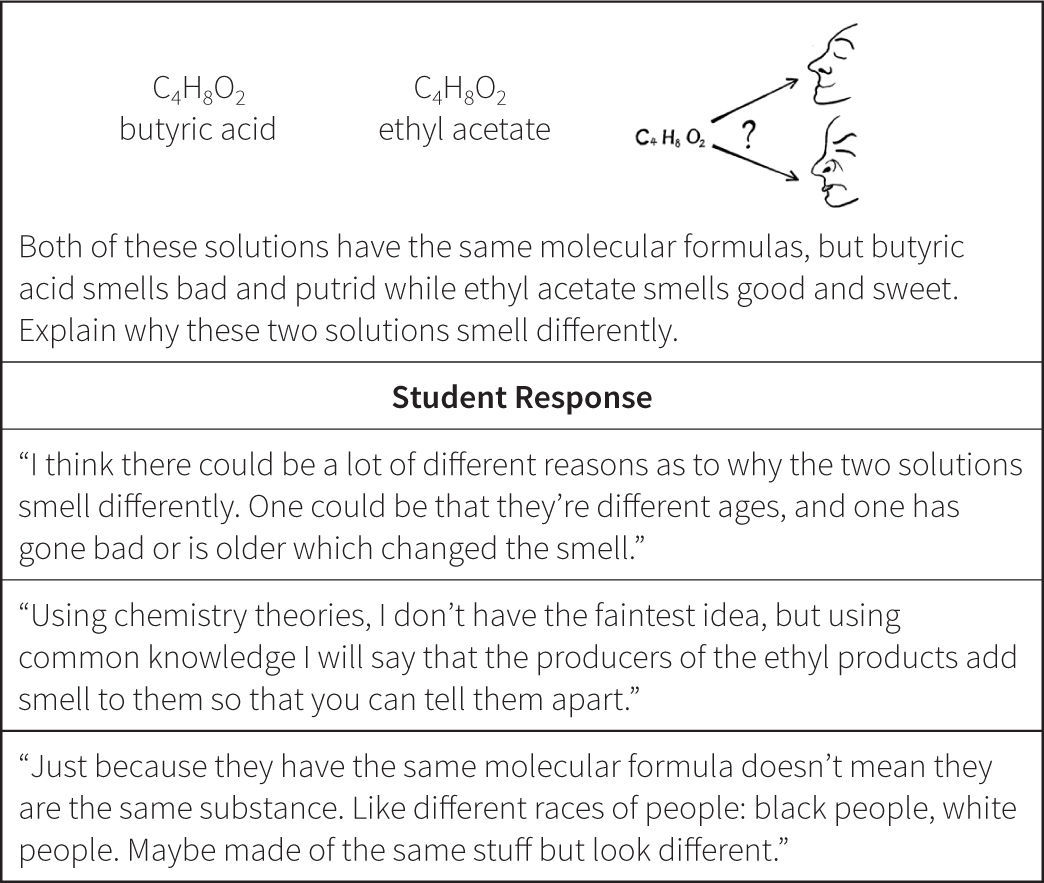

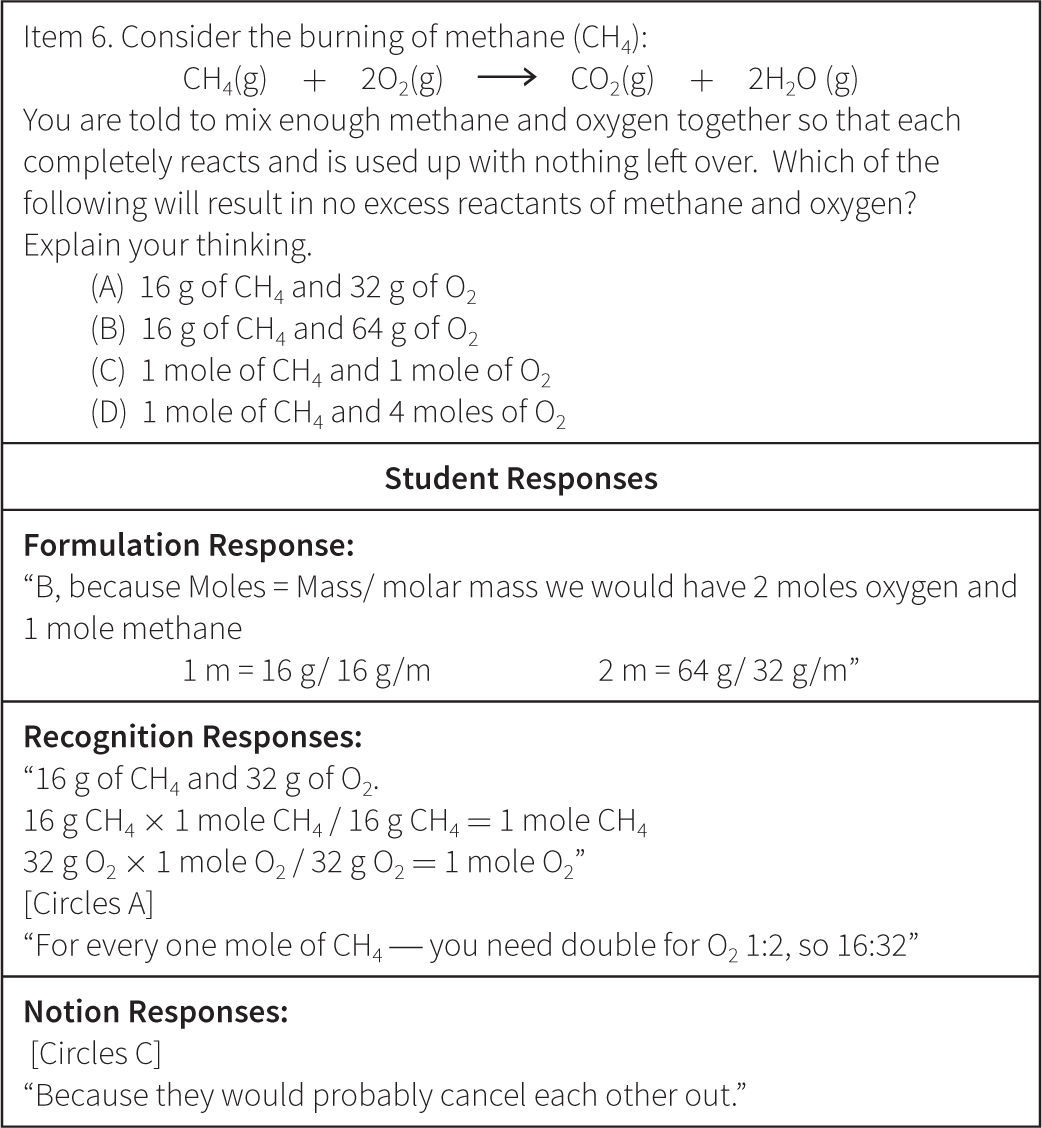

Testing of the framework summarized in Figure 4 again revealed failures to capture the complexity of student understanding. For example, the construct did not allow for the possibility of good reasoning strategies without correct domain knowledge, which was highly evident in some student responses. As illustrated in Figure 5, students could reason at different levels of sophistication, but it was the depth of their chemistry understanding that affected the quality of their answers. Metz (1995) argues that the limits to understanding that novice learners exhibit are due to a lack of domain knowledge rather than to limits in their general reasoning ability. ChemQuery findings thus far concur. The issue is not that novices cannot reason, but that they do not reason like chemists, or with the domain knowledge of chemists (Metz, 1995; Samarapungavan & Robinson, 2001). This is especially significant in chemistry, where students develop few particulate model ideas from experience and are more likely to rely on instruction. Based on the data, the construct was revised once again.

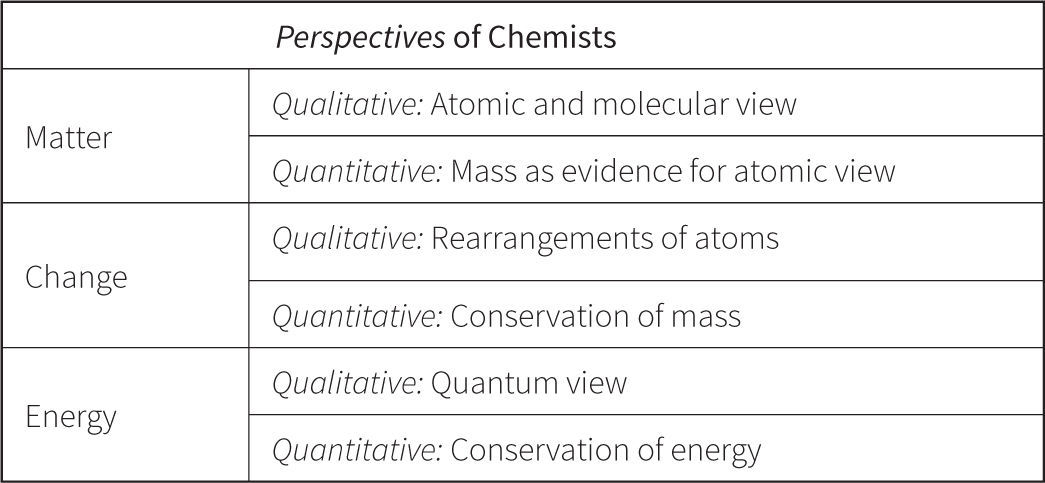

Quantitative vs. Qualitative Understanding

In the next iteration of the Perspectives framework, qualitative versus quantitative understanding was emphasized with the hypothesis that these were two distinct variables or types of understanding about matter, change, and energy (see Figure 6). Evidence of these distinct types of understanding can be found in studies where students who can answer traditional quantitative problems often do not show sound conceptual understanding (Bodner, 1991; McClosky, 1983).

With this revision of our framework, the big idea of Change was chosen as the next progress variable to develop.

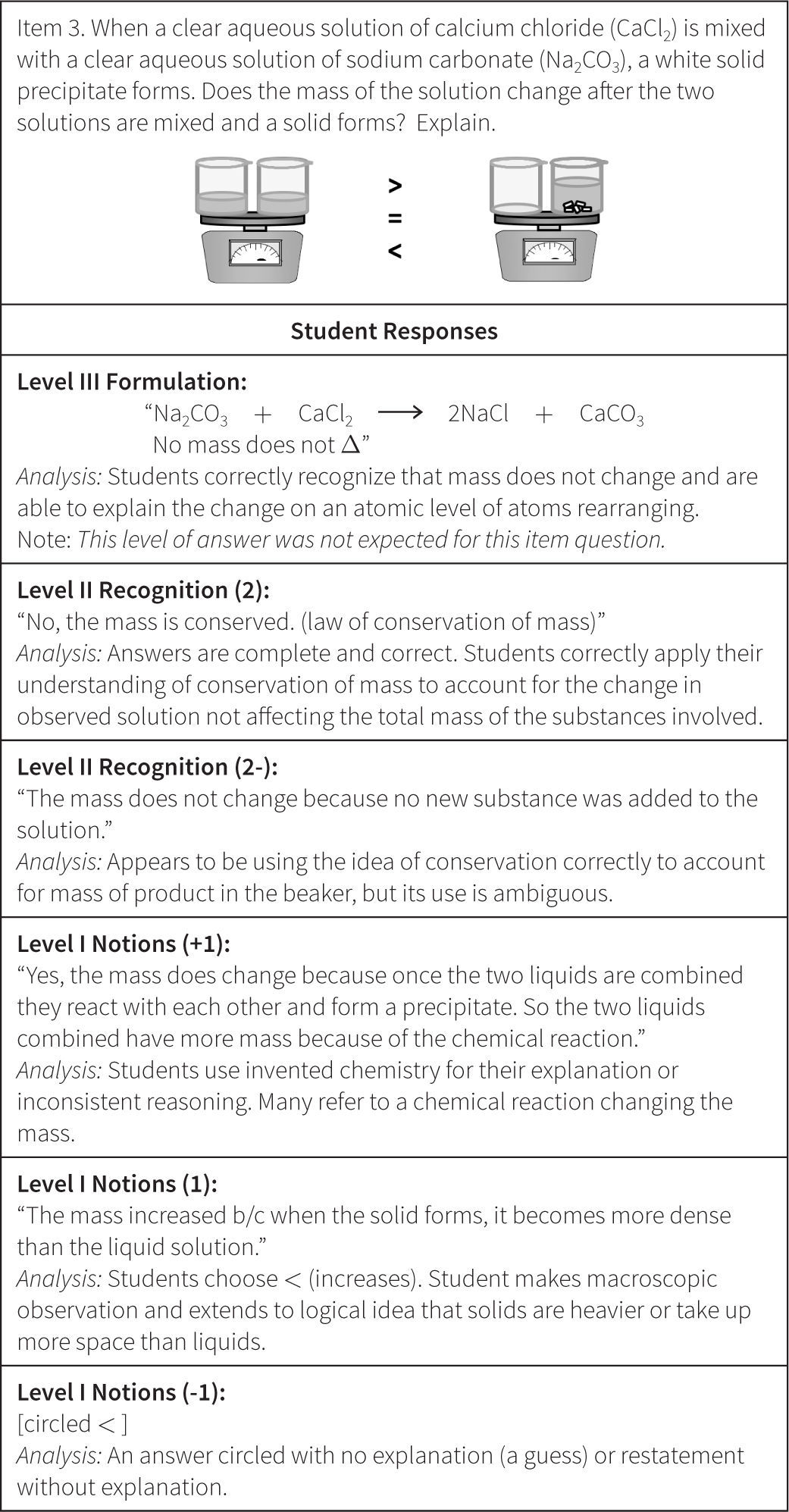

Almost immediately, we encountered problems with item development. As originally conceived, conservation (Quantitative) and reactivity (Qualitative) were treated as separate variables. However, chemical change and conservation did not seem to be distinct concepts when writing assessment questions and could not readily be decoupled as this version of Perspectives implied. We were unable to disentangle a qualitative understanding of change, i.e. the macroscopic understanding that “stuff happens,” from a quantitative understanding, as represented by the rearrangement of atoms and measured in the laboratory.

It became apparent that almost all questions in chemistry regarding chemical reactions and their products required both a conceptual understanding of conservation (the idea that matter cannot be created or destroyed) and a quantitative view of counting atoms in chemical reactions. As reported in the research literature, students can memorize a list of chemical versus physical changes but do not connect the rearrangement of atoms to the new substance observed (Stavridou & Solomonidou, 1998). Research also shows that students can balance equations without thinking about conservation of mass (Johnson, 2002; Yarroch, 1985). This was not the type of thinking that we felt supported conceptual understanding of chemical change. Because of this realization in the item development phase, the Perspectives framework required further changes.

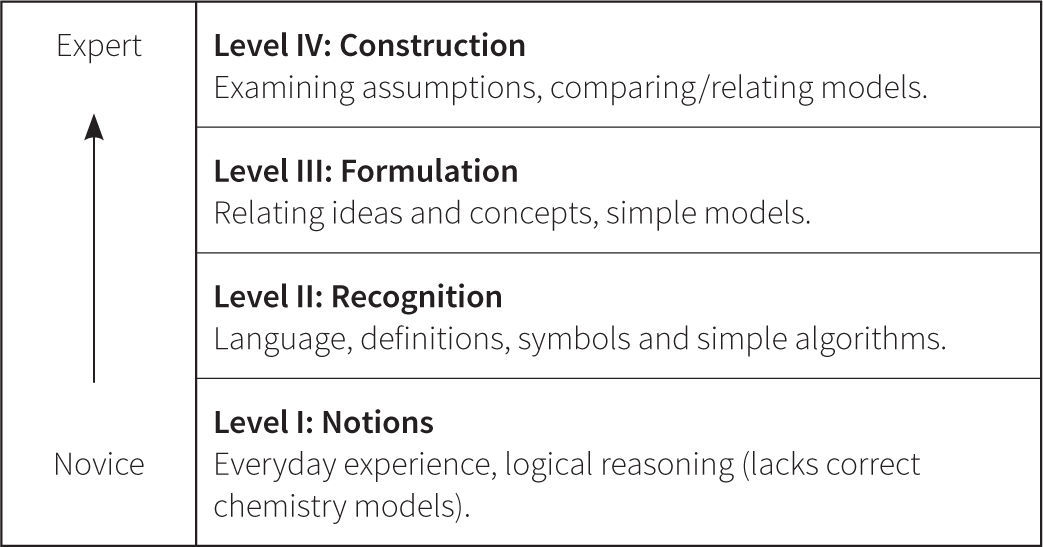

Patterns in the Data

The next iteration of the Perspectives framework was based on patterns in student responses that emerged both qualitatively and quantitatively. The data came from two large data sets. Subjects included more than 400 high school students and 116 university students enrolled in general chemistry. Mixed methods were used, including interviews, coding, and statistical analysis. Students took pre- and posttests administered as linked quizzes on multiple forms. The initial patterns observed qualitatively in the student responses supported the progression of understanding from novice to expert shown in Figure 7. Further statistical analysis provided even more insight into the pathway of understanding that emerged.

Using the proposed Perspectives framework, patterns in student responses were analyzed with ACER ConQuest 3.0 software to generate a Wright map of student scores based on Rasch partial credit models from IRT. Item response models are statistical models that express the probability of an event, such as a correct response to an assessment question or task, in terms of estimates of a person’s ability and the difficulty of the question or task. Specifically, the scores for a set of student responses and the questions are calibrated relative to one another on the same scale (a “logit,” or log-odds, scale), and their fit, validity, and reliability are estimated (Wilson, 2005). This common scale is displayed as the Wright map generated in the statistical analysis by software such as ConQuest.
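
The item response models mentioned above can be written down compactly. In the dichotomous Rasch model, the log-odds of a correct response equal θ − δ, the difference between a person’s location θ and an item’s difficulty δ, which is why both are reported in logits. The partial credit model extends this to items scored in several ordered categories: with step difficulties δ_1 … δ_m, the probability of a score of x is proportional to the exponential of the sum of (θ − δ_k) for k = 1 … x. The Python sketch below computes these probabilities directly. It illustrates the models only, not how ConQuest estimates their parameters, and the function names and example values are hypothetical.

```python
import math
from typing import List, Sequence


def pcm_probabilities(theta: float, step_difficulties: Sequence[float]) -> List[float]:
    """Category probabilities P(X = 0), ..., P(X = m) under the Rasch partial credit model.

    theta: person location (ability) in logits.
    step_difficulties: step parameters delta_1..delta_m of one item, in logits.
    """
    # The log-numerator for category x is the cumulative sum of (theta - delta_k)
    # over k = 1..x; for category 0 it is the empty sum, 0.
    log_numerators = [0.0]
    for delta in step_difficulties:
        log_numerators.append(log_numerators[-1] + (theta - delta))
    # Subtract the largest term before exponentiating to avoid overflow.
    largest = max(log_numerators)
    weights = [math.exp(v - largest) for v in log_numerators]
    total = sum(weights)
    return [w / total for w in weights]


def rasch_probability(theta: float, difficulty: float) -> float:
    """Dichotomous Rasch model: P(correct) = 1 / (1 + exp(-(theta - difficulty)))."""
    return 1.0 / (1.0 + math.exp(-(theta - difficulty)))


# Hypothetical values: a student at 0.5 logits and a three-step item.
print(pcm_probabilities(0.5, [-1.0, 0.0, 1.2]))
print(rasch_probability(1.0, 1.0))  # -> 0.5 (ability equals difficulty)
```

With a single step, `pcm_probabilities` reduces to `rasch_probability`, which makes a convenient sanity check on the sketch.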

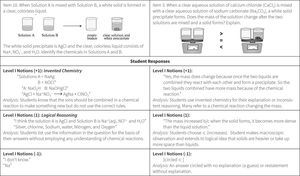

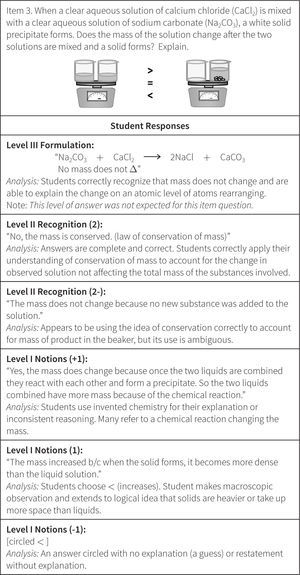

The Wright map provides the locations of both students and items, offering a description of student understanding based on the relative positions of items and individual respondents. These scores are then matched against the ChemQuery Perspectives framework to describe levels of success in chemistry along each variable from novice to expert. Based on the statistical analysis, the proposed progression of student understanding depicted in Figure 7 was judged to be valid (Claesgens et al., 2009), and the data also yielded finer-grained insights into how student understanding progresses within Level I Notions. In particular, initial Level I Notions responses were found to fall into three general categories, which preliminary IRT analysis could scale from low (1−) to high (1+). The first category of answers observed (scored 1−) consisted of guesses or nonsensical answers (only blanks were scored as zero). The second type of answer (scored 1) employed no chemistry but exhibited logical patterning and comparative reasoning; these responses took into account observations and information present in the question stem. The third category (scored 1+) sought to use chemistry but skewed the answer in an entirely incorrect direction. Examples of student responses scored at these three Level I sublevels are shown in Figure 8.
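
To make the idea of a shared scale concrete, the sketch below prints a crude text Wright map: persons are binned by estimated location and shown as X’s on the left, and items (or item steps) are listed on the right in the same logit bins, so that a student sitting level with a dichotomous item has roughly even odds of success on it. It assumes the locations have already been estimated (for example, by ConQuest); the function name and bin width are hypothetical choices, and the numbers are invented for illustration rather than taken from ChemQuery.

```python
import math
from collections import defaultdict


def wright_map(person_locations, item_locations, bin_width=0.5):
    """Return the lines of a plain-text Wright map, highest logits first.

    person_locations: iterable of person ability estimates (logits).
    item_locations: dict mapping an item or step label to its difficulty (logits).
    Each row covers one bin of width `bin_width`; the row label is the bin's
    lower edge. Persons appear as 'X' on the left, item labels on the right.
    """
    def bin_of(x):
        return math.floor(x / bin_width)

    person_counts = defaultdict(int)
    for theta in person_locations:
        person_counts[bin_of(theta)] += 1

    item_labels = defaultdict(list)
    for label, delta in item_locations.items():
        item_labels[bin_of(delta)].append(label)

    occupied = list(person_counts) + list(item_labels)
    lines = []
    for b in range(max(occupied), min(occupied) - 1, -1):
        persons = "X" * person_counts.get(b, 0)
        items = " ".join(sorted(item_labels.get(b, [])))
        lines.append(f"{b * bin_width:6.2f} {persons:>20} | {items}")
    return lines


# Hypothetical locations for illustration only (not ChemQuery estimates).
students = [-1.2, -0.3, 0.1, 0.4, 1.6]
steps = {"Q1.1": -0.8, "Q1.2": 0.9}
for line in wright_map(students, steps):
    print(line)
```

Binning keeps the display readable; real Wright maps from measurement software carry more detail, but the reading is the same: items low on the map are easy for most of the students above them.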

The ordering of these categories, all of which are incorrect answers, is interesting. Admittedly, when scoring these student responses, our inclination was to score the invented chemistry answers (1+) lower than the responses that exhibited logical reasoning (1), but the statistical analysis told a different story. The IRT analysis revealed that students whose answers primarily reflected a 1+ (invented chemistry) strategy had a significantly higher probability of answering some quiz questions correctly than students whose answers primarily showed sublevel 1 reasoning (sound logical reasoning but no use of chemistry models) or 1− reasoning (guessing). The so-called “invented” ideas are the beginnings of normative chemistry thinking and represent the kinds of prior knowledge and real-world experiential reasoning that students can bring to the table. Reasoning with “invented” chemistry ideas, though often leading to incorrect answers, does appear to bring value to the development of understanding: students who reason with these models are significantly more likely to produce some correct answers on other questions and tasks than students who employ only prior reasoning and make no attempt, even an incorrect one, at normative chemistry.
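
One simple way to look for the kind of evidence described above is to compare, for each scored category of an item, the average estimated location of the students whose responses fell in that category; if the proposed order 1−, 1, 1+ holds, those averages should increase in that order. The sketch below is a simplified check of that idea, assuming person locations have already been estimated (for example, from a partial credit model fit). It is not the analysis reported in Claesgens et al. (2009), the helper names are hypothetical, and the numbers are invented for illustration.

```python
from statistics import mean


def category_means(responses):
    """Mean person location (logits) of the respondents in each scored category.

    responses: iterable of (category_label, theta) pairs for a single item,
    where theta is the respondent's estimated location on the logit scale.
    """
    groups = {}
    for label, theta in responses:
        groups.setdefault(label, []).append(theta)
    return {label: mean(thetas) for label, thetas in groups.items()}


def is_ordered(means, proposed_order):
    """True if category means strictly increase along the proposed order.

    Categories with no respondents are skipped.
    """
    values = [means[label] for label in proposed_order if label in means]
    return all(low < high for low, high in zip(values, values[1:]))


# Hypothetical respondents for illustration only (not ChemQuery data).
item_responses = [("1-", -1.4), ("1-", -1.0), ("1", -0.6),
                  ("1", -0.2), ("1+", 0.1), ("1+", 0.5)]
means = category_means(item_responses)
print(is_ordered(means, ["1-", "1", "1+"]))  # -> True
print(is_ordered(means, ["1-", "1+", "1"]))  # -> False (the initial scoring intuition)
```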

The Perspectives of Chemistry Framework

As shown in Figure 9, we have developed scales to describe progression in student understanding along each of the major threads in our Perspectives framework. The different levels within the proposed variables are constructed such that more complex and sophisticated responses are associated with higher scores. Students move from describing their initial ideas in Level I (Notions), to relating the language of chemists to their view of the world in Level II (Recognition), to formulating connections between several ideas in Level III (Formulation), to fully developing models in Level IV (Construction), to asking and researching new scientific questions in Level V (Generation). Advancement through the levels is designed to be cumulative. In other words, students at Level II (Recognition) are expected to be able to describe matter accurately and use chemical symbolism to represent it. This is essential before they can begin to relate descriptions of matter with an atomic scale view in Level III (Formulation).
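
Because the levels are ordered and cumulative, and Level I is further split into the sublevels described earlier, scores on a single variable can be represented as one ordered set of consecutive integer categories, the kind of scoring a partial credit analysis works with. The sketch below is a hypothetical encoding, assuming one score per response; the class name, rater labels, and integer values are ours, and in practice a given item would typically use only the categories its responses actually reach.

```python
from enum import IntEnum


class PerspectivesScore(IntEnum):
    """Hypothetical ordered score codes for one Perspectives variable.

    The integers are illustrative; they encode only the ordering
    blank < 1- < 1 < 1+ < Level II < ... < Level V.
    """
    BLANK = 0          # no response
    NOTIONS_MINUS = 1  # Level I, 1-: guess or nonsensical answer
    NOTIONS = 2        # Level I, 1: logical reasoning, no chemistry
    NOTIONS_PLUS = 3   # Level I, 1+: attempts chemistry, incorrect direction
    RECOGNITION = 4    # Level II
    FORMULATION = 5    # Level III
    CONSTRUCTION = 6   # Level IV
    GENERATION = 7     # Level V


# Hypothetical rater labels mapped onto the ordered codes.
LABELS = {
    "": PerspectivesScore.BLANK,
    "1-": PerspectivesScore.NOTIONS_MINUS,
    "1": PerspectivesScore.NOTIONS,
    "1+": PerspectivesScore.NOTIONS_PLUS,
    "II": PerspectivesScore.RECOGNITION,
    "III": PerspectivesScore.FORMULATION,
    "IV": PerspectivesScore.CONSTRUCTION,
    "V": PerspectivesScore.GENERATION,
}


def recode(labels):
    """Convert rater labels to consecutive integer scores (0..7)."""
    return [int(LABELS[label]) for label in labels]


def has_reached(score, level):
    """Levels are cumulative: reaching `level` implies every lower level."""
    return score >= level


print(recode(["", "1-", "1+", "II"]))  # -> [0, 1, 3, 4]
```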

The proposed levels in Figure 9 seem to be supported by misconception research in chemistry education showing that students hold onto their experiences and then superficially add new knowledge before integrating it into deeper understanding (Driver et al., 1994; Gabel & Samuel, 1987; Hesse & Anderson, 1992; Krnel, Watson, & Glazar, 1998; Mulford & Robinson, 2002; NRC 2005, 1996; Niaz & Lawson, 1985; Samarapungavan & Robinson, 2001; Sawrey, 1990; Smith, Wiser, Anderson, & Krajcik, 2006; Taber, 2000; Talanquer, 2006). The proposed framework was partially influenced by the SOLO taxonomy (Biggs & Collis, 1982), which helped us capture some of the trends seen in the student data. Perhaps the most influential idea from the SOLO taxonomy was the construction of levels of understanding from a uni-structural level, focusing on one aspect of information, through a multi-structural level, where students relate multiple aspects of the available information, to a relational level, in which multiple pieces of information are integrated. For the purposes of the Perspectives construct, this interpretation was applied to how individuals reason as they gain understanding within the domain of chemistry. Therefore, based on the results of the IRT analysis, the resulting Perspectives framework became a synthesis of content expert knowledge, empirical evidence from the data gathered, the SOLO taxonomy described in the measurement field, and misconception research from the chemistry education literature.

Specific examples of how the progression of understanding summarized in Figure 9 manifests in student responses are shown in Figures 10 and 11. In Level I Notions, students can articulate their ideas about matter and use prior experiences, observations, logical reasoning, and knowledge to provide evidence for their ideas. The focus is largely on macroscopic (not particulate) descriptions of matter, since students at this level rarely have particulate models to share. In Level II Recognition, students begin to explore the language and specific symbols used by chemists to describe matter. Their ways of thinking about and classifying matter are limited to relating one idea to another at a simplistic level of understanding, and include both particulate and macroscopic ideas. In Level III Formulation, students are developing a more coherent understanding that matter is made of particles and that the arrangements of these particles relate to the properties of matter. Their definitions are accurate, but their understanding is not fully developed, so their reasoning is often limited to causal rather than explanatory mechanisms. In interpreting new situations, students may over-generalize as they try to relate multiple ideas and construct formulas. Very few students in our samples demonstrated reasoning at Levels IV and V. In Level IV Construction, we speculate that students are able to reason using accurate and appropriate chemistry models in their explanations and understand the assumptions used to construct the models. In Level V Generation, students are becoming experts as they gain proficiency in generating new understanding of complex systems through the development of new instruments and new experiments. We do not expect to see Level V until graduate school.

Summary

As our data show, students often hold onto prior beliefs in chemistry and develop “incorrect” answers on the pathway to understanding. However, these results do not contradict the concept of learning progressions. The Framework (NRC, 2011) describes learning as a developmental progression that “… is designed to help children continually build on and revise their knowledge and abilities, starting from their curiosity about what they see around them and their initial conceptions about how the world works. The goal is to guide their knowledge toward a more scientifically based and coherent view of the natural sciences and engineering, as well as of the ways in which they are pursued and their results can be used.”

Therefore, a better understanding of both the process and the progress of learning in science may help us characterize what understandings students actually develop, and what conceptual tools may help them bridge to new and more powerful ways of thinking.

Overall, three major insights seem to have emerged from the development of the Perspectives framework. The first is how logical and resourceful students are in their attempts to answer questions when they do not know the chemistry. Second, the “invented” chemistry (Level I Notions, score 1+) may be a key path to student understanding. Even though these answers may appear completely wrong, this transitional route seems to demonstrate students’ need to use the language of chemistry as they are being introduced to simple normative chemistry models. In order to “speak the language of chemistry,” students need the opportunity to explore ideas using chemistry words and symbols before they become fully able to reason meaningfully with them. For instance, in exploring patterns in the periodic table, students may know from real-world experience that silver, gold, and copper are all metals. But when asked to talk about what properties these three metals share, students may focus directly on the symbolic language, noting, for instance, that Ag, Au, and Cu all include the vowel A or U, rather than using what they know about these substances. It is important to recognize that chemical symbolism is a new type of language, and students should be allowed time to work on decoding its symbols, looking for patterns that help them learn to “speak” the new tongue.

Finally, our data indicate that the move from Notions to Recognition is a critical shift in building a foundational understanding of chemistry. Reaching Level II Recognition seems to provide students with a conceptual foundation on which correct understanding of models can begin to build. Students who remain at the Notions level are unable to knit ideas together and build the connections that allow them to understand chemistry models, or to use them as conceptual tools in diverse tasks and activities. Lacking such a foundation to build on, these students can be expected to be less successful at developing meaningful understanding.

The insights gained from our work have greatly influenced the development of the Living by Chemistry curriculum (Stacy et al., 2009), as well as course instruction at the university level at our institutions. Our research shows that a generalizable conceptual framework can be created, calibrated with latent variable methods, and used to track student understanding over time. In our approach, student learning was conceived not simply as a matter of acquiring more knowledge and skills, but as progress toward higher levels of competence as new knowledge is linked to existing knowledge and deeper understandings develop from earlier ones. Moreover, this paper illustrates ways in which criterion-referenced assessments can help us think about what students actually know and how to help them learn. This is a much more quantitative description of understanding than is typically encountered in work on learning progressions, but we feel it adds to discussions about how best to explore and support student learning.

This material is based on work supported by the National Science Foundation under Grant No. DUE: 0125651. The authors thank Mark Wilson, Rebecca Krystyniak, Sheryl Mebane, Nathaniel Brown, Karen Cheng, Michelle Douskey and Karen Draney for their assistance with instrument and framework development, data collection, scoring, and discussion of student learning patterns.