The diversity-validity dilemma has been a dominant theme in personnel selection research and practice. As some of the most valid selection instruments display large ethnic performance differences, scientists attempt to develop strategies that reduce ethnic subgroup differences in selection performance, while simultaneously maintaining criterion-related validity. This paper provides an evidence-based overview of the effectiveness of six strategies for dealing with the diversity-validity dilemma: (1) using 'alternative' cognitive ability measures, (2) employing simulations, (3) using statistical approaches to combine predictor and criterion measures, (4) reducing criterion-irrelevant predictor variance, (5) fostering positive candidate reactions, and (6) providing coaching and opportunity for practice to candidates. Three of these strategies (i.e., employing simulation-based assessments, developing alternative cognitive ability measures, and using statistical procedures) are identified as holding the most promise to alleviate the dilemma. Potential areas in need of future research are discussed.

In personnel selection, some of the most valid selection instruments generally display large ethnic score differences in test performance (Hough, Oswald, & Ployhart, 2001; Ployhart & Holtz, 2008; Sackett, Schmitt, Ellingson, & Kabin, 2001). For example, cognitive ability tests are among the most valid predictors of job performance, but also display the largest ethnic subgroup differences in test performance as compared to other selection instruments. This phenomenon is labeled "the diversity-validity dilemma" in personnel selection (Ployhart & Holtz, 2008; Pyburn, Ployhart, & Kravitz, 2008). The dilemma implies that performance and diversity goals do not always converge in personnel selection, which hinders organizations that aim to employ valid instruments while at the same time achieving acceptable levels of employee diversity. So, increasing diversity by limiting the occurrence of ethnic subgroup differences in selection performance has emerged as a key issue in the agendas of both selection researchers and practitioners across the world. The main objective has been to discover strategies that reduce ethnic subgroup differences in selection performance, while simultaneously maintaining criterion-related validity. Note that subgroup differences are not restricted to White-Black differences in the USA but generally refer to selection performance differences between ethnic majority and ethnic minority members in a given country.

Over the years, various selection strategies have been proposed to diminish ethnic subgroup differences in selection test performance, while ensuring similar levels of criterion-related validity. In 2008, Ployhart and Holtz published an overview article that categorized the available strategies for dealing with the diversity-validity dilemma. They identified five main clusters of strategies: (1) the use of simulations and other predictors that display smaller ethnic subgroup differences as compared to cognitive ability, (2) statistically combining and manipulating scores, (3) reducing criterion-irrelevant test variance, (4) fostering positive test-taker reactions, and (5) providing coaching and practice. Five years after Ployhart and Holtz's review, we pose the following question: Where are we now with regard to strategies for dealing with the diversity-validity dilemma, and where should we go?

The current paper aims to answer this question by updating the research evidence on strategies for dealing with the diversity-validity dilemma. We used the original five-strategy framework as an organizing heuristic for our review (Ployhart & Holtz, 2008). In the end, six broad groups of strategies emerged because, for reasons of clarity, we split Ployhart and Holtz's first strategy (i.e., use of simulations and other predictors that display smaller ethnic subgroup differences as compared to cognitive ability) into two groups, namely (a) the use of alternative cognitive ability measures and (b) the use of simulations. For each strategy, we provide an overview of the research thus far. We end each section with some domain-specific limitations and avenues for future research. Although there may be partial overlap with Ployhart and Holtz's overview, we mainly focused on novel research lines and findings within each category of strategies. A first criterion for inclusion was that the study had to deal with the diversity-validity dilemma or with a strategy to increase diversity or lower ethnic performance differences. To this end, we searched computerized databases (e.g., Web of Science) using a combination of general keywords such as 'selection', 'diversity', 'diversity-validity dilemma', and 'subgroup differences', and keywords that were more closely related to each strategy such as 'perceptions', 'practice', 'training', 'Pareto', etc. A second criterion for inclusion concerned the publication year. Studies that were published after 2007 or that were not yet included in Ployhart and Holtz's summary were given particular attention. Additionally, we searched for unpublished manuscripts and abstracts of recent conference contributions (e.g., Annual Conference of the Society for Industrial and Organizational Psychology).
Our review presents scientists and practitioners with a research-based overview of the current state-of-the-art regarding the diversity-validity dilemma, while at the same time offering a number of evidence-based guidelines for organizations that aim to select a competent as well as ethnically diverse workforce.

Use "Alternative" Cognitive Ability Measures

As jobs evolve to be more complex and challenging, the assessment of cognitive ability continues to gain importance in the selection process (Gatewood, Feild, & Barrick, 2011). However, as noted above, cognitive ability measures have repeatedly been shown to display large ethnic subgroup differences in test performance in US (Hough et al., 2001; Ployhart & Holtz, 2008; Roth, Bevier, Bobko, Switzer, & Tyler, 2001; Sackett et al., 2001) as well as in European contexts (Evers, Te Nijenhuis, & Van der Flier, 2005), which substantially decreases hiring chances for members of ethnic minority groups (i.e., increased potential for adverse impact, which can be defined as less advantageous hiring rates for ethnic minority members as compared to ethnic majority members). Hence, researchers have advised exploring alternative measures of cognitive ability that maintain validity while exhibiting substantially lower subgroup differences in test performance (e.g., Lievens & Reeve, 2012).

A first line of research within this domain has focused on logic-based measurement approaches as alternative measurement formats of cognitive ability (Paullin, Putka, Tsacoumis, & Colberg, 2010). Logic-based measurement instruments require the application of reasoning skills and aim to measure logical thought processes. The Siena Reasoning Test (SRT), which presents applicants with novel and unfamiliar reasoning problems, serves as a promising example of this approach (Yusko, Goldstein, Scherbaum, & Hanges, 2012). The test is timed and consists of 25 or 45 reasoning items. Adequate criterion-related validity (r = .25-.48) and significantly smaller ethnic subgroup differences (d = 0.38) as compared to traditional cognitive ability tests have been observed for the SRT (Yusko et al., 2012).

Despite their promising psychometric characteristics and practical usefulness, questions have also emerged about the effectiveness of logic-based measurement instruments. One concern is that some test items have a rather high verbal load, which makes it difficult to apply the instrument to the selection for lower-level functions or in linguistically diverse groups (e.g., in which group members have different mother tongues). Another concern is that capturing cognitive ability by presenting candidates with logical reasoning tasks may not only change the measurement method: it can also influence the constructs assessed. In fact, logic-based measurement approaches may capture something other than g. As the cognitive load (or g-load) of an instrument has commonly been accepted as one of the most influential drivers of ethnic subgroup differences in test performance (Spearman's hypothesis, Jensen, 1998), a potentially lower g-load of logic-based measurement instruments would explain why they display lower ethnic score differences. Future studies that examine these assumptions are needed to gain insight into the effectiveness of logic-based measurement approaches for cognitive ability.

A second strategy within this category aims to improve the point-to-point correspondence of the cognitive predictor with the criterion (i.e., job performance). In this context, Ackerman and Beier (2012) suggest measuring the result of intellectual investments over time (typical performance, such as job knowledge tests) rather than capturing maximal intellectual capabilities at a given point in time (maximal performance, such as traditional cognitive ability tests). Their argument is based on the fact that maximal prediction only occurs to the extent that predictors and criteria are carefully matched (see Lievens, Buyse, & Sackett, 2005). However, in most cases maximal performance is assessed during the selection stage (e.g., by traditional cognitive ability tests), whereas typical performance is evaluated on the job. Consequently, Ackerman and Beier (2012) proposed moving away from measuring broad cognitive abilities, which are known for displaying substantial ethnic subgroup differences, and relying instead on knowledge tests as predictors. In a similar vein, a number of researchers argue for contextualizing cognitive ability measures. Contextualization refers to the process of adding circumstantial and situational (i.e., contextual) information to items as opposed to employing general and decontextualized items. In the case of personnel selection, this implies working with business-related cognitive items that confront applicants with realistic organizational issues and questions instead of generic items (Hattrup, Schmitt, & Landis, 1992). This approach may reduce implicit cultural assumptions that are often embedded in generalized cognitive items (Brouwers & Van De Vijver, 2012).

Finally, researchers have suggested assessing specific cognitive abilities rather than overall cognitive ability measures when dealing with the diversity-validity dilemma as this often results in small to moderate reductions in ethnic subgroup differences (Hough et al., 2001; Ployhart & Holtz, 2008). In this context, the specific concept "executive functioning", which has received substantial research attention lately, seems particularly interesting (e.g., Huffcutt, Goebl, & Culbertson, 2012). Executive functioning relates to monitoring of events, shifting between tasks, dealing with situational and social parameters, and inhibition of tasks. This concept bears resemblances to the specific cognitive demands that are required for effective job performance.

In sum, the search for alternative measures of cognitive ability as a substitute for or in combination with traditional cognitive ability tests constitutes an important first category of strategies for dealing with the diversity-validity dilemma. Logic-based measurement methods seem a valid alternative measure of cognitive skills, but in order to enhance our understanding of the drivers of ethnic score differences, further research should focus on which characteristics of logic-based instruments cause lower adverse impact. In addition, measuring executive functioning instead of broad cognitive skills, capturing typical versus maximal cognitive performance, and developing contextualized versus decontextualized measures of cognitive ability all hold promise with regard to tackling the diversity-validity dilemma. However, more studies are needed to investigate whether these strategies live up to that promise.

Use Simulations as Additional Selection Procedures

In their review article, Ployhart and Holtz (2008; but see also Hough et al., 2001; Sackett et al., 2001) identified the use of simulation exercises as one of the best strategies to deal with the diversity-validity dilemma. Simulations, such as assessment centers, work samples, and situational judgment tests (SJTs), refer to selection instruments wherein applicants perform exercises that physically and/or psychologically resemble the tasks to be performed on the job (see Lievens & De Soete, 2012 for an overview). Assessment centers and work samples are performance assessments that require applicants to carry out job-related assignments (e.g., drawing up a work schedule, reprimanding an unmotivated employee), whereas SJTs confront applicants with job-related dilemmas (in paper-and-pencil or video-based format) and require them to select the most appropriate response out of a set of predetermined options (Motowidlo, Dunnette, & Carter, 1990).

In terms of ethnic subgroup differences in test performance, meta-analytic research has shown that simulations display lower subgroup differences than cognitive ability tests. For assessment centers, Dean, Bobko, and Roth (2008) demonstrated standardized Black-White subgroup differences of 0.52, with White test-takers systematically obtaining higher scores than Blacks. Roth, Huffcutt, and Bobko (2003) found similar effect sizes for work samples (d = 0.52), whereas other studies found d-values ranging from 0.70 to 0.73 (Bobko, Roth, & Buster, 2005; Roth, Bobko, McFarland, & Buster, 2008). Finally, Whetzel, McDaniel, and Nguyen (2008) noted small to moderate ethnic subgroup differences in SJT performance (d = 0.24-0.38). For nearly all types of simulations, cognitive load (defined as the correlation between test scores on the simulation exercise and cognitive ability test scores, Whetzel et al., 2008) has proven to be the most influential driver of ethnic subgroup differences (for assessment centers: Goldstein, Yusko, Braverman, Smith, & Chung, 1998; Goldstein, Yusko, & Nicolopoulos, 2001; for work samples: Roth et al., 2008; for SJTs: Whetzel et al., 2008).
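The d-values reported above are standardized mean differences between subgroups. As a minimal sketch of how such a value is obtained from raw scores, the following computes d with a pooled standard deviation. The function name `cohens_d` and the score lists are hypothetical and invented for illustration; the cited meta-analyses may apply additional corrections (e.g., for range restriction or unreliability) that are not shown here.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(majority_scores, minority_scores):
    """Standardized mean difference (majority minus minority) in pooled-SD units."""
    n1, n2 = len(majority_scores), len(minority_scores)
    # Pooled within-group variance, weighting each group by its degrees of freedom.
    pooled_var = (
        (n1 - 1) * variance(majority_scores)
        + (n2 - 1) * variance(minority_scores)
    ) / (n1 + n2 - 2)
    return (mean(majority_scores) - mean(minority_scores)) / sqrt(pooled_var)


# Hypothetical test scores, not taken from any study.
majority = [4, 5, 6]
minority = [3, 4, 5]
d_example = cohens_d(majority, minority)
print(f"d = {d_example:.2f}")
```

A positive value indicates that the majority group scores higher on average, which matches the direction in which the d-values in this section are reported.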

Two conclusions follow from this review of simulation-based research findings. First, although ethnic subgroup differences on simulations are significantly smaller than those on cognitive ability tests, they are in most cases still substantial (e.g., Bobko & Roth, 2013). As this implies that the risk for adverse impact remains when adding simulations to the selection procedure, continued research efforts should be undertaken to develop simulation instruments with minimal adverse impact, while maintaining good validity. Second, our conceptual knowledge of the underlying mechanisms that cause or reduce ethnic subgroup differences in simulation performance is rather limited. As most prior studies in this domain have approached simulations as holistic entities (Arthur & Villado, 2008), alterations in the magnitude of subgroup differences could not be attributed to specific construct or method factors.

Therefore, in recent years an increasing number of studies have advocated a more systematic and theory-driven approach for examining subgroup differences in simulation performance (Arthur, Day, McNelly, & Edens, 2003; Arthur & Villado, 2008; Chan & Schmitt, 1997; Edwards & Arthur, 2007; Lievens, Westerveld, & De Corte, in press). Simulations are then treated as a combination of predictor constructs (i.e., the behavioral domain being sampled) and predictor methods (i.e., the specific techniques by which domain-relevant behavioral information is elicited, collected, and subsequently used to make inferences, Arthur & Villado, 2008, p. 435). Researchers are advised to keep specific factors constant when manipulating either predictor constructs or predictor methods in order to increase our theoretical knowledge on the nature of subgroup differences, thereby following a "building block" approach (Lievens et al., in press) rather than a holistic approach. Along these lines, researchers have identified "fidelity" as a key method factor (i.e., building block) of ethnic subgroup differences in performance on simulation-based instruments in addition to cognitive load, which is a key construct factor (e.g., Chan & Schmitt, 1997; Ployhart & Holtz, 2008).

Fidelity can be defined as the extent to which the test situation resembles the actual job situation (Callinan & Robertson, 2000). It can be divided into stimulus fidelity on the one hand, which refers to the fidelity of the presented stimulus material, and response fidelity on the other hand, which refers to the fidelity of how participants' responses are collected. For example, when selecting applicants for sales functions, high stimulus and response fidelity are obtained by using video (as opposed to paper-and-pencil) fragments of client interactions and requiring oral (as opposed to written) responses.

Regarding the stimulus side of fidelity, Chan and Schmitt (1997) compared a written SJT to a content-wise identical video SJT. They found significantly smaller ethnic subgroup differences in performance on the latter variant. Similarly, other studies have demonstrated smaller ethnic subgroup differences on high stimulus fidelity simulations as compared to lower stimulus fidelity formats, but failed to keep test content and other factors constant (e.g., Schmitt & Mills, 2001; Weekley & Jones, 1997).

On the response side, fidelity has often been neglected as a potential factor of diversity in selection (e.g., Ryan & Greguras, 1998; Ryan & Huth, 2008). Arthur, Edwards, and Barrett (2002), and Edwards and Arthur (2007) showed that higher fidelity (constructed or open-ended) response formats generated lower ethnic performance differences than their low fidelity (multiple choice) counterparts. Recent studies have tried to extend Arthur et al.'s findings to simulations. De Soete, Lievens, Oostrom, and Westerveld (in press) focus on ethnic subgroup differences in performance on constructed response multimedia tests. A constructed response multimedia test presents applicants with video-based job-related scenes and uses a webcam to capture how they act out their response (De Soete et al., in press; Lievens et al., in press; Oostrom, Born, Serlie, & van der Molen, 2010, 2011). Preliminary effects on diversity have been promising, with constructed response multimedia tests displaying smaller ethnic subgroup differences than other commonly used instruments (De Soete et al., in press).

In sum, using simulations has proven to be a fruitful strategy in light of the diversity-validity dilemma. In particular, instruments that are characterized by low cognitive load on the one hand and high stimulus as well as high response fidelity on the other hand have been shown to be effective in reducing ethnic performance differences without impairing criterion-related validity. To close the gap between selection research and the fast-evolving simulation practice, we believe that future research should focus on examining the efficacy of other high stimulus and response fidelity formats. Examples are innovative computer-based simulation exercises such as 3D animated SJTs, two-dimensional and three-dimensional graphic simulations, avatar-based SJTs, serious games, and multimedia instruments. Initial results regarding their ethnic subgroup differences and validity have been promising (Fetzer, 2012), but more research on their effectiveness is needed.

Use Statistical Approaches for Predictor and/or Criterion Scoring

A third category of techniques that deal with the diversity-validity dilemma refers to a number of statistical methods to combine and adjust selection predictor scores, such as adding non-cognitive predictors to cognitive ones, together with explicit predictor weighting, criterion weighting, and score banding (Ployhart & Holtz, 2008).

A first strategy within this category makes use of non-cognitive predictors that exhibit smaller ethnic subgroup differences than cognitive predictors, and combines them with a cognitive predictor into a weighted sum called a predictor composite score. This is also known as a compensatory strategy, because lower scores on one predictor can be compensated for by higher scores on other predictors. Sackett and Ellingson (1997) have proposed several formulas to estimate the effect size resulting from the combination of predictors with different effect sizes in an equally or differently weighted composite score. By systematically varying the factors underlying the effect size of the predictor composites (e.g., the intercorrelation of the original predictors), researchers and practitioners can evaluate the potential consequences of different approaches to predictor selection and combination. In a related vein, De Corte, Lievens, and Sackett (2006) described an analytic method that evaluates the outcomes of single- and multi-stage selection decisions in terms of adverse impact and selection quality, as a result of the order in which the predictors are administered (either in the early or in the later stages of the selection process), and the selection rates at the different stages. Single-stage selection decisions are taken after all predictors are administered, whereas multi-stage (or multiple hurdle) selection decisions administer the predictors in several different stages, with only the applicants obtaining a sufficiently high score in one stage passing to the subsequent stage(s). Although the proposed tools could be used to pursue the development of a set of guidelines for the design of multi-stage selection scenarios that optimize both adverse impact and selection quality, De Corte et al. (2006) and other authors (Sackett & Roth, 1996) warn against such a quest by stating that there are no simple rules for approaching hurdle-based selection.
An illustration of the dangers implied in formulating such rules is provided in a paper by Roth, Switzer, Van Iddekinge, and Oh (2011), who demonstrate that the projected effects on the average level of job performance and adverse impact ratio (i.e., the ratio of the selection rate of the lower scoring applicant subgroup and the selection rate of the higher scoring applicant subgroup, oftentimes used as a measure of adverse impact, AIR) of multiple hurdle selection systems heavily depend on the input values that are used.
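The two quantities discussed above, the effect size of a predictor composite and the adverse impact ratio, can be illustrated with a minimal sketch. It is written in the spirit of the composite formulas mentioned above rather than as a reproduction of any published procedure. It assumes that predictors are standardized within groups, that the within-group correlation matrix is the same in both subgroups, and that scores are normally distributed with equal variances. The function names and all input values are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def composite_d(ds, weights, corr):
    """Subgroup difference (d) of a weighted sum of standardized predictors.

    ds      -- d-value of each predictor
    weights -- weight given to each predictor
    corr    -- within-group predictor correlation matrix (list of lists)
    """
    n = len(ds)
    mean_diff = sum(w * d for w, d in zip(weights, ds))
    composite_var = sum(
        weights[i] * weights[j] * corr[i][j] for i in range(n) for j in range(n)
    )
    return mean_diff / sqrt(composite_var)


def adverse_impact_ratio(d, majority_selection_rate):
    """AIR under top-down selection, assuming normal scores with equal variances."""
    nd = NormalDist()
    # Cutoff expressed in majority-group z-score units.
    cutoff = nd.inv_cdf(1 - majority_selection_rate)
    # The minority distribution is assumed to lie d standard deviations lower.
    minority_selection_rate = 1 - nd.cdf(cutoff + d)
    return minority_selection_rate / majority_selection_rate


# Hypothetical case: a cognitive test (d = 1.0) combined with an
# uncorrelated non-cognitive predictor (d = 0.0), equally weighted.
d_comp = composite_d([1.0, 0.0], [1.0, 1.0], [[1.0, 0.0], [0.0, 1.0]])
air = adverse_impact_ratio(d_comp, majority_selection_rate=0.50)
print(f"composite d = {d_comp:.2f}, AIR = {air:.2f}")
```

In this hypothetical case the composite d (about 0.71) remains well above the simple average of the two d-values (0.50), which illustrates why adding a predictor with small subgroup differences often reduces adverse impact less than intuition suggests.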

Second, to determine rationally the weights assigned to the elementary predictors (also called predictor weights) when forming predictor composites, De Corte, Lievens, and Sackett (2007, 2008) and De Corte, Sackett, and Lievens (2011) proposed decision aids that can be applied to optimize both adverse impact and the quality of (multi-stage) selection decisions. The proposed decision aids focus on employers that plan selection decisions based on an available set of predictors, and determine the Pareto-optimal predictor weights that lead to Pareto-optimal trade-offs between selection quality and diversity. A specific weighting scheme and corresponding trade-off is called Pareto-optimal when the level on one outcome (i.e., quality) cannot be improved without doing worse on the other outcome (i.e., AIR). The regression-based predictor composite is one particular Pareto-optimal trade-off, and no other weighting of the predictors can outperform this composite in terms of expected selection quality. However, other Pareto-optimal trade-offs revealed by the decision aid balance the two outcomes more evenly: they yield a higher (i.e., more favorable) AIR than the regression-based composite at the cost of some selection quality. Furthermore, a similar decision aid was proposed for facilitating decision making in complex selection contexts (Druart & De Corte, 2012). Complex selection decisions handle situations with an applicant pool, several open positions, and applicants that are interested in at least one of the positions under consideration. Such situations can be encountered in large organizations (e.g., the military) and in admission decisions in educational contexts. Further research should go into the design of more user-friendly decision aids, and user reactions concerning these tools, as suggested by Roth et al. (2011).
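The notion of a Pareto-optimal weighting can be made concrete with a brute-force sketch. It does not reproduce the published decision aids, which rely on more elaborate optimization and on expected selection quality and AIR as outcomes. Here, composite validity stands in for quality and composite d stands in for (the inverse of) AIR, candidate weights are taken from a coarse grid, and all validities, d-values, and correlations are hypothetical.

```python
from math import sqrt


def composite_stats(weights, validities, ds, corr):
    """Return (validity, d) of a weighted composite of standardized predictors."""
    n = len(weights)
    sd = sqrt(
        sum(weights[i] * weights[j] * corr[i][j] for i in range(n) for j in range(n))
    )
    validity = sum(w * v for w, v in zip(weights, validities)) / sd
    d = sum(w * di for w, di in zip(weights, ds)) / sd
    return validity, d


def pareto_front(points):
    """Keep points (label, validity, d) that no other point dominates.

    A point is dominated when another point has validity at least as high
    and d at least as low, with a strict improvement on one of the two.
    """
    front = []
    for p in points:
        dominated = any(
            q[1] >= p[1] and q[2] <= p[2] and (q[1] > p[1] or q[2] < p[2])
            for q in points
        )
        if not dominated:
            front.append(p)
    return front


# Hypothetical inputs: predictor 0 is cognitive, predictor 1 is non-cognitive.
validities = [0.50, 0.30]
ds = [1.00, 0.10]
corr = [[1.0, 0.2], [0.2, 1.0]]

candidates = []
for step in range(11):
    w_cog = step / 10  # weight of the cognitive predictor
    validity, d = composite_stats([w_cog, 1 - w_cog], validities, ds, corr)
    candidates.append((w_cog, validity, d))

front = pareto_front(candidates)
best_validity = max(candidates, key=lambda p: p[1])
for w_cog, validity, d in front:
    print(f"w_cog = {w_cog:.1f}  validity = {validity:.3f}  d = {d:.3f}")
```

With these inputs, giving all weight to the cognitive predictor is not on the front: a mixed weighting achieves both higher validity and a smaller d. The weighting with the highest validity is on the front, in line with the statement that the regression-based composite is itself one Pareto-optimal trade-off.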

Third, the approach that weights different predictors and combines them into a predictor composite score can be applied to criterion measures as well, and is then called criterion weighting (Ployhart & Holtz, 2008). Criterion weighting is based on the multidimensionality of the criterion space by taking into account task, contextual, and counterproductive behavior. The relative weights assigned to these different criterion dimensions may suggest using alternative weights for the cognitive and non-cognitive predictors within the predictor composite, thereby affecting the ethnic minority representation as shown by Hattrup, Rock, and Scalia (1997) and De Corte (1999). In line with the method to obtain Pareto-optimal trade-offs between selection quality and diversity (De Corte et al., 2007), where the amount of selection quality a decision maker is willing to give up to obtain a more favorable AIR is a value issue, criterion weighting reflects an organization's values about the different job performance dimensions. As it is rarely the case that an organization's goal is univariate and thus only considers the maximization of task performance (Murphy, 2010; Murphy & Shiarella, 1997), criterion weighting seems to be a promising method to alleviate the diversity-validity dilemma. However, research that clearly evaluates its merits is scant thus far.

Finally, the last strategy within the category of statistical techniques is score 'banding' (Cascio, Outtz, Zedeck, & Goldstein, 1991). Banding involves grouping the applicant test scores within given ranges or bands, and treating the scores within a band as equivalent. The width of the bands is based on the standard error of the difference between scores, and reflects the unreliability in the interpretation of scores. Selection within bands then happens on the basis of other variables that show smaller subgroup differences (Campion et al., 2001). However, banding is controversial, due to several contradictions in the rationale behind this technique. For example, although banding seems to be effective in reducing adverse impact only when using racioethnic minority preferences to select or break ties within a band (Ployhart & Holtz, 2008), this approach is prohibited by law in the USA (Cascio, Jacobs, & Silva, 2010). Future research should investigate methods other than classical test theory for computing bands, such as item response theory (see Bobko, Roth, & Nicewander, 2005).
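As a minimal sketch of the classical test theory computation referred to above, the band width can be derived from the standard error of the difference between two scores, which in turn depends on the test's standard deviation and reliability. The function names, the multiplier of 1.96, and the example scores are assumptions chosen for illustration; only a single fixed band starting at the top score is shown.

```python
from math import sqrt


def band_width(sd, reliability, z=1.96):
    """Band width = z * standard error of the difference between two scores."""
    sem = sd * sqrt(1 - reliability)  # standard error of measurement
    sed = sem * sqrt(2)               # standard error of the difference
    return z * sed


def top_band(scores, sd, reliability, z=1.96):
    """Scores treated as equivalent to the highest observed score."""
    threshold = max(scores) - band_width(sd, reliability, z)
    return [s for s in scores if s >= threshold]


# Hypothetical test with SD = 10 and reliability = .90.
width = band_width(sd=10, reliability=0.90)
band = top_band([95, 90, 88, 85, 70], sd=10, reliability=0.90)
print(f"band width = {width:.2f}, scores in top band: {band}")
```

With these hypothetical values the band is roughly 8.8 points wide, so scores of 95, 90, and 88 would be treated as equivalent, and selection among those applicants would rest on other variables.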

Reduce Criterion-irrelevant Variance in Candidates' Selection Performance

A fourth strategy for reducing ethnic subgroup differences in selection performance consists of eliminating irrelevant variance caused by the predictor measure (Ployhart & Holtz, 2008). Criterion-irrelevant variance denotes variance caused by predictor demands that are not related to the criterion (job performance). Irrelevant test demands may be correlated with ethnicity and therefore generate subgroup differences that are unrelated to actual on-the-job performance differences. In order to increase diversity, it is recommended to eliminate irrelevant test demands from the selection procedure. Below we review recent research regarding two potential sources of criterion-irrelevant test variance: verbal load and cultural load.

An instrument's verbal load can be defined as the extent to which the predictor requires verbal (e.g., understanding, reading, writing texts) capacities in order to perform effectively (Ployhart & Holtz, 2008). Several studies have demonstrated that ethnic minorities score systematically lower on several measures of verbal ability (Hough et al., 2001). For instance, substantial ethnic subgroup differences have been found in performance on reading comprehension tasks (Barrett, Miguel, & Doverspike, 1997; Sacco et al., 2000). In their meta-analysis on subgroup differences in employment and educational settings, Roth et al. (2001) found d-values for verbal ability tests ranging from .40 (for Hispanic-White comparisons) to .76 (for Black-White comparisons), with White respondents receiving higher test scores. In addition, De Meijer, Born, Terlouw, and Van Der Molen (2006) demonstrated that score differences between ethnic minority and ethnic majority applicants on several selection instruments could partly be attributed to (a lack of) language proficiency. These findings confirm that it is advisable to limit the verbal demands of selection instruments strictly to the extent that they are required on the basis of job analysis (Arthur et al., 2002; Hough et al., 2001; Ployhart & Holtz, 2008). As we already discussed, Chan and Schmitt (1997) provided a good example of this strategy by comparing ethnic subgroup differences on a written SJT with a content-wise identical video SJT. The video SJT displayed significantly smaller ethnic subgroup differences, which was partly caused by the lower reading requirements of the video format as compared to the written format. Similarly, the recent use of technology-enhanced stimulus and response formats (e.g., two-dimensional and three-dimensional graphic animations, webcam testing, see Fetzer, 2012; Lievens et al., in press; Oostrom et al., 2010, 2011) also aims to lower reading and writing demands.

A second strategy to eliminate irrelevant test variance concerns reducing the cultural load of selection instruments. The rationale behind this approach is that test-takers from different cultures adhere to different values, interpretations, and actions, whereby the test-taker's cultural background may differentially influence test performance regardless of the individual's actual capabilities. For instance, non-Western cultures have been posited to attach more value to orality, movement, and behavioral aspects, and to use more high-context (non-verbal) communication styles as compared to Western societies (Gudykunst et al., 1996; Hall, 1976; Helms, 1992). Similarly, there might exist culture-based preferences for divergent thinking ("There are multiple answers for each problem") vs. convergent thinking ("There is only one correct answer", Outtz, Goldstein, & Ferreter, 2006). In fact, Helms (1992, 2012) has repeatedly argued that most selection instruments are developed against a majority cultural background. That is, test developers who belong to the ethnic majority group use their own culture as a reference framework when creating selection instruments. As this may systematically disadvantage respondents who do not belong to the ethnic majority culture, an option might be to develop culturally equivalent instruments (e.g., Helms, 1992). Culturally equivalent instruments aim to avoid interpretation discrepancies or performance (dis)advantages that are related to ethnicity rather than to the capabilities measured (Helms, 1992).

Several attempts have been undertaken to develop instruments that do not impose irrelevant cultural demands. Some researchers tried to incorporate the ethnic minority test-takers' culture, e.g., by presenting Blacks with cognitive items in a social context in order to integrate their emphasis on social relations (DeShon, Smith, Chan, & Schmitt, 1998), whereas others aimed to develop so-called 'culture-free' measurement tools ('fluid intelligence measures', Cattell, 1971). Unfortunately, thus far these approaches have not resulted in substantial reductions of ethnic subgroup differences. One exception is a study by McDaniel, Psotka, Legree, Yost, and Weekley (2011). They noted that White-Black mean differences exist in the preference for extreme responses on Likert scales, and reasoned that this common finding might affect ethnic subgroup differences on selection procedures that use Likert scales. Therefore, they developed a new scoring approach for SJTs that rely on Likert scales. In an attempt to make the SJT more culture-free, they controlled for elevation and scatter in SJT scores. The results of this within-person standardization approach were encouraging: SJTs scored with this technique showed substantially smaller White-Black mean score differences and also yielded larger validities.
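Within-person standardization can be illustrated with a short sketch. It assumes that elevation is the respondent's own mean rating and scatter is the respondent's own standard deviation across items; the function name and ratings are hypothetical, and the exact scoring steps used by McDaniel et al. (2011) may differ.

```python
from statistics import mean, pstdev


def standardize_within_person(ratings):
    """Remove elevation (person mean) and scatter (person SD) from Likert ratings.

    Returns z-scores computed within one respondent, so that two respondents
    with the same rating pattern but different response extremity receive
    the same standardized profile.
    """
    person_mean = mean(ratings)
    person_sd = pstdev(ratings)
    if person_sd == 0:
        # A flat profile carries no pattern information.
        return [0.0] * len(ratings)
    return [(r - person_mean) / person_sd for r in ratings]


# Hypothetical respondents: same ordering of items, different extremity.
extreme_responder = standardize_within_person([1, 3, 5])
moderate_responder = standardize_within_person([2, 3, 4])
```

In this example both respondents end up with the same standardized profile, so a preference for the scale endpoints no longer contributes to score differences between them.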

Other techniques to reduce cultural inequity in selection instruments are sensitivity review panels and cognitive interviewing. Reckase (1996) was the first to recommend the use of sensitivity review panels when constructing tools for culturally diverse groups. Subject matter experts on cultural groups (and sensitivities) are asked to review all items and evaluate their (in)sensitivity towards certain ethnic groups. Potentially offensive stereotypes or expressions are removed to increase test fairness. A similar technique, which requires the cooperation of actual test-takers, is called cognitive interviewing (Beatty & Willis, 2007). Cognitive interviewing can be defined as a qualitative technique to review tests and questionnaires. During the interview, test-takers are asked to think aloud while responding to items or to provide answers to additional questions (e.g., how do you interpret the instructions and items, and what potential difficulties or ambiguities do you perceive while completing the instrument). Besides identifying problematic items that generate ethnic subgroup differences, this technique also provides additional insight into the factors underlying these ethnic score discrepancies. Cognitive interviewing has already proven its merits in the health sector, by increasing the conceptual equivalence of medical questionnaires for ethnically diverse groups (e.g., Nápolez-Springer, Santoyo-Olsson, O'Brien, & Steward, 2006; Willis & Miller, 2011). In personnel selection, the application of cognitive interviewing is in its infancy. Oostrom and Born (submitted) used the technique to discover differences in interpretation between ethnic majority and minority test-takers in a role-play. Results demonstrated that several interpretation discrepancies could be identified, which were mostly explained by differences in language proficiency and attribution styles. Research on the effectiveness of the technique to reduce subgroup differences is needed.

A last tactic to reduce irrelevant cultural predictor variance concerns the identification and removal of culturally biased items through differential item functioning analysis (DIF; Berk, 1982). The goal of DIF analysis is to detect those items that lead to poorer performance of ethnic minority group test-takers as compared to equally competent majority group test-takers (Sackett et al., 2001). Unfamiliar or verbally difficult items are mostly the focus of attention. Several past studies have found evidence for DIF in tests used in high-stakes contexts (Freedle & Kostin, 1990; Medley & Quirk, 1974; Whitney & Schmitt, 1997). More recently, Scherbaum and Goldstein (2008) found evidence for differentially functioning items in standardized cognitive tests. Imus et al. (2011) successfully applied the DIF technique to biodata employment items in an ethnically diverse sample. Mitchelson, Wicher, LeBreton, and Craig (2009) revealed DIF on 73% of the items of the Abridged Big Five Circumplex of personality traits. However, this technique also has its limitations. The usefulness of DIF is criticized by some scientists because its effects on validity are unknown (Sackett et al., 2001). Furthermore, DIF results have rarely been easy to interpret, as there are few available theoretical explanations for DIF effects (e.g., Imus et al., 2011; Roussos & Stout, 1996). Finally, differentially functioning items that disadvantage ethnic majority members have regularly been found as well (Sackett et al., 2001). In general, it can be concluded that DIF is often observed in two directions, (dis)advantaging ethnic majority members as often as ethnic minority members. Consequently, the effect of removing such items on diversity seems limited.
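The logic of comparing "equally competent" test-takers can be sketched with one widely used DIF statistic, the Mantel-Haenszel common odds ratio. This is an illustrative choice: the text above does not state which DIF procedure the cited studies applied. Test-takers are first grouped into strata of similar overall score, and within each stratum the odds of answering the item correctly are compared across groups. The function name and the counts are hypothetical.

```python
def mantel_haenszel_odds_ratio(strata):
    """Mantel-Haenszel common odds ratio for one item.

    `strata` is a list of tuples, one per ability stratum:
        (majority_correct, majority_incorrect, minority_correct, minority_incorrect)
    A value near 1.0 suggests no DIF; a value above 1.0 means the item
    favors the majority group among test-takers matched on overall score.
    """
    numerator = 0.0
    denominator = 0.0
    for maj_right, maj_wrong, min_right, min_wrong in strata:
        n = maj_right + maj_wrong + min_right + min_wrong
        if n == 0:
            continue  # Skip empty strata.
        numerator += maj_right * min_wrong / n
        denominator += maj_wrong * min_right / n
    if denominator == 0:
        raise ValueError("Odds ratio is undefined for these counts.")
    return numerator / denominator


# Hypothetical counts for a high-scoring and a low-scoring stratum.
flagged_item = mantel_haenszel_odds_ratio([(40, 10, 30, 20), (20, 30, 10, 40)])
fair_item = mantel_haenszel_odds_ratio([(30, 20, 30, 20)])
```

Because matching is on overall score, an item can be flagged in either direction, which is consistent with the observation that DIF sometimes disadvantages majority group members.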

Taken together, as verbal and cultural predictor requirements may enhance ethnic subgroup differences in test performance, it is recommended to limit a predictor's verbal and cultural demands strictly to the extent that they are required in the context of job performance. Using video and multimedia during test administration has been shown to be effective in reducing the instrument's reading and writing demands and lowering the associated ethnic subgroup differences. To reduce a predictor's cultural load, cognitive interviewing and DIF have been suggested as promising techniques. In future research, we need to examine the underlying causes of DIF, the specific effects of removing differentially functioning items or cognitive interviewing on the magnitude of ethnic subgroup differences and validity, and the combined effect of DIF and cognitive interviewing.

Foster Positive Test-Taker Reactions Among Candidates

A fifth strategy to approach the diversity-validity dilemma concerns fostering positive test-taker reactions (Ployhart & Holtz, 2008). The idea is that applicant perceptions may differ across ethnic subgroups, with ethnic minority group test-takers having less positive test perceptions. In turn, this may negatively influence their performance. It is therefore suggested that undertaking interventions to increase positive test perceptions among test-takers in general and ethnic minorities in particular may reduce ethnic subgroup differences in applicant withdrawal intentions and selection test performance (Hough et al., 2001; Ployhart & Holtz, 2008; Sackett et al., 2001). Changing test perceptions can be achieved by altering the selection test's instructional sets or by modifying the items and test format.

Several studies have been devoted to the relation between test perceptions and ethnicity. For example, Arvey, Strickland, Drauden, and Martin (1990) were among the first to demonstrate that motivational differences across ethnic subgroups do exist. They found that Whites reported higher test motivation and more belief in selection testing than Blacks, which was related to their performance on ability tests and work sample exercises. Other studies also noted significantly lower test-taking motivation and higher test anxiety among Black test-takers as compared to Whites (e.g., Chan, Schmitt, DeShon, Clause, & Delbridge, 1997; Schmit & Ryan, 1997; but see Becton, Feild, Giles, & Jones-Farmer, 2008, for an exception). Along these lines, Edwards and Arthur (2007) demonstrated that lower ethnic subgroup differences on a constructed response knowledge test as compared to a multiple choice variant could be partly attributed to smaller subgroup differences in perceived fairness and test-taking motivation (see also Chan & Schmitt, 1997).

Another important line of research within this domain focuses on the effect of stereotype threat on the magnitude of ethnic subgroup differences. Stereotype threat comprises the idea that the mere knowledge of cultural stereotypes may affect test performance (e.g., Steele, 1997, 1998; Steele & Aronson, 1995, 2004). Accordingly, if ethnic minority group test-takers are made aware of negative stereotypes regarding ethnicity and selection test performance, their performance is expected to deteriorate (Steele & Aronson, 1995). Steele was the first to propose this hypothesis as an explanation for ethnic differences in performance. He demonstrated that when members of ethnic minority groups enter high-stakes testing situations and are made aware of the commonly found ethnic group discrepancies, concerns about performing poorly arise and performance suffers (Steele, 1997; Steele & Aronson, 1995). In 2008, Nguyen and Ryan conducted a meta-analysis that demonstrated support for a modest effect of stereotype threat among ethnic minority group test-takers. The size of the effect was a function of the explicitness of the stereotype-activating cues, with moderate cues displaying larger effects than blatant or subtle cues (Nguyen & Ryan, 2008). Another moderator that emerged from earlier studies concerns the extent to which the minority test-taker identified with the domain measured. That is, stereotype threat only occurred for those individuals who regarded the test domain as relevant for their self-image (Steele & Aronson, 1995). Nonetheless, Steele's hypothesis on stereotype threat has been severely criticized. Sackett has repeatedly expressed his concerns about Steele's research methods and about the misinterpretation and overgeneralization of his research findings (e.g., Sackett, 2003; Sackett, Hardison, & Cullen, 2004; Sackett et al., 2001). In addition, other studies failed to replicate stereotype threat effects (e.g., Cullen, Hardison, & Sackett, 2004; Gillespie, Converse, & Kriska, 2010; Grand, Ryan, Schmitt, & Hmurovic, 2011), thereby questioning the strength of the phenomenon.

To conclude, it seems that fostering positive test-taker perceptions may in some cases have a positive, albeit small, influence on diversity. Additionally, it can enhance the organizational image among potential employees. The most promising strategy in this category is altering the test format (and accordingly also test fidelity) in order to obtain higher face validity perceptions and test motivation. Further research is needed to shed light on the impact of test-taker perceptions on applicant withdrawal among ethnic minorities (e.g., Schmit & Ryan, 1997; Tam, Murphy, & Lyall, 2004). Regarding the phenomenon of stereotype threat, more research is required to explore to what extent the hypothesis holds in actual applicant situations and which factors act as moderators (e.g., Sackett, 2003).

Provide Coaching Programs and Opportunity for Practice to Candidates

As a sixth strategy to alleviate the diversity-validity dilemma, the provision of coaching programs and the opportunity for practice and retesting to candidates has been suggested (Ployhart & Holtz, 2008). The underlying assumption is that ethnic subgroups differ in their test familiarity and therefore have differential test-taking skills, leading ethnic minority test-takers to perform more poorly in some cases (Sackett et al., 2001). Organizing practice opportunities and offering the possibility to retake the assessment should then allow test-takers to familiarize themselves with the test content and testing situation. Coaching programs go one step further and intensively guide potential applicants through the selection process while teaching them test-taking strategies and featuring rigorous exercising. Several studies have demonstrated that practice, retesting, and coaching have small albeit consistently positive effects on test performance (Sackett, Burris, & Ryan, 1989; Sackett et al., 2001). Recently, this has been confirmed in a meta-analysis by Hausknecht, Halpert, Di Paolo, and Gerrard (2007). Results of 107 samples not only revealed a consistent effect of practice and coaching on subsequent performance, but also indicated that a combined approach of coaching and practice leads to the largest performance increase.

However, the effects of practice, retesting, and coaching on diversity and criterion-related validity are less straightforward (Hough et al., 2001; Sackett et al., 2001). In most cases, both ethnic majority and minority group test-takers benefit from practice and coaching (Sackett et al., 2001). Schleicher, Van Iddekinge, Morgeson, and Campion (2010) added some important insights to this stream of research by comparing ethnic performance differences after retesting for different types of assessment tools. In general, Whites benefitted more from retesting than Blacks, and this effect was stronger for written tests than for sample-based tests. The effect of retesting on adverse impact ratios was highly dependent on the measure used, so that retesting on sample-based tests could enhance diversity, whereas retesting in the case of written tests had the potential to increase adverse impact (Schleicher et al., 2010). In terms of criterion-related validity, Van Iddekinge, Morgeson, Schleicher, and Campion (2011) discovered no negative influence of retesting.
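For readers less familiar with the adverse impact ratio, the following sketch shows how it is typically computed: the selection rate of the minority group divided by the selection rate of the majority group. The function name and applicant counts are hypothetical. The 0.80 threshold in the comment refers to the commonly used four-fifths rule of thumb, which is not discussed in the studies cited above.

```python
def adverse_impact_ratio(minority_selected, minority_applicants,
                         majority_selected, majority_applicants):
    """Ratio of the minority selection rate to the majority selection rate.

    A value of 1.0 means both groups are selected at the same rate.
    Values below 0.80 are often treated as a signal of adverse impact
    (four-fifths rule of thumb).
    """
    minority_rate = minority_selected / minority_applicants
    majority_rate = majority_selected / majority_applicants
    return minority_rate / majority_rate


# Hypothetical applicant pool: 10 of 50 minority and 30 of 100 majority
# applicants pass the selection hurdle.
ratio = adverse_impact_ratio(10, 50, 30, 100)
print(round(ratio, 2))  # 0.67
```

If retesting raises the pass rate of one group more than that of the other, this ratio shifts accordingly, which is why the direction of the retesting effect matters for diversity.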

In sum, practice, retesting, and coaching have been shown to have small but consistently positive effects on performance, and in some cases a modest reduction in ethnic subgroup differences as a result of these techniques has been observed. To extend our knowledge on coaching and practice effects in light of the diversity-validity dilemma, additional research is required on the variables that moderate retesting and coaching effects. First, it is imperative to investigate the role of test attitudes, test motivation, and perceptions of procedural fairness (Schleicher et al., 2010). Although these mechanisms have been demonstrated to influence learning performance (e.g., Sackett et al., 1989) and the magnitude of subgroup performance differences (e.g., Ryan, 2001), they have not been examined in the context of retesting as a strategy for reducing adverse impact. Second, future studies should differentiate retesting and coaching effects according to the constructs of interest. Up until now, several studies have focused on retesting for cognitive skills (Sackett et al., 2001), which are known to produce substantial ethnic subgroup differences. It would be interesting to investigate the effectiveness of the retesting strategy in the context of interpersonal skills (Roth, Buster, & Bobko, 2011).

Discussion

Main Conclusions

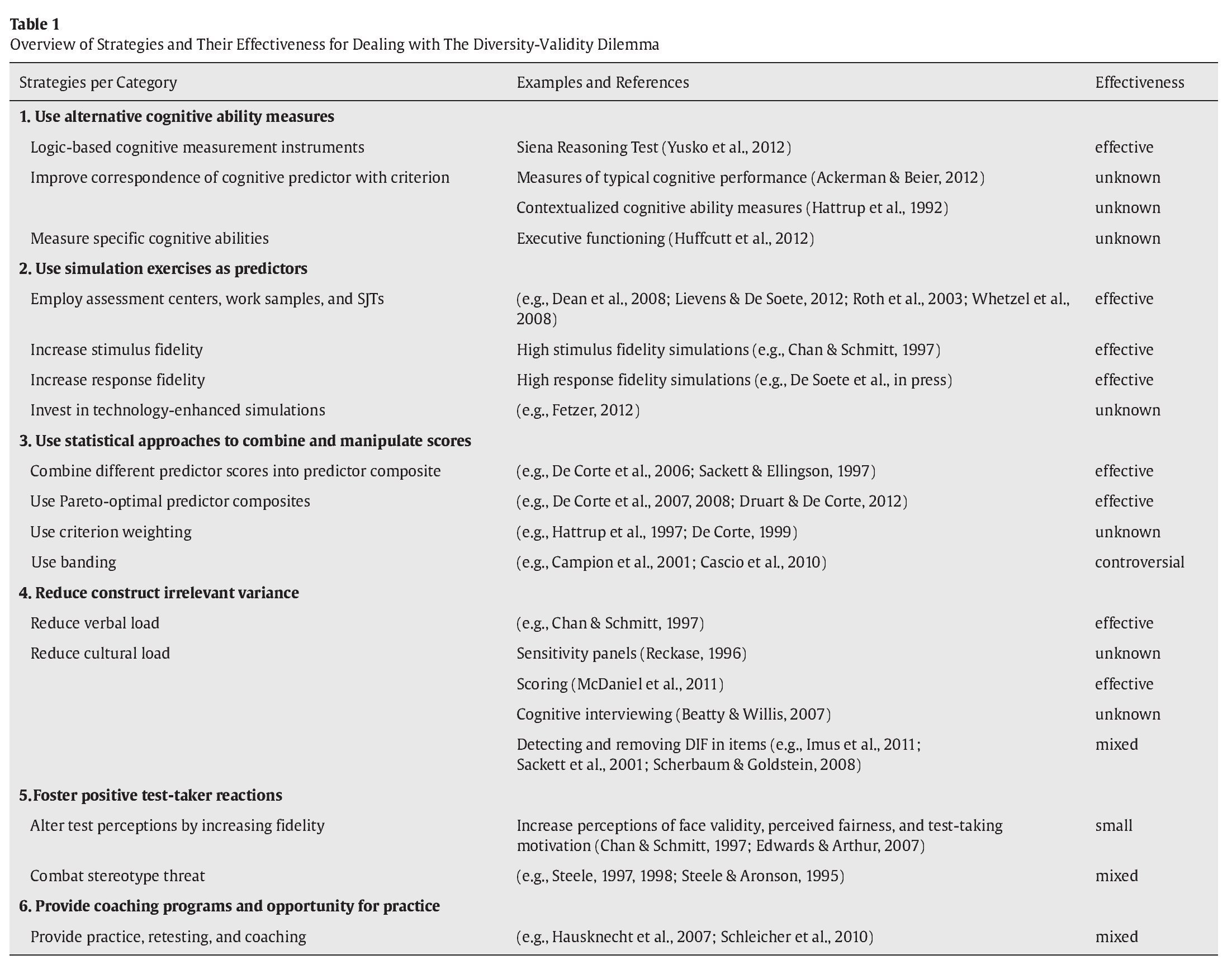

The current paper aimed to provide an updated overview of strategies for dealing with the diversity-validity dilemma. To this end, Table 1 summarizes the main results. As a general conclusion of our review, there does not seem to be an easy way to tackle the diversity-validity quandary. However, throughout our review, a number of strategies have emerged as particularly useful in the context of the diversity-validity dilemma. We present them below.

First, employing logic-based measurement methods to capture cognitive skills seems to be a new and fruitful strategy to reduce ethnic subgroup differences and at the same time identify applicants with job-relevant reasoning capabilities. Second, it seems worthwhile to increase the response fidelity of (simulation) instruments, as this not only enhances the point-to-point matching between predictor and criterion, but also appears to lower ethnic subgroup differences. Third, investments in advanced assessment technologies seem to pay off. In fact, initial research findings suggest lower ethnic subgroup differences and good criterion-related validity coefficients for several new multimedia simulations. At the same time, these instruments pose low reading demands, provide the possibility for exercise by means of practice items, and are well received by both ethnic majority and ethnic minority group test-takers, thereby satisfying several strategies for reducing ethnic performance differences. Fourth, statistical strategies that treat workforce diversity, alongside selection quality, as one of the primary goals of selection decisions seem to hold promise and are gaining in importance. In particular, decision aids that result in Pareto-optimal trade-offs between selection quality and diversity, in single- and multi-stage as well as in complex selection situations, have emerged as effective approaches for balancing the different outcomes of selection decisions.
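The notion of a Pareto-optimal trade-off can be made concrete with a small sketch. A selection design is Pareto-optimal when no alternative design is at least as good on both selection quality and diversity and strictly better on one of them. The code below only applies this dominance check to a handful of hypothetical designs; it is not the analytic optimization procedure of the decision aids referred to above, and all names and numbers are made up for illustration.

```python
def pareto_optimal(designs):
    """Return the names of designs that are not dominated by any other design.

    `designs` is a list of (name, quality, diversity) tuples, where higher
    values are better on both outcomes (e.g., expected criterion performance
    and adverse impact ratio).
    """
    front = []
    for name, quality, diversity in designs:
        dominated = any(
            other_q >= quality and other_d >= diversity
            and (other_q > quality or other_d > diversity)
            for _, other_q, other_d in designs
        )
        if not dominated:
            front.append(name)
    return front


# Hypothetical predictor weightings with their expected outcomes.
designs = [
    ("A", 0.50, 0.60),
    ("B", 0.45, 0.80),
    ("C", 0.40, 0.70),  # Worse than B on both outcomes.
    ("D", 0.30, 0.90),
]
print(pareto_optimal(designs))  # ['A', 'B', 'D']
```

Choosing among the remaining designs is then a value judgment about how much selection quality an organization is willing to trade for diversity, rather than a purely statistical question.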

Avenues For Future Research

Among the various avenues for further research that have already been pointed out in the current manuscript, we make the following key suggestions. First, this research domain is in need of a more systematic operationalization of ethnicity or race. Currently, there exists little consensus on appropriate labels or terminology for certain ethnic groups (Foldes, Duehr, & Ones, 2008). As a result, researchers use their own interpretation of ethnicity, thereby complicating the generalizability of research findings.

Second, as the current research on ethnic subgroup differences has mainly focused on Black-White performance discrepancies, it is imperative to expand this domain with European research findings and to compare ethnic subgroup differences in American and European settings (e.g., Hanges & Feinberg, 2009; Ruggs et al., 2013; for exceptions in European contexts: De Meijer et al., 2006; De Meijer, Born, Terlouw, & van der Molen, 2008; Ones & Anderson, 2002).

Third, we recommend that researchers apply a building block approach to study subgroup differences on simulation exercises as opposed to a holistic approach (Lievens et al., in press). Specifically, it is advised to conceptualize simulations as a combination of predictor constructs and predictor methods. Specific factors of the simulation should be kept constant when manipulating either predictor constructs or predictor methods, as this permits us to increase our knowledge of the theoretical drivers of diversity.

Fourth, research on ethnic subgroup differences in personnel selection would greatly benefit from cross-fertilization with other branches of psychology. For instance, cross-cultural psychology offers research methodologies that could easily be applied to the research domain of adverse impact (Leong, Leung, & Cheung, 2010). Similarly, some scholars have stressed the potential of including social psychological theories (e.g., Helms, 2012) in the study of ethnic score differences.

Conflicts of interest

The authors declare that they have no conflicts of interest.

Financial support

This research was supported by a PhD fellowship from the Fund for Scientific Research - Flanders (FWO).

ARTICLE INFORMATION

Manuscript received: 12/10/2012

Revision received: 20/12/2012

Accepted: 20/12/2012

DOI: http://dx.doi.org/10.5093/tr2013a2

*Correspondence concerning this article should be sent to

Britt De Soete, Department of Personnel Management,

Work and Organizational Psychology, Henri Dunantlaan 2, 9000 Ghent, Belgium.

E-mail: de.soete.britt@gmail.com

References

Ackerman, P. L., & Beier, M. E. (2012). The problem is in the definition: g and intelligence in I-O psychology. Industrial and Organizational Psychology-Perspectives on Science and Practice, 5, 149-153. doi: 10.1111/j.1754-9434.2012.01420.x

Arthur, W., Day, E. A., McNelly, T. L., & Edens, P. S. (2003). A meta-analysis of the criterion-related validity of assessment center dimensions. Personnel Psychology, 56, 125-154. doi: 10.1111/j.1744-6570.2003.tb00146.x

Arthur, W., Edwards, B. D., & Barrett, G. V. (2002). Multiple-choice and constructed response tests of ability: Race-based subgroup performance differences on alternative paper-and-pencil test formats. Personnel Psychology, 55, 985-1008. doi: 10.1111/j.1744-6570.2002.tb00138.x

Arthur, W., & Villado, A. J. (2008). The importance of distinguishing between constructs and methods when comparing predictors in personnel selection research and practice. Journal of Applied Psychology, 93, 435-442. doi: 10.1037/0021-9010.93.2.435

Arvey, R. D., Strickland, W., Drauden, G., & Martin, C. (1990). Motivational components of test taking. Personnel Psychology, 43, 695-716. doi: 10.1111/j.1744-6570.1990.tb00679.x

Barrett, G. V., Miguel, R. F., & Doverspike, D. (1997). Race differences on a reading comprehension test with and without passages. Journal of Business and Psychology, 12, 19-24. doi: 10.1023/a:1025053931286

Beatty, P. C., & Willis, G. B. (2007). Research synthesis: The practice of cognitive interviewing. Public Opinion Quarterly, 71, 287-311. doi: 10.1093/poq/nfm006

Becton, J. B., Feild, H. S., Giles, W. F., & Jones-Farmer, A. (2008). Racial differences in promotion candidate performance and reactions to selection procedures: A field study in a diverse top-management context. Journal of Organizational Behavior, 29, 265-285. doi: 10.1002/job.452

Berk, R. A. (Ed.). (1982). Handbook of methods for detecting test bias. Baltimore: Johns Hopkins University Press.

Bobko, P., & Roth, P. L. (2013). Reviewing, categorizing, and analyzing the literature on Black-White mean differences for predictors of job performance: Verifying some perceptions and updating/correcting others. Personnel Psychology, 66, 91-126. doi: 10.1111/peps.12007

Bobko, P., Roth, P. L., & Buster, M. A. (2005). Work sample selection tests and expected reduction in adverse impact: A cautionary note. International Journal of Selection and Assessment, 13, 1-10. doi: 10.1111/j.0965-075X.2005.00295.x

Bobko, P., Roth, P. L., & Nicewander, A. (2005). Banding selection scores in human resource management decisions: Current inaccuracies and the effect of conditional standard errors. Organizational Research Methods, 8, 259-273. doi: 10.1177/1094428105277416

Brouwers, S. A., & Van De Vijver, F. J. R. (2012). Intelligence 2.0 in I-O psychology: Revival or Contextualization? Industrial and Organizational Psychology-Perspectives on Science and Practice, 5, 158-160. doi: 10.1111/j.1754-9434.2012.01422.x

Callinan, M., & Robertson, I. T. (2000). Work sample testing. International Journal of Selection and Assessment, 8, 248-260. doi: 10.1111/1468-2389.00154

Campion, M. A., Outtz, J. L., Zedeck, S., Schmidt, F. L., Kehoe, J. F., Murphy, K. R., & Guion, R. M. (2001). The controversy over score banding in personnel selection: Answers to 10 key questions. Personnel Psychology, 54, 149-185. doi: 10.1111/j.1744-6570.2001.tb00090.x

Cascio, W. F., Jacobs, R., & Silva, J. (2010). Validity, utility, and adverse impact: Practical implications from 30 years of data. In J. L. Outtz (Ed.), Adverse impact. Implications for organizational staffing and high stakes selection (pp. 271-288). New York, NY: Routledge.

Cascio, W. F., Outtz, J., Zedeck, S., & Goldstein, I. L. (1991). Statistical implications of six methods of test score use in personnel selection. Human Performance, 4, 233-264. doi: 10.1207/s15327043hup0404_1

Cattell, R. B. (1971). Abilities: Their structure, growth, and action. Boston: Houghton Mifflin.

Chan, D., & Schmitt, N. (1997). Video-based versus paper-and-pencil method of assessment in situational judgment tests: Subgroup differences in test performance and face validity perceptions. Journal of Applied Psychology, 82, 143-159. doi: 10.1037/0021-9010.82.1.143

Chan, D., Schmitt, N., DeShon, R. P., Clause, C. S., & Delbridge, K. (1997). Reactions to cognitive ability tests: The relationships between race, test performance, face validity perceptions, and test-taking motivation. Journal of Applied Psychology, 82, 300-310. doi: 10.1037//0021-9010.82.2.300

Cullen, M. J., Hardison, C. M., & Sackett, P. R. (2004). Using SAT-grade and ability-job performance relationships to test predictions derived from stereotype threat theory. Journal of Applied Psychology, 89, 220-230. doi: 10.1037/0021-9010.89.2.220

De Corte, W. (1999). Weighing job performance predictors to both maximize the quality of the selected workforce and control the level of adverse impact. Journal of Applied Psychology, 84, 695-702. doi: 10.1037/0021-9010.84.5.695

De Corte, W., Lievens, F., & Sackett, P. (2006). Predicting adverse impact and multistage mean criterion performance in selection. Journal of Applied Psychology, 91, 523-537. doi: 10.1037/0021-9010.91.3.523

De Corte, W., Lievens, F., & Sackett, P. (2007). Combining predictors to achieve optimal trade-offs between selection quality and adverse impact. Journal of Applied Psychology, 92, 1380-1393. doi: 10.1037/0021-9010.92.5.1380

De Corte, W., Lievens, F., & Sackett, P. (2008). Validity and adverse impact potential of predictor composite formation. International Journal of Selection and Assessment, 16, 183-194. doi: 10.1111/j.1468-2389.2008.00423.x

De Corte, W., Sackett, P., & Lievens, F. (2011). Designing pareto-optimal selection systems: Formalizing the decisions required for selection system development. Journal of Applied Psychology, 96, 907-926. doi: 10.1037/a0023298

De Meijer, L. A. L., Born, M. P., Terlouw, G., & van der Molen, H. T. (2006). Applicant and method factors related to ethnic score differences in personnel selection: A study at the Dutch police. Human Performance, 19, 219-251. doi: 10.1207/s15327043hup1903_3

De Meijer, L. A. L., Born, M. P., Terlouw, G., & van der Molen, H. T. (2008). Criterion-related validity of Dutch police-selection measures and differences between ethnic groups. International Journal of Selection and Assessment, 16, 321-332. doi: 10.1111/j.1468-2389.2008.00438.x

De Soete, B., Lievens, F., Oostrom, J., & Westerveld, L. (in press). Alternative predictors for dealing with the diversity-validity dilemma in personnel selection: The constructed response multimedia test. International Journal of Selection and Assessment.

Dean, M. A., Bobko, P., & Roth, P. L. (2008). Ethnic and gender subgroup differences in assessment center ratings: A meta-analysis. Journal of Applied Psychology, 93, 685-691. doi: 10.1037/0021-9010.93.3.685

DeShon, R. P., Smith, M. R., Chan, D., & Schmitt, N. (1998). Can racial differences in cognitive test performance be reduced by presenting problems in a social context? Journal of Applied Psychology, 83, 438-451. doi: 10.1037/0021-9010.83.3.438

Druart, C., & De Corte, W. (2012). Designing Pareto-optimal systems for complex selection decisions. Organizational Research Methods, 15, 488-513. doi: 10.1177/1094428112440328

Edwards, B. D., & Arthur, W. (2007). An examination of factors contributing to a reduction in subgroup differences on a constructed-response paper-and-pencil test of scholastic achievement. Journal of Applied Psychology, 92, 794-801. doi: 10.1037/0021-9010.92.3.794

Evers, A., Te Nijenhuis, J., & Van der Flier, H. (2005). Ethnic bias and fairness in personnel selection: Evidence and consequences. In A. Evers, N. Anderson, & O. Voskuijl (Eds.), Handbook of Personnel Selection (pp. 306-328). Oxford, UK: Blackwell.

Fetzer, M. S. (2012, April). Current research in advanced assessment technologies. Symposium presented at the 27th Annual Conference of the Society for Industrial and Organizational Psychology, San Diego, CA.

Foldes, H. J., Duehr, E. E., & Ones, D. S. (2008). Group differences in personality: Meta-analyses comparing five US racial groups. Personnel Psychology, 61, 579-616. doi: 10.1111/j.1744-6570.2008.00123.x

Freedle, R., & Kostin, I. (1990). Item difficulty of 4 verbal item types and an index of differential item functioning for Black and White examinees. Journal of Educational Measurement, 27, 329-343. doi: 10.1111/j.1745-3984.1990.tb00752.x

Gatewood, R., Feild, H., & Barrick, M. (2011). Human Resource Selection (6th ed.). Cincinnati, OH: South-Western.

Gillespie, J. Z., Converse, P. D., & Kriska, S. D. (2010). Applying recommendations from the literature on stereotype threat: Two field studies. Journal of Business and Psychology, 25, 493-504. doi: 10.1007/s10869-010-9178-1

Goldstein, H. W., Yusko, K. P., Braverman, E. P., Smith, D. B., & Chung, B. (1998). The role of cognitive ability in the subgroup differences and incremental validity of assessment center exercises. Personnel Psychology, 51, 357-374. doi: 10.1111/j.1744-6570.1998.tb00729.x

Goldstein, H. W., Yusko, K. P., & Nicolopoulos, V. (2001). Exploring Black-White subgroup differences of managerial competencies. Personnel Psychology, 54, 783-807. doi: 10.1111/j.1744-6570.2001.tb00232.x

Grand, J. A., Ryan, A. M., Schmitt, N., & Hmurovic, J. (2011). How far does stereotype threat reach? The potential detriment of face validity in cognitive ability testing. Human Performance, 24, 1-28. doi: 10.1080/08959285.2010.518184

Gudykunst, W. B., Matsumoto, Y., Ting-Toomey, S., Nishida, T., Kim, K., & Heyman, S. (1996). The influence of cultural individualism-collectivism, self construals, and individual values on communication styles across cultures. Human Communication Research, 22, 510-543. doi: 10.1111/j.1468-2958.1996.tb00377.x

Hall, E. T. (1976). Beyond culture. New York, NY: Doubleday.

Hanges, P. J., & Feinberg, E. G. (2009). International perspectives on adverse impact: Europe and beyond. In J. L. Outtz (Ed.), Adverse Impact: Implications for Organizational Staffing and High Stakes Selection (pp. 349-373). New York, NY: Routledge.

Hattrup, K., Rock, J., & Scalia, C. (1997). The effects of varying conceptualizations of job performance on adverse impact, minority hiring, and predicted performance. Journal of Applied Psychology, 82, 656-664. doi: 10.1037/0021-9010.82.5.656

Hattrup, K., Schmitt, N., & Landis, R. S. (1992). Equivalence of constructs measured by job-specific and commercially available aptitude-tests. Journal of Applied Psychology, 77, 298-308. doi: 10.1037/0021-9010.77.3.298

Hausknecht, J. P., Halpert, J. A., Di Paolo, N. T., & Gerrard, M. O. M. (2007). Retesting in selection: A meta-analysis of coaching and practice effects for tests of cognitive ability. Journal of Applied Psychology, 92, 373-385. doi: 10.1037/0021-9010.92.2.373

Helms, J. E. (1992). Why is there no study of cultural equivalence in standardized cognitive ability testing? American Psychologist, 47, 1083-1101. doi: 10.1037/0003-066x.47.9.1083

Helms, J. E. (2012). A legacy of eugenics underlies racial-group comparisons in intelligence testing. Industrial and Organizational Psychology-Perspectives on Science and Practice, 5, 176-179. doi: 10.1111/j.1754-9434.2012.01426.x

Hough, L. M., Oswald, F. L., & Ployhart, R. E. (2001). Determinants, detection and amelioration of adverse impact in personnel selection procedures: Issues, evidence and lessons learned. International Journal of Selection and Assessment, 9, 152-194. doi: 10.1111/1468-2389.00171

Huffcutt, A. I., Goebl, A. P., & Culbertson, S. S. (2012). The engine is important, but the driver is essential: The case for executive functioning. Industrial and Organizational Psychology-Perspectives on Science and Practice, 5, 183-186. doi: 10.1111/j.1754-9434.2012.01428.x

Imus, A., Schmitt, N., Kim, B., Oswald, F. L., Merritt, S., & Wrestring, A. F. (2011). Differential item functioning in biodata: Opportunity access as an explanation of gender- and race-related DIF. Applied Measurement in Education, 24, 71-94.

Jensen, A. R. (1998). The g factor: The science of mental ability. Westport, CT: Praeger.

Leong, F. T. L., Leung, K., & Cheung, F. M. (2010). Integrating cross-cultural psychology research methods into ethnic minority psychology. Cultural Diversity & Ethnic Minority Psychology, 16, 590-597. doi: 10.1037/a0020127

Lievens, F., Buyse, T., & Sackett, P. R. (2005). The operational validity of a video-based situational judgment test for medical college admissions: Illustrating the importance of matching predictor and criterion construct domains. Journal of Applied Psychology, 90, 442-452. doi: 10.1037/0021-9010.90.3.442

Lievens, F., & De Soete, B. (2012). Simulations. In N. Schmitt (Ed.), Handbook of Assessment and Selection. Oxford University Press.

Lievens, F., & Reeve, C. L. (2012). Where I-O psychology should really (re)start its investigation of intelligence constructs and their measurement. Industrial and Organizational Psychology-Perspectives on Science and Practice, 5, 153-158. doi: 10.1111/j.1754-9434.2012.01421.x

Lievens, F., Westerveld, L., & De Corte, W. (in press). Understanding the building blocks of selection procedures: Effects of response fidelity on performance and validity. Journal of Management.

McDaniel, M. A., Psotka, J., Legree, P. J., Yost, A. P., & Weekley, J. A. (2011). Toward an understanding of situational judgment item validity and group differences. Journal of Applied Psychology, 96, 327-336. doi: 10.1037/a0021983

Medley, D. M., & Quirk, T. J. (1974). Application of a factorial design to study cultural bias in general culture items on national teacher examination. Journal of Educational Measurement, 11, 235-245. doi: 10.1111/j.1745-3984.1974.tb00995.x

Mitchelson, J. K., Wicher, E. W., LeBreton, J. M., & Craig, S. B. (2009). Gender and ethnicity differences on the Abridged Big Five Circumplex (AB5C) of personality traits: A differential item functioning analysis. Educational and Psychological Measurement, 69, 613-635. doi: 10.1177/0013164408323235

Motowidlo, S. J., Dunnette, M. D., & Carter, G. W. (1990). An alternative selection procedure: The low-fidelity simulation. Journal of Applied Psychology, 75, 640-647. doi: 10.1037/0021-9010.75.6.640

Murphy, K. R. (2010). How a broader definition of the criterion domain changes our thinking about adverse impact. In J. L. Outtz (Ed.), Adverse impact. Implications for organizational staffing and high stakes selection. (pp. 137-160). New York, NY: Routledge.

Murphy, K. R., & Shiarella, A. H. (1997). Implications of the multidimensional nature of job performance for the validity of selection tests: Multivariate frameworks for studying test validity. Personnel Psychology, 50, 823-854. doi: 10.1111/j.1744-6570.1997.tb01484.x

Nápoles-Springer, A. M., Santoyo-Olsson, J., O'Brien, H., & Stewart, A. L. (2006). Using cognitive interviews to develop surveys in diverse populations. Medical Care, 44, 21-30. doi: 10.1097/01.mlr.0000245425.65905.1d

Nguyen, H. H. D., & Ryan, A. M. (2008). Does stereotype threat affect test performance of minorities and women? A meta-analysis of experimental evidence. Journal of Applied Psychology, 93, 1314-1334. doi: 10.1037/a0012702

Ones, D. S., & Anderson, N. (2002). Gender and ethnic group differences on personality scales in selection: Some British data. Journal of Occupational and Organizational Psychology, 75, 255-276. doi: 10.1348/096317902320369703

Oostrom, J. K., & Born, M. Ph. (submitted). Using cognitive pretesting to explore causes for ethnic differences on role-plays.

Oostrom, J. K., Born, M. Ph., Serlie, A. W., & van der Molen, H. T. (2010). Webcam testing: Validation of an innovative open-ended multimedia test. European Journal of Work and Organizational Psychology, 19, 532-550. doi: 10.1080/13594320903000005

Oostrom, J. K., Born, M. Ph., Serlie, A. W., & van der Molen, H. T. (2011). A multimedia situational test with a constructed-response format: Its relationship with personality, cognitive ability, job experience, and academic performance. Journal of Personnel Psychology, 10, 78-88. doi: 10.1027/1866-5888/a000035

Outtz, J., Goldstein, H., & Ferreter, J. (2006, April). Testing divergent and convergent thinking: Test response format and adverse impact. Paper presented at the 20th Annual Conference of the Society for Industrial and Organizational Psychology, San Diego, CA.

Paullin, C., Putka, D. J., Tsacoumis, S., & Colberg, M. (2010, April). Using a logic-based measurement approach to measure cognitive ability. In C. Paullin (Chair), Cognitive ability testing: Exploring new models, methods, and statistical techniques. Symposium conducted at the 25th Annual Conference of the Society for Industrial and Organizational Psychology, Atlanta, GA.

Ployhart, R. E., & Holtz, B. C. (2008). The diversity-validity dilemma: Strategies for reducing racioethnic and sex subgroup differences and adverse impact in selection. Personnel Psychology, 61, 153-172. doi: 10.1111/j.1744-6570.2008.00109.x

Pyburn, K. M., Ployhart, R. E., & Kravitz, D. A. (2008). The diversity-validity dilemma: Overview and legal context. Personnel Psychology, 61, 143-151. doi: 10.1111/j.1744-6570.2008.00108.x

Reckase, M. D. (1996). Test construction in the 1990s: Recent approaches every psychologist should know. Psychological Assessment, 8, 354-359. doi: 10.1037/1040-3590.8.4.354

Roth, P. L., Bevier, C. A., Bobko, P., Switzer, F. S., & Tyler, P. (2001). Ethnic group differences in cognitive ability in employment and educational settings: A meta-analysis. Personnel Psychology, 54, 297-330. doi: 10.1111/j.1744-6570.2001.tb00094.x

Roth, P. L., Bobko, P., McFarland, L., & Buster, M. (2008). Work sample tests in personnel selection: A meta-analysis of black-white differences in overall and exercise scores. Personnel Psychology, 61, 637-661. doi: 10.1111/j.1744-6570.2008.00125.x

Roth, P. L., Buster, M. A., & Bobko, P. (2011). Updating the trainability tests literature on Black-White subgroup differences and reconsidering criterion-related validity. Journal of Applied Psychology, 96, 34-45. doi: 10.1037/a0020923

Roth, P. L., Huffcutt, A. I., & Bobko, P. (2003). Ethnic group differences in measures of job performance: A new meta-analysis. Journal of Applied Psychology, 88, 694-706. doi: 10.1037/0021-9010.88.4.694

Roth, P. L., Switzer, F. S., Van Iddekinge, C. H., & Oh, I. S. (2011). Toward better meta-analytic matrices: How input values can affect research conclusions in human resource management simulations. Personnel Psychology, 64, 899-935. doi: 10.1111/j.1744-6570.2011.01231.x

Roussos, L., & Stout, W. (1996). A multidimensionality-based DIF analysis paradigm. Applied Psychological Measurement, 20, 355-371. doi: 10.1177/014662169602000404

Ruggs, E. N., Law, C., Cox, C. B., Roehling, M. V., Wieners, R. L., Hebl, M. R., & Barron, L. (2013). Gone fishing: I/O psychologists' missed opportunities to understand marginalized employees' experiences with discrimination. Industrial and Organizational Psychology-Perspectives on Science and Practice, 6, 39-60. doi: 10.1111/iops.12007

Ryan, A. M. (2001). Explaining the Black-White test score gap: The role of test perceptions. Human Performance, 14, 45-75. doi: 10.1207/S15327043HUP1401_04

Ryan, A. M., & Greguras, G. J. (1998). Life is not multiple choice: Reactions to the alternatives. In M. D. Hakel (Ed.), Beyond Multiple Choice: Evaluating Alternatives to Traditional Testing for Selection (pp. 183-202). Mahwah, NJ: Lawrence Erlbaum Associates.

Ryan, A. M., & Huth, M. (2008). Not much more than platitudes? A critical look at the utility of applicant reactions research. Human Resource Management Review, 18, 119-132. doi: 10.1016/j.hrmr.2008.07.004

Sacco, J. M., Scheu, C. R., Ryan, A. M., Schmitt, N., Schmidt, D. B., & Rogg, I. U. (2000, April). Reading level as a predictor of subgroup differences and validities of situational judgment tests. Paper presented at the 14th Annual Conference for the Society for Industrial and Organizational Psychology, New Orleans, LA.

Sackett, P. R. (2003). Stereotype threat in applied selection settings: A commentary. Human Performance, 16, 295-309. doi: 10.1207/s15327043hup1603_6

Sackett, P. R., Burris, L. R., & Ryan, A. M. (1989). Coaching and practice effects in personnel selection. In C. L. Cooper and I. T. Robertson (Eds.), International Review of Industrial & Organizational Psychology. West Sussex, England: John Wiley and Sons.

Sackett, P. R., & Ellingson, J. E. (1997). The effects of forming multipredictor composites on group differences and adverse impact. Personnel Psychology, 50, 707-721. doi: 10.1111/j.1744-6570.1997.tb00711.x

Sackett, P. R., Hardison, C. M., & Cullen, M. J. (2004). On interpreting stereotype threat as accounting for African American-White differences on cognitive tests. American Psychologist, 59, 7-13. doi: 10.1037/0003-066x.59.1.7

Sackett, P. R., & Roth, L. (1996). Multi-stage selection strategies: A Monte Carlo investigation of effects on performance and minority hiring. Personnel Psychology, 49, 549-572. doi: 10.1111/j.1744-6570.1996.tb01584.x

Sackett, P. R., Schmitt, N., Ellingson, J. E., & Kabin, M. B. (2001). High-stakes testing in employment, credentialing, and higher education: Prospects in a post-affirmative-action world. American Psychologist, 56, 302-318. doi: 10.1037/0003-066X.56.4.302

Scherbaum, C. A., & Goldstein, H. W. (2008). Examining the relationship between race-based differential item functioning and item difficulty. Educational and Psychological Measurement, 68, 537-553. doi: 10.1177/0013164407310129

Schleicher, D. J., Van Iddekinge, C. H., Morgeson, F. P., & Campion, M. A. (2010). If at first you don't succeed, try, try again: Understanding race, age, and gender differences in retesting score improvement. Journal of Applied Psychology, 95, 603-617. doi: 10.1037/a0018920

Schmit, M. J., & Ryan, A. M. (1997). Applicant withdrawal: The role of test-taking attitudes and racial differences. Personnel Psychology, 50, 855-876. doi: 10.1111/j.1744-6570.1997.tb01485.x

Schmitt, N., & Mills, A. E. (2001). Traditional tests and job simulations: Minority and majority performance and test validities. Journal of Applied Psychology, 86, 451-458. doi: 10.1037//0021-9010.86.3.451

Steele, C. M. (1997). A threat in the air: How stereotypes shape intellectual identity and performance. American Psychologist, 52, 613-629. doi: 10.1037//0003-066x.52.6.613

Steele, C. M. (1998). Stereotyping and its threat are real. American Psychologist, 53, 680-681. doi: 10.1037/0003-066x.53.6.680

Steele, C. M., & Aronson, J. A. (1995). Stereotype threat and the intellectual test performance of African-Americans. Journal of Personality and Social Psychology, 69, 797-811. doi: 10.1037/0022-3514.69.5.797

Steele, C. M., & Aronson, J. A. (2004). Stereotype threat does not live by Steele and Aronson (1995) alone. American Psychologist, 59, 47-48. doi: 10.1037/0003-066x.59.1.47

Tam, A. P., Murphy, K. R., & Lyall, J. T. (2004). Can changes in differential dropout rates reduce adverse impact? A computer simulation study of a multi-wave selection system. Personnel Psychology, 57, 905-934. doi: 10.1111/j.1744-6570.2004.00010.x

Van Iddekinge, C. H., Morgeson, F. P., Schleicher, D. J., & Campion, M. A. (2011). Can I retake it? Exploring subgroup differences and criterion-related validity in promotion retesting. Journal of Applied Psychology, 96, 941-955. doi: 10.1037/a0023562

Weekley, J. A., & Jones, C. (1997). Video-based situational testing. Personnel Psychology, 50, 25-49. doi: 10.1111/j.1744-6570.1997.tb00899.x

Whetzel, D. L., McDaniel, M. A., & Nguyen, N. T. (2008). Subgroup differences in situational judgment test performance: A meta-analysis. Human Performance, 21, 291-309. doi: 10.1080/08959280802137820

Whitney, D. J., & Schmitt, N. (1997). Relationship between culture and responses to biodata employment items. Journal of Applied Psychology, 82, 113-129. doi: 10.1037/0021-9010.82.1.113

Willis, G. B., & Miller, K. (2011). Cross-cultural cognitive interviewing: Seeking comparability and enhancing understanding. Field Methods, 23, 331-341. doi: 10.1177/1525822X11416092

Yusko, K. P., Goldstein, H. W., Scherbaum, C. A., & Hanges, P. J. (2012, April). Siena Reasoning Test: Measuring intelligence with reduced adverse impact. Paper presented at the 27th Annual Conference of the Society for Industrial and Organizational Psychology.

[...]d: 20/12/2012